Inertial Sensor Fusion

Inertial navigation with IMU and GPS, sensor fusion, custom filter tuning

Inertial sensor fusion uses filters to improve and combine readings from IMU, GPS, and other sensors. To model specific sensors, see Sensor Models.

For simultaneous localization and mapping, see SLAM.

Functions

Blocks

| Block | Description |
| --- | --- |
| AHRS | Orientation from accelerometer, gyroscope, and magnetometer readings |
| Complementary Filter | Estimate orientation using complementary filter (Since R2023a) |
| IMU Filter | Estimate orientation using IMU Filter (Since R2023b) |
| ecompass | Compute orientation from accelerometer and magnetometer readings (Since R2024a) |
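As a rough illustration of the idea behind a complementary filter, the sketch below fuses a gyroscope rate with an accelerometer-derived angle for a single pitch axis. It is a minimal, language-neutral sketch and not the implementation used by the Complementary Filter block; the function name `complementary_pitch`, the blend factor `alpha`, and the sign convention (positive pitch gives positive `ax`) are all assumptions made for this example.

```python
import math

def complementary_pitch(gyro_rates, accels, dt, alpha=0.98, pitch0=0.0):
    """Fuse gyro pitch rates (rad/s) with accelerometer (ax, az) samples (m/s^2).

    Assumed convention for this sketch: a positive pitch angle produces a
    positive ax reading while the sensor is otherwise at rest.
    """
    pitch = pitch0
    history = []
    for rate, (ax, az) in zip(gyro_rates, accels):
        gyro_pitch = pitch + rate * dt        # integrate gyro: smooth, but drifts
        accel_pitch = math.atan2(ax, az)      # gravity direction: noisy, but drift-free
        # Blend: trust the gyro at high frequency, the accelerometer at low frequency.
        pitch = alpha * gyro_pitch + (1.0 - alpha) * accel_pitch
        history.append(pitch)
    return history

# Stationary sensor held at 0.2 rad, with a small constant gyro bias.
g = 9.81
n = 1000
rates = [0.01] * n                                        # biased gyro, rad/s
accels = [(g * math.sin(0.2), g * math.cos(0.2))] * n     # ideal accelerometer
estimate = complementary_pitch(rates, accels, dt=0.01)
print(estimate[-1])
```

With `alpha` close to 1 the estimate follows the gyroscope over short intervals, while the accelerometer term slowly pulls it back and bounds the drift caused by gyro bias.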

Topics

Sensor Fusion

- Choose Inertial Sensor Fusion Filters

Applicability and limitations of various inertial sensor fusion filters. - Estimate Orientation Through Inertial Sensor Fusion

This example shows how to use 6-axis and 9-axis fusion algorithms to compute orientation. - Estimate Orientation with a Complementary Filter and IMU Data

This example shows how to stream IMU data from an Arduino board and estimate orientation using a complementary filter. - Logged Sensor Data Alignment for Orientation Estimation

This example shows how to align and preprocess logged sensor data. - Lowpass Filter Orientation Using Quaternion SLERP

This example shows how to use spherical linear interpolation (SLERP) to create sequences of quaternions and lowpass filter noisy trajectories. - Pose Estimation from Asynchronous Sensors

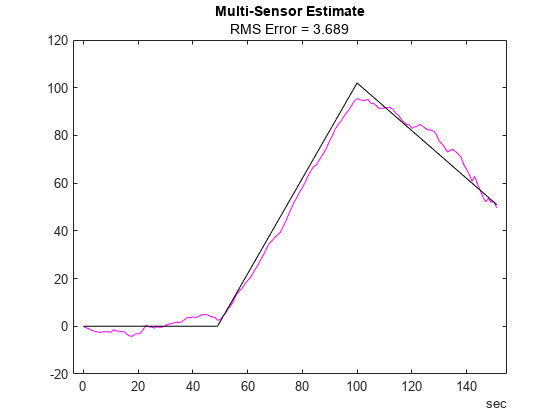

This example shows how you might fuse sensors at different rates to estimate pose. - Custom Tuning of Fusion Filters

Use thetunefunction to optimize the noise parameters of several fusion filters, including theahrsfilterobject. - Fuse Inertial Sensor Data Using insEKF-Based Flexible Fusion Framework

TheinsEKFfilter object provides a flexible framework that you can use to fuse inertial sensor data. - Autonomous Underwater Vehicle Pose Estimation Using Inertial Sensors and Doppler Velocity Log

This example shows how to fuse data from a GPS, Doppler Velocity Log (DVL), and inertial measurement unit (IMU) sensors to estimate the pose of an autonomous underwater vehicle (AUV) shown in this image.
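To make the SLERP-based lowpass idea from the topics above more concrete, here is a minimal sketch of one way it could work: each new orientation sample moves the filter state a fixed fraction of the way along the shortest arc toward it. This is not the toolbox's `slerp` function or the code from the linked example; the helper names `slerp` and `slerp_lowpass`, the `(w, x, y, z)` tuple layout, and the `gain` parameter are assumptions for illustration.

```python
import math

def slerp(q0, q1, t):
    """Spherical linear interpolation between unit quaternions (w, x, y, z)."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:                      # q and -q are the same rotation: take the shorter arc
        q1 = tuple(-c for c in q1)
        dot = -dot
    dot = min(dot, 1.0)                # guard acos against rounding above 1
    theta = math.acos(dot)
    if theta < 1e-8:                   # nearly identical: avoid dividing by sin(0)
        return q0
    s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return tuple(s0 * a + s1 * b for a, b in zip(q0, q1))

def slerp_lowpass(quats, gain=0.1):
    """First-order lowpass: step the state a fraction `gain` toward each sample."""
    state = quats[0]
    out = [state]
    for q in quats[1:]:
        state = slerp(state, q, gain)
        out.append(state)
    return out

# Step from identity to a 90-degree rotation about z; the output eases toward it.
identity = (1.0, 0.0, 0.0, 0.0)
quarter_turn = (math.cos(math.pi / 4), 0.0, 0.0, math.sin(math.pi / 4))
smoothed = slerp_lowpass([identity] + [quarter_turn] * 50, gain=0.1)
```

A smaller `gain` smooths more aggressively but responds more slowly to real motion, which is the usual lowpass trade-off.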

Applications

- Binaural Audio Rendering Using Head Tracking

Track head orientation by fusing data received from an IMU, and then control the direction of arrival of a sound source by applying head-related transfer functions (HRTF).

- Tilt Angle Estimation Using Inertial Sensor Fusion and ADIS16505

Get data from an Analog Devices ADIS16505 IMU sensor and use sensor fusion on the data to compute the tilt of the sensor. (Since R2024a)

- Wireless Data Streaming and Sensor Fusion Using BNO055

This example shows how to get data from a Bosch BNO055 IMU sensor through an HC-05 Bluetooth® module and use the 9-axis AHRS fusion algorithm on the sensor data to compute the orientation of the device.
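For intuition about tilt estimation, the sketch below computes a tilt angle from a single accelerometer sample, with no gyroscope fusion. It is a simplified stand-in rather than the method used in the ADIS16505 example; the function name `tilt_angle_deg` is hypothetical, and the sketch assumes the sensor is at rest so that the accelerometer measures only gravity, with tilt defined as the angle between the sensor z-axis and the measured gravity vector.

```python
import math

def tilt_angle_deg(ax, ay, az):
    """Angle in degrees between the sensor z-axis and the measured gravity vector.

    Assumes the sensor is stationary, so (ax, ay, az) contains gravity only.
    """
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    if norm == 0.0:
        raise ValueError("accelerometer vector has zero magnitude")
    cos_tilt = max(-1.0, min(1.0, az / norm))   # clamp against rounding error
    return math.degrees(math.acos(cos_tilt))

# Gravity split equally between the y- and z-axes.
print(tilt_angle_deg(0.0, 1.0, 1.0))
```

An accelerometer-only estimate like this is sensitive to vibration and linear acceleration, which is one reason fusion with gyroscope data is typically preferred.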