Giving All Your Claudes the Keys to Everything

Introduction

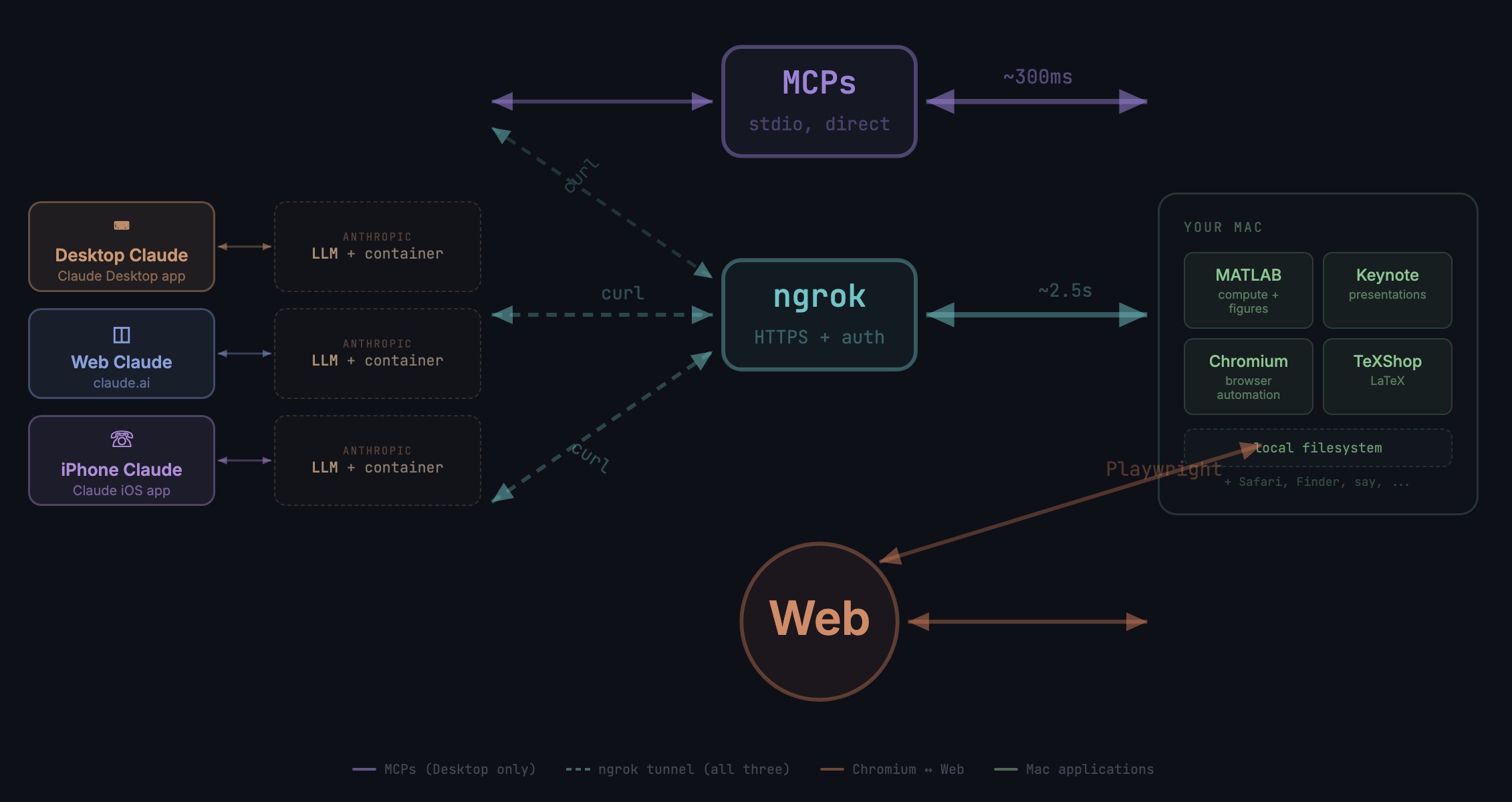

This started as a plumbing problem. I wanted to move files between Claude’s cloud container and my Mac faster than base64 encoding allows. What I ended up building was something more interesting: a way for every Claude – Desktop, web browser, iPhone – to control my entire computing environment with natural language. MATLAB, Keynote, LaTeX, Chrome, Safari, my file system, text-to-speech. All of it, from any device, through a 200-line Python server and a free tunnel.

It isn’t quite the ideal universal remote. Web and iPhone Claude do not yet have the agentic Playwright-based web automation capabilities available to Desktop Claude via MCP. A possible solution may be explored in a later post.

Background information

The background here is a series of experiments I’ve been running on giving AI desktop apps direct access to local tools. See “How to set up and use AI Desktop Apps with MATLAB and MCP servers”, which covers the initial MCP setup; “A universal agentic AI for your laptop and beyond”, which describes what Desktop Claude can do once you give it the keys; and “Web automation with Claude, MATLAB, Chromium, and Playwright”, which describes a supercharged browser assistant. This post is about giving such keys to remote Claude interfaces.

Through the Model Context Protocol (MCP), Claude Desktop on my Mac can run MATLAB code, read and write files, execute shell commands, control applications via AppleScript, automate browsers with Playwright, control iPhone apps, and take screenshots. These powers have been limited to the desktop Claude app – the one running on the machine with the MCP servers. My iPhone Claude app and a Claude chat launched in a browser each get a Linux container somewhere in Anthropic’s cloud. That container can reach the public internet, but until now it could not reach my Mac, which sat behind a home router with no public IP.

An HTTP server MCP replacement

A solution is a small HTTP server on the Mac. Use ngrok (free tier) to give the Mac a public URL. Then anything with internet access – including any Claude’s cloud container – can reach the Mac via curl.

iPhone/Web Claude -> bash_tool: curl https://public-url/endpoint

-> Internet -> ngrok tunnel -> Mac localhost:8765

-> Python command server -> MATLAB / AppleScript / filesystem

The server is about 200 lines of Python using only the standard library. Two files, one terminal command to start. ngrok provides HTTPS and HTTP Basic Auth with a single command-line flag.
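For readers who prefer Python to curl, the same kind of authenticated request can be sketched with the standard library. This is only an illustration: the base URL, the credentials, and the JSON field name in the usage note are placeholders, not values from the actual server.

```python
import base64
import json
import urllib.request


def build_request(base_url, endpoint, user, password, payload=None):
    """Build a GET request (payload is None) or a JSON POST request
    carrying an HTTP Basic Auth header."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    headers = {"Authorization": f"Basic {token}"}
    data = None
    if payload is not None:
        # Assumption: the server accepts a JSON body on POST endpoints.
        data = json.dumps(payload).encode("utf-8")
        headers["Content-Type"] = "application/json"
    url = base_url.rstrip("/") + "/" + endpoint.lstrip("/")
    return urllib.request.Request(url, data=data, headers=headers)


def call(base_url, endpoint, user, password, payload=None, timeout=30):
    """Send the request and return the raw response body as bytes."""
    request = build_request(base_url, endpoint, user, password, payload)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()
```

A health check would then look something like `call("https://public-url", "/ping", user, password)`, and a POST might pass a dictionary such as `{"command": "date"}` as the payload, where the `command` field name is hypothetical.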

The complete implementation, counting the MATLAB watcher alongside the two server files, consists of three files:

claude_command_server.py (~200 lines): A Python HTTP server using http.server from the standard library. It implements BaseHTTPRequestHandler with do_GET and do_POST methods dispatching to endpoint handlers. Shell commands are executed via subprocess.run with timeout protection. File paths are validated against allowed roots using os.path.realpath to prevent directory traversal attacks.

start_claude_server.sh (~20 lines): A Bash script that starts the Python server as a background process, then starts ngrok in the foreground. A trap handler ensures both processes are killed on Ctrl+C.

iphone_matlab_watcher.m (~60 lines): A MATLAB timer function that polls for command files every second.
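To make the shape of the server concrete, here is a minimal sketch of a BaseHTTPRequestHandler with do_GET and do_POST dispatch. It is not the author’s code: the class name, the JSON response format, and the /echo endpoint are assumptions made for illustration. Only /ping corresponds to an endpoint named in this post.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


class CommandHandler(BaseHTTPRequestHandler):
    """Tiny dispatcher in the style described above (illustrative only)."""

    def _send_json(self, status, body):
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self):
        if self.path == "/ping":
            self._send_json(200, {"status": "ok"})
        else:
            self._send_json(404, {"error": "unknown endpoint"})

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        raw = self.rfile.read(length)
        try:
            payload = json.loads(raw or b"{}")
        except json.JSONDecodeError:
            self._send_json(400, {"error": "invalid JSON"})
            return
        if self.path == "/echo":  # hypothetical endpoint, for demonstration
            self._send_json(200, {"received": payload})
        else:
            self._send_json(404, {"error": "unknown endpoint"})

    def log_message(self, format, *args):
        pass  # keep the console quiet in this sketch


def make_server(port=8765):
    # Bind to localhost only, so the server is unreachable without a tunnel.
    return HTTPServer(("127.0.0.1", port), CommandHandler)
```

Calling `make_server().serve_forever()` would start listening on localhost:8765, the port shown in the diagram above.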

The server exposes five GET and six POST endpoints. The GET endpoints handle retrieval: /ping for health checks, /list to enumerate files in a transfer directory, /files/<n> to download a file from that directory, /read/<path> to read any file under allowed directories, and / which returns the endpoint listing itself. The POST endpoints handle execution and writing: /shell runs an allowlisted shell command, /osascript runs AppleScript, /matlab evaluates MATLAB code through a file-watcher mechanism, /screenshot captures the screen and returns a compressed JPEG, /write writes content to a file, and /upload/<n> accepts binary uploads.

File access is sandboxed by endpoint. The /read/ endpoint restricts reads to ~/Documents, ~/Downloads, and ~/Desktop and their subfolders. The /write and /upload endpoints restrict writes to ~/Documents/MATLAB and ~/Downloads. Path traversal is validated – .. sequences are rejected.
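A root check of this kind can be sketched with os.path.realpath and os.path.commonpath. The function name and the READ_ROOTS constant are hypothetical, and the author’s actual validation may differ in detail.

```python
import os

# Read roots named in the post; the constant name is illustrative.
READ_ROOTS = [os.path.expanduser(p) for p in ("~/Documents", "~/Downloads", "~/Desktop")]


def is_under_allowed_root(path, roots):
    """Return True if `path`, after resolving symlinks and '..' segments,
    lies inside (or is equal to) one of the allowed root directories."""
    real = os.path.realpath(os.path.expanduser(path))
    for root in roots:
        real_root = os.path.realpath(root)
        try:
            # commonpath compares whole path components, so a sibling such
            # as ~/Documents_evil does not pass as being under ~/Documents.
            if os.path.commonpath([real, real_root]) == real_root:
                return True
        except ValueError:
            # Raised for paths on different drives (Windows); treat as outside.
            continue
    return False
```

Resolving the path first means a request like `~/Documents/../.ssh/id_rsa` is judged by where it actually points, not by how it is spelled.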

The /shell endpoint restricts commands to an explicit allowlist: open, ls, cat, head, tail, cp, mv, mkdir, touch, find, grep, wc, file, date, which, ps, screencapture, sips, convert, zip, unzip, pbcopy, pbpaste, say, mdfind, and curl. No rm, no sudo, no arbitrary execution. Content creation goes through /write.
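An allowlist check of this sort can be sketched as follows. The command set is copied from the list above; the function names and the use of shlex for parsing are assumptions, not a description of the actual server code.

```python
import shlex
import subprocess

ALLOWED_COMMANDS = {
    "open", "ls", "cat", "head", "tail", "cp", "mv", "mkdir", "touch",
    "find", "grep", "wc", "file", "date", "which", "ps", "screencapture",
    "sips", "convert", "zip", "unzip", "pbcopy", "pbpaste", "say",
    "mdfind", "curl",
}


def parse_allowed(command_line):
    """Split the command line and return argv if the program is allowlisted,
    otherwise return None."""
    try:
        argv = shlex.split(command_line)
    except ValueError:  # for example, unbalanced quotes
        return None
    if not argv or argv[0] not in ALLOWED_COMMANDS:
        return None
    return argv


def run_allowlisted(command_line, timeout=30):
    """Run an allowlisted command without a shell, with timeout protection."""
    argv = parse_allowed(command_line)
    if argv is None:
        raise PermissionError(f"command not allowed: {command_line!r}")
    # No shell=True: pipes, semicolons, and redirects are not interpreted.
    return subprocess.run(argv, capture_output=True, text=True, timeout=timeout)
```

Passing an argument list to subprocess.run rather than a shell string keeps the check meaningful, because the first token is then the only program that runs.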

Two endpoints have no path restrictions: /osascript and /matlab. The /osascript endpoint passes its payload to macOS’s osascript command, which executes AppleScript – Apple’s scripting language for controlling applications via inter-process Apple Events. An AppleScript can open, close, or manipulate any application, read or write any file the user account can access, and execute arbitrary shell commands via “do shell script”. The /matlab endpoint evaluates arbitrary MATLAB code in a persistent session. Both are as powerful as the user account itself. This is deliberate – these are the endpoints that make the server useful for controlling applications. The security boundary is authentication at the tunnel, not restriction at the endpoint.

The MATLAB Workaround

MATLAB on macOS doesn’t expose an AppleScript interface, and the MCP MATLAB tool uses a stdio connection available only from Desktop. So how does iPhone Claude run MATLAB?

An answer is a file-based polling mechanism. A MATLAB timer function checks a designated folder every second for new command files. The remote Claude writes a .m file via the server, MATLAB executes it, writes output to a text file, and sets a completion flag. The round trip is about 2.5 seconds including up to 1 second of polling latency. (This could be reduced by shortening the polling interval or replacing it with Java’s WatchService for near-instant file detection.)
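The server side of this handshake might look like the sketch below: write the command file, wait for the completion flag, then read the output file. The file naming scheme (cmd_<id>.m, .out.txt, .done) is hypothetical, and the MATLAB timer that executes the file is not shown.

```python
import os
import time
import uuid


def submit_matlab_job(watch_dir, code, timeout=30.0, poll=0.1):
    """Drop a .m file into watch_dir and block until the watcher signals
    completion, then return the captured output text."""
    job = uuid.uuid4().hex
    base = os.path.join(watch_dir, f"cmd_{job}")
    cmd_path, out_path, done_path = base + ".m", base + ".out.txt", base + ".done"

    # Write to a temporary name and rename, so the watcher never picks up
    # a half-written command file.
    tmp_path = cmd_path + ".tmp"
    with open(tmp_path, "w", encoding="utf-8") as f:
        f.write(code)
    os.replace(tmp_path, cmd_path)

    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if os.path.exists(done_path):
            with open(out_path, encoding="utf-8") as f:
                return f.read()
        time.sleep(poll)
    raise TimeoutError(f"no completion flag for job {job} after {timeout}s")
```

With a one-second timer on the MATLAB side, the wait in this loop is where the up-to-one-second polling latency shows up.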

This method provides full MATLAB access from a phone or web Claude, with your local file system available, under AI control. MATLAB Mobile and MATLAB Online do not offer agentic AI access.

Alternate approaches

I’ve used Tailscale Funnel as an alternative tunnel to manually control my MacBook from my iPhone, but you can’t install Tailscale inside Anthropic’s container. An alternative approach would replace both the local MCP servers and the command server with a single remote MCP server running on the Mac, exposed via ngrok and registered as a custom connector in claude.ai. This would give all three Claude interfaces native MCP tool access, including Playwright or Puppeteer, with comparable latency, but I’ve not tried this.

Security

Exposing a command server to the internet raises questions. The security model has several layers. ngrok handles authentication – every request needs a valid password. Shell commands are restricted to an allowlist (ls, cat, cp, screencapture, and so on, with no rm and no arbitrary execution). File operations are sandboxed to specific directory trees with path traversal validation. The server binds to localhost, so it is unreachable without the tunnel. And you only run it when you need it.

The AppleScript and MATLAB endpoints are essentially unrestricted, which matches the significant capabilities Desktop Claude already has via MCP in my setup. Extending them through an authenticated tunnel is a trust decision.

Initial test results

I ran an identical three-step benchmark from each Claude interface: get a Mac timestamp via AppleScript, compute eigenvalues of a 10x10 magic square in MATLAB, and capture a screenshot. Same Mac, same server, same operations.

| Step | Desktop (MCP) | Web Claude | iPhone Claude |
|------|:------------:|:----------:|:-------------:|
| AppleScript timestamp | ~5ms | 626ms | 623ms |
| MATLAB eig(magic(10)) | 108ms | 833ms | 1,451ms |
| Screenshot capture | ~200ms | 1,172ms | 980ms |
| **Total** | **~313ms** | **2,663ms** | **3,078ms** |

The network overhead is consistent: web and iPhone both add about 620ms per call (the ngrok round trip through residential internet). Desktop MCP has no network overhead at all. The MATLAB variance between web (833ms) and iPhone (1,451ms) is mostly the file-watcher polling jitter – up to a second of random latency depending on where in the polling cycle the command arrives.

All three Claudes got the correct eigenvalues. All three controlled the Mac. The slowest total was about 3 seconds for three separate operations from a phone. Desktop is roughly 8 to 10 times faster, but for “I’m on my phone and need to run something” – 3 seconds is fine.

Other tests

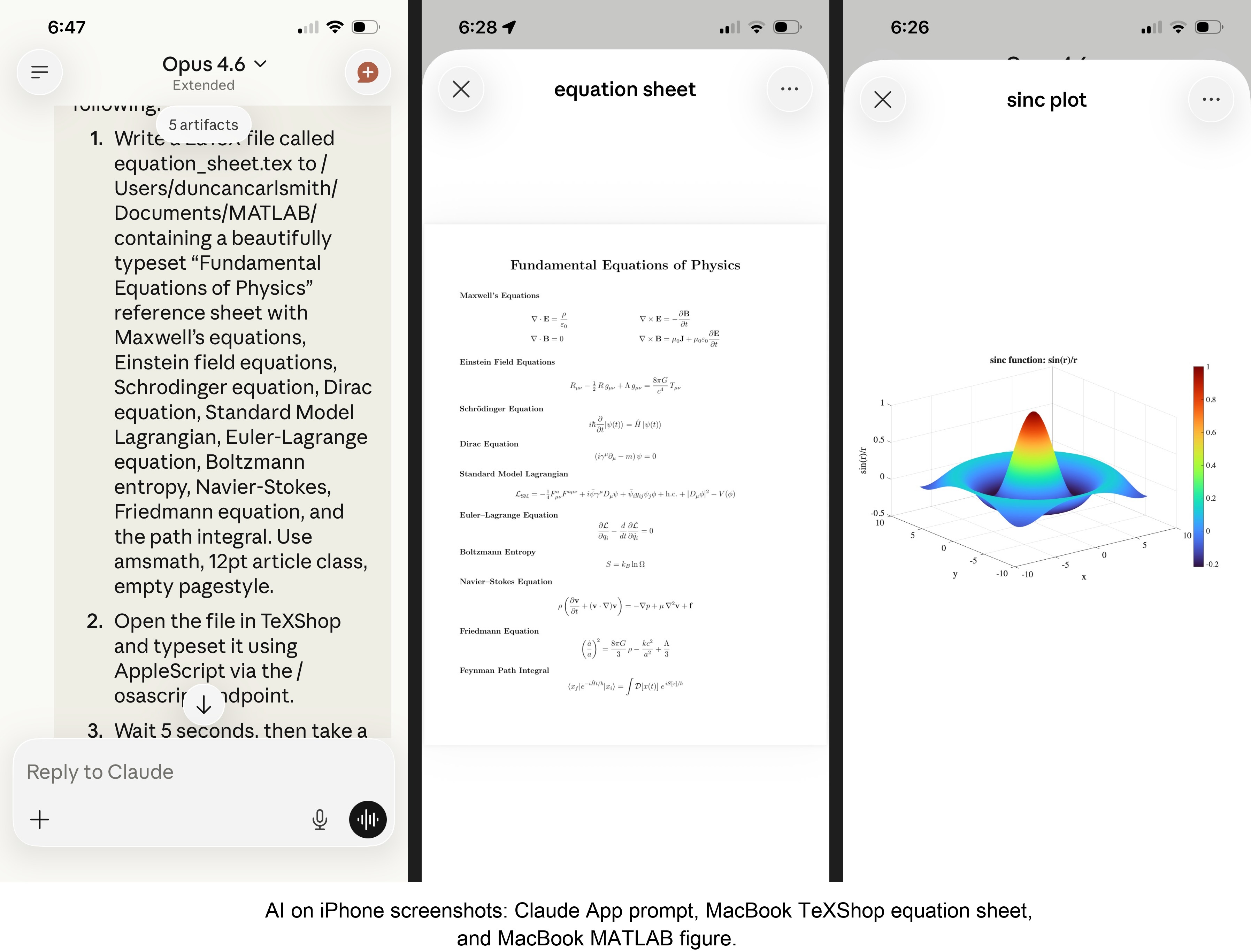

MATLAB: Computations (determinants, eigenvalues), 3D figure generation, image compression. The complete pipeline – compute on Mac, transfer figure to cloud, process with Python, send back – runs in under 5 seconds. This was previously impossible; there was no reasonable mechanism to move a binary file from the container to the Mac.

Keynote: Built multi-slide presentations via AppleScript, screenshotted the results.

TeXShop: Wrote a .tex file containing ten fundamental physics equations (Maxwell through the path integral), opened it in TeXShop, typeset via AppleScript, captured the rendered PDF. Publication-quality typesetting from a phone. I don’t know who needs this at 11 PM on a Sunday, but apparently I do.

Safari: Launched URLs on the Mac from 2,000 miles away. Or 6 feet. The internet doesn’t care.

Finder: Directory listings, file operations, the usual filesystem work.

Text-to-speech: Mac spoke “Hello from iPhone” and “Web Claude is alive” on command. Silly but satisfying proof of concept.

File Transfer: The Original Problem, Solved

Remember, this started because I wanted faster file transfers for desktop Claude. Compare:

| Method | 1 MB file | 5 MB file | Limit |
|--------|-----------|-----------|-------|
| Old (base64 in context) | painful | impossible | ~5 KB |
| Command server upload | 455ms | ~3.6s | tested to 5 MB+ |
| Command server download | 1,404ms | ~7s | tested to 5 MB+ |

The improvement is the difference between “doesn’t work” and “works in seconds.”
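For a rough sense of scale, the timings in the table can be converted to effective throughput. The snippet below only restates the table’s numbers. The 5 MB figures are approximate in the table, so the derived rates are approximate too.

```python
# Transfer times from the table above, in seconds, keyed by file size in MB.
TIMINGS = {
    "upload": {1: 0.455, 5: 3.6},
    "download": {1: 1.404, 5: 7.0},
}


def throughput_mb_per_s(size_mb, seconds):
    """Effective throughput for one transfer, including per-request overhead."""
    return size_mb / seconds


def summarize(timings):
    """Return {direction: {size_mb: MB/s rounded to two decimals}}."""
    return {
        direction: {size: round(throughput_mb_per_s(size, t), 2) for size, t in by_size.items()}
        for direction, by_size in timings.items()
    }
```

This works out to about 2.2 MB/s and 1.4 MB/s for the 1 MB and 5 MB uploads, and about 0.7 MB/s for both downloads.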

Cross-Device Memory

A nice bonus: iPhone and web Claudes can search Desktop conversations using the same memory tools. Context established in a Desktop session – including the server password – can be retrieved from a phone conversation. You do have to ask explicitly (“search my past conversations for the command server”) or insert information into the persistent context using settings rather than assuming Claude will look on its own.

Web Claudes

The most surprising result was web Claude. I expected it to work – same container infrastructure, same bash_tool, same curl. But “expected to work” and “just worked, first try, no special setup” are different things. I opened claude.ai in a browser, gave it the server URL and password, and it immediately ran MATLAB, took screenshots, and made my Mac speak. No configuration, no troubleshooting, no “the endpoint format is wrong” iterations. If you’re logged into claude.ai and the server is running, you have full Mac access from any browser on any device.

What This Means

MCP gave Desktop Claude the keys to my Mac. A 200-line server and a free tunnel duplicated those keys for every other Claude I use. Three interfaces, one Mac, all the same applications, all controlled in natural language.

Additional information

The appendix below gives Claude’s detailed description of the two communication paths. Code and instructions are available at the MATLAB File Exchange: Giving All Your Claudes the Keys to Everything.

Acknowledgments and disclaimer

The problem of file transfer was identified by the author. Claude suggested and implemented the method, and the author suggested the generalization to accommodate remote Claudes. This submission was created with Claude assistance. The author has no financial interest in Anthropic or MathWorks.

—————

Appendix: Architecture Comparison

Path A: Desktop Claude with a Local MCP Server

When you type a message in the Desktop Claude app, the Electron app sends it to Anthropic’s API over HTTPS. The LLM processes the message and decides it needs a tool – say, browser_navigate from Playwright. It returns a tool_use block with the tool name and arguments. That block comes back to the Desktop app over the same HTTPS connection.

Here is where the local MCP path kicks in. At startup, the Desktop app read claude_desktop_config.json, found each MCP server entry, and spawned it as a child process on your Mac. It performed a JSON-RPC initialize handshake with each one, then called tools/list to get the tool catalog – names, descriptions, parameter schemas. All those tools were merged and sent to Anthropic with your message, so the LLM knows what’s available.
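As an illustration of the registry idea, the sketch below parses a config in the mcpServers format and lists the command line that would be spawned for each entry. The sample entries are placeholders and not the author’s configuration. The second server name and its path are invented for the example.

```python
import json

# Illustrative config text; the entries are examples, not the author's setup.
SAMPLE_CONFIG = """
{
  "mcpServers": {
    "playwright": {"command": "npx", "args": ["@playwright/mcp@latest"]},
    "example-matlab": {"command": "/path/to/matlab-mcp-server", "args": []}
  }
}
"""


def spawn_plan(config_text):
    """Map each configured server name to the argv that would launch it."""
    config = json.loads(config_text)
    return {
        name: [entry["command"], *entry.get("args", [])]
        for name, entry in config.get("mcpServers", {}).items()
    }
```

Each argv in the result corresponds to one child process, which is then asked for its tool catalog via tools/list.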

When the tool_use block arrives, the Desktop app looks up which child process owns that tool and writes a JSON-RPC message to that process’s stdin. The MCP server (Playwright, MATLAB, whatever) reads the request, does the work locally, and writes the result back to stdout as JSON-RPC. The Desktop app reads the result, sends it back to Anthropic’s API, the LLM generates the final text response, and you see it.
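The message written to the child process’s stdin is an ordinary JSON-RPC 2.0 request. A minimal sketch of building one for a tools/call follows. The helper names are hypothetical, and the tool name and arguments in the usage note are just an example.

```python
import json


def make_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request asking an MCP server to run one tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def encode_for_stdio(message):
    """Serialize as a single newline-terminated line, the framing used by
    the MCP stdio transport. json.dumps escapes any newlines inside
    string values, so the message itself never spans lines."""
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")
```

For example, `encode_for_stdio(make_tool_call(1, "browser_navigate", {"url": "https://example.com"}))` yields the bytes the router would write to the Playwright server’s stdin.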

The Desktop app is really just a process manager and JSON-RPC router. The config file is a registry of servers to spawn. You can plug in as many as you want – each is an independent child process advertising its own tools. The Desktop app doesn’t care what they do internally. The MCP execution itself adds almost no latency because it’s entirely local, process-to-process on your Mac. The time you perceive is dominated by the two HTTPS round-trips to Anthropic, which all three Claude interfaces share equally.

Path B: iPhone Claude with the Command Server

When you type a message in the Claude iOS app, the app sends it to Anthropic’s API over HTTPS, same as Desktop. The LLM processes the message. It sees bash_tool in its available tools – provided by Anthropic’s container infrastructure, not by any MCP server. It decides it needs to run a curl command and returns a tool_use block for bash_tool.

Here, the path diverges completely from Desktop. There is no iPhone app involvement in tool execution. Anthropic routes the tool_use to a Linux container running in Anthropic’s cloud, assigned to your conversation. This container is the “computer” that iPhone Claude has access to. The container runs the curl command – a real Linux process in Anthropic’s data center.

The curl request goes out over the internet to ngrok’s servers. ngrok checks the HTTP Basic Auth credentials and forwards the request through its persistent tunnel to the claude_command_server.py process running on localhost on your Mac. The command server then executes the requested operation – in this case, running an AppleScript via subprocess.run(['osascript', ...]). macOS receives the Apple Events, launches the target application, and does the work.

The result flows back the same way: osascript returns output to the command server, which packages it as JSON and sends the HTTP response back through the ngrok tunnel, through ngrok’s servers, back to the container’s curl process. bash_tool captures the output. The container sends the tool result back to Anthropic’s API. The LLM generates the text response, and the iPhone app displays it.
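The run-and-package step can be sketched generically as below. The function name and the JSON field names are assumptions. The actual server’s response format is not given in this post.

```python
import json
import subprocess


def run_and_package(argv, timeout=30):
    """Run a command with timeout protection and return a JSON string
    describing the outcome."""
    try:
        proc = subprocess.run(argv, capture_output=True, text=True, timeout=timeout)
    except subprocess.TimeoutExpired:
        return json.dumps({"ok": False, "error": f"timed out after {timeout}s"})
    except FileNotFoundError:
        return json.dumps({"ok": False, "error": f"command not found: {argv[0]}"})
    return json.dumps({
        "ok": proc.returncode == 0,
        "returncode": proc.returncode,
        "stdout": proc.stdout,
        "stderr": proc.stderr,
    })
```

On macOS, something like `run_and_package(["osascript", "-e", "return (current date) as string"])` would produce the timestamp used in the benchmark, returned to the container’s curl process as the HTTP response body.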

The iPhone app is a thin chat client. It never touches tool execution – it only sends and receives chat messages. The container does the curl work, and the command server bridges from Anthropic’s cloud to your Mac. The LLM has to know to use curl with the right URL, credentials, and JSON format. There is no tool discovery, no protocol handshake, no automatic routing. The knowledge of how to reach your Mac is carried entirely in the LLM’s context.

—————

Duncan Carlsmith, Department of Physics, University of Wisconsin-Madison. duncan.carlsmith@wisc.edu