resnetLayers

resnetLayers is not recommended. Use the resnetNetwork function instead. For more information, see Version History.
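A minimal migration sketch follows. It assumes that resnetNetwork accepts the same inputSize and numClasses arguments as resnetLayers and that it returns a dlnetwork object instead of a LayerGraph object. Treat it as a starting point and confirm the exact syntax and options on the resnetNetwork reference page.

```matlab
% Assumption: resnetNetwork takes the same two required inputs as resnetLayers
% and returns a dlnetwork object instead of a LayerGraph object.
inputSize = [224 224 3];   % 224-by-224 RGB images
numClasses = 10;           % number of target classes

net = resnetNetwork(inputSize,numClasses);
```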

Description

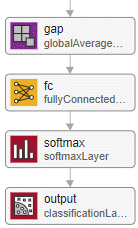

lgraph = resnetLayers(inputSize,numClasses) creates a 2-D residual network with an image input size specified by inputSize and a number of classes specified by numClasses.

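lgraph = resnetLayers(___,Name=Value) specifies options using one or more name-value arguments in addition to the input arguments in the previous syntax. For example, InitialNumFilters=32 specifies 32 filters in the initial convolutional layer.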
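The following sketch combines both syntaxes: it creates a network for 224-by-224 RGB images and 10 classes, and uses the InitialNumFilters name-value argument to set 32 filters in the initial convolutional layer. The input size and class count are illustrative values only.

```matlab
% Illustrative values: 224-by-224 RGB images, 10 classes.
inputSize = [224 224 3];
numClasses = 10;

% Use 32 filters in the initial convolutional layer instead of the default.
lgraph = resnetLayers(inputSize,numClasses,InitialNumFilters=32);

% Inspect the resulting architecture in the Network Analyzer.
analyzeNetwork(lgraph)
```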

Tip

To load a pretrained ResNet neural network, use the imagePretrainedNetwork function.
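A minimal sketch of loading a pretrained ResNet-50 follows. The model name "resnet50", the two-output syntax, and the NumClasses option for transfer learning are assumptions here; loading the pretrained weights also requires the corresponding support package to be installed.

```matlab
% Assumption: "resnet50" is a supported model name and its support package is installed.
% The second output holds the class names the network was trained on.
[net,classNames] = imagePretrainedNetwork("resnet50");

% Assumption: NumClasses replaces the final classification layers so that the
% network can be fine-tuned for a new task with 5 classes.
netTransfer = imagePretrainedNetwork("resnet50",NumClasses=5);
```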

Examples
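As a sketch of the small-image tip in the Tips section, the following code creates a residual network for 32-by-32 RGB images with 10 classes and removes the initial pooling layer. The image size, the class count, and the smaller InitialFilterSize and InitialStride values are illustrative choices, not recommended settings.

```matlab
% Illustrative values: 32-by-32 RGB images (CIFAR-10-sized data), 10 classes.
inputSize = [32 32 3];
numClasses = 10;

% Remove the initial pooling layer and use a smaller, unstrided initial
% convolution to reduce the amount of early downsampling.
lgraph = resnetLayers(inputSize,numClasses, ...
    InitialPoolingLayer="none", ...
    InitialFilterSize=3, ...
    InitialStride=1);
```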

Input Arguments

Name-Value Arguments

Output Arguments

More About

Tips

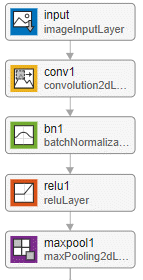

When working with small images, set the

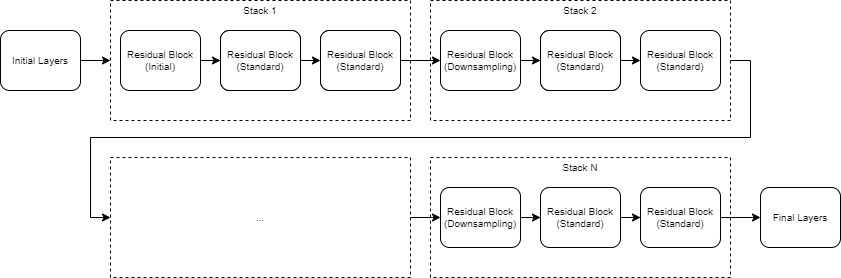

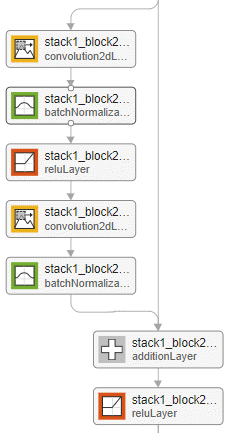

InitialPoolingLayeroption to"none"to remove the initial pooling layer and reduce the amount of downsampling.Residual networks are usually named ResNet-X, where X is the depth of the network. The depth of a network is defined as the largest number of sequential convolutional or fully connected layers on a path from the input layer to the output layer. You can use the following formula to compute the depth of your network:

where si is the depth of stack i.

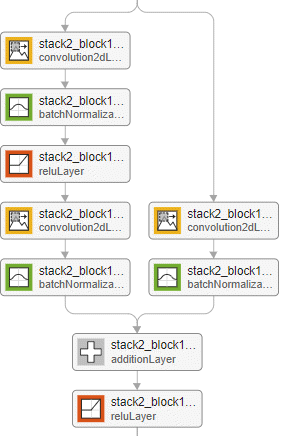

Networks with the same depth can have different network architectures. For example, you can create a ResNet-14 architecture with or without a bottleneck:

    resnet14Bottleneck = resnetLayers([224 224 3],10, ...
        StackDepth=[2 2], ...
        NumFilters=[64 128]);

    resnet14NoBottleneck = resnetLayers([224 224 3],10, ...
        BottleneckType="none", ...
        StackDepth=[2 2 2], ...
        NumFilters=[64 128 256]);

The relationship between bottleneck and nonbottleneck architectures also means that a network with a bottleneck has a different depth than a network without a bottleneck, even when both use the same stack depths. For example, with the default stack depths, the bottleneck network is a ResNet-50 and the nonbottleneck network is a ResNet-34:

    resnet50Bottleneck = resnetLayers([224 224 3],10);

    resnet34NoBottleneck = resnetLayers([224 224 3],10, ...
        BottleneckType="none");
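As a quick arithmetic check, the depth formula described in the Tips can be evaluated for the four networks above. This sketch uses a hypothetical anonymous function, resnetDepth, and assumes that the default StackDepth value of resnetLayers is [3 4 6 3].

```matlab
% Hypothetical helper: depth = 1 + k*sum(stackDepth) + 1, where k = 3 for
% bottleneck blocks and k = 2 otherwise. The leading 1 counts the initial
% convolutional layer; the trailing 1 counts the fully connected layer.
resnetDepth = @(stackDepth,hasBottleneck) ...
    1 + (2 + hasBottleneck)*sum(stackDepth) + 1;

defaultStackDepth = [3 4 6 3];   % assumed default StackDepth of resnetLayers

depth14Bottleneck   = resnetDepth([2 2],true)               % 14
depth14NoBottleneck = resnetDepth([2 2 2],false)            % 14
depth50Bottleneck   = resnetDepth(defaultStackDepth,true)   % 50
depth34NoBottleneck = resnetDepth(defaultStackDepth,false)  % 34
```

Adding the logical hasBottleneck to 2 yields 3 for bottleneck blocks and 2 otherwise, which keeps the helper to a single expression.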
