trainnet

Syntax

Description

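netTrained = trainnet(images,net,lossFcn,options) trains the neural network specified by net for image tasks using the images and targets specified by images and the training options defined by options.

netTrained = trainnet(sequences,net,lossFcn,options) trains the neural network for sequence tasks using the sequences and targets specified by sequences.

netTrained = trainnet(features,net,lossFcn,options) trains the neural network for feature tasks using the feature data and targets specified by features.

netTrained = trainnet(data,net,lossFcn,options)

[netTrained,info] = trainnet(___) also returns information on the training using any of the previous syntaxes.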

Examples

If you have a data set of images, then you can train a deep neural network using an image input layer.

Unzip the digit sample data and create an image datastore. The imageDatastore function automatically labels the images based on folder names.

unzip("DigitsData.zip")
imds = imageDatastore("DigitsData", ...
    IncludeSubfolders=true, ...
    LabelSource="foldernames");

Divide the data into training and test data sets, so that each category in the training set contains 750 images, and the test set contains the remaining images from each label. splitEachLabel splits the image datastore into two new datastores for training and test.

numTrainFiles = 750;

[imdsTrain,imdsTest] = splitEachLabel(imds,numTrainFiles,"randomized");

Define the convolutional neural network architecture. Specify the size of the images in the input layer of the network and the number of classes in the final fully connected layer. Each image is 28-by-28-by-1 pixels.

inputSize = [28 28 1];

classNames = categories(imds.Labels);

numClasses = numel(classNames);

layers = [

imageInputLayer(inputSize)

convolution2dLayer(5,20)

batchNormalizationLayer

reluLayer

fullyConnectedLayer(numClasses)

softmaxLayer];

Specify the training options.

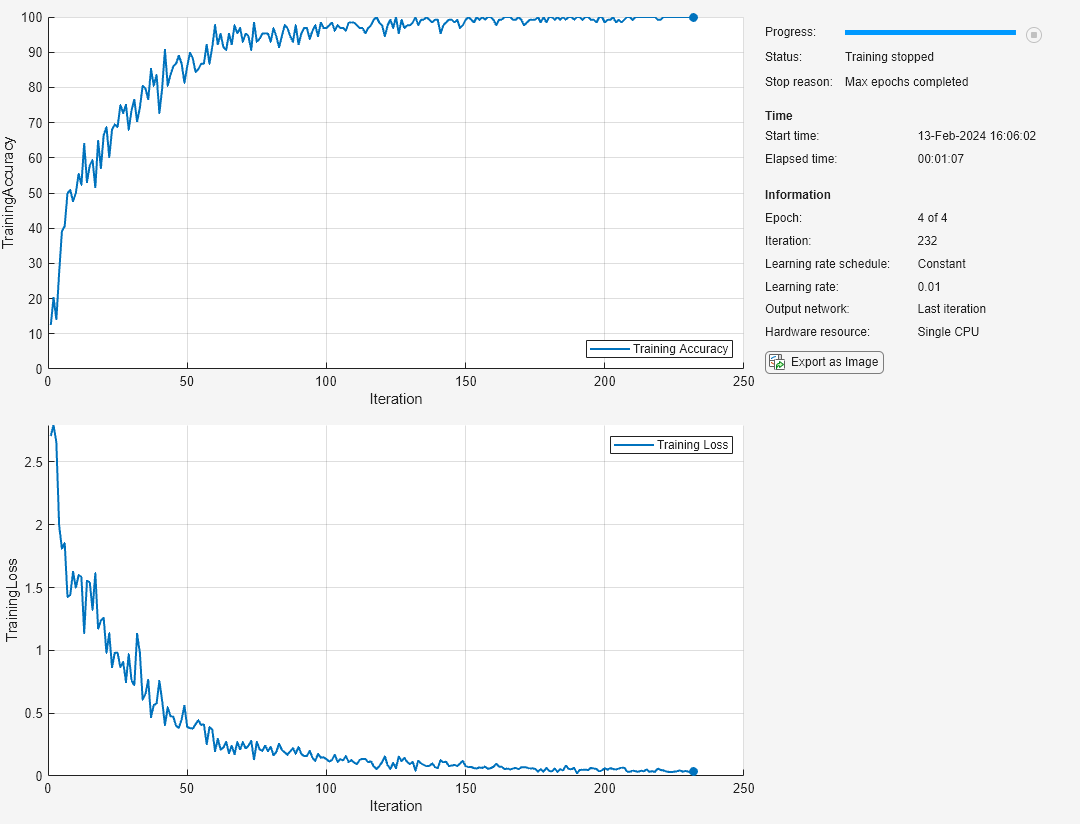

Train using the SGDM solver.

Train for four epochs.

Monitor the training progress in a plot and monitor the accuracy metric.

Disable the verbose output.

options = trainingOptions("sgdm", ...
    MaxEpochs=4, ...
    Verbose=false, ...
    Plots="training-progress", ...
    Metrics="accuracy");

Train the neural network. For classification, use cross-entropy loss.

net = trainnet(imdsTrain,layers,"crossentropy",options);

Test the network using the labeled test set. For single-label classification, evaluate the accuracy. The accuracy is the percentage of the labels that the network predicts correctly.

accuracy = testnet(net,imdsTest,"accuracy")

accuracy = 98.2400

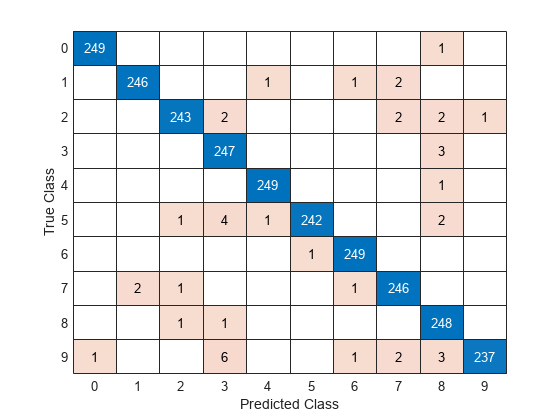

Predict the classification scores using the trained network, then convert the scores to labels using the scores2label function.

scoresTest = minibatchpredict(net,imdsTest);
YTest = scores2label(scoresTest,classNames);

Visualize the predictions in a confusion chart.

confusionchart(imdsTest.Labels,YTest)

If you have a data set of numeric features (for example, tabular data without spatial or time dimensions), then you can train a deep neural network using a feature input layer.

Read the transmission casing data from the CSV file "transmissionCasingData.csv".

filename = "transmissionCasingData.csv";
tbl = readtable(filename,TextType="String");

Convert the labels for prediction to categorical using the convertvars function.

labelName = "GearToothCondition";
tbl = convertvars(tbl,labelName,"categorical");

To train a network using categorical features, you must first convert the categorical features to numeric. First, convert the categorical predictors to categorical using the convertvars function by specifying a string array containing the names of all the categorical input variables. In this data set, there are two categorical features with names "SensorCondition" and "ShaftCondition".

categoricalPredictorNames = ["SensorCondition" "ShaftCondition"];
tbl = convertvars(tbl,categoricalPredictorNames,"categorical");

Loop over the categorical input variables. For each variable, convert the categorical values to one-hot encoded vectors using the onehotencode function.

for i = 1:numel(categoricalPredictorNames)
    name = categoricalPredictorNames(i);
    tbl.(name) = onehotencode(tbl.(name),2);
end
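As a small illustration of what the loop does to one variable, the sketch below encodes a made-up categorical column. The variable name condition and its values are hypothetical and are not part of the transmission casing data.

```matlab
% Hypothetical categorical column with two categories.
condition = categorical(["Healthy"; "Faulty"; "Healthy"]);

% Encode along the second dimension: one column per category.
encoded = onehotencode(condition,2);

% The columns of encoded follow the order returned by categories.
disp(categories(condition))
disp(encoded)
```

Each row of encoded contains a single 1 in the column of the category for that observation, which is why one categorical variable appears as several columns in the table.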

View the first few rows of the table. Notice that the categorical predictors have been split into multiple columns.

head(tbl)

| SigMean | SigMedian | SigRMS | SigVar | SigPeak | SigPeak2Peak | SigSkewness | SigKurtosis | SigCrestFactor | SigMAD | SigRangeCumSum | SigCorrDimension | SigApproxEntropy | SigLyapExponent | PeakFreq | HighFreqPower | EnvPower | PeakSpecKurtosis | SensorCondition | ShaftCondition | GearToothCondition |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| -0.94876 | -0.9722 | 1.3726 | 0.98387 | 0.81571 | 3.6314 | -0.041525 | 2.2666 | 2.0514 | 0.8081 | 28562 | 1.1429 | 0.031581 | 79.931 | 0 | 6.75e-06 | 3.23e-07 | 162.13 | 0 1 | 1 0 | No Tooth Fault |
| -0.97537 | -0.98958 | 1.3937 | 0.99105 | 0.81571 | 3.6314 | -0.023777 | 2.2598 | 2.0203 | 0.81017 | 29418 | 1.1362 | 0.037835 | 70.325 | 0 | 5.08e-08 | 9.16e-08 | 226.12 | 0 1 | 1 0 | No Tooth Fault |
| 1.0502 | 1.0267 | 1.4449 | 0.98491 | 2.8157 | 3.6314 | -0.04162 | 2.2658 | 1.9487 | 0.80853 | 31710 | 1.1479 | 0.031565 | 125.19 | 0 | 6.74e-06 | 2.85e-07 | 162.13 | 0 1 | 0 1 | No Tooth Fault |
| 1.0227 | 1.0045 | 1.4288 | 0.99553 | 2.8157 | 3.6314 | -0.016356 | 2.2483 | 1.9707 | 0.81324 | 30984 | 1.1472 | 0.032088 | 112.5 | 0 | 4.99e-06 | 2.4e-07 | 162.13 | 0 1 | 0 1 | No Tooth Fault |
| 1.0123 | 1.0024 | 1.4202 | 0.99233 | 2.8157 | 3.6314 | -0.014701 | 2.2542 | 1.9826 | 0.81156 | 30661 | 1.1469 | 0.03287 | 108.86 | 0 | 3.62e-06 | 2.28e-07 | 230.39 | 0 1 | 0 1 | No Tooth Fault |
| 1.0275 | 1.0102 | 1.4338 | 1.0001 | 2.8157 | 3.6314 | -0.02659 | 2.2439 | 1.9638 | 0.81589 | 31102 | 1.0985 | 0.033427 | 64.576 | 0 | 2.55e-06 | 1.65e-07 | 230.39 | 0 1 | 0 1 | No Tooth Fault |
| 1.0464 | 1.0275 | 1.4477 | 1.0011 | 2.8157 | 3.6314 | -0.042849 | 2.2455 | 1.9449 | 0.81595 | 31665 | 1.1417 | 0.034159 | 98.838 | 0 | 1.73e-06 | 1.55e-07 | 230.39 | 0 1 | 0 1 | No Tooth Fault |
| 1.0459 | 1.0257 | 1.4402 | 0.98047 | 2.8157 | 3.6314 | -0.035405 | 2.2757 | 1.955 | 0.80583 | 31554 | 1.1345 | 0.0353 | 44.223 | 0 | 1.11e-06 | 1.39e-07 | 230.39 | 0 1 | 0 1 | No Tooth Fault |

View the class names of the data set.

classNames = categories(tbl{:,labelName})

classNames = 2×1 cell

{'No Tooth Fault'}

{'Tooth Fault' }

Set aside data for testing. Partition the data into a training set containing 85% of the data and a test set containing the remaining 15% of the data. To partition the data, use the trainingPartitions function, attached to this example as a supporting file. To access this file, open the example as a live script.

numObservations = size(tbl,1);
[idxTrain,idxTest] = trainingPartitions(numObservations,[0.85 0.15]);
tblTrain = tbl(idxTrain,:);
tblTest = tbl(idxTest,:);
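If the trainingPartitions supporting file is not available, a random 85/15 split can be sketched with randperm. This is an illustrative stand-in written for this page, not the source of trainingPartitions, and the fixed number of observations is an assumption.

```matlab
% Illustrative stand-in for an 85/15 split; not the trainingPartitions source.
numObservations = 100;                    % assumed size, for the sketch only
rng(0)                                    % make the shuffle repeatable

idx = randperm(numObservations);          % shuffle the observation indices
numTrain = floor(0.85*numObservations);   % number of training observations

idxTrain = idx(1:numTrain);
idxTest = idx(numTrain+1:end);
```

The two index vectors are disjoint and together cover every observation, so they can be used to index the table rows in the same way as the outputs of trainingPartitions.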

Convert the data to a format that the trainnet function supports. Convert the predictors and targets to numeric and categorical arrays, respectively. For feature input, the network expects data with rows that correspond to observations and columns that correspond to the features. If your data has a different layout, then you can preprocess your data to have this layout or you can provide layout information using data formats. For more information, see Deep Learning Data Formats.

predictorNames = ["SigMean" "SigMedian" "SigRMS" "SigVar" "SigPeak" "SigPeak2Peak" ...
    "SigSkewness" "SigKurtosis" "SigCrestFactor" "SigMAD" "SigRangeCumSum" ...
    "SigCorrDimension" "SigApproxEntropy" "SigLyapExponent" "PeakFreq" ...
    "HighFreqPower" "EnvPower" "PeakSpecKurtosis" "SensorCondition" "ShaftCondition"];
XTrain = table2array(tblTrain(:,predictorNames));
TTrain = tblTrain.(labelName);
XTest = table2array(tblTest(:,predictorNames));
TTest = tblTest.(labelName);

Define a network with a feature input layer and specify the number of features. Also, configure the input layer to normalize the data using Z-score normalization.

numFeatures = size(XTrain,2);

numClasses = numel(classNames);

layers = [

featureInputLayer(numFeatures,Normalization="zscore")

fullyConnectedLayer(16)

layerNormalizationLayer

reluLayer

fullyConnectedLayer(numClasses)

softmaxLayer];

Specify the training options:

Train using the L-BFGS solver. This solver suits tasks with small networks and when the data fits in memory.

Train using the CPU. Because the network and data are small, the CPU is better suited.

Display the training progress in a plot.

Suppress the verbose output.

options = trainingOptions("lbfgs", ...
    ExecutionEnvironment="cpu", ...
    Plots="training-progress", ...
    Verbose=false);

Train the network using the trainnet function. For classification, use cross-entropy loss.

net = trainnet(XTrain,TTrain,layers,"crossentropy",options);

Test the network using the labeled test set. For single-label classification, evaluate the accuracy. The accuracy is the percentage of the labels that the network predicts correctly.

accuracy = testnet(net,XTest,TTest,"accuracy")

accuracy = 100

Predict the labels of the test data using the trained network. First predict the classification scores, then convert the scores to labels using the scores2label function.

scoresTest = minibatchpredict(net,XTest);
YTest = scores2label(scoresTest,classNames);

Visualize the predictions in a confusion chart.

confusionchart(TTest,YTest)

Input Arguments

Data

images

Image data, specified as a numeric array, dlarray object, datastore, minibatchqueue object, or categorical array.

Tip

For sequences of images, for example video data, use the sequences input argument.

If you have data that fits in memory and does not require additional processing such as data augmentation, then specifying the input data as a numeric or categorical array is usually the easiest option. If you want to train with image files stored on disk, or want to apply additional processing such as data augmentation, then using datastores is usually the easiest option. For neural networks with multiple outputs, you must use a TransformedDatastore, CombinedDatastore, or minibatchqueue object.

Tip

Neural networks expect input data with a specific layout. For example, image classification networks typically expect image representations to be h-by-w-by-c numeric arrays, where h, w, and c are the height, width, and number of channels of the images, respectively. Neural networks typically have an input layer that specifies the expected layout of the data.

Most datastores and functions output data in the layout that the network expects. If your data is in a different layout than what the network expects, then indicate that your data has a different layout by using the InputDataFormats training option, specifying the data as a minibatchqueue object and specifying the MiniBatchFormat property, or by specifying input data as a formatted dlarray object. Specifying data formats is usually easier than preprocessing the input data. If you specify both the InputDataFormats training option and the MiniBatchFormat minibatchqueue property, then they must match.

For neural networks that do not have input layers, you must use the InputDataFormats training option, specify the data as a minibatchqueue object and use the InputDataFormats property, or use formatted dlarray objects.

Loss functions expect data with a specific layout. For example, for sequence-to-vector regression networks, the loss function typically expects target vectors to be represented as a 1-by-R vector, where R is the number of responses.

Most datastores and functions output data in the layout that the loss function expects. If your target data is in a different layout than what the loss function expects, then indicate that your targets have a different layout by using the TargetDataFormats training option, specifying the data as a minibatchqueue object and specifying the TargetDataFormats property, or by specifying the target data as a formatted dlarray object. Specifying data formats is usually easier than preprocessing the target data. If you specify both the TargetDataFormats training option and the TargetDataFormats minibatchqueue property, then they must match.

For more information, see Deep Learning Data Formats.
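The options described in this tip can be sketched for a hypothetical batch of images stored observation-first (N-by-h-by-w-by-c). The array X and its sizes are made up for illustration. The format string "BSSC" stands for batch, spatial, spatial, channel.

```matlab
% Hypothetical batch stored observation-first: N-by-h-by-w-by-c.
X = rand(10,28,28,3);

% Option 1: preprocess the data by permuting it to h-by-w-by-c-by-N.
XPermuted = permute(X,[2 3 4 1]);
size(XPermuted)

% Option 2: keep the array as is and label its dimensions
% using a formatted dlarray object.
XFormatted = dlarray(X,"BSSC");
dims(XFormatted)

% Option 3: keep the array as is and describe its layout
% using the InputDataFormats training option.
options = trainingOptions("sgdm",InputDataFormats="BSSC");
```

Options 2 and 3 leave the stored data untouched, which is often simpler than permuting large arrays by hand.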

Numeric Array or dlarray Object

For data that fits in memory and does not require additional processing like augmentation, you can specify a data set of images as a numeric array or a dlarray object. If you specify images as a numeric array or a dlarray object, then you must also specify the targets argument.

The layout of numeric arrays and unformatted dlarray objects depends on the type of image data and must be consistent with the InputDataFormats training option.

Most networks expect image data in these layouts.

| Data | Layout |
|---|---|
| 2-D images | h-by-w-by-c-by-N array, where h, w, and c are the height, width, and number of channels of the images, respectively, and N is the number of images. Data in this layout has the data format "SSCB" (spatial, spatial, channel, batch). |
| 3-D images | h-by-w-by-d-by-c-by-N array, where h, w, d, and c are the height, width, depth, and number of channels of the images, respectively, and N is the number of images. Data in this layout has the data format "SSSCB" (spatial, spatial, spatial, channel, batch). |

For data in a different layout, indicate that your data has a different layout by using the InputDataFormats training option or use a formatted dlarray object instead. For more information, see Deep Learning Data Formats.
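As a minimal sketch of training from in-memory arrays, the code below uses synthetic images in the h-by-w-by-c-by-N layout and passes the targets as a separate argument. The data, class names, and layer sizes are made up for illustration, so the resulting network is not meaningful.

```matlab
% Synthetic data, for illustration only: 100 grayscale 28-by-28 images
% in h-by-w-by-c-by-N layout, with one categorical label per image.
numObservations = 100;
XTrain = rand(28,28,1,numObservations,"single");
TTrain = categorical(randi(3,numObservations,1),1:3,["a" "b" "c"]);

layers = [
    imageInputLayer([28 28 1])
    convolution2dLayer(3,8)
    reluLayer
    fullyConnectedLayer(3)
    softmaxLayer];

options = trainingOptions("sgdm",MaxEpochs=1,Verbose=false);

% With in-memory arrays, specify the targets as a separate argument.
net = trainnet(XTrain,TTrain,layers,"crossentropy",options);
```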

Categorical Array (since R2025a)

For images of categorical values (such as labeled pixel maps) that fit in memory and do not require additional processing, you can specify the images as categorical arrays.

If you specify images as a categorical array, then you must also specify the targets argument.

The software automatically converts categorical inputs to numeric values and passes them to the neural network. To specify how the software converts categorical inputs to numeric values, use the CategoricalInputEncoding argument of the training options function. The layout of categorical arrays depends on the type of image data and must be consistent with the InputDataFormats argument of the training options function.

Most networks expect categorical image data passed to the trainnet function in the layouts in this table.

| Data | Layout |
|---|---|
| 2-D categorical images | h-by-w-by-1-by-N array, where h and w are the height and width of the images, respectively, and N is the number of images. After the software converts this data to numeric arrays, data in this layout has the data format "SSCB" (spatial, spatial, channel, batch). |
| 3-D categorical images | h-by-w-by-d-by-1-by-N array, where h, w, and d are the height, width, and depth of the images, respectively, and N is the number of images. After the software converts this data to numeric arrays, data in this layout has the data format "SSSCB" (spatial, spatial, spatial, channel, batch). |

For data in a different layout, indicate that your data has a different layout by using the InputDataFormats training option or use a formatted dlarray object instead. For more information, see Deep Learning Data Formats.
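The sketch below builds a hypothetical set of label maps in the h-by-w-by-1-by-N categorical layout. The class names and sizes are made up. The manual one-hot encoding at the end is shown only for comparison and is not a description of what trainnet does internally.

```matlab
% Hypothetical label maps: 8 images of size 32-by-32, stored as an
% h-by-w-by-1-by-N categorical array with three classes.
classNames = ["sky" "road" "car"];
labelIdx = randi(numel(classNames),[32 32 1 8]);
C = categorical(labelIdx,1:numel(classNames),classNames);
size(C)

% For comparison, a manual one-hot encoding along the third dimension
% gives an h-by-w-by-numClasses-by-N numeric array.
XOneHot = onehotencode(C,3);
size(XOneHot)
```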

Datastore

Datastores read batches of images and targets. Datastores are best suited when you have data that does not fit in memory or when you want to apply augmentations or transformations to the data.

For image data, the trainnet function supports these datastores:

| Datastore | Description | Example Usage |
|---|---|---|
| ImageDatastore | Datastore of images saved on disk. | Train image classification neural network with images saved on disk, where the images are the same size. When the images are different sizes, use an augmentedImageDatastore object. |
| augmentedImageDatastore | Datastore that applies random affine geometric transformations, including resizing, rotation, reflection, shear, and translation. | |
| TransformedDatastore | Datastore that transforms batches of data read from an underlying datastore using a custom transformation function. | |
| CombinedDatastore | Datastore that reads from two or more underlying datastores. | |
| randomPatchExtractionDatastore (Image Processing Toolbox) | Datastore that extracts pairs of random patches from images or pixel label images and optionally applies identical random affine geometric transformations to the pairs. | Train neural network for object detection. |
| denoisingImageDatastore (Image Processing Toolbox) | Datastore that applies randomly generated Gaussian noise. | Train neural network for image denoising. |
| Custom mini-batch datastore | Custom datastore that returns mini-batches of data. | Train neural network using data in a layout that other datastores do not support. For details, see Develop Custom Mini-Batch Datastore. |

To specify the targets, the datastore must return cell arrays or tables with numInputs+numOutputs columns, where numInputs and numOutputs are the number of network inputs and outputs, respectively. The first numInputs columns correspond to the network inputs. The last numOutputs columns correspond to the network outputs. The InputNames and OutputNames properties of the neural network specify the order of the input and output data, respectively.
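For a network with one input and one output, a datastore that returns two columns can be sketched by combining two arrayDatastore objects. The data below is synthetic and the variable names are made up for illustration.

```matlab
% Synthetic images (h-by-w-by-c-by-N) and one label per image.
X = rand(28,28,1,100);
T = categorical(randi(10,100,1));

% Iterate over the fourth dimension so that each read returns one image.
dsX = arrayDatastore(X,IterationDimension=4);
dsT = arrayDatastore(T);

% The combined datastore returns one column per underlying datastore:
% first the network input, then the target.
dsTrain = combine(dsX,dsT);
sample = read(dsTrain)
```

The order of the datastores in the call to combine determines the column order, so list the input datastores first and the target datastores last.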

Tip

ImageDatastore objects allow batch reading of JPG or PNG image files using prefetching. For efficient preprocessing of images for deep learning, including image resizing, use an augmentedImageDatastore object. Do not use the ReadFcn property of ImageDatastore objects. If you set the ReadFcn property to a custom function, then the ImageDatastore object does not prefetch image files and is usually significantly slower.

For best performance, if you are training a network using a datastore with a ReadSize property, such as an imageDatastore, then set the ReadSize property and MiniBatchSize training option to the same value. If you are training a network using a datastore with a MiniBatchSize property, such as an augmentedImageDatastore, then set the MiniBatchSize property of the datastore and the MiniBatchSize training option to the same value.

You can use other built-in datastores for training deep learning neural networks by using the transform and combine functions. These functions can convert the data read from datastores to the layout required by the trainnet function. The required layout of the datastore output depends on the neural network architecture. For more information, see Datastore Customization.

minibatchqueue Object (since R2024a)

For greater control over how the software processes and transforms mini-batches, you can specify data as a minibatchqueue object that returns the predictors and targets.

If you specify data as a minibatchqueue object, then the trainnet function ignores the MiniBatchSize property of the object and uses the MiniBatchSize training option instead.

To specify the targets, the minibatchqueue must have numInputs+numOutputs outputs, where numInputs and numOutputs are the number of network inputs and outputs, respectively. The first numInputs outputs correspond to the network inputs. The last numOutputs outputs correspond to the network outputs. The InputNames and OutputNames properties of the neural network specify the order of the input and output data, respectively.
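A minibatchqueue object with one predictor output and one target output can be sketched as follows. The data is synthetic, and preprocessMiniBatch is a hypothetical helper written for this sketch, not a built-in function. The one-hot encoding of the targets is one possible choice, assumed here so that the targets can be returned as numeric data.

```matlab
% Synthetic images and labels, for illustration only.
X = rand(28,28,1,100,"single");
T = categorical(randi(10,100,1),1:10);
dsTrain = combine(arrayDatastore(X,IterationDimension=4),arrayDatastore(T));

% Two outputs: predictors ("SSCB") followed by targets ("CB").
% The MiniBatchSize set here applies to next; trainnet uses the
% MiniBatchSize training option instead.
mbq = minibatchqueue(dsTrain, ...
    MiniBatchSize=32, ...
    MiniBatchFcn=@preprocessMiniBatch, ...
    MiniBatchFormat=["SSCB" "CB"]);

% Read one mini-batch to inspect the outputs.
[XBatch,TBatch] = next(mbq);
size(XBatch)
size(TBatch)

function [X,T] = preprocessMiniBatch(XCell,TCell)
% Hypothetical helper: stack images along the fourth dimension and
% one-hot encode the labels into a numClasses-by-batchSize array.
X = cat(4,XCell{:});
T = cat(2,TCell{:});
T = onehotencode(T,1);
end
```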

Note

This argument supports complex-valued predictors and targets.

sequences

Sequence or time series data, specified as a numeric array, a cell array of numeric arrays, a dlarray object, a cell array of dlarray objects, datastore, or minibatchqueue object.

If you have sequences of the same length that fit in memory and do not require additional processing, then specifying the input data as a numeric array is usually the easiest option. If you have sequences of different lengths that fit in memory and do not require additional processing, then specifying the input data as a cell array of numeric arrays is usually the easiest option. If you want to train with sequences stored on disk, or want to apply additional processing such as custom transformations, then using datastores is usually the easiest option. For neural networks with multiple inputs, you must use a TransformedDatastore or CombinedDatastore object.

Tip

Neural networks expect input data with a specific layout. For example, vector-sequence classification networks typically expect vector-sequence representations to be t-by-c arrays, where t and c are the number of time steps and channels of sequences, respectively. Neural networks typically have an input layer that specifies the expected layout of the data.

Most datastores and functions output data in the layout that the network expects. If your data is in a different layout than what the network expects, then indicate that your data has a different layout by using the InputDataFormats training option, specifying the data as a minibatchqueue object and specifying the MiniBatchFormat property, or by specifying input data as a formatted dlarray object. Specifying data formats is usually easier than preprocessing the input data. If you specify both the InputDataFormats training option and the MiniBatchFormat minibatchqueue property, then they must match.

For neural networks that do not have input layers, you must use the InputDataFormats training option, specify the data as a minibatchqueue object and use the InputDataFormats property, or use formatted dlarray objects.

Loss functions expect data with a specific layout. For example, for sequence-to-vector regression networks, the loss function typically expects target vectors to be represented as a 1-by-R vector, where R is the number of responses.

Most datastores and functions output data in the layout that the loss function expects. If your target data is in a different layout than what the loss function expects, then indicate that your targets have a different layout by using the TargetDataFormats training option, specifying the data as a minibatchqueue object and specifying the TargetDataFormats property, or by specifying the target data as a formatted dlarray object. Specifying data formats is usually easier than preprocessing the target data. If you specify both the TargetDataFormats training option and the TargetDataFormats minibatchqueue property, then they must match.

For more information, see Deep Learning Data Formats.

Numeric Array, Categorical Array, dlarray Object, or Cell Array

For data that fits in memory and does not require additional processing like custom transformations, you can specify a single sequence as a numeric array, categorical array, or a dlarray object, or a data set of sequences as a cell array of numeric arrays, categorical arrays, or dlarray objects. If you specify sequences as a numeric array, categorical array, cell array, or a dlarray object, then you must also specify the targets argument.

For cell array input, the cell array must be an N-by-1 cell array of numeric arrays, categorical arrays, or dlarray objects, where N is the number of observations.

The software automatically converts categorical inputs to numeric values and passes them to the neural network. To specify how the software converts categorical inputs to numeric values, use the CategoricalInputEncoding argument of the training options function.

The size and shape of the numeric arrays, categorical arrays, or dlarray objects that represent sequences depend on the type of sequence data and must be consistent with the InputDataFormats training option.

Most networks with a sequence input layer expect sequence data passed to the trainnet function in the layouts in this table.

| Data | Layout |
|---|---|
| Vector sequences | s-by-c matrices, where s and c are the numbers of time steps and channels (features) of the sequences, respectively. |
| Categorical vector sequences | s-by-1 categorical arrays, where s is the number of time steps of the sequences. |
| 1-D image sequences | h-by-c-by-s arrays, where h and c correspond to the height and number of channels of the images, respectively, and s is the sequence length. |
| Categorical 1-D image sequences | h-by-1-by-s categorical arrays, where h corresponds to the height of the images and s is the sequence length. |
| 2-D image sequences | h-by-w-by-c-by-s arrays, where h, w, and c correspond to the height, width, and number of channels of the images, respectively, and s is the sequence length. |
| Categorical 2-D image sequences | h-by-w-by-1-by-s categorical arrays, where h and w correspond to the height and width of the images, respectively, and s is the sequence length. |
| 3-D image sequences | h-by-w-by-d-by-c-by-s arrays, where h, w, d, and c correspond to the height, width, depth, and number of channels of the 3-D images, respectively, and s is the sequence length. |
| Categorical 3-D image sequences | h-by-w-by-d-by-1-by-s categorical arrays, where h, w, and d correspond to the height, width, and depth of the 3-D images, respectively, and s is the sequence length. |

For data in a different layout, indicate that your data has a different layout by using the InputDataFormats training option or use a formatted dlarray object instead. For more information, see Deep Learning Data Formats.
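If vector sequences are stored channels-first (c-by-s), one preprocessing route is to transpose each sequence into the s-by-c layout listed in the table. The sketch below uses made-up data, and the name sequencesCS is hypothetical.

```matlab
% Hypothetical data stored channels-first: each cell is c-by-s,
% and the number of time steps s varies between observations.
numChannels = 3;
sequencesCS = {rand(numChannels,12); rand(numChannels,20); rand(numChannels,15)};

% Transpose each sequence to s-by-c. The result is an N-by-1 cell array.
sequences = cellfun(@(x) x.',sequencesCS,UniformOutput=false);

% Check the number of time steps and channels of each sequence.
cellfun(@(x) size(x,1),sequences)
cellfun(@(x) size(x,2),sequences)
```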

Datastore

Datastores read batches of sequences and targets. Datastores are best suited when you have data that does not fit in memory or when you want to apply transformations to the data.

For sequence and time-series data, the trainnet function supports these datastores:

| Datastore | Description | Example Usage |
|---|---|---|
| TransformedDatastore | Datastore that transforms batches of data read from an underlying datastore using a custom transformation function. | |
| CombinedDatastore | Datastore that reads from two or more underlying datastores. | Combine predictors and targets from different data sources. |
| Custom mini-batch datastore | Custom datastore that returns mini-batches of data. | Train neural network using data in a layout that other datastores do not support. For details, see Develop Custom Mini-Batch Datastore. |

To specify the targets, the datastore must return cell arrays or tables with numInputs+numOutputs columns, where numInputs and numOutputs are the number of network inputs and outputs, respectively. The first numInputs columns correspond to the network inputs. The last numOutputs columns correspond to the network outputs. The InputNames and OutputNames properties of the neural network specify the order of the input and output data, respectively.

You can use other built-in datastores by using the transform and combine functions. These functions can convert the data read from datastores to the layout required by the trainnet function. For example, you can transform and combine data read from in-memory arrays and CSV files using ArrayDatastore and TabularTextDatastore objects, respectively. The required layout of the datastore output depends on the neural network architecture. For more information, see Datastore Customization.
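As a sketch of combining in-memory sequence data, the code below wraps a cell array of variable-length sequences and a vector of labels in ArrayDatastore objects. The data is synthetic. Setting OutputType to "same" is used here so that each read returns the stored sequence in a single cell rather than a cell nested inside another cell.

```matlab
% Synthetic variable-length sequences (s-by-c) and one label per sequence.
numObservations = 5;
sequences = cell(numObservations,1);
for i = 1:numObservations
    sequences{i} = rand(randi([10 20]),3);
end
targets = categorical(randi(2,numObservations,1));

% Wrap predictors and targets, then combine them column-wise.
dsX = arrayDatastore(sequences,OutputType="same");
dsT = arrayDatastore(targets);
dsTrain = combine(dsX,dsT);

% Each read returns a 1-by-2 cell array: {sequence, target}.
sample = read(dsTrain)
```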

minibatchqueue Object (since R2024a)

For greater control over how the software processes and transforms mini-batches, you can specify data as a minibatchqueue object that returns the predictors and targets.

If you specify data as a minibatchqueue object, then the trainnet function ignores the MiniBatchSize property of the object and uses the MiniBatchSize training option instead.

To specify the targets, the minibatchqueue must have numInputs+numOutputs outputs, where numInputs and numOutputs are the number of network inputs and outputs, respectively. The first numInputs outputs correspond to the network inputs. The last numOutputs outputs correspond to the network outputs. The InputNames and OutputNames properties of the neural network specify the order of the input and output data, respectively.

Note

This argument supports complex-valued predictors and targets.

features

Feature or tabular data, specified as a numeric array, datastore, table, or minibatchqueue object.

If you have data that fits in memory and does not require additional processing, then specifying the input data as a numeric array or table is usually the easiest option. If you want to train with feature or tabular data stored on disk, or want to apply additional processing such as custom transformations, then using datastores is usually the easiest option. For neural networks with multiple inputs, you must use a TransformedDatastore or CombinedDatastore object.

Tip

Neural networks expect input data with a specific layout. For example, feature classification networks typically expect feature and tabular data representations to be 1-by-c vectors, where c is the number of features of the data. Neural networks typically have an input layer that specifies the expected layout of the data.

Most datastores and functions output data in the layout that the network expects. If your data is in a different layout than what the network expects, then indicate that your data has a different layout by using the InputDataFormats training option, specifying the data as a minibatchqueue object and specifying the MiniBatchFormat property, or by specifying input data as a formatted dlarray object. Specifying data formats is usually easier than preprocessing the input data. If you specify both the InputDataFormats training option and the MiniBatchFormat minibatchqueue property, then they must match.

For neural networks that do not have input layers, you must use the InputDataFormats training option, specify the data as a minibatchqueue object and use the InputDataFormats property, or use formatted dlarray objects.

Loss functions expect data with a specific layout. For example, for sequence-to-vector regression networks, the loss function typically expects target vectors to be represented as a 1-by-R vector, where R is the number of responses.

Most datastores and functions output data in the layout that the loss function expects. If your target data is in a different layout than what the loss function expects, then indicate that your targets have a different layout by using the TargetDataFormats training option, specifying the data as a minibatchqueue object and specifying the TargetDataFormats property, or by specifying the target data as a formatted dlarray object. Specifying data formats is usually easier than preprocessing the target data. If you specify both the TargetDataFormats training option and the TargetDataFormats minibatchqueue property, then they must match.

For more information, see Deep Learning Data Formats.

Numeric Array or dlarray Object

For feature data that fits in memory and does not require additional processing like custom transformations, you can specify feature data as a numeric array. If you specify feature data as a numeric array, then you must also specify the targets argument.

The layout of numeric arrays and unformatted dlarray objects must be consistent with the InputDataFormats training option. Most networks with feature input expect input data specified as an N-by-numFeatures array, where N is the number of observations and numFeatures is the number of features of the input data.

For data in a different layout, indicate that your data has a different layout by using the InputDataFormats training option or use a formatted dlarray object instead. For more information, see Deep Learning Data Formats.

Categorical Array (since R2025a)

For discrete features that fit in memory and do not require additional processing like custom transformations, you can specify the feature data as a categorical array.

If you specify features as a categorical array, then you must also specify the targets argument.

The software automatically converts categorical inputs to numeric values and passes them to the neural network. To specify how the software converts categorical inputs to numeric values, use the CategoricalInputEncoding argument of the training options function. The layout of categorical arrays must be consistent with the InputDataFormats argument of the training options function.

Most networks with categorical feature input expect input data specified as an N-by-1 vector, where N is the number of observations. After the software converts this data to numeric arrays, data in this layout has the data format "BC" (batch, channel). The size of the "C" (channel) dimension depends on the CategoricalInputEncoding argument of the training options function.

For data in a different layout, indicate that your data has a different layout by using the InputDataFormats training option or use a formatted dlarray object instead. For more information, see Deep Learning Data Formats.

Table (since R2024a)

For feature data that fits in memory and does not require additional processing

like custom transformations, you can specify feature data as a table. If you specify

feature data as a table, then you must not specify the targets

argument.

To specify feature data as a table, specify a table with

numObservations rows and numFeatures+1

columns, where numObservations and numFeatures

are the number of observations and features of the input data, respectively. The

trainnet function uses the first numFeatures

columns as the input features and uses the last column as the targets.
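The table layout can be sketched as follows, assuming a small synthetic regression problem with two features and one response. The variable names x1, x2, and y are hypothetical. The only requirement taken from the text is that the target occupies the last column.

```matlab
% Synthetic regression data (illustrative only).
rng(0)
numObservations = 100;
x1 = rand(numObservations,1);
x2 = rand(numObservations,1);
y  = 2*x1 - x2 + 0.1*randn(numObservations,1);

% numObservations rows and numFeatures+1 columns; the last column is the target.
tbl = table(x1,x2,y);

layers = [
    featureInputLayer(2)
    fullyConnectedLayer(8)
    reluLayer
    fullyConnectedLayer(1)];

options = trainingOptions("adam",MaxEpochs=2,Verbose=false);

% No separate targets argument: trainnet reads them from the table.
net = trainnet(tbl,layers,"mse",options);
```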

Datastore

Datastores read batches of feature data and targets. Datastores are best suited when you have data that does not fit in memory or when you want to apply transformations to the data.

For feature and tabular data, the trainnet function supports

these datastores:

| Data Type | Description | Example Usage |
|---|---|---|
| TransformedDatastore | Datastore that transforms batches of data read from an underlying datastore using a custom transformation function. | |
| CombinedDatastore | Datastore that reads from two or more underlying datastores. | |
| Custom mini-batch datastore | Custom datastore that returns mini-batches of data. | Train neural network using data in a layout that other datastores do not support. For details, see Develop Custom Mini-Batch Datastore. |

To specify the targets, the datastore must return cell arrays or tables with

numInputs+numOutputs columns, where

numInputs and numOutputs are the

number of network inputs and outputs, respectively. The first

numInputs columns correspond to the network inputs. The

last numOutputs columns correspond to the network outputs. The

InputNames and OutputNames properties

of the neural network specify the order of the input and output data,

respectively.

You can use other built-in datastores for training deep learning neural networks

by using the transform

and combine

functions. These functions can convert the data read from datastores to the table or

cell array format required by trainnet. For more information, see

Datastore Customization.
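One way to build such a datastore from in-memory arrays is to wrap the predictors and targets in arrayDatastore objects and join them with combine. The sketch below assumes synthetic data and a single-input, single-output regression network. Each read returns one observation as a 1-by-2 cell array, with the predictors in the first column and the target in the second.

```matlab
% Synthetic data (illustrative only): 4 features, 100 observations.
rng(0)
numObservations = 100;
XTrain = rand(4,numObservations,"single");   % numFeatures-by-N
TTrain = rand(numObservations,1,"single");   % N-by-1

% Iterate over columns so that each read returns a 4-by-1 feature vector.
dsX = arrayDatastore(XTrain,IterationDimension=2);
dsT = arrayDatastore(TTrain);

% numInputs + numOutputs = 2 columns: predictors first, targets last.
dsTrain = combine(dsX,dsT);

sample = read(dsTrain);   % 1-by-2 cell array for one observation
reset(dsTrain)

layers = [
    featureInputLayer(4)
    fullyConnectedLayer(8)
    reluLayer
    fullyConnectedLayer(1)];

options = trainingOptions("adam",MaxEpochs=2,MiniBatchSize=16,Verbose=false);
net = trainnet(dsTrain,layers,"mse",options);
```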

minibatchqueue Object (since R2024a)

For greater control over how the software processes and transforms mini-batches, you can

specify data as a minibatchqueue

object that returns the predictors and targets.

If you specify data as a minibatchqueue object, then the

trainnet function ignores the MiniBatchSize

property of the object and uses the MiniBatchSize training option

instead.

To specify the targets, the minibatchqueue must have

numInputs+numOutputs outputs, where numInputs and

numOutputs are the number of network inputs and outputs,

respectively. The first numInputs outputs correspond to the network

inputs. The last numOutputs outputs correspond to the network outputs. The

InputNames and OutputNames properties of the neural

network specify the order of the input and output data, respectively.

Note

This argument supports complex-valued predictors and targets.

Generic data or combinations of data types, specified as a numeric array,

dlarray object, datastore, or minibatchqueue

object.

If you have data that fits in memory that does not require additional processing,

then specifying the input data as a numeric array is usually the easiest option. If you

want to train with data stored on disk, or want to apply additional processing, then

using datastores is usually the easiest option. For neural networks with multiple

inputs, you must use a TransformedDatastore

or CombinedDatastore

object.

Tip

Neural networks expect input data with a specific layout. For example, vector-sequence classification networks typically expect vector-sequence representations to be t-by-c arrays, where t and c are the number of time steps and channels of sequences, respectively. Neural networks typically have an input layer that specifies the expected layout of the data.

Most datastores and functions output data in the layout that the network expects. If your data

is in a different layout than what the network expects, then indicate that your data has a

different layout by using the InputDataFormats training option,

specifying the data as a minibatchqueue object and specifying the

MiniBatchFormat property, or by specifying input data as a formatted

dlarray object. Specifying data formats is usually easier than

preprocessing the input data. If you specify both the InputDataFormats

training option and the MiniBatchFormat

minibatchqueue property, then they must match.

For neural networks that do not have input layers, you must use the

InputDataFormats training option, specify the data as a

minibatchqueue object and use the MiniBatchFormat

property, or use formatted dlarray objects.

Loss functions expect data with a specific layout. For example, for sequence-to-vector regression networks, the loss function typically expects target vectors to be represented as a 1-by-R vector, where R is the number of responses.

Most datastores and functions output data in the layout that the loss function expects. If

your target data is in a different layout than what the loss function expects, then indicate

that your targets have a different layout by using the TargetDataFormats

training option, specifying the data as a minibatchqueue object and

specifying the MiniBatchFormat property, or by specifying the target

data as a formatted dlarray object. Specifying data formats is usually

easier than preprocessing the target data. If you specify both the

TargetDataFormats training option and the

MiniBatchFormat

minibatchqueue property, then they must match.

For more information, see Deep Learning Data Formats.

Numeric Arrays, Categorical Arrays, or dlarray Objects

For data that fits in memory and does not require additional processing like

custom transformations, you can specify data as a numeric array, categorical array, or

a dlarray object. If you specify data as a numeric array, then you

must also specify the targets argument.

For a neural network with an inputLayer object, the expected

layout of input data is given by the InputFormat property of the

layer.

The software automatically converts categorical inputs to numeric values and passes

them to the neural network. To specify how the software converts categorical inputs to

numeric values, use the CategoricalInputEncoding argument of the training options function. The

layout of categorical arrays must be consistent with the InputDataFormats argument of the training options function.

For data in a different layout, indicate that your data has a different layout by

using the InputDataFormats training option or use a formatted

dlarray object instead. For more information, see Deep Learning Data Formats.

Datastores

Datastores read batches of data and targets. Datastores are best suited when you have data that does not fit in memory or when you want to apply transformations to the data.

For generic data or combinations of data types, the trainnet

function supports these datastores:

| Data Type | Description | Example Usage |
|---|---|---|
| TransformedDatastore | Datastore that transforms batches of data read from an underlying datastore using a custom transformation function. | |
| CombinedDatastore | Datastore that reads from two or more underlying datastores. | |
| Custom mini-batch datastore | Custom datastore that returns mini-batches of data. | Train neural network using data in a format that other datastores do not support. For details, see Develop Custom Mini-Batch Datastore. |

To specify the targets, the datastore must return cell arrays or tables with

numInputs+numOutputs columns, where

numInputs and numOutputs are the

number of network inputs and outputs, respectively. The first

numInputs columns correspond to the network inputs. The

last numOutputs columns correspond to the network outputs. The

InputNames and OutputNames properties

of the neural network specify the order of the input and output data,

respectively.

You can use other built-in datastores by using the transform and

combine

functions. These functions can convert the data read from datastores to the table or cell

array format required by trainnet. For more information, see Datastore Customization.
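For a network with more than one input, the column order of the datastore follows the InputNames property of the network. The following sketch assumes a hypothetical two-input regression network (layer names in1, in2, fc1, fc2, add, and out are made up for illustration) and synthetic data. It also assumes a release in which an empty dlnetwork object can be built up with addLayers and connectLayers.

```matlab
% Synthetic data (illustrative only) for two feature inputs and one target.
rng(0)
numObservations = 64;
X1 = rand(4,numObservations,"single");
X2 = rand(3,numObservations,"single");
T  = rand(numObservations,1,"single");

% numInputs + numOutputs = 3 columns: in1, in2, then the target.
ds = combine( ...
    arrayDatastore(X1,IterationDimension=2), ...
    arrayDatastore(X2,IterationDimension=2), ...
    arrayDatastore(T));

% Two branches joined by an addition layer.
net = dlnetwork;
net = addLayers(net,[
    featureInputLayer(4,Name="in1")
    fullyConnectedLayer(8,Name="fc1")
    additionLayer(2,Name="add")
    fullyConnectedLayer(1,Name="out")]);
net = addLayers(net,[
    featureInputLayer(3,Name="in2")
    fullyConnectedLayer(8,Name="fc2")]);
net = connectLayers(net,"fc2","add/in2");

% The datastore columns must follow this order.
disp(net.InputNames)

options = trainingOptions("adam",MaxEpochs=2,MiniBatchSize=16,Verbose=false);
netTrained = trainnet(ds,net,"mse",options);
```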

minibatchqueue Object (since R2024a)

For greater control over how the software processes and transforms mini-batches, you can

specify data as a minibatchqueue

object that returns the predictors and targets.

If you specify data as a minibatchqueue object, then the

trainnet function ignores the MiniBatchSize

property of the object and uses the MiniBatchSize training option

instead.

To specify the targets, the minibatchqueue must have

numInputs+numOutputs outputs, where numInputs and

numOutputs are the number of network inputs and outputs,

respectively. The first numInputs outputs correspond to the network

inputs. The last numOutputs outputs correspond to the network outputs. The

InputNames and OutputNames properties of the neural

network specify the order of the input and output data, respectively.

Note

This argument supports complex-valued predictors and targets.

Training targets, specified as a categorical array, numeric array, or a cell array of sequences.

To specify targets for networks with multiple outputs, specify the targets using the

images,

sequences,

features, or

data

arguments.

Tip

Loss functions expect data with a specific layout. For example, for sequence-to-vector regression networks, the loss function typically expects target vectors to be represented as a 1-by-R vector, where R is the number of responses.

Most datastores and functions output data in the layout that the loss function expects. If

your target data is in a different layout than what the loss function expects, then indicate

that your targets have a different layout by using the TargetDataFormats

training option, specifying the data as a minibatchqueue object and

specifying the MiniBatchFormat property, or by specifying the target

data as a formatted dlarray object. Specifying data formats is usually

easier than preprocessing the target data. If you specify both the

TargetDataFormats training option and the

MiniBatchFormat

minibatchqueue property, then they must match.

For more information, see Deep Learning Data Formats.

The expected layout of the targets depends on the loss function and the type of task. The targets listed here are only a subset. The loss functions may support additional targets with different layouts such as targets with additional dimensions. For custom loss functions, the software uses the format information of the network output data to determine the type of target data and applies the corresponding layout in this table.

| Loss Function | Target | Target Layout |
|---|---|---|
| "crossentropy" | Categorical labels | N-by-1 categorical vector of labels, where N is the number of observations. |
| | Sequences of categorical labels | |
| "index-crossentropy" | Categorical labels | N-by-1 categorical vector of labels, where N is the number of observations. |
| | Class indices | N-by-1 numeric vector of class indices, where N is the number of observations. |
| | Sequences of categorical labels | |
| | Sequences of class indices | |
| "binary-crossentropy" | Binary labels (single label) | N-by-1 vector, where N is the number of observations. |
| | Binary labels (multilabel) | N-by-c matrix, where N and c are the numbers of observations and classes, respectively. |
| "mae", "mse", "huber" | Numeric scalars | N-by-1 vector, where N is the number of observations. |
| | Numeric vectors | N-by-R matrix, where N is the number of observations and R is the number of responses. |
| | 2-D images | h-by-w-by-c-by-N numeric array, where h, w, and c are the height, width, and number of channels of the images, respectively, and N is the number of images. |
| | 3-D images | |
| | Numeric sequences of scalars | |
| | Numeric sequences of vectors | |
| | Sequences of 1-D images | |
| | Sequences of 2-D images | |
| | Sequences of 3-D images | |

For targets in a different layout, indicate that your targets have a different layout

by using the TargetDataFormats training option or use a formatted

dlarray object instead. For more information, see Deep Learning Data Formats.

The software automatically converts categorical targets to numeric values and passes

them to the loss and metrics functions. If you train using the

"index-crossentropy" loss function, then the software converts

categorical targets to their integer values. Otherwise, the software converts

categorical targets to one-hot encoded vectors.

To specify how the software converts

categorical targets to numeric values, use the CategoricalTargetEncoding argument of the training options

function. (since R2025a)
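The two encodings can be illustrated outside of training with the onehotencode and double functions. This sketch only shows what the encoded values look like for a hypothetical label vector. It does not claim to reproduce the internal conversion that trainnet performs.

```matlab
% Hypothetical labels with an explicit category order: bird, cat, dog.
labels = categorical(["cat";"dog";"cat";"bird"],["bird" "cat" "dog"]);

% One-hot encoding along the second dimension: one column per category.
onehot = onehotencode(labels,2);   % 4-by-3 numeric matrix

% Integer encoding: the position of each label in the category list.
idx = double(labels);              % 4-by-1 numeric vector

disp(onehot)
disp(idx)
```

The one-hot matrix grows with the number of classes, whereas the integer vector does not, which is why the integer encoding saves memory when there are many classes.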

Tip

Normalizing the targets often helps to stabilize and speed up training of neural networks for regression. For more information, see Train Convolutional Neural Network for Regression.

Training Details

Neural network architecture, specified as a dlnetwork object or a layer array.

For a list of built-in neural network layers, see List of Deep Learning Layers.

Loss function to use for training, specified as one of these values:

- "crossentropy": Cross-entropy loss for classification tasks, normalized by dividing by the number of non-channel elements of the network output.
- "index-crossentropy" (since R2024b): Index cross-entropy loss for classification tasks, normalized by dividing by the number of elements in the targets. Use this option to save memory when there are many categorical classes.
- "binary-crossentropy": Binary cross-entropy loss for binary and multilabel classification tasks, normalized by dividing by the number of elements of the network output.
- "mae" / "mean-absolute-error" / "l1loss": Mean absolute error for regression tasks, normalized by dividing by the number of elements of the network output.
- "mse" / "mean-squared-error" / "l2loss": Mean squared error for regression tasks, normalized by dividing by the number of elements of the network output.
- "huber": Huber loss for regression tasks, normalized by dividing by the number of elements of the network output.
- Function handle with the syntax loss = f(Y1,...,Yn,T1,...,Tm), where Y1,...,Yn are dlarray objects that correspond to the n network predictions and T1,...,Tm are dlarray objects that correspond to the m targets.
- deep.DifferentiableFunction object (since R2024a): Function object with custom backward function.

Tip

For weighted cross-entropy, use the function handle

@(Y,T)crossentropy(Y,T,weights).

For more information about defining a custom function, see Define Custom Deep Learning Operations.
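A function-handle loss can be assembled from the built-in dlarray loss functions. The sketch below assumes a regression task on synthetic data and combines mse with a small l1loss term. The 0.1 weighting and all variable names are arbitrary choices made for illustration.

```matlab
% Synthetic regression data (illustrative only).
rng(0)
numObservations = 128;
X = rand(numObservations,4,"single");
T = sum(X,2) + 0.05*randn(numObservations,1,"single");

layers = [
    featureInputLayer(4)
    fullyConnectedLayer(8)
    reluLayer
    fullyConnectedLayer(1)];

% Custom loss with the syntax loss = f(Y,T) for one prediction and one target.
% Y and T arrive as dlarray objects; the result must be a scalar loss.
lossFcn = @(Y,T) mse(Y,T) + 0.1*l1loss(Y,T);

options = trainingOptions("adam",MaxEpochs=2,Verbose=false);
net = trainnet(X,T,layers,lossFcn,options);
```

Because the handle is built from functions that support automatic differentiation, no custom backward function is needed in this case.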

Training options, specified as a TrainingOptionsSGDM,

TrainingOptionsRMSProp, TrainingOptionsADAM,

TrainingOptionsLBFGS, or TrainingOptionsLM object

returned by the trainingOptions function.

Output Arguments

Trained network, returned as a dlnetwork object.

Training information, returned as a TrainingInfo

object with these properties:

- TrainingHistory: Information about training iterations
- ValidationHistory: Information about validation iterations
- OutputNetworkIteration: Iteration that corresponds to trained network
- StopReason: Reason why training stopped

You can also use info to open and close the training progress

plot using the show and

close

functions.
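A short sketch of requesting and inspecting the second output, assuming synthetic regression data and a small network chosen only for illustration:

```matlab
% Synthetic regression data (illustrative only).
rng(0)
X = rand(128,4,"single");
T = sum(X,2);

layers = [
    featureInputLayer(4)
    fullyConnectedLayer(8)
    reluLayer
    fullyConnectedLayer(1)];

options = trainingOptions("adam",MaxEpochs=2,Verbose=false);

% Request the training information as a second output.
[net,info] = trainnet(X,T,layers,"mse",options);

% Inspect the per-iteration record and the reason training ended.
history = info.TrainingHistory;
disp(history)
disp(info.StopReason)
```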

More About

By default, the software performs computations using single-precision, floating-point arithmetic to train a neural network using the trainnet function. The trainnet function returns a network with single-precision learnables and state parameters.

When you use prediction or validation functions with a dlnetwork object with single-precision learnable and state parameters, the software performs the computations using single-precision, floating-point arithmetic.

To provide the best performance, deep learning using a GPU in

MATLAB® is not guaranteed to be deterministic. Depending on your network architecture,

under some conditions you might get different results when using a GPU to train two identical

networks or make two predictions using the same network and data. If you require determinism

when performing deep learning operations using a GPU, use the deep.gpu.deterministicAlgorithms function (since R2024b).

If you use the rng function to set the same random number generator and seed, then training

using a CPU is reproducible unless:

- You set the PreprocessingEnvironment training option to "background" or "parallel".
- Your training data is a minibatchqueue object with the PreprocessingEnvironment property set to "background" or "parallel".
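The CPU reproducibility statement above can be checked with a sketch like the following, which trains the same layer array twice from the same seed and compares the learnable parameters. The data, seed, and network are illustrative assumptions.

```matlab
% Synthetic regression data (illustrative only).
rng(0)
X = rand(128,4,"single");
T = sum(X,2);

layers = [
    featureInputLayer(4)
    fullyConnectedLayer(8)
    reluLayer
    fullyConnectedLayer(1)];

% Train on the CPU so that the reproducibility statement applies.
options = trainingOptions("sgdm",MaxEpochs=2,Verbose=false, ...
    ExecutionEnvironment="cpu");

% Reset the generator to the same seed before each run.
rng(42)
net1 = trainnet(X,T,layers,"mse",options);
rng(42)
net2 = trainnet(X,T,layers,"mse",options);

% Compare every learnable parameter of the two trained networks.
same = cellfun(@(a,b) isequal(extractdata(a),extractdata(b)), ...
    net1.Learnables.Value,net2.Learnables.Value);
disp(all(same))
```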

Tips

- For regression tasks, normalizing the targets often helps to stabilize and speed up training. For more information, see Train Convolutional Neural Network for Regression.
- In most cases, if the predictors or targets contain NaN values, then they are propagated through the network and the training fails to converge.
- To convert a numeric array to a datastore, use ArrayDatastore.
- When you combine layers in a neural network with mixed types of data, you may need to reformat the data before passing it to a combination layer (such as a concatenation or an addition layer). To reformat the data, you can use a flatten layer to flatten the spatial dimensions into the channel dimension, or create a FunctionLayer object or custom layer that reformats and reshapes the data.

Algorithms

Most datastores output data in the layout that neural networks expect. If you create your own datastore, or apply custom transformations to datastores, then you must ensure that the datastore outputs data in the supported layout.

There are two main aspects:

The structure of the batch of data. The datastore must output a table or cell array with rows that correspond to observations, and columns that correspond to the inputs and targets.

The layout of the predictors and targets. For example, the predictors and targets must be in a layout that is supported by the network and loss function.

When you use a datastore for training a neural network, the structure of the datastore output depends on the neural network architecture.

| Neural Network Architecture | Datastore Output | Example Cell Array Output | Example Table Output |

|---|---|---|---|

| Single input layer and single output | Table or cell array with two columns. The first and second columns specify the predictors and targets, respectively. Table elements must be scalars, row vectors, or 1-by-1 cell arrays containing a numeric array. Custom mini-batch datastores must output tables. | Cell array for neural network with one input and one output: data = read(ds) data =

4×2 cell array

{224×224×3 double} {[2]}

{224×224×3 double} {[7]}

{224×224×3 double} {[9]}

{224×224×3 double} {[9]} | Table for neural network with one input and one output: data = read(ds) data =

4×2 table

Predictors Response

__________________ ________

{224×224×3 double} 2

{224×224×3 double} 7

{224×224×3 double} 9

{224×224×3 double} 9

|

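| Multiple input layers or multiple outputs | Cell array with (numInputs + numOutputs) columns, where numInputs and numOutputs are the numbers of network inputs and outputs, respectively. The first numInputs columns specify the predictors for each input and the last numOutputs columns specify the targets. The order of the inputs and outputs is given by the InputNames and OutputNames properties of the neural network, respectively. | Cell array for neural network with two inputs and two outputs: data = read(ds) data =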

4×4 cell array

{224×224×3 double} {128×128×3 double} {[2]} {[-42]}

{224×224×3 double} {128×128×3 double} {[2]} {[-15]}

{224×224×3 double} {128×128×3 double} {[9]} {[-24]}

{224×224×3 double} {128×128×3 double} {[9]} {[-44]} | Not supported |

The datastore must return data in a table or cell array. Custom mini-batch datastores must output tables.

Neural networks and loss functions expect input data with a specific layout. For example, vector-sequence classification networks typically expect a sequence to be represented as a t-by-c numeric array, where t and c are the number of time steps and channels of sequences, respectively. Neural networks typically have an input layer that specifies the expected layout of the data.

Most datastores and functions output data in the layout that the networks and loss

functions expect. If your data is in a different layout than what the network or loss

function expects, then indicate that your data has a different layout by using the

InputDataFormats and TargetDataFormats training

options or by specifying the data as formatted dlarray objects.

Adjusting the InputDataFormats and

TargetDataFormats training options is usually easier than

preprocessing the input data.

For neural networks that do not have input layers, you must use the

InputDataFormats training option or use formatted

dlarray objects.

For more information, see Deep Learning Data Formats.

Most networks expect these data layouts of predictors:

Image Input

| Data | Predictor Layout |

|---|---|

| 2-D images | h-by-w-by-c numeric array, where h, w, and c are the height, width, and number of channels of the images, respectively. |

| 3-D images | h-by-w-by-d-by-c numeric array, where h, w, d, and c are the height, width, depth, and number of channels of the images, respectively. |

Sequence Input

| Data | Predictor Layout |

|---|---|

| Vector sequence | s-by-c matrix, where s is the sequence length and c is the number of features of the sequence. |

| 1-D image sequence | h-by-c-by-s array, where h and c correspond to the height and number of channels of the image, respectively, and s is the sequence length. Each sequence in the batch must have the same sequence length. |

| 2-D image sequence | h-by-w-by-c-by-s array, where h, w, and c correspond to the height, width, and number of channels of the image, respectively, and s is the sequence length. Each sequence in the batch must have the same sequence length. |

| 3-D image sequence | h-by-w-by-d-by-c-by-s array, where h, w, d, and c correspond to the height, width, depth, and number of channels of the image, respectively, and s is the sequence length. Each sequence in the batch must have the same sequence length. |

Feature Input

| Data | Predictor Layout |

|---|---|

| Features | c-by-1 column vectors, where c is the number of features. |

Most loss functions expect these data layouts for targets:

| Target | Target Layout |

|---|---|

| Categorical labels | Categorical scalar. |

| Sequences of categorical labels | t-by-1 categorical vector, where t is the number of time steps. |

| Binary labels (single label) | Numeric scalar |

| Binary labels (multilabel) | 1-by-c vector, where c is the number of classes. |

| Numeric scalars | Numeric scalar |

| Numeric vectors | 1-by-R vector, where R is the number of responses. |

| 2-D images | h-by-w-by-c numeric array, where h, w, and c are the height, width, and number of channels of the images, respectively. |

| 3-D images | h-by-w-by-d-by-c numeric array, where h, w, d, and c are the height, width, depth, and number of channels of the images, respectively. |

| Numeric sequences of scalars | t-by-1 vector, where t is the number of time steps. |

| Numeric sequences of vectors | t-by-c array, where t and c are the numbers of time steps and channels, respectively. |

| Sequences of 1-D images | h-by-c-by-t array, where h, c, and t are the height, number of channels, and number of time steps of the sequences, respectively. |

| Sequences of 2-D images | h-by-w-by-c-by-t array, where h, w, c, and t are the height, width, number of channels, and number of time steps of the sequences, respectively. |

| Sequences of 3-D images | h-by-w-by-d-by-c-by-t array, where h, w, d, c, and t are the height, width, depth, number of channels, and number of time steps of the sequences, respectively. |

For more information, see Deep Learning Data Formats.

Extended Capabilities

This function fully supports GPU acceleration.

By default, the trainnet function uses a GPU if one is

available. You can specify the hardware that the trainnet function

uses by setting the ExecutionEnvironment training option using the trainingOptions function.
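A minimal sketch of selecting the hardware explicitly, assuming you want to fall back to the CPU when no supported GPU is present. The variable name env is illustrative.

```matlab
% Pick the execution environment based on GPU availability.
if canUseGPU
    env = "gpu";
else
    env = "cpu";
end

% Pass the choice to trainnet through the training options.
options = trainingOptions("adam", ...
    ExecutionEnvironment=env, ...
    Verbose=false);

disp(options.ExecutionEnvironment)
```

Leaving the option at its default gives the same fallback behavior. Setting it explicitly is mainly useful when you want to force CPU training, for example to get reproducible results.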

For more information, see Scale Up Deep Learning in Parallel, on GPUs, and in the Cloud.

Version History

Introduced in R2023b

The software automatically converts categorical input data and targets to numeric values

and passes them to the neural network and loss function, respectively. To specify how the

software encodes categorical input data and targets, use the CategoricalInputEncoding and CategoricalTargetEncoding arguments of the trainingOptions function, respectively.

Index cross-entropy loss, also known as sparse cross-entropy loss,

is a more memory and computationally efficient alternative to the standard cross-entropy

loss algorithm. Unlike the "crossentropy" loss function, which requires

converting categorical targets to one-hot encoded vectors, the

"index-crossentropy" function operates on the integer values of the

categorical targets directly.

Using index cross-entropy loss is well suited for predictions over many classes, where one-hot encoded data presents unnecessary memory overheads.

To specify index cross-entropy loss, specify the lossFcn argument

as "index-crossentropy".
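A sketch of training with index cross-entropy loss, assuming a release that supports it (R2024b or later, per the text above) and a synthetic 50-class problem invented for illustration:

```matlab
% Synthetic classification data (illustrative only) with many classes.
rng(0)
numObservations = 500;
numFeatures = 10;
numClasses = 50;
X = rand(numObservations,numFeatures,"single");
T = categorical(randi(numClasses,numObservations,1),1:numClasses);

layers = [
    featureInputLayer(numFeatures)
    fullyConnectedLayer(64)
    reluLayer
    fullyConnectedLayer(numClasses)
    softmaxLayer];

options = trainingOptions("adam",MaxEpochs=2,Verbose=false);

% The targets stay as a categorical vector; only the loss name changes.
net = trainnet(X,T,layers,"index-crossentropy",options);
```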

The trainnet function has these advantages and is recommended over

the trainNetwork function:

- trainnet supports dlnetwork objects, which support a wider range of network architectures that you can create or import from external platforms.
- trainnet enables you to easily specify loss functions. You can select from built-in loss functions or specify a custom loss function.
- trainnet outputs a dlnetwork object, which is a unified data type that supports network building, prediction, built-in training, visualization, compression, verification, and custom training loops.
- trainnet is typically faster than trainNetwork.

Specify in-memory feature data as a minibatchqueue

object.

Specify in-memory feature data as a table using the features

argument.

Specify the loss function as a deep.DifferentiableFunction object.
