predict

Predict responses for new observations from ECOC incremental learning classification model

Since R2022a

Description

label = predict(Mdl,X) returns the predicted responses (labels) label for the observations in the predictor data X from the incremental learning model Mdl.

label = predict(Mdl,X,Name=Value) specifies options using one or more name-value arguments. For example, specify ObservationsIn="columns" to indicate that observations in the predictor data are oriented along the columns of X.

[label,NegLoss,PBScore] = predict(___) uses any of the input argument combinations in the previous syntaxes and additionally returns:

- An array of negated average binary losses (NegLoss). For each observation in X, predict assigns the label of the class yielding the largest negated average binary loss (or, equivalently, the smallest average binary loss).

- An array of positive-class scores (PBScore) for the observations classified by each binary learner.

Examples

Create an incremental learning model by converting a traditionally trained ECOC model, and predict class labels using both models.

Load the human activity data set.

load humanactivity

For details on the data set, enter Description at the command line.

Fit a multiclass ECOC classification model to the entire data set.

Mdl = fitcecoc(feat,actid);

Mdl is a ClassificationECOC model object representing a traditionally trained ECOC classification model.

Convert the traditionally trained ECOC classification model to a model for incremental learning.

IncrementalMdl = incrementalLearner(Mdl)

IncrementalMdl =

incrementalClassificationECOC

IsWarm: 1

Metrics: [1×2 table]

ClassNames: [1 2 3 4 5]

ScoreTransform: 'none'

BinaryLearners: {10×1 cell}

CodingName: 'onevsone'

Decoding: 'lossweighted'

Properties, Methods

IncrementalMdl is an incrementalClassificationECOC model object prepared for incremental learning.

The incrementalLearner function initializes the incremental learner by passing the coding design and model parameters for binary learners to it, along with other information Mdl extracts from the training data. IncrementalMdl is warm (IsWarm is 1), which means that incremental learning functions can track performance metrics and make predictions.

An incremental learner created from converting a traditionally trained model can generate predictions without further processing.

Predict class labels for all observations using both models.

ttlabels = predict(Mdl,feat);
illables = predict(IncrementalMdl,feat);
isequal(ttlabels,illables)

ans = logical

1

Both models predict the same labels for each observation.

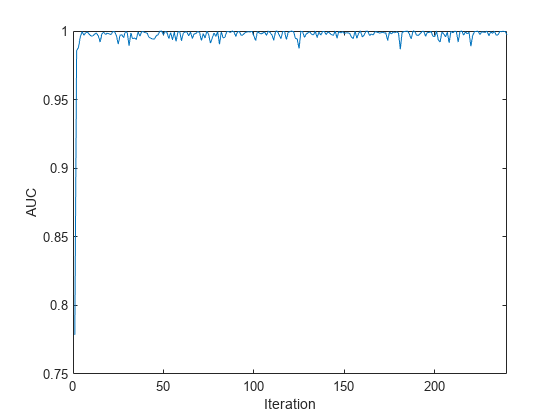

Prepare an incremental ECOC model for predict by fitting the model to a chunk of observations. Compute negated average binary losses for streaming data by using the predict function, and evaluate the model performance using the area under the receiver operating characteristic (ROC) curve, or AUC.

Load the human activity data set. Randomly shuffle the data.

load humanactivity
n = numel(actid);
rng(10) % For reproducibility
idx = randsample(n,n);
X = feat(idx,:);
Y = actid(idx);

For details on the data set, enter Description at the command line.

Create an ECOC model for incremental learning. Specify the class names. Prepare the model for predict by fitting the model to the first 10 observations.

Mdl = incrementalClassificationECOC(ClassNames=unique(Y));
initobs = 10;
Mdl = fit(Mdl,X(1:initobs,:),Y(1:initobs));

Mdl is an incrementalClassificationECOC model. All its properties are read-only. The model is configured to generate predictions.

Simulate a data stream, and perform the following actions on each incoming chunk of 100 observations.

- Call predict to compute negated average binary losses for each observation in the incoming chunk of data. Specify the "lossbased" decoding scheme.

- Call rocmetrics to compute the AUC using the negated average binary losses, and store the AUC value, averaged over all classes. This AUC is an incremental measure of how well the model predicts the activities on average.

- Call fit to fit the model to the incoming chunk. Overwrite the previous incremental model with a new one fitted to the incoming observations.

numObsPerChunk = 100;
nchunk = floor((n - initobs)/numObsPerChunk);
auc = zeros(nchunk,1);
% Incremental learning
for j = 1:nchunk
    ibegin = min(n,numObsPerChunk*(j-1) + 1 + initobs);
    iend = min(n,numObsPerChunk*j + initobs);
    idx = ibegin:iend;
    [~,NegLoss] = predict(Mdl,X(idx,:),Decoding="lossbased");
    mdlROC = rocmetrics(Y(idx),NegLoss,Mdl.ClassNames);
    [~,~,~,auc(j)] = average(mdlROC,"micro");
    Mdl = fit(Mdl,X(idx,:),Y(idx));
end

Mdl is an incrementalClassificationECOC model object trained on all the data in the stream.

Plot the AUC values for each incoming chunk of data.

plot(auc)
xlim([0 nchunk])
ylabel("AUC")
xlabel("Iteration")

The plot suggests that the classifier predicts the activities well during incremental learning.

Input Arguments

ECOC classification model for incremental learning, specified as an incrementalClassificationECOC model object. You can create Mdl by calling incrementalClassificationECOC directly, or by converting a supported, traditionally trained machine learning model using the incrementalLearner function.

You must configure Mdl to predict labels for a batch of observations.

- If Mdl is a converted, traditionally trained model, you can predict labels without any modifications.

- Otherwise, you must fit Mdl to data using fit or updateMetricsAndFit.

Batch of predictor data, specified as a floating-point matrix of n observations and Mdl.NumPredictors predictor variables. The value of the ObservationsIn name-value argument determines the orientation of the variables and observations. The default ObservationsIn value is "rows", which indicates that observations in the predictor data are oriented along the rows of X.

Note

predict supports only floating-point input predictor data. If your input data includes categorical data, you must prepare an encoded version of the categorical data. Use dummyvar to convert each categorical variable to a numeric matrix of dummy variables. Then, concatenate all dummy variable matrices and any other numeric predictors. For more details, see Dummy Variables.
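The encoding step described in the note can be sketched as follows. This is a minimal illustration, not part of the original page: the variables temperature and color are hypothetical, and the sketch assumes Statistics and Machine Learning Toolbox is available for dummyvar.

```matlab
% Hypothetical data: one numeric predictor and one categorical predictor.
temperature = [20.5; 18.2; 25.1; 22.0];
color = categorical(["red"; "green"; "blue"; "red"]);

% dummyvar creates one indicator column per category. For this input the
% categories are ordered alphabetically: blue, green, red.
colorDummies = dummyvar(color);

% Concatenate the numeric predictor and the dummy variables into a single
% floating-point matrix that can be passed to predict as X.
X = [temperature colorDummies];
```

The resulting X has one row per observation, so it matches the default ObservationsIn value of "rows".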

Data Types: single | double

Name-Value Arguments

Specify optional pairs of arguments as Name1=Value1,...,NameN=ValueN, where Name is the argument name and Value is the corresponding value. Name-value arguments must appear after other arguments, but the order of the pairs does not matter.

Example: BinaryLoss="quadratic",Decoding="lossbased" specifies the quadratic binary learner loss function and the loss-based decoding scheme for aggregating the binary losses.

Binary learner loss function, specified as a built-in loss function name or function handle.

This table describes the built-in functions, where yj is the class label for a particular binary learner (in the set {–1,1,0}), sj is the score for observation j, and g(yj,sj) is the binary loss formula.

| Value | Description | Score Domain | g(yj,sj) |
|---|---|---|---|
| "binodeviance" | Binomial deviance | (–∞,∞) | log[1 + exp(–2yjsj)]/[2log(2)] |
| "exponential" | Exponential | (–∞,∞) | exp(–yjsj)/2 |
| "hamming" | Hamming | [0,1] or (–∞,∞) | [1 – sign(yjsj)]/2 |
| "hinge" | Hinge | (–∞,∞) | max(0,1 – yjsj)/2 |
| "linear" | Linear | (–∞,∞) | (1 – yjsj)/2 |
| "logit" | Logistic | (–∞,∞) | log[1 + exp(–yjsj)]/[2log(2)] |
| "quadratic" | Quadratic | [0,1] | [1 – yj(2sj – 1)]^2/2 |

The software normalizes binary losses so that the loss is 0.5 when yj = 0. Also, the software calculates the mean binary loss for each class [1].

For a custom binary loss function, for example customFunction, specify its function handle BinaryLoss=@customFunction.

customFunction has this form:

bLoss = customFunction(M,s)

- M is the K-by-B coding matrix stored in Mdl.CodingMatrix.

- s is the 1-by-B row vector of classification scores.

- bLoss is the classification loss. This scalar aggregates the binary losses for every learner in a particular class. For example, you can use the mean binary loss to aggregate the loss over the learners for each class.

- K is the number of classes.

- B is the number of binary learners.

For an example of a custom binary loss function, see Predict Test-Sample Labels of ECOC Model Using Custom Binary Loss Function. This example is for a traditionally trained model. You can define a custom loss function for incremental learning as shown in the example.

For more information, see Binary Loss.

Data Types: char | string | function_handle

Decoding scheme, specified as "lossweighted" or "lossbased".

The decoding scheme of an ECOC model specifies how the software aggregates the binary losses and determines the predicted class for each observation. The software supports two decoding schemes:

- "lossweighted": The predicted class of an observation corresponds to the class that produces the minimum sum of the binary losses over binary learners.

- "lossbased": The predicted class of an observation corresponds to the class that produces the minimum average of the binary losses over binary learners.

For more information, see Binary Loss.

Example: Decoding="lossbased"

Data Types: char | string

Predictor data observation dimension, specified as "rows" or "columns".

Example: ObservationsIn="columns"

Data Types: char | string

Output Arguments

Predicted responses (labels), returned as a categorical or character array; floating-point, logical, or string vector; or cell array of character vectors with n rows. n is the number of observations in X, and label(j) is the predicted response for observation j.

label has the same data type as the class names stored in Mdl.ClassNames. (The software treats string arrays as cell arrays of character vectors.)

The predict function predicts the classification of an observation by assigning the observation to the class yielding the largest negated average binary loss (or, equivalently, the smallest average binary loss). For an observation with NaN loss values, the function classifies the observation into the majority class, which makes up the largest proportion of the training labels.

Negated average binary losses, returned as an n-by-K numeric matrix. n is the number of observations in X, and K is the number of distinct classes in the training data (numel(Mdl.ClassNames)).

NegLoss(i,k) is the negated average binary loss for classifying observation i into the kth class.

- If Decoding is 'lossbased', then NegLoss(i,k) is the negated sum of the binary losses divided by the total number of binary learners.

- If Decoding is 'lossweighted', then NegLoss(i,k) is the negated sum of the binary losses divided by the number of binary learners for the kth class.

For more details, see Binary Loss.

Positive-class scores for each binary learner, returned as an n-by-B numeric matrix. n is the number of observations in X, and B is the number of binary learners (numel(Mdl.BinaryLearners)).
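As a rough illustration of the three output arguments and of the ObservationsIn option, the following sketch fits a model to an initial chunk and inspects the output sizes. It assumes the humanactivity data set that ships with Statistics and Machine Learning Toolbox; the initial chunk size of 100 and the variable names chunk and labelCols are arbitrary choices for this sketch.

```matlab
load humanactivity                       % provides feat (predictors) and actid (labels)
rng(10)                                  % for reproducibility
idx = randsample(numel(actid),numel(actid));
X = feat(idx,:);
Y = actid(idx);

% Prepare the model for predict by fitting it to an initial chunk.
Mdl = incrementalClassificationECOC(ClassNames=unique(Y));
Mdl = fit(Mdl,X(1:100,:),Y(1:100));

% Request all three outputs for a small batch of new observations.
chunk = X(101:105,:);
[label,NegLoss,PBScore] = predict(Mdl,chunk);

% The same batch with observations along the columns.
labelCols = predict(Mdl,chunk',ObservationsIn="columns");

size(NegLoss)   % n-by-K, where K = numel(Mdl.ClassNames)
size(PBScore)   % n-by-B, where B = numel(Mdl.BinaryLearners)
```

Both calls are expected to return the same labels, because only the orientation of the input differs.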

More About

The binary loss is a function of the class and classification score that determines how well a binary learner classifies an observation into the class. The decoding scheme of an ECOC model specifies how the software aggregates the binary losses and determines the predicted class for each observation.

Assume the following:

mkj is element (k,j) of the coding design matrix M—that is, the code corresponding to class k of binary learner j. M is a K-by-B matrix, where K is the number of classes, and B is the number of binary learners.

sj is the score of binary learner j for an observation.

g is the binary loss function.

k̂ is the predicted class for the observation.

The software supports two decoding schemes:

- Loss-based decoding [2] (Decoding is "lossbased"): The predicted class of an observation corresponds to the class that produces the minimum average of the binary losses over all binary learners.

$$\hat{k} = \underset{k}{\operatorname{argmin}} \; \frac{1}{B} \sum_{j=1}^{B} |m_{kj}| \, g(m_{kj},s_j).$$

- Loss-weighted decoding [3] (Decoding is "lossweighted"): The predicted class of an observation corresponds to the class that produces the minimum average of the binary losses over the binary learners for the corresponding class.

$$\hat{k} = \underset{k}{\operatorname{argmin}} \; \frac{\sum_{j=1}^{B} |m_{kj}| \, g(m_{kj},s_j)}{\sum_{j=1}^{B} |m_{kj}|}.$$

The denominator corresponds to the number of binary learners for class k. [1] suggests that loss-weighted decoding improves classification accuracy by keeping loss values for all classes in the same dynamic range.

The predict, resubPredict, and kfoldPredict functions return the negated value of the objective function of argmin as the second output argument (NegLoss) for each observation and class.
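The two decoding schemes can be illustrated by hand for a single observation. This is a simplified sketch under stated assumptions, not the software's implementation: the scores in s are hypothetical, the coding matrix is a one-versus-one design for three classes, the binary loss is the hinge loss, and the loss for learner j is weighted by |mkj| so that learners not involving class k contribute nothing.

```matlab
% One-versus-one coding matrix: K = 3 classes (rows), B = 3 binary learners (columns).
M = [ 1  1  0;
     -1  0  1;
      0 -1 -1];
s = [0.8 -0.3 1.2];               % hypothetical learner scores for one observation
g = @(y,s) max(0,1 - y.*s)/2;     % hinge binary loss

% K-by-B matrix of binary losses; entries where M is 0 are zeroed out.
L = abs(M).*g(M,s);

% Loss-based decoding: average over all B learners.
negLossBased = -sum(L,2)/size(M,2);

% Loss-weighted decoding: average over the learners that involve class k.
negLossWeighted = -sum(L,2)./sum(abs(M),2);

% The predicted class has the largest negated loss.
[~,kBased] = max(negLossBased);
[~,kWeighted] = max(negLossWeighted);
```

For these scores both schemes select the first class. The two schemes can disagree only when classes take part in different numbers of binary learners, which does not happen in a one-versus-one design.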

This table summarizes the supported binary loss functions, where yj is a class label for a particular binary learner (in the set {–1,1,0}), sj is the score for observation j, and g(yj,sj) is the binary loss function.

| Value | Description | Score Domain | g(yj,sj) |
|---|---|---|---|
| "binodeviance" | Binomial deviance | (–∞,∞) | log[1 + exp(–2yjsj)]/[2log(2)] |
| "exponential" | Exponential | (–∞,∞) | exp(–yjsj)/2 |
| "hamming" | Hamming | [0,1] or (–∞,∞) | [1 – sign(yjsj)]/2 |
| "hinge" | Hinge | (–∞,∞) | max(0,1 – yjsj)/2 |
| "linear" | Linear | (–∞,∞) | (1 – yjsj)/2 |
| "logit" | Logistic | (–∞,∞) | log[1 + exp(–yjsj)]/[2log(2)] |
| "quadratic" | Quadratic | [0,1] | [1 – yj(2sj – 1)]^2/2 |

The software normalizes binary losses so that the loss is 0.5 when yj = 0, and aggregates using the average of the binary learners [1].
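The formulas in the table can be written as anonymous functions to check the normalization claim. This is an illustrative sketch only: the struct g, its field names, and the score value are choices made here, not part of the software.

```matlab
% Binary loss formulas from the table. y is the class code for a binary
% learner (-1, 0, or 1) and s is the score.
g.binodeviance = @(y,s) log(1 + exp(-2*y.*s))/(2*log(2));
g.exponential  = @(y,s) exp(-y.*s)/2;
g.hamming      = @(y,s) (1 - sign(y.*s))/2;
g.hinge        = @(y,s) max(0,1 - y.*s)/2;
g.linear       = @(y,s) (1 - y.*s)/2;
g.logit        = @(y,s) log(1 + exp(-y.*s))/(2*log(2));
g.quadratic    = @(y,s) (1 - y.*(2*s - 1)).^2/2;

s = 0.7;                                        % hypothetical score
names = fieldnames(g);
lossAtZero = cellfun(@(f) g.(f)(0,s),names);    % evaluate each loss at y = 0
```

Every entry of lossAtZero equals 0.5, which matches the statement that the loss is 0.5 when yj = 0.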

Do not confuse the binary loss with the overall classification loss (specified by the LossFun name-value argument of the loss and predict object functions), which measures how well an ECOC classifier performs as a whole.

Algorithms

If the prior class probability distribution is known (in other words, the prior distribution is not empirical), predict normalizes observation weights to sum to the prior class probabilities in the respective classes. This action implies that the default observation weights are the respective prior class probabilities.

If the prior class probability distribution is empirical, the software normalizes the specified observation weights to sum to 1 each time you call predict.

References

[1] Allwein, E., R. Schapire, and Y. Singer. “Reducing multiclass to binary: A unifying approach for margin classifiers.” Journal of Machine Learning Research. Vol. 1, 2000, pp. 113–141.

[2] Escalera, S., O. Pujol, and P. Radeva. “Separability of ternary codes for sparse designs of error-correcting output codes.” Pattern Recog. Lett. Vol. 30, Issue 3, 2009, pp. 285–297.

[3] Escalera, S., O. Pujol, and P. Radeva. “On the decoding process in ternary error-correcting output codes.” IEEE Transactions on Pattern Analysis and Machine Intelligence. Vol. 32, Issue 7, 2010, pp. 120–134.

Extended Capabilities

Usage notes and limitations:

- Use saveLearnerForCoder, loadLearnerForCoder, and codegen (MATLAB Coder) to generate code for the predict function. Save a trained model by using saveLearnerForCoder. Define an entry-point function that loads the saved model by using loadLearnerForCoder and calls the predict function. Then use codegen to generate code for the entry-point function.

- To generate single-precision C/C++ code for predict, specify DataType="single" when you call the loadLearnerForCoder function.

- Use a homogeneous data type for all floating-point input arguments and object properties, specifically, either single or double.

- This table contains notes about the arguments of predict. Arguments not included in this table are fully supported.

| Argument | Notes and Limitations |
|---|---|
| Mdl | For usage notes and limitations of the model object, see incrementalClassificationECOC. |
| X | Batch-to-batch, the number of observations can be a variable size. The number of predictor variables must equal Mdl.NumPredictors. X must be single or double. |

For more information, see Introduction to Code Generation.

Version History

Introduced in R2022a
