Generate Scenario from Recorded GPS and Lidar Data

This example shows how to generate a driving scenario by extracting recorded data from global positioning systems (GPS) and lidar sensors mounted on an ego vehicle. In this example, you use prerecorded sensor data and follow these steps to generate the scenario:

Read data from the GPS sensor. Use the GPS data to:

Import the map data from OpenStreetMap®.

Extract the road information and driving route from the imported map data.

Compute the waypoints for the ego vehicle.

Estimate the ego speed and trajectory.

Read data from the lidar sensor. Use the lidar data to:

Find the dimension and the type of non-ego actors in the driving scene.

Estimate the entry times, exit times, positions, velocities, speeds, yaws, and trajectories of non-ego actors by using the lidar data.

Generate the driving scenario by using the extracted road network and the estimated ego and non-ego data.

Read GPS Sensor Data

Create a folder in the temporary directory on your computer to store the sensor data.

sensorData = fullfile(tempdir,"AutomotiveDataset"); if ~isfolder(sensorData) mkdir(sensorData) end

Download the .mat file containing the recorded GPS sensor data and save it to a specified folder in the temporary directory. Load the GPS sensor data from the .mat file into the MATLAB® workspace.

if ~exist("data","var") url = "https://ssd.mathworks.com/supportfiles/driving/data/highwayLaneChangeDatase/ins2.mat"; filePath = fullfile(sensorData,"ins.mat"); if ~isfile(filePath) websave(filePath,url); end data = load(filePath); end

Display the GPS sensor data. The data is stored as a structure with fields timeStamp, velocity, yawRate, latitude, longitude, and altitude. The latitude and the longitude coordinates specify the driving route of the ego vehicle.

data.ins

ans=1×1158 struct array with fields:

timeStamp

velocity

yawRate

latitude

longitude

altitude

Select a part of the recorded sensor data by specifying start and end timestamp values. Units are in microseconds. Typically, the units depend on the data logging configuration of the sensors.

sim.startTime = 1461634424950000; sim.endTime = 1461634434950000;

Compute the number of data points within the specified start and end timestamps. Also, find the start and end indices of the timestamps by using the helperTimestampIndex function.

[count.gps,sim.gps.startstep,sim.gps.endstep] = helperTimestampIndex([data.ins.timeStamp].',sim.startTime,sim.endTime);

Extract the timestamp, latitude, longitude, and elevation parameters of all data points between the start and end indices. Store the extracted parameters in a table.

data.ins = data.ins(sim.gps.startstep : sim.gps.endstep); gps = table([data.ins.timeStamp].',[data.ins.latitude].',[data.ins.longitude].',[data.ins.altitude].',VariableNames=["timestamp","lat","lon","elevation"]);

Extract Road Network Using GPS Sensor Data

Specify the minimum and maximum values of the latitude and longitude coordinates, for the selected data, to download a road network using the OpenStreetMap® website. You can also specify an offset value in order to extract extended map data that lies within the range of the GPS sensor.

map.gpsExtend = 0.0001; map.minLat = min(gps.lat) - map.gpsExtend; map.maxLat = max(gps.lat) + map.gpsExtend; map.minLon = min(gps.lon) - map.gpsExtend; map.maxLon = max(gps.lon) + map.gpsExtend;

Download the map data from the OpenStreetMap® website https://www.openstreetmap.org, which provides access to crowd-sourced map data from all over the world. The data is licensed under the Open Data Commons Open Database License (ODbL), https://opendatacommons.org/licenses/odbl/.

Extract the map data from within the minimum and maximum latitude and longitude coordinates. Specify a file name to save the downloaded map data. In this example, the file name is set as the name of a road in the driving route.

url = ['https://api.openstreetmap.org/api/0.6/map?bbox=' ... num2str(map.minLon, '%.10f') ',' num2str(map.minLat,'%.10f') ',' ... num2str(map.maxLon, '%.10f') ',' num2str(map.maxLat,'%.10f')]; fileName = "BanfieldFreeway.osm"; websave(fileName,url,weboptions(ContentType="xml"));

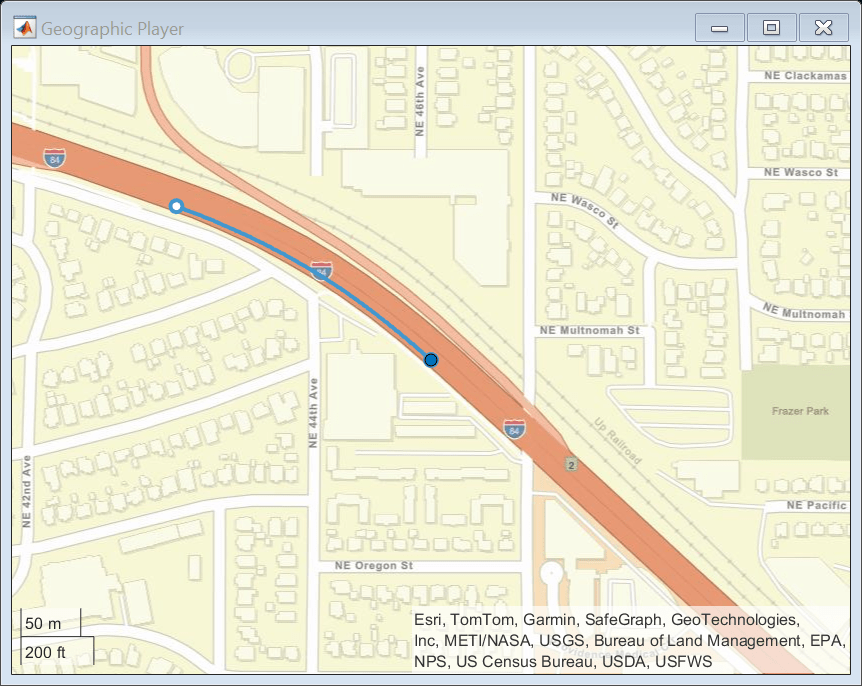

Plot the driving route on a map by using the geoPlayer function.

map.midLat = (map.minLat + map.maxLat)/2; map.midLon = (map.minLon + map.maxLon)/2; zoomLevel = 17; player = geoplayer(map.midLat,map.midLon,zoomLevel); figure plotRoute(player,gps.lat,gps.lon); for i = 1:count.gps plotPosition(player,gps.lat(i),gps.lon(i)); end

Get Road Information from Extracted Road Network

To get information from the extracted road network, you must first import the map data to a drivingScenario object. Then, use the helperGetRoadHistoryAttributes function to extract the road information.

Compute the total simulation time by using the start and end timestamps of the GPS data. Units are in seconds.

sim.TotalTime = (sim.endTime - sim.startTime)/10^6;

Specify the sample time for the driving scenario. The sample time is the time interval between scenario simulation steps, and also defines the sample time for the ego vehicle. Units are in seconds.

sim.egoSampleTime = 0.01;

Create an empty driving scenario object by using the drivingScenario function.

importedScenario = drivingScenario(SampleTime=sim.egoSampleTime,StopTime=sim.TotalTime);

Import the extracted map data to the driving scenario by using the roadNetwork function.

roadNetwork(importedScenario,OpenStreetMap=fileName);

Read the road information stored in the scenario object by using the helperGetRoadHistoryAttributes function. The road properties include the road centers, road width, banking angles, lane specifications, and road names.

[roadCenters,roadWidth,bankingAngles,laneSpec,roadNames] = helperGetRoadHistoryAttributes(importedScenario);

Create Driving Scenario and Add Roads to Scenario

Create a new drivingScenario object and add roads to the new scenario. Set the properties of these roads by using the extracted road information.

Specify the sample time for the new scenario.

sim.sampleTime = 0.1; scenario = drivingScenario(SampleTime=sim.sampleTime,StopTime=sim.TotalTime);

Specify a georeference point and use the latlon2local function to convert the GPS sensor data from GPS coordinates to local east-north-up Cartesian coordinates.

refPoint = [map.midLat map.midLon,0];

[gps.x,gps.y,gps.elevation] = latlon2local(gps.lat,gps.lon,gps.elevation,refPoint);

filteredData = smoothdata([gps.x,gps.y gps.elevation],'sgolay');

gps.x = filteredData(:,1);

gps.y = filteredData(:,2);

gps.elevation = filteredData(:,3);Specify a bounding box that define the range for the map coordinates.

map.localExtend = 70; map.xmin = min(gps.x) - map.localExtend; map.xmax = max(gps.x) + map.localExtend; map.ymin = min(gps.y) - map.localExtend; map.ymax = max(gps.y) + map.localExtend;

Specify the names of the roads in the exported road network to be added to the scenario. You can find the names of the roads in the roadNames output of the helperGetRoadHistoryAttributes function.

map.keepRoads = "Banfield Freeway";Check if the desired roads are within the bounding box and, if they are, add them to the driving scenario.

for i = 1:size(roadNames,1) flag = 0; for j = 1: size(map.keepRoads,1) if contains(roadNames{i,1},map.keepRoads(j,1),IgnoreCase=true) flag = 1; end end if flag k = 0; for l = 1:size(roadCenters{i,1},1) k = k+1; % Remove road centers that lie outside the bounding box. if roadCenters{i,1}(k,1) < map.xmin || ... roadCenters{i,1}(k,1) > map.xmax || ... roadCenters{i,1}(k,2) < map.ymin || ... roadCenters{i,1}(k,2) > map.ymax roadCenters{i,1}(k,:) = []; k = k-1; end end if k > 1 % Add roads by using the road centers. Set the road width to 12. roadwidth = 12; road(scenario,roadCenters{i,1},roadwidth,Name=roadNames{i,1}); end end end

Compute Ego Data Using GPS Sensor Data

The GPS sensor data corresponds to the ego vehicle, so you can use the GPS measurements to compute the ego vehicle waypoints and speed.

The latitude and longitude values specify the waypoints for the ego vehicle in GPS coordinates. Compute the GPS time relative to the start time of the simulation and find the ego speed value at each waypoint.

gps.relativeTime = double(gps.timestamp - sim.startTime)/10^6;

Add the ego vehicle to the imported road network and compute its trajectory.

egoVehicleGPS = vehicle(importedScenario,ClassID=1,Position=[gps.x(1),gps.y(1),0]); trajectory(egoVehicleGPS,[gps.x,gps.y,zeros(count.gps,1)],TimeofArrival=gps.relativeTime);

Extract information about the position, velocity, and yaw of the ego vehicle from the imported scenario by using the actorPoses function.

positionIndex = [1 3 6]; velocityIndex = [2 4 7]; i = 1; while advance(importedScenario) position(1,:) = actorPoses(importedScenario).Position; simEgo.data(i,positionIndex) = position; velocity(1,:) = actorPoses(importedScenario).Velocity; simEgo.data(i,velocityIndex) = velocity; simEgo.data(i,5) = i; yaw = actorPoses(importedScenario).Yaw; simEgo.data(i,8) = yaw; simEgo.data(i,9) = importedScenario.SimulationTime; i = i + 1; end

The computed ego data values include the time, waypoints, velocity, and yaw of the ego vehicle. Store the ego data to a table and inspect the values.

table(simEgo.data(:,9),simEgo.data(:,positionIndex), ... simEgo.data(:,velocityIndex),simEgo.data(:,8), ... VariableNames=["Time","Waypoints","Velocity","Yaw"])

ans=997×4 table

Time Waypoints Velocity Yaw

____ _____________________________ ____________________________ _______

0.01 -106.81 64.526 0 23.955 -8.6128 0 -19.776

0.02 -106.81 64.526 0 23.965 -8.6166 0 -19.776

0.03 -106.77 64.513 0 23.976 -8.6205 0 -19.776

0.04 -106.53 64.427 0 23.986 -8.6259 0 -19.78

0.05 -106.29 64.34 0 23.995 -8.6337 0 -19.789

0.06 -106.05 64.254 0 24.003 -8.6439 0 -19.805

0.07 -105.81 64.167 0 24.011 -8.6566 0 -19.826

0.08 -105.57 64.081 0 24.021 -8.6728 0 -19.852

0.09 -105.33 63.994 0 24.04 -8.693 0 -19.88

0.1 -105.09 63.907 0 24.047 -8.7078 0 -19.907

0.11 -104.85 63.82 0 24.041 -8.7174 0 -19.931

0.12 -104.61 63.733 0 24.022 -8.7215 0 -19.954

0.13 -104.37 63.645 0 23.998 -8.7231 0 -19.976

0.14 -104.13 63.558 0 23.996 -8.7324 0 -19.997

0.15 -103.89 63.471 0 23.981 -8.7375 0 -20.019

0.16 -103.65 63.383 0 23.955 -8.7384 0 -20.041

⋮

Add Ego and Non-Ego Actors to Scenario

To add ego and non-ego actors to the scenario:

Read data recorded by lidar sensor.

Estimate the position, velocity, speed, and yaw for the ego vehicle by considering both GPS and lidar timestamps. Compute the ego trajectory.

Find the number of non-ego actors in the scenario. Compute their waypoints, velocities, speeds, and yaws by using the recorded lidar sensor. Compute the trajectories of the non-ego actors.

Convert the waypoints of the non-ego actors from the ego frame to the scenario frame.

Add the ego and non-ego actors to the driving scenario.

Read Lidar Sensor Data

Download the .mat file containing the recorded lidar sensor data and save it to a specified folder in the temporary directory. Load the lidar sensor data from the .mat file into the MATLAB workspace.

if ~exist("newData","var") url = "https://ssd.mathworks.com/supportfiles/driving/data/highwayLaneChangeDatase/lidar2.mat"; filePath = fullfile(sensorData,'lidar.mat'); if ~isfile(filePath) websave(filePath,url); end newData = load(filePath); end

Compute the number of data points within the specified start and end timestamps. Also, find start and end indices of the timestamps by using the helperTimestampIndex function.

[count.lidar,sim.lidar.startstep,sim.lidar.endstep] = helperTimestampIndex([newData.lidar.timeStamp].',sim.startTime,sim.endTime);

Extract the timestamp and point cloud data that lies between the start and end indices. Store the extracted parameters in a table.

newData.lidar = newData.lidar(sim.lidar.startstep : sim.lidar.endstep);

lidar = table([newData.lidar.timeStamp].',{newData.lidar.ptCloud}.',VariableNames=["timestamp","ptCloud"])lidar=100×2 table

timestamp ptCloud

________________ ________________

1461634425028360 {1×1 pointCloud}

1461634425128114 {1×1 pointCloud}

1461634425228122 {1×1 pointCloud}

1461634425327849 {1×1 pointCloud}

1461634425427574 {1×1 pointCloud}

1461634425528347 {1×1 pointCloud}

1461634425627513 {1×1 pointCloud}

1461634425728613 {1×1 pointCloud}

1461634425828486 {1×1 pointCloud}

1461634425928594 {1×1 pointCloud}

1461634426028230 {1×1 pointCloud}

1461634426128400 {1×1 pointCloud}

1461634426228515 {1×1 pointCloud}

1461634426327968 {1×1 pointCloud}

1461634426427685 {1×1 pointCloud}

1461634426527961 {1×1 pointCloud}

⋮

Estimate Ego Data With Regard to GPS and Lidar Timestamps

Find the lidar timestamps relative to the start time of the simulation. Units are in seconds.

lidar.relativeTime = double(lidar.timestamp - sim.startTime)/10^6;

Find the ego position, velocity, speed, and yaw by using the ego data from the GPS measurements as reference.

temp = zeros(1,9); i = 1; for j = 2 : size(simEgo.data,1) t = simEgo.data(j,9); if i <= count.lidar && lidar.relativeTime(i) <= t tratio = (t - lidar.relativeTime(i)) / (t - simEgo.data(j-1,9)); for k = [1:4,8] temp(1,k) = simEgo.data(j,k) - ... ((simEgo.data(j,k) - simEgo.data(j-1,k))*tratio); end ego.position(i,:) = temp(1,positionIndex); ego.velocity(i,:) = temp(1,velocityIndex); ego.speed(i,:) = sqrt(temp(1,2)^2 + temp(1,4)^2); ego.yaw(i,:) = temp(1,8); i = i + 1; elseif i == count.lidar && size(simEgo.data,1) == j ego.position(i,:) = simEgo.data(j,positionIndex); ego.velocity(i,:) = simEgo.data(j,velocityIndex); ego.speed(i,:) = sqrt(simEgo.data(j,2)^2 + simEgo.data(j,4)^2); ego.yaw(i,:) = simEgo.data(j,8); end end ego.position = smoothdata(ego.position,'sgolay'); ego.yaw = smoothdata(ego.yaw);

Add Ego Vehicle to Scenario

Add the ego vehicle to the driving scenario and compute its trajectory.

egoVehicle = vehicle(scenario,ClassID=1,Position=ego.position(1,:),Yaw=ego.yaw(1), ...

Mesh=driving.scenario.carMesh);

trajectory(egoVehicle,ego.position,TimeofArrival=lidar.relativeTime,Yaw=ego.yaw);Estimate Non-Ego Data

Create a track list from the lidar point cloud data by using the helperPointCloudToTracks function. The track list contains information about the objects detected in the point cloud data.

if ~exist("trackList","var") trackList.object = helperPointCloudToTracks(lidar.ptCloud); trackList.timestamp = lidar.timestamp; trackList.relativeTime = lidar.relativeTime; for i = 1:count.lidar trackList.nObj(i,1) = size(trackList.object{i,1},1); end end

Convert the track list data to non-ego data by using the helperComputeNonEgoData function. The function computes the dimensions, entry times, exit times, yaws, and speeds of the non-ego actors in the scenario. The function also converts the positions of the non-ego actors computed in the ego frame to scenario or map frame, and then smooths the position values.

[count.nonEgo,nonEgo] = helperComputeNonEgoData(trackList,count,ego)

count = struct with fields:

gps: 200

lidar: 100

nonEgo: 6

nonEgo=6×18 table

trackCount id length width height age entryIndex entryTime exitIndex exitTime classID mesh posInEgoFrame time yaw posInMapFrame speed smoothPos

__________ ____ ______ ______ ______ ___ __________ _________ _________ ________ _______ ______________________ _____________ _____________ _____________ _____________ _____________ _____________

94 37 4.6931 1.7953 1.451 100 7 0.7 100 10 1 1×1 extendedObjectMesh {94×3 double} {94×1 double} {94×1 double} {94×3 double} {94×1 double} {94×3 double}

45 38 4.6931 1.7932 1.4241 51 7 0.7 51 5.1 1 1×1 extendedObjectMesh {45×3 double} {45×1 double} {45×1 double} {45×3 double} {45×1 double} {45×3 double}

94 49 4.6942 1.7974 1.4007 100 7 0.7 100 10 1 1×1 extendedObjectMesh {94×3 double} {94×1 double} {94×1 double} {94×3 double} {94×1 double} {94×3 double}

28 99 4.6924 1.7942 1.399 34 8 0.8 35 3.5 1 1×1 extendedObjectMesh {28×3 double} {28×1 double} {28×1 double} {28×3 double} {28×1 double} {28×3 double}

44 940 4.6938 1.7981 1.4171 50 54 5.4 97 9.7 1 1×1 extendedObjectMesh {44×3 double} {44×1 double} {44×1 double} {44×3 double} {44×1 double} {44×3 double}

42 1021 4.6928 1.7965 1.4271 48 59 5.9 100 10 1 1×1 extendedObjectMesh {42×3 double} {42×1 double} {42×1 double} {42×3 double} {42×1 double} {42×3 double}

Add Non-Ego Actors to Scenario

Add the non-ego actors to the driving scenario and compute their trajectories.

for i= 1:count.nonEgo if nonEgo.classID(i) == 1 nonEgoVehicle(i) = vehicle(scenario, ... ClassID=nonEgo.classID(i),Name=nonEgo.id(i,:), ... Position=nonEgo.smoothPos{i,1}(1,:), ... Length=nonEgo.length(i,1),Width=nonEgo.width(i,1), ... Height=nonEgo.height(i,1),Mesh=nonEgo.mesh(i,1), ... EntryTime=nonEgo.entryTime(i,1), ... ExitTime=nonEgo.exitTime(i,1)); else nonEgoVehicle(i) = actor(scenario, ... ClassID=nonEgo.classID(i),Name=nonEgo.id(i,:), ... Position=nonEgo.smoothPos{i,1}(1,:), ... Length=nonEgo.length(i,1),Width=nonEgo.width(i,1), ... Height=nonEgo.height(i,1),Mesh=nonEgo.mesh(i,1), ... EntryTime=nonEgo.entryTime(i,1), ... ExitTime=nonEgo.exitTime(i,1)); end trajectory(nonEgoVehicle(i),nonEgo.smoothPos{i,1},TimeOfArrival=nonEgo.time{i,1}); end

Simulate and Visualize Generated Scenario

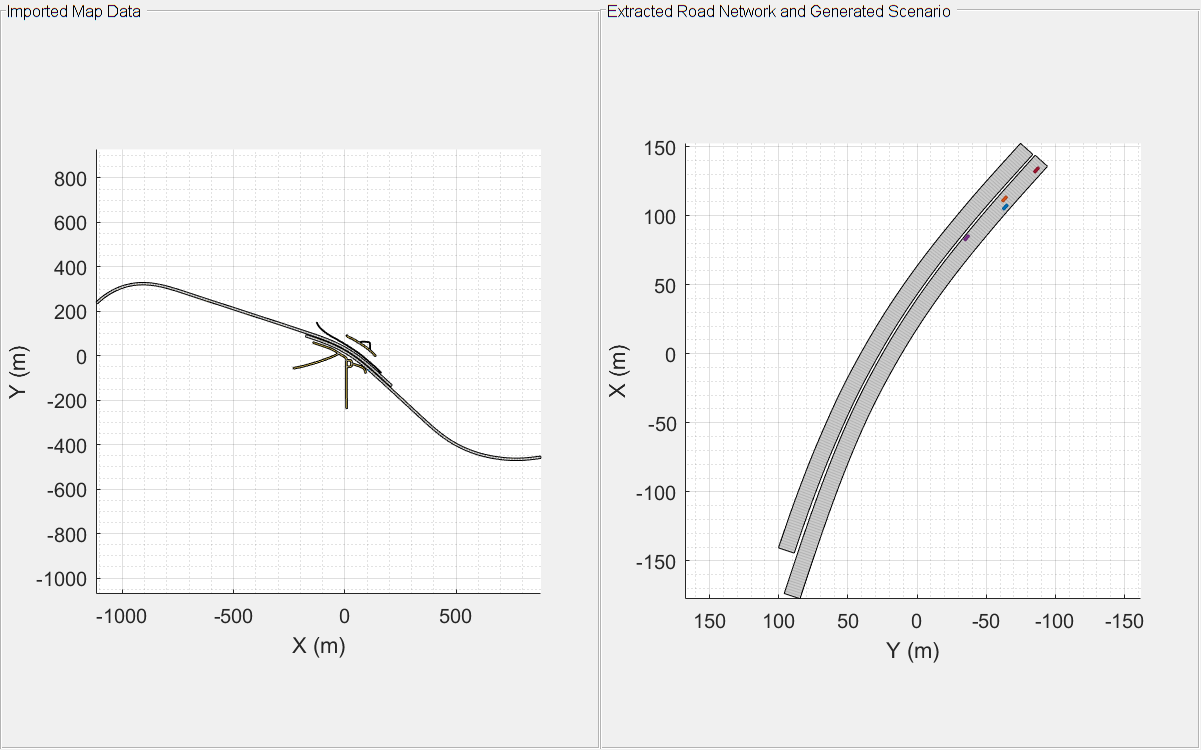

Plot the map data and the scenario generated using GPS and lidar sensor data.

scfig = figure(Position=[0,0,800,500]); hfig = uipanel(scfig,Title="Imported Map Data",Position=[0 0 0.5 1]); plaxis = axes(hfig); plot(importedScenario,Parent=plaxis) hfig1 = uipanel(scfig,Title="Extracted Road Network and Generated Scenario",Position=[0.5 0 0.5 1]); plaxis1 = axes(hfig1); plot(scenario,Parent=plaxis1); xlim([-100,100]) while advance(scenario) pause(sim.sampleTime); end

You can also visually inspect the accuracy of the generated scenario by plotting it alongside the camera sensor and lidar sensor data.

Read the data recorded by camera sensor.

if ~exist("camera","var") url = "https://ssd.mathworks.com/supportfiles/driving/data/highwayLaneChangeDatase/camera2.mat"; filePath = fullfile(sensorData,"camera.mat"); if ~isfile(filePath) websave(filePath,url); end img = load(filePath,"imageData"); [count.camera,sim.camera.startstep,sim.camera.endstep] = ... helperTimestampIndex([img.imageData.timeStamp].', ... sim.startTime,sim.endTime); img.imageData = img.imageData(sim.camera.startstep:sim.camera.endstep); camera = table([img.imageData.timeStamp].',{img.imageData.mov}.', ... VariableNames=["timestamp","img"]); for i = 1 : count.camera camera.img{i,1} = camera.img{i,1}.cdata; end camera.relativeTime = double(camera.timestamp - sim.startTime)/10^6; end

Display the top-view and chase-view plots of the generated scenario.

restart(scenario) close all fig = figure; set(fig,Position=[40 40 1000 800]); % Ego Top View hPanel = uipanel(fig, ... Title="Top-View of Generated Scenario",Position=[0 0.5 0.5 0.5]); hPlot = axes(hPanel); chasePlot(egoVehicle,Parent=hPlot, ... ViewPitch=90,ViewHeight=120,ViewLocation=[0, 0]); % Ego ChasePlot hPanel2 = uipanel(fig, ... Title="Chase-View of Generated Scenario",Position=[0.5 0.5 0.5 0.5]); hPlot2 = axes(hPanel2); chasePlot(egoVehicle,Parent=hPlot2,Meshes='on');

Specify an axes for displaying the camera data.

% Image from camera hPanel3 = uipanel(fig, ... Title="Recorded Camera Data",Position=[0 0 0.5 0.5]); hPlot3 = axes(hPanel3); % Initialize last used indices of the sensor last.lidarIndex = 0; last.cameraIndex = 0;

Plot the lidar point cloud data.

hPanel4 = uipanel(fig, ... Title ="Recorded Lidar Data",Position=[0.5 0 0.5 0.5]); hPlot4 = axes(hPanel4); % Initialize Display xlimits = [-60 60]; ylimits = [-30 30]; zlimits = [-5 20]; player = pcplayer(xlimits,ylimits,zlimits,Parent=hPlot4);

Call the advance function in a loop to advance the simulation one time step at a time.

while advance(scenario) if last.cameraIndex + 1 <= count.camera && ... scenario.SimulationTime >= camera.relativeTime(last.cameraIndex+1) condition = true; while condition last.cameraIndex = last.cameraIndex + 1; condition = scenario.SimulationTime < ... camera.relativeTime(last.cameraIndex); end image(camera.img{last.cameraIndex,1},Parent=hPlot3); end % if last.lidarIndex + 1 <= count.lidar && ... scenario.SimulationTime >= lidar.relativeTime(last.lidarIndex + 1) condition = true; while condition last.lidarIndex = last.lidarIndex + 1; condition = scenario.SimulationTime < ... lidar.relativeTime(last.lidarIndex); end % Plot point cloud with bounding box and label ptCloud = lidar.ptCloud{last.lidarIndex,1}; confirmedTracks = trackList.object{last.lidarIndex,1}; view(player,ptCloud); % The helperParseTracks function returns position, dimension, and orientation of the 3-D bounding boxes. posIndex = [1 3 6]; velocityIndex = [2 4 7]; yawIndex = 8; dimIndex = [9 10 11]; if ~isempty(confirmedTracks) [pos,dims,orients,labels] = helperParseTracks(confirmedTracks,posIndex,dimIndex,yawIndex); yaw = zeros(size(pos,1),3); yaw(:,3) = orients'; bboxes = [pos,dims,yaw]; showShape(cuboid=bboxes,Label=labels',Parent=hPlot4); end end pause(sim.sampleTime) end

References

[1] Park, Seo-Wook, Kunal Patil, Will Wilson, Mark Corless, Gabriel Choi, and Paul Adam. “Creating Driving Scenarios from Recorded Vehicle Data for Validating Lane Centering System in Highway Traffic,” 2020-01–0718, 2020. https://doi.org/10.4271/2020-01-0718.

See Also

road | roadNetwork | vehicle | actor | trajectory | drivingScenario | advance | restart | plot