AI-Native Fully Convolutional Receiver

This example shows how to train and use an AI-native, fully convolutional receiver known as DeepRx [1]. Using DeepRx, you replace channel estimation, equalization, and symbol demodulation operations with a deep convolutional neural network (DCNN) at the receiver end of a 5G New Radio (NR) link. DeepRx is particularly beneficial in high Doppler shift scenarios and sparse pilot configurations. In this example, you create a physical uplink shared channel (PUSCH) end-to-end link that consists of a transmitter, a channel, and a receiver to generate training, validation, and test data. You then assess the performance of DeepRx and compare it with a conventional receiver.

Introduction

AI-native air interface (AI-AI) is a promising technique for improving system performance and reducing energy consumption, essential steps in developing networks beyond 5G. AI-AI is a departure from the traditional approach of designing and productizing communications systems, as it involves the integration of AI and machine learning (AI/ML) as a core component of the physical layer (PHY) processing.

The 3rd Generation Partnership Project (3GPP) has initiated comprehensive study and work items focused on exploring the applications of AI/ML, with the goal of covering long-term projects such as technologies beyond 5G [2, 3, 4]. In Release 18, researchers, engineers, and companies explored three promising pilot areas – AI/ML-based channel state information (CSI) compression, beam management, and positioning. For more information, see the CSI Feedback with Autoencoders, Neural Network for Beam Selection, and AI for Positioning Accuracy Enhancement examples. In Release 19, the team continues to study AI/ML applications for beam management and positioning as work items (WI) and provides specification support [5].

This example demonstrates an AI-AI implementation that replaces multiple 5G receiver processing blocks with AI/ML counterparts to enhance coded bit error ratio (BER) and throughput performance. For a testbench that replaces a 5G receiver with an AI-native receiver using PyTorch™, see the Verify Performance of 6G AI-Native Receiver Using MATLAB and PyTorch Coexecution example.

System Description

This example uses a modified version of the NR PUSCH Throughput example to generate training, validation, and test data for online learning. The online learning approach means the model performs training and validation using a continuous stream of data. Storing a large portion of the dataset in memory, also known as batch learning, might not be feasible due to memory and storage limitations. Instead, the example creates multiple batches of data asynchronously on multiple CPUs and passes them to a GPU for training. This approach ensures that when a worker finishes generating the training data, it triggers a training session on the GPU while other parallel data generation sessions continue. The bottleneck is the training on the GPU, and data generation does not significantly contribute to the total execution time. Moreover, if parallel CPU acceleration is enabled, the model keeps only a few batches of data in memory for each training, validation, and test iteration. The Train DeepRx Network section provides further information on parallelism and data generation.
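The asynchronous producer and consumer pattern described above can be sketched as follows. This is a minimal illustration, not the example's implementation: generateBatch is a hypothetical stand-in that returns random data, and the sketch uses the thread-based backgroundPool from base MATLAB rather than a process-based parallel pool.

```matlab
% Hypothetical stand-in for the data generator: one random feature batch
% of size 312-by-14-by-10-by-batchSize (not the example's real generator).
generateBatch = @(batchSize) rand(312,14,10,batchSize,"single");

numBatches = 4;
batchSize = 8;
pool = backgroundPool;   % thread-based pool available in base MATLAB

% Submit all data-generation jobs without blocking the client.
for k = numBatches:-1:1
    futures(k) = parfeval(pool,generateBatch,1,batchSize);
end

% Consume batches in completion order, not submission order.
numConsumed = 0;
for k = 1:numBatches
    [~,batch] = fetchNext(futures);   % blocks until any batch is ready
    % A training step on "batch" would run here (on a GPU in the example).
    numConsumed = numConsumed + 1;
end
```

Because fetchNext returns whichever job finishes first, the consumer never waits on a specific worker, which is the property that keeps the training device busy while generation continues.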

To create a data sample at each signal-to-noise ratio (SNR) level, the process simulates PUSCH data transmission for each instance through the following steps:

Generate an OFDM-modulated waveform that contains PUSCH and PUSCH demodulation reference signal (DM-RS).

Simulate the transmission through either a clustered delay line (CDL) or tapped delay line (TDL) fading channel, applying additive white Gaussian noise (AWGN) based on the SNR.

Synchronize and OFDM-demodulate the received waveform.

Perform channel estimation. If you selected perfect estimation, reconstruct the channel impulse response (CIR) and OFDM-demodulate it for channel estimation. For practical estimation, use the PUSCH DM-RS.

Extract the PUSCH resource elements and channel estimates from the received resource grid and equalize the PUSCH resource elements with the minimum mean square error (MMSE) method.

Decode the PUSCH by demodulating and descrambling the equalized symbols, which yields an estimate of the received codewords.

Decode the uplink transport channel (UL-SCH) to process the decoded soft bits, decode the codeword, and return the block cyclic redundancy check (CRC) error. Use the block CRC error to assess the throughput and the coded BER.
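To make the equalization step concrete, the following sketch applies MMSE combining to a single resource element for one transmit layer and two receive antennas. It is a base MATLAB illustration under stated assumptions: the channel vector h, the noise samples n, and the noise variance nVar are hand-picked values, and h stands in for the channel estimate that the link would obtain from the DM-RS. It does not reproduce the toolbox equalizer.

```matlab
% Per-resource-element MMSE combining, one layer and two receive antennas.
nVar = 0.01;                          % assumed noise variance per antenna
x = (1 + 1i)/sqrt(2);                 % one transmitted QPSK symbol
h = [0.8 + 0.6i; -0.3 + 0.4i];        % illustrative channel, one tap per antenna
n = [0.01 - 0.02i; 0.02 + 0.01i];     % illustrative noise samples
y = h*x + n;                          % received samples on the two antennas

% MMSE weights for a single layer: w = h^H / (h^H*h + nVar)
w = h'/(h'*h + nVar);
xHat = w*y;                           % equalized symbol estimate

% Hard decisions on the two QPSK bits (sign of real and imaginary parts)
bitsHat = [real(xHat) < 0, imag(xHat) < 0];
```

With these values the estimate lands close to the transmitted symbol, so both hard decisions are 0, matching the first-quadrant symbol that was sent under this sketch's bit mapping.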

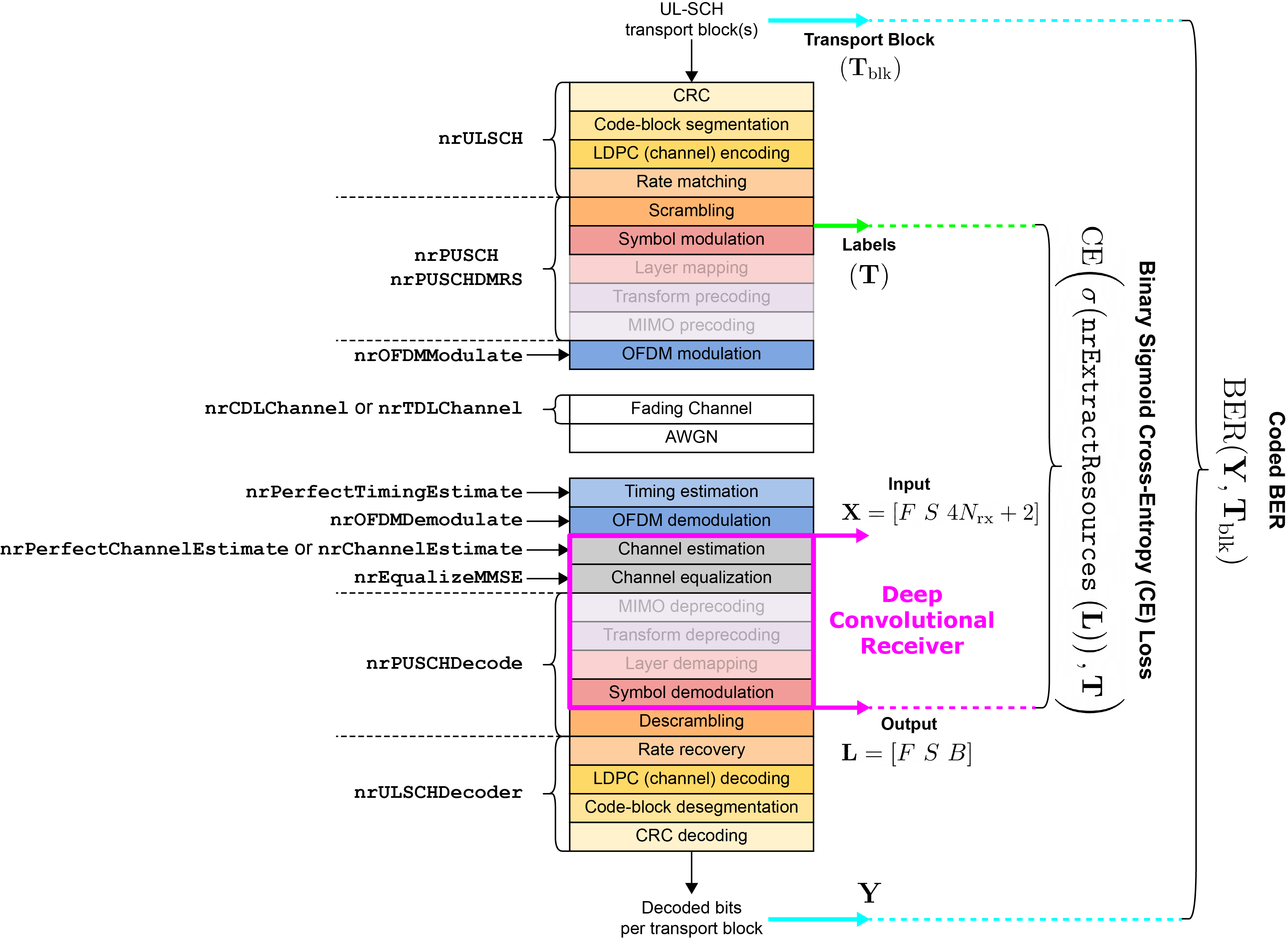

The diagram shows each step of the PUSCH data transmission and the corresponding 5G Toolbox™ functions for the operations. The faded blocks represent operations that this example does not use because it implements a single-input-multiple-output (SIMO) configuration with one transmit antenna and two receive antennas. DeepRx replaces the channel estimation, channel equalization, and symbol demodulation blocks. In the diagram, F represents the number of subcarriers, S denotes the number of symbols in time, and Nrx is the number of receive antennas. During DeepRx training, the loss function is binary sigmoid cross-entropy (CE), which compares the log-likelihood ratios (LLRs) of the output bits (L) with the ground truth labels (codedTrBlock). The final step evaluates the trained DeepRx performance using the transport blocks (trBlk) and the number of block errors to calculate coded BER and throughput. Note that hybrid automatic repeat request (HARQ) is disabled in this example, as it only affects the processes after the calculation of LLRs.

Configure System Parameters

You can set the simulation length by specifying the number of 10 ms frames (simParameters.NFrames) and training samples (simParameters.NTrainSamples) in training. The SNR parameter represents the SNR per resource element (RE) and per antenna element. For an explanation of the SNR definition used in this example, see the SNR Definition Used in Link Simulations example.

Specify the carrier, UL-SCH, and PUSCH configurations along with the propagation channel model parameters. Training the DeepRx network may take several hours due to the large number of training samples that cover a wide range of scenario parameters, enhancing the model's generalization to the environment. By default, training is turned off, and the example uses a pretrained network (DeepRx_2M.mat). This network is trained using the default settings of the example with simParameters.NTrainSamples set to 2 million. To enable training, set simParameters.TrainNow to true.

Generate the simulation data for both training and testing simultaneously on multiple workers across multiple CPUs. To enable CPU-based hardware acceleration, set simParameters.UseParallel to true, which requires Parallel Computing Toolbox™. If a supported GPU device is available, the DeepRx network training runs on the GPU (requires Parallel Computing Toolbox™).

% Configure UL PUSCH simulation parameters for training, validation, and testing
simParameters.TrainNow = false;        % If true, train the network; otherwise, use a pretrained network
simParameters.UseParallel = false;     % If true, enable parallel processing; otherwise, execute serially
simParameters.LearnRate = 0.001;       % Initial learning rate for training
simParameters.NTrainSamples = 30e3;    % Number of training iterations
simParameters.NFrames = 1;             % Number of frames used in each training iteration

% Generic UL PUSCH simulation parameters. Note that the values of these parameters
% are fixed during training
simParameters.CarrierFrequency = 3.5e9;    % Carrier frequency in Hz
simParameters.NSizeGrid = 26;              % Bandwidth in number of resource blocks (26 RBs at 15 kHz SCS)
simParameters.SubcarrierSpacing = 15;      % Subcarrier spacing in kHz
simParameters.ModulationType = '16QAM';    % Modulation type: 'pi/2-BPSK', 'QPSK', '16QAM', '64QAM', '256QAM'
simParameters.CodeRate = 658/1024;         % Code rate used to calculate transport block size
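As a quick sanity check, the grid dimensions that appear in the simulation summary later in this section follow from the carrier settings. The sketch below is plain arithmetic and assumes a normal cyclic prefix (14 OFDM symbols per slot), which the text does not state explicitly.

```matlab
NSizeGrid = 26;            % resource blocks, as configured in this example
SubcarrierSpacing = 15;    % kHz, as configured in this example
NFrames = 1;               % 10 ms frames

F = 12*NSizeGrid;                          % 12 subcarriers per resource block
S = 14;                                    % symbols per slot (assumes normal cyclic prefix)
slotsPerFrame = 10*SubcarrierSpacing/15;   % 10 slots per 10 ms frame at 15 kHz
NSlots = NFrames*slotsPerFrame;            % slots simulated per training iteration

fprintf("F = %d, S = %d, slots = %d\n",F,S,NSlots);
```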

The example randomizes training parameters within specified ranges to help DeepRx generalize across channel and simulation conditions, such as SNR, delay spread, maximum Doppler shift, and DM-RS settings.

% Randomized UL PUSCH simulation parameters. Note that the values of these
% parameters are randomly selected within the given range during training. They
% are relevant only during training.
simParameters.SNRInLimits = [-4 32];                  % SNR limits for uniform distribution [min(SNR) max(SNR)] in dB
simParameters.DelaySpreadLimits = [10e-9 300e-9];     % RMS delay spread limits for uniform distribution [10 300] ns
simParameters.MaximumDopplerShiftLimits = [0 500];    % Maximum Doppler shift limits for uniform distribution [0 500] Hz
simParameters.DMRSAdditionalPositionLimits = [0 1];   % DMRS additional position limits (0 or 1)
simParameters.DMRSConfigurationTypeLimits = [1 2];    % DMRS configuration type limits (1 or 2)
simParameters.ChannelTrain = ["CDL-B","CDL-C","CDL-D","TDL-B","TDL-C","TDL-D"];   % Channel delay profiles used in training
simParameters.ChannelValidate = ["CDL-A","CDL-E","TDL-A","TDL-E"];                % Channel delay profiles used in validation

% Additional UL PUSCH simulation parameters
simParameters = hGetAdditionalSystemParameters(simParameters);

________________________________________
Simulation summary
________________________________________
- Input (X) size: [ 312 14 10 ]
- Output (L) size: [ 312 14 4 ]
- Number of subcarriers (F): 312
- Number of symbols (S): 14
- Number of receive antennas (Nrx): 2
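The randomization of training scenarios can be sketched in base MATLAB as follows. The limits are copied from the parameters above, but the sampling code itself is an assumption for illustration. The helper drawUniform is hypothetical, and the way the example's own data generation function draws these values is not shown in this text.

```matlab
rng("default");   % reproducible draws for the sketch

SNRInLimits = [-4 32];                   % dB
DelaySpreadLimits = [10e-9 300e-9];      % seconds
MaximumDopplerShiftLimits = [0 500];     % Hz
DMRSAdditionalPositionLimits = [0 1];
DMRSConfigurationTypeLimits = [1 2];
ChannelTrain = ["CDL-B","CDL-C","CDL-D","TDL-B","TDL-C","TDL-D"];

% Hypothetical helper: uniform draw between lim(1) and lim(2)
drawUniform = @(lim) lim(1) + diff(lim)*rand;

snrdB = drawUniform(SNRInLimits);
delaySpread = drawUniform(DelaySpreadLimits);
maxDoppler = drawUniform(MaximumDopplerShiftLimits);
dmrsAddPos = randi(DMRSAdditionalPositionLimits);      % integer in [0,1]
dmrsConfigType = randi(DMRSConfigurationTypeLimits);   % integer in [1,2]
channel = ChannelTrain(randi(numel(ChannelTrain)));    % random delay profile
```

Drawing a fresh scenario for every training sample is what exposes the network to many combinations of SNR, delay spread, Doppler shift, and pilot layout.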

Load or Create DeepRx Network

DeepRx is a deep convolutional neural network (DCNN) based on a Residual Network (ResNet) architecture. This section explains how to develop and train a ResNet-based convolutional receiver framework for detecting OFDM waveforms [1, 6]. The example mode depends on the value of simParameters.TrainNow:

true: The example is in training mode. In this case, you construct and train the network from scratch.

false: The example is in simulation mode. In this case, you use a pretrained network to decode the transmitted bits.

DCNNs excel at identifying and learning crucial features from images. This example uses a DCNN to detect transmitted bits from the received resource grid, using 2-D convolutions that are well suited for processing the time-frequency resource grid. However, merely adding additional layers to extract further information in very deep networks can cause a plateau in accuracy and an increase in training errors, an issue known as degradation. DeepRx mitigates this problem by implementing residual blocks that introduce shortcuts or skip connections, facilitating the more efficient flow of gradients to earlier layers [7, 8]. Moreover, to lower computational requirements, each residual block incorporates 2-D depthwise separable convolutions [9].
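The computational saving from depthwise separable convolutions can be seen by counting learnable parameters. The filter size and channel counts below are illustrative values chosen for this sketch, not the layer sizes used by DeepRx.

```matlab
k = 3;        % filter height and width (illustrative)
Cin = 64;     % input channels (illustrative)
Cout = 64;    % output channels (illustrative)

% Standard 2-D convolution: every output channel sees every input channel.
standardParams = k*k*Cin*Cout + Cout;        % weights + biases

% Depthwise separable convolution: one k-by-k filter per input channel,
% followed by a 1-by-1 (pointwise) convolution that mixes channels.
depthwiseParams = k*k*Cin + Cin;             % weights + biases
pointwiseParams = Cin*Cout + Cout;           % weights + biases
separableParams = depthwiseParams + pointwiseParams;

reduction = standardParams/separableParams;
fprintf("Standard: %d, separable: %d, reduction: %.2fx\n", ...
    standardParams,separableParams,reduction);
```

For these values the separable form needs 4800 learnables instead of 36928, roughly a 7.7 times reduction.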

In simulation mode, the example loads the pretrained DeepRx_2M.mat network and evaluates the detection performance using the generated test data.

In training mode, the example sets up and trains the DeepRx network architecture.

The training inputs consist of three components:

Frequency domain received resource grid (rxGrid): A complex-valued array with dimensions F-by-S-by-Nrx

Transmit pilot signals grid (dmrsGrid): A complex-valued array with dimensions F-by-S-by-1 in the single-layer configuration of this example

Channel estimations grid (rawChanEstGrid): A complex-valued array with dimensions F-by-S-by-Nrx in the single-layer configuration of this example

The example concatenates rxGrid, dmrsGrid, and rawChanEstGrid along the channel dimension (depth-wise concatenation), yielding a complex-valued F-by-S-by-5 array in the default configuration. Since standard DCNNs operate on real-valued images, the example converts this array into an F-by-S-by-10 real-valued array by stacking the real and imaginary parts back to back, which matches the input size [ 312 14 10 ] in the simulation summary. The labels contain the bits before (trBlk) and after (codedTrBlock) the LDPC encoding, rate matching, and scrambling operations.
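The concatenation and real-valued conversion can be sketched with random stand-in data. The per-input channel counts (2, 1, and 2) are inferred from the input size [ 312 14 10 ] reported in the simulation summary, and the ordering of real and imaginary planes (all real parts first, then all imaginary parts) is an assumption of this sketch.

```matlab
F = 312; S = 14; Nrx = 2;   % sizes from the simulation summary

% Random complex stand-ins for the three network inputs
rxGrid = complex(randn(F,S,Nrx),randn(F,S,Nrx));
dmrsGrid = complex(randn(F,S,1),randn(F,S,1));            % assumed single DM-RS plane
rawChanEstGrid = complex(randn(F,S,Nrx),randn(F,S,Nrx));

% Depth-wise concatenation along the third (channel) dimension
Xc = cat(3,rxGrid,dmrsGrid,rawChanEstGrid);   % F-by-S-by-5, complex

% Stack real and imaginary parts to get a real-valued input
X = cat(3,real(Xc),imag(Xc));                 % F-by-S-by-10, real

disp(size(X))
```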

if simParameters.TrainNow
    % Create a custom DeepRx architecture for training
    [net,netParam] = hCreateDeepRx(simParameters.InputSize,simParameters.NBits,InputNorm="none");
else
    % Load a pretrained DeepRx network. Check for a custom-trained network
    % first, then the default network.
    if exist("trained_DeepRx.mat","file")
        data = load("trained_DeepRx.mat");   % Load custom-trained network
    elseif exist("DeepRx_2M.mat","file")
        data = load("DeepRx_2M.mat");        % Load pretrained network
    else
        error("No pretrained network (trained_DeepRx.mat or DeepRx_2M.mat) found in the working folder.");
    end
    net = data.net;
    netParam = data.netParam;
end

% Display the summary of the network
displayNetworkSummary(net,netParam);

________________________________________
Neural Network Summary
________________________________________
- Number of ResNet blocks: 11
- Total number of layers: 46
- Total number of learnables: 1.2325 million

Train DeepRx Network

The DeepRx implementation frames the task on the receiver side as a self-supervised learning problem that does not require manual labeling. The model's input consists of received waveforms in the frequency domain, and the corresponding labels are the transmitted bits. In this section, you can generate the training data using the end-to-end PUSCH simulation function hGetFeaturesAndLabels. The prediction of the transmitted bits is framed as a binary classification problem. The output of the DeepRx network is an F-by-S-by-NBits array of soft bits, where NBits (simParameters.NBits) is the number of bits per symbol. The example uses binary cross-entropy (BCE) loss with sigmoid during training to compare the predicted LLRs (L) with the labels (codedTrBlock).
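The BCE loss with sigmoid can be illustrated on a handful of values. The sketch below is base MATLAB and assumes that a positive network output favors bit 1. The sign convention used by the example's training function is not shown in this text, and the LLR and bit values are made up for illustration.

```matlab
L = [2.0 -1.0 0.5 -3.0];   % illustrative predicted LLRs (logits)
b = [1 0 1 1];             % illustrative ground-truth bits

% Direct form: apply the sigmoid, then the cross-entropy
p = 1./(1 + exp(-L));
lossDirect = -mean(b.*log(p) + (1 - b).*log(1 - p));

% Equivalent, numerically stable form that avoids log(0) for large |L|
lossStable = mean(max(L,0) - L.*b + log(1 + exp(-abs(L))));

fprintf("BCE (direct) = %.6f, BCE (stable) = %.6f\n",lossDirect,lossStable);
```

The last entry shows why the loss is informative: a confident prediction of the wrong bit (L = -3 with label 1) dominates the average.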

To reduce the total training time, run each SNR point on an available worker. For more information, see the Accelerate Link-Level Simulations with Parallel Processing example. Train the DeepRx model on a GPU using data generated asynchronously on multiple CPUs. Using a GPU requires Parallel Computing Toolbox™ and a supported GPU device.

if simParameters.TrainNow
    % Train the DeepRx network using the specified simulation parameters
    net = hTrainDeepRx(simParameters,net);

    % Save the trained network and parameters to the current folder
    save("trained_DeepRx.mat","net","netParam");

    % Information message indicating successful saving of the trained
    % network
    disp("Your trained network is saved in the current folder as trained_DeepRx.mat...");
end

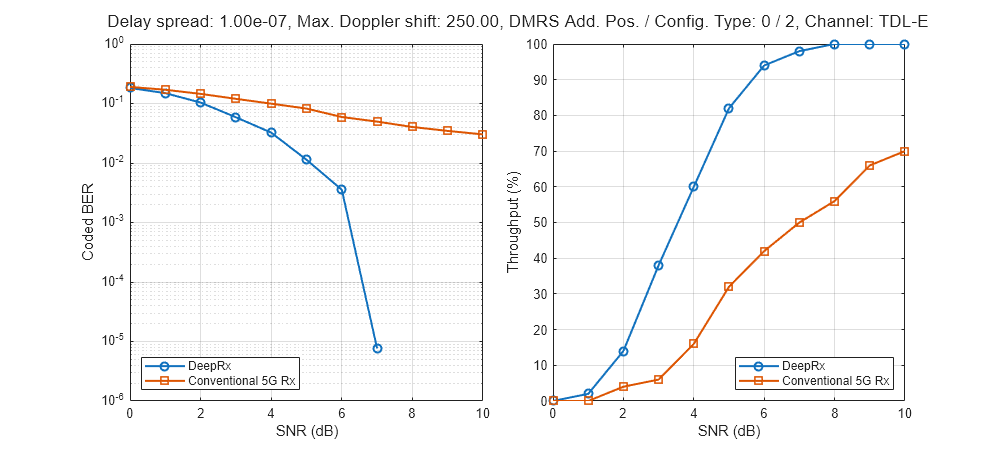

Compare the Performance of DeepRx and Conventional 5G Receivers

In this section, you calculate the coded BER and throughput for DeepRx and the conventional 5G receiver. The performance calculation includes generating the received resource grid, as outlined in the Train DeepRx Network section. You can set the modulation scheme during testing using the parameter simParameters.ModulationType up to the highest value used in training. For instance, 16-QAM is used while obtaining the trained network DeepRx_2M.mat, which means you can test the performance of DeepRx for QPSK or 16-QAM modulations. You can also set the logical variable simParameters.PerfectChannelEstimator to determine the channel estimation and timing synchronization approach. When set to true, it enables perfect channel estimation and synchronization for the conventional 5G receiver.

The trained DeepRx network predicts the soft decision bits (LLRs) using the frequency domain received resource grid, the DM-RS grid, and the estimated channel values that correspond to the DM-RS grid.

The end-to-end system simulation function hEvaluateLinkPerformance calculates the coded BER by comparing the transport block vector (trBlk) with the UL-SCH decoded bits vector.

The end-to-end system simulation function hEvaluateLinkPerformance also calculates the throughput by using the number of successful transmissions and the size of each transport block.
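The two metrics can be sketched on toy data as follows. The blocks, the injected errors, and the variable names are illustrative assumptions. In particular, the sketch marks a block as failed when any bit differs, whereas the link simulation takes the block error from the CRC check.

```matlab
% Three illustrative transport blocks of 8 bits each, one block per column
trBlks = [1 0 1 1 0 0 1 0;
          0 1 1 0 1 0 0 1;
          1 1 0 0 1 0 1 1].';
trBlkSize = size(trBlks,1);

% Stand-in for the UL-SCH decoder output: flip two bits in block 2
decBits = trBlks;
decBits([2 5],2) = 1 - decBits([2 5],2);

% Coded BER: fraction of transport block bits decoded incorrectly
codedBER = nnz(trBlks ~= decBits)/numel(trBlks);

% Throughput (%): bits in error-free blocks over all transmitted bits
blkErr = any(trBlks ~= decBits,1);   % stand-in for the CRC block error
percThroughput = 100*sum(~blkErr)*trBlkSize/(numel(blkErr)*trBlkSize);

fprintf("Coded BER = %.4f, throughput = %.2f%%\n",codedBER,percThroughput);
```

Two wrong bits out of 24 give a coded BER of about 0.0833, and one failed block out of three gives a throughput of about 66.67%.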

To reduce the total evaluation time, you can simulate each SNR point on an available worker. This example runs DeepRx inference on the CPU.

% End-to-end simulation parameters for testing the DeepRx and conventional
% 5G receivers
simParameters.ChannelModel = "TDL-E";           % Channel models for testing: "CDL-A", "CDL-E", "TDL-A", "TDL-E"
simParameters.DelaySpread = 100e-9;             % Delay spread in seconds, range: [10e-9, 300e-9]
simParameters.MaximumDopplerShift = 250;        % Maximum Doppler shift in Hz, range: [0, 500]
simParameters.SNRInVec = 0:10;                  % SNR range in dB for evaluation
simParameters.ModulationType = "QPSK";          % Modulation type: "QPSK" or "16QAM"
simParameters.DMRSAdditionalPosition = 0;       % DMRS additional position: 0 or 1
simParameters.DMRSConfigurationType = 2;        % DMRS configuration type: 1 or 2
simParameters.NFrames = 5;                      % Number of frames for the simulation
simParameters.PerfectChannelEstimator = false;  % Use perfect channel estimation if true

% Evaluate link performance using the specified simulation parameters and
% neural network
[resultsRX,resultsAI] = hEvaluateLinkPerformance(simParameters,net);

________________________________________
Simulating DeepRx and 5G receivers
________________________________________
Simulating DeepRx and conventional receivers for 11 SNR points...
- Test data generation : Serial execution
- Inference environment: CPU
( 9.09%) SNRIn: +0.00 dB | Channel: TDL-E | Delay Spread: 1.00e-07 | Max Doppler shift: 250.00 | DMRS Add. Pos. / Config. Type: 0 / 2
( 18.18%) SNRIn: +1.00 dB | Channel: TDL-E | Delay Spread: 1.00e-07 | Max Doppler shift: 250.00 | DMRS Add. Pos. / Config. Type: 0 / 2
( 27.27%) SNRIn: +2.00 dB | Channel: TDL-E | Delay Spread: 1.00e-07 | Max Doppler shift: 250.00 | DMRS Add. Pos. / Config. Type: 0 / 2
( 36.36%) SNRIn: +3.00 dB | Channel: TDL-E | Delay Spread: 1.00e-07 | Max Doppler shift: 250.00 | DMRS Add. Pos. / Config. Type: 0 / 2
( 45.45%) SNRIn: +4.00 dB | Channel: TDL-E | Delay Spread: 1.00e-07 | Max Doppler shift: 250.00 | DMRS Add. Pos. / Config. Type: 0 / 2
( 54.55%) SNRIn: +5.00 dB | Channel: TDL-E | Delay Spread: 1.00e-07 | Max Doppler shift: 250.00 | DMRS Add. Pos. / Config. Type: 0 / 2
( 63.64%) SNRIn: +6.00 dB | Channel: TDL-E | Delay Spread: 1.00e-07 | Max Doppler shift: 250.00 | DMRS Add. Pos. / Config. Type: 0 / 2
( 72.73%) SNRIn: +7.00 dB | Channel: TDL-E | Delay Spread: 1.00e-07 | Max Doppler shift: 250.00 | DMRS Add. Pos. / Config. Type: 0 / 2
( 81.82%) SNRIn: +8.00 dB | Channel: TDL-E | Delay Spread: 1.00e-07 | Max Doppler shift: 250.00 | DMRS Add. Pos. / Config. Type: 0 / 2
( 90.91%) SNRIn: +9.00 dB | Channel: TDL-E | Delay Spread: 1.00e-07 | Max Doppler shift: 250.00 | DMRS Add. Pos. / Config. Type: 0 / 2
(100.00%) SNRIn: +10.00 dB | Channel: TDL-E | Delay Spread: 1.00e-07 | Max Doppler shift: 250.00 | DMRS Add. Pos. / Config. Type: 0 / 2

Evaluate Detection Performance

Finally, evaluate the detection performance results by comparing DeepRx with the conventional receiver, which uses the nrPUSCHDecode function. If simParameters.TrainNow is set to true, the example saves the trained network in the current folder as trained_DeepRx.mat, then assesses and plots the performance of that network. Otherwise, it assesses and plots the performance of the pretrained network.

% Plot Coded BER vs. SNR and Throughput (%) vs. SNR

visualizePerformance(simParameters,resultsRX,resultsAI);

References

[1] M. Honkala, D. Korpi, and J. M. J. Huttunen, "DeepRx: Fully Convolutional Deep Learning Receiver," in IEEE Transactions on Wireless Communications, vol. 20, no. 6, pp. 3925–3940, June 2021.

[2] J. Hoydis, F. A. Aoudia, A. Valcarce, and H. Viswanathan, "Toward a 6G AI-Native Air Interface," in IEEE Communications Magazine, vol. 59, no. 5, pp. 76–81, May 2021.

[3] T. O’Shea and J. Hoydis, "An Introduction to Deep Learning for the Physical Layer," in IEEE Transactions on Cognitive Communications and Networking, vol. 3, no. 4, pp. 563–575, Dec. 2017.

[4] 3GPP TR 38.843, "Study on Artificial Intelligence (AI)/Machine Learning (ML) for NR Air Interface," 3rd Generation Partnership Project; Technical Specification Group Radio Access Network.

[5] 3GPP TSG RAN Meeting #103, Qualcomm, "Revised WID on Artificial Intelligence (AI)/Machine Learning (ML) for NR Air Interface," RP-240774, Maastricht, Netherlands, Mar. 18-21, 2024.

[6] F. Ait Aoudia and J. Hoydis, "End-to-End Learning for OFDM: From Neural Receivers to Pilotless Communication," in IEEE Transactions on Wireless Communications, vol. 21, no. 2, pp. 1049–1063, Feb. 2022.

[7] K. He, X. Zhang, S. Ren, and J. Sun, "Deep Residual Learning for Image Recognition," in 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 2016, pp. 770–778.

[8] K. He, X. Zhang, S. Ren, and J. Sun, "Identity Mappings in Deep Residual Networks," in European Conference on Computer Vision, Springer, 2016, pp. 630–645.

[9] F. Chollet, "Xception: Deep Learning with Depthwise Separable Convolutions," in 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 2017, pp. 1800–1807.

Local Functions

function visualizePerformance(simParameters,resultsRX,resultsAI)
    % Visualize the performance of the system by plotting coded BER and
    % throughput results against SNR for both MMSE-based conventional 5G
    % receiver and DeepRx methods.

    % Create a figure for the plots
    f = figure;
    f.Position = [0 0 1000 450];
    t = tiledlayout(1,2,'TileSpacing','compact');

    % Plot coded BER against SNR
    nexttile;
    semilogy([resultsAI.SNRIn],[resultsAI.CodedBER],'-o',"LineWidth",1.5);
    hold on
    semilogy([resultsRX.SNRIn],[resultsRX.CodedBER],'-s',"LineWidth",1.5,"MarkerSize",7);
    hold off
    legend("DeepRx","Conventional 5G Rx","Location","southwest");
    grid on;
    xlabel('SNR (dB)');
    ylabel('Coded BER');

    % Plot throughput against SNR
    nexttile;
    plot([resultsAI.SNRIn],[resultsAI.PercThroughput],'-o',"LineWidth",1.5);
    hold on
    plot([resultsRX.SNRIn],[resultsRX.PercThroughput],'-s',"LineWidth",1.5,"MarkerSize",7);
    hold off
    legend("DeepRx","Conventional 5G Rx","Location","southeast");
    grid on;
    xlabel('SNR (dB)');
    ylabel('Throughput (%)');

    % Add a title to the tiled layout summarizing the system configuration
    title(t,sprintf('Delay spread: %.2e, Max. Doppler shift: %.2f, DMRS Add. Pos. / Config. Type: %d / %d, Channel: %s',...
        simParameters.DelaySpread,simParameters.MaximumDopplerShift,...
        simParameters.DMRSAdditionalPosition, simParameters.DMRSConfigurationType,...
        simParameters.ChannelModel));
end

function displayNetworkSummary(net,details)
    % Display a summary of the deep neural network, including the number of
    % layers and learnable parameters. The network is initialized first if
    % needed.

    % Calculate the total number of layers in the network. The total number
    % of layers is calculated similar to LeNet-5.
    numLayers = details.conv + details.conv_sep + details.fc;

    % Print the network summary
    fprintf('\n%s\n',repmat('_',1,40));
    fprintf('\nNeural Network Summary\n');
    fprintf('%s\n',repmat('_',1,40));
    fprintf('\n');
    fprintf('- %-27s %i\n','Number of ResNet blocks:',details.resblock);
    fprintf('- %-27s %i\n','Total number of layers:',numLayers);

    % Initialize the network if not already done
    if ~net.Initialized
        net = initialize(net);
    end

    % Analyze the network to get the number of learnable parameters
    netInfo = analyzeNetwork(net,Plots="none");
    numLearnables = sum(netInfo.LayerInfo.NumLearnables) / 1e6; % millions
    fprintf('- %-27s %.4f million\n','Total number of learnables:',numLearnables);
end