What Is SLAM?

How it works, types of SLAM algorithms, and getting started

SLAM (simultaneous localization and mapping) is a method used for autonomous vehicles that lets you build a map and localize your vehicle in that map at the same time. SLAM algorithms allow the vehicle to map out unknown environments. Engineers use the map information to carry out tasks such as path planning and obstacle avoidance.

SLAM has been the subject of technical research for many years. But with vast improvements in computer processing speed and the availability of low-cost sensors such as cameras and laser range finders, SLAM algorithms are now used for practical applications in a growing number of fields.

To understand why SLAM is important, let's look at some of its benefits and application examples.

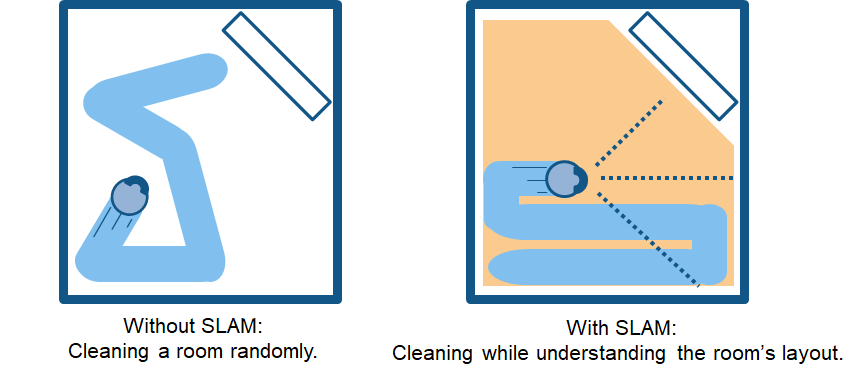

Consider a home robot vacuum. Without SLAM, it will just move randomly within a room and may not be able to clean the entire floor surface. In addition, this approach uses excessive power, so the battery will run out more quickly. On the other hand, robots with a SLAM algorithm can use information such as the number of wheel revolutions and data from cameras and other imaging sensors to determine the amount of movement needed. This is called localization. The robot can also simultaneously use the camera and other sensors to create a map of the obstacles in its surroundings and avoid cleaning the same area twice. This is called mapping.
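As a rough illustration of the localization step, wheel revolutions can be converted into a pose update by dead reckoning. The sketch below assumes a differential-drive robot. The function name `update_pose` and the default wheel radius and wheel base are illustrative values, not taken from any particular product.

```python
import math

def update_pose(x, y, theta, rev_left, rev_right,
                wheel_radius=0.035, wheel_base=0.23):
    """Dead-reckoning pose update for a differential-drive robot.

    rev_left / rev_right are wheel revolutions since the last update.
    wheel_radius and wheel_base (meters) are assumed example values.
    """
    # Distance rolled by each wheel.
    d_left = 2.0 * math.pi * wheel_radius * rev_left
    d_right = 2.0 * math.pi * wheel_radius * rev_right

    # Forward travel of the robot center and change in heading.
    d_center = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / wheel_base

    # Advance along the average heading over the step.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)

    # Wrap the heading into [-pi, pi).
    theta = (theta + d_theta + math.pi) % (2.0 * math.pi) - math.pi
    return x, y, theta
```

Each update inherits the error of the previous one, which is why odometry alone drifts and is usually combined with camera or lidar observations.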

Benefits of SLAM for Cleaning Robots

SLAM algorithms are useful in many other applications such as navigating a fleet of mobile robots to arrange shelves in a warehouse, parking a self-driving car in an empty spot, or delivering a package by navigating a drone in an unknown environment. MATLAB® and Simulink® provide SLAM algorithms, functions, and analysis tools to develop various applications. You can implement simultaneous localization and mapping along with other tasks such as sensor fusion, object tracking, path planning, and path following.

Broadly speaking, there are two types of technology components used to achieve SLAM. The first type is sensor signal processing, including the front-end processing, which is largely dependent on the sensors used. The second type is pose-graph optimization, including the back-end processing, which is sensor-agnostic.

To learn more about the front-end processing component, explore different methods of SLAM such as visual SLAM, lidar SLAM, and multi-sensor SLAM.

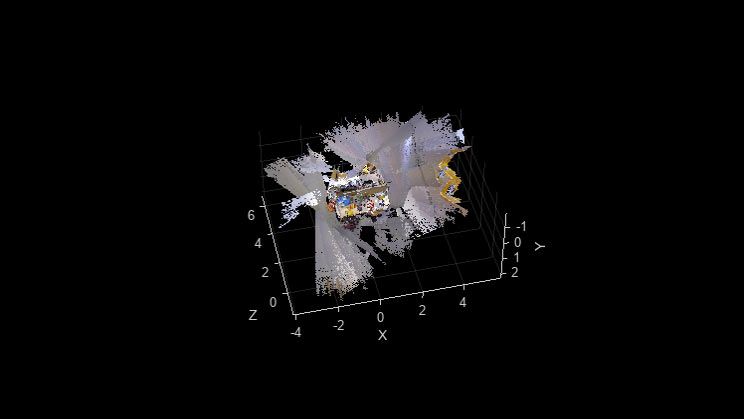

As the name suggests, visual SLAM (or vSLAM) uses images acquired from cameras and other image sensors. Visual SLAM can use simple cameras (wide angle, fish-eye, and spherical cameras), compound eye cameras (stereo and multi cameras), and RGB-D cameras (depth and ToF cameras).

Visual SLAM can be implemented at low cost with relatively inexpensive cameras. In addition, since cameras provide a large volume of information, they can be used to detect landmarks (previously measured positions). Landmark detection can also be combined with graph-based optimization, achieving flexibility in SLAM implementation.

Monocular SLAM is a type of visual SLAM that uses a single camera as the only sensor, which makes it challenging to recover depth. This can be addressed either by detecting AR markers, checkerboards, or other known objects in the image for localization, or by fusing the camera information with another sensor such as an inertial measurement unit (IMU), which measures physical quantities such as acceleration and angular velocity, from which velocity and orientation can be estimated. Technology related to vSLAM includes structure from motion (SfM), visual odometry, and bundle adjustment.
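To see why a known object helps with depth, consider the pinhole camera model: an object of real width W that appears w pixels wide, seen through a lens with focal length f (in pixels), lies at depth Z = f · W / w. The sketch below applies that relation. The function name and the numbers in the usage note are hypothetical.

```python
def depth_from_known_width(focal_length_px, real_width_m, pixel_width):
    """Estimate distance to an object of known size with the pinhole model.

    focal_length_px: camera focal length expressed in pixels
    real_width_m:    true width of the marker in meters
    pixel_width:     width of the marker as measured in the image, in pixels
    """
    if pixel_width <= 0:
        raise ValueError("pixel_width must be positive")
    return focal_length_px * real_width_m / pixel_width
```

For example, a 0.2 m wide marker that spans 80 pixels in an image taken with an 800-pixel focal length would be about 2 m away. Without such a reference, a single camera can only recover the scene up to an unknown scale.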

Visual SLAM algorithms can be broadly classified into two categories. Sparse methods match feature points of images and use algorithms such as PTAM and ORB-SLAM. Dense methods use the overall brightness of images and use algorithms such as DTAM, LSD-SLAM, DSO, and SVO.

Monocular vSLAM

Stereo vSLAM

RGB-D vSLAM

Light detection and ranging (lidar) is a method that primarily uses a laser sensor (or distance sensor).

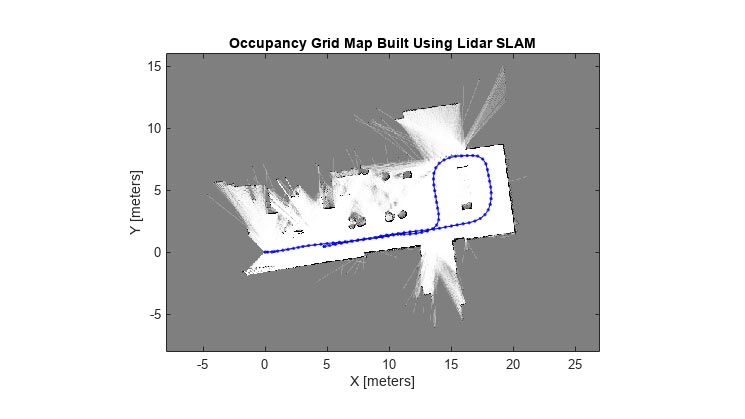

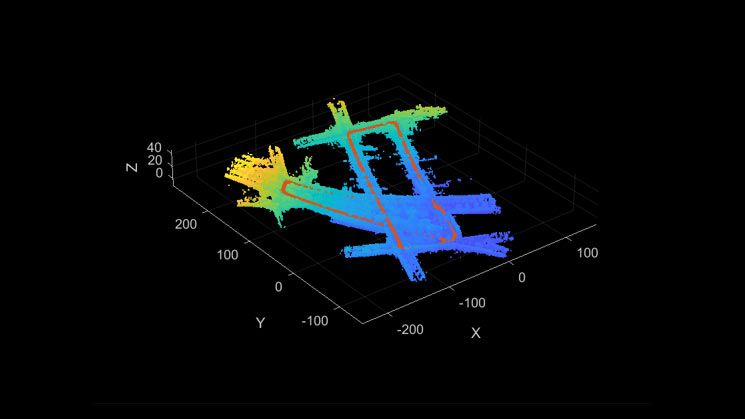

Compared to cameras, ToF, and other sensors, lasers are significantly more precise and are used for applications with high-speed moving vehicles such as self-driving cars and drones. The output values from laser sensors are generally 2D (x, y) or 3D (x, y, z) point cloud data. The laser sensor point cloud provides high-precision distance measurements and works effectively for map construction with SLAM algorithms. Movement is estimated by sequentially registering the point clouds. The calculated movement (traveled distance) is used for localizing the vehicle. To estimate the relative transformation between the point clouds, you can use registration algorithms such as iterative closest point (ICP) and normal distributions transform (NDT). Alternatively, you can use a feature-based approach such as Lidar Odometry and Mapping (LOAM) or Fast Global Registration (FGR), based on FPFH features. 2D or 3D point cloud maps can be represented as grid maps or voxel maps.
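The registration step can be sketched with a minimal 2D point-to-point ICP. This is a teaching sketch under simplifying assumptions (brute-force nearest neighbors, no outlier rejection, a fixed iteration count), not the implementation used by any toolbox. All function names are illustrative.

```python
import math

def best_rigid_transform(src, dst):
    """Least-squares rotation angle and translation mapping paired 2D points src onto dst."""
    n = len(src)
    src_cx = sum(p[0] for p in src) / n
    src_cy = sum(p[1] for p in src) / n
    dst_cx = sum(q[0] for q in dst) / n
    dst_cy = sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay = px - src_cx, py - src_cy
        bx, by = qx - dst_cx, qy - dst_cy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)  # closed-form optimal rotation in 2D
    c, s = math.cos(theta), math.sin(theta)
    tx = dst_cx - (c * src_cx - s * src_cy)
    ty = dst_cy - (s * src_cx + c * src_cy)
    return theta, tx, ty

def transform(points, theta, tx, ty):
    """Rotate points by theta, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp_2d(source, target, iterations=20):
    """Estimate the rigid motion that aligns the source scan to the target scan."""
    current = list(source)
    for _ in range(iterations):
        # Pair every source point with its closest target point.
        matched = [min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in current]
        theta, tx, ty = best_rigid_transform(current, matched)
        current = transform(current, theta, tx, ty)
    # The accumulated motion is the rigid transform from the original scan to its final position.
    return best_rigid_transform(source, current)
```

ICP of this kind only converges to the right answer when the initial misalignment is small relative to the spacing between points, which is one reason consecutive scans are registered sequentially.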

Because point cloud registration alone can lose track of the vehicle, for example where there are few distinctive features to align, localization for autonomous vehicles may involve fusing other measurements such as wheel odometry, global navigation satellite system (GNSS), and IMU data. For applications such as warehouse robots, 2D lidar SLAM is commonly used, whereas SLAM using 3D point clouds is commonly used for UAVs and automated driving.

SLAM with 2D LiDAR

SLAM with 3D LiDAR

Multi-sensor SLAM is a type of SLAM algorithm that uses a variety of sensors (including cameras, IMUs, GPS, lidar, radar, and others) to improve the precision and robustness of SLAM. By combining the complementary strengths of different sensors and mitigating their individual limitations, multi-sensor SLAM can achieve better performance than single-sensor approaches. For instance, cameras deliver detailed visual data but may falter in low-light or high-speed scenarios; lidar performs consistently across diverse lighting conditions but may have difficulties with certain surface materials.

A factor graph is a modular and adaptable framework for integrating diverse sensor types, such as cameras, IMUs, and GPS. A factor graph can also accommodate custom sensor inputs (such as lidar and odometry) by converting the data into pose factors. This capability enables various multi-sensor SLAM configurations, such as monocular visual-inertial SLAM and lidar-IMU SLAM.

Although SLAM algorithms are used for some practical applications, several technical challenges prevent more general-purpose adoption. Each has a countermeasure that can help overcome the obstacle.

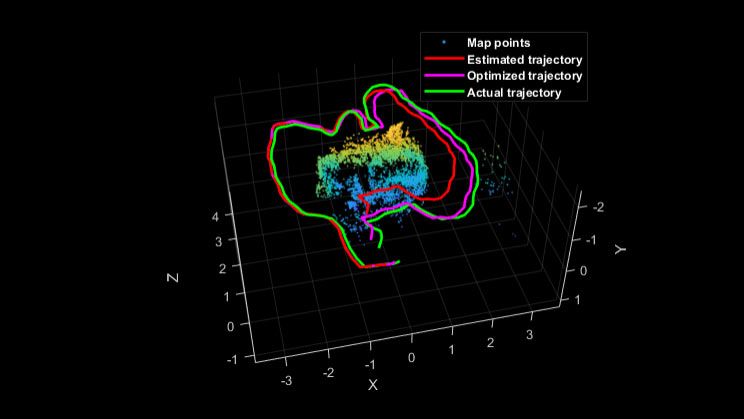

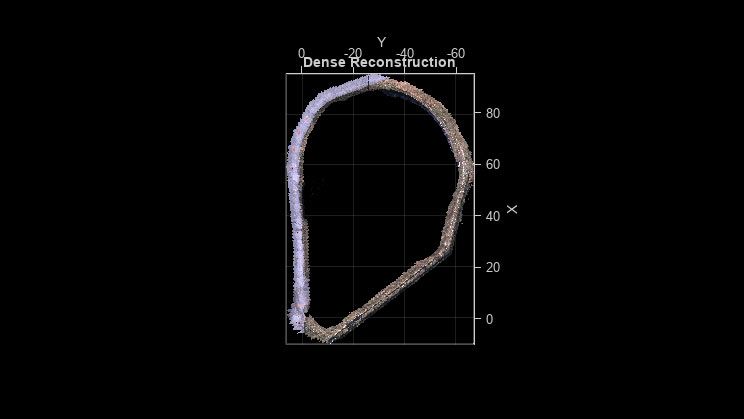

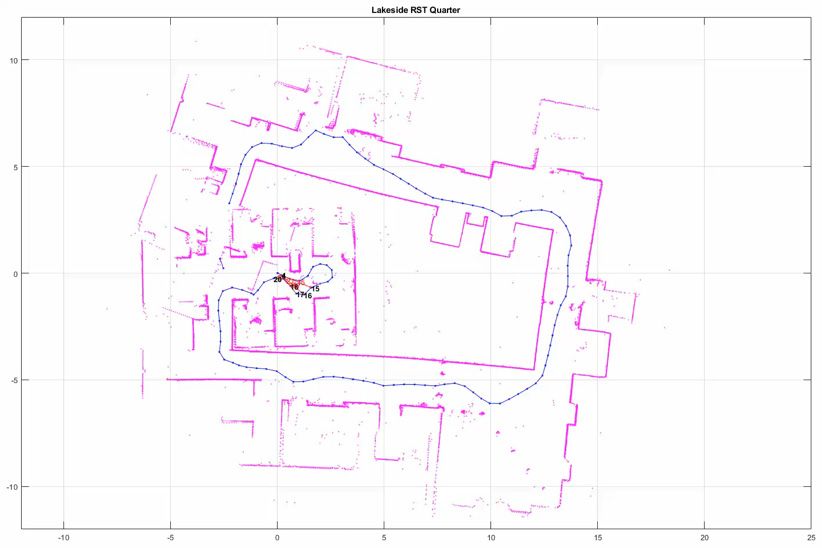

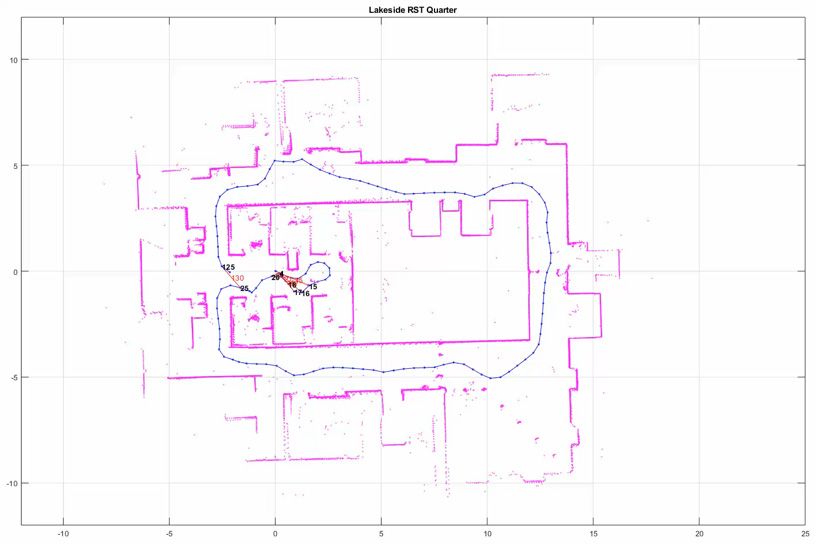

SLAM algorithms estimate sequential movement, which includes some margin of error. The error accumulates over time, causing substantial deviation from actual values. It can also cause map data to collapse or distort, making subsequent searches difficult. Let’s take an example of driving around a square-shaped passage. As the error accumulates, the robot’s starting and ending points no longer match up. This is called a loop closure problem. Pose estimation errors like these are unavoidable. It is important to detect loop closures and determine how to correct or cancel out the accumulated error.
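The square-passage example can be reproduced numerically. The sketch below drives four equal sides with a small, constant heading error added at each turn and reports how far the end point lands from the start. The function names, the 10 m side length, and the 2 degree error are assumptions chosen for illustration.

```python
import math

def drive_square(side=10.0, heading_error_deg=0.0):
    """Dead-reckon around a square; each 90 degree turn is off by heading_error_deg."""
    x = y = theta = 0.0
    for _ in range(4):
        x += side * math.cos(theta)
        y += side * math.sin(theta)
        theta += math.pi / 2.0 + math.radians(heading_error_deg)
    return x, y

def closure_gap(side=10.0, heading_error_deg=0.0):
    """Distance between the estimated end point and the true start point."""
    x, y = drive_square(side, heading_error_deg)
    return math.hypot(x, y)
```

With no heading error the gap is zero up to rounding. With a 2 degree error per turn on a 10 m square, the estimated end point is already close to a meter away from the start, even though each individual step looks nearly correct.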

Example of constructing a pose graph and minimizing errors.

For multi-sensor SLAM, accurate calibration of the sensors is vital. Discrepancies or calibration errors can result in sensor fusion inaccuracies and undermine the system's overall functionality. Factor graph optimization can further aid in the calibration process, including the alignment of camera-IMU systems.

One countermeasure is to remember some characteristics from a previously visited place as a landmark and minimize the localization error. Pose graphs are constructed to help correct the errors. By solving error minimization as an optimization problem, more accurate map data can be generated. This kind of optimization is called bundle adjustment in visual SLAM.
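A toy version of this optimization can be written for poses on a line. In the sketch below, each edge `(i, j, z)` states that pose `j` should lie `z` meters from pose `i`; odometry edges link consecutive poses and one loop-closure edge links the last pose back to the first. The solver is a simple Gauss-Seidel sweep chosen for readability, not the method used by any particular library, and all names are hypothetical.

```python
def optimize_pose_graph_1d(initial, edges, iterations=200):
    """Minimize the sum of squared edge residuals (x[j] - x[i] - z)^2 over 1D poses.

    initial: starting guess for each pose; pose 0 is held fixed as the anchor
    edges:   list of (i, j, z) relative measurements with equal weight
    """
    x = list(initial)
    for _ in range(iterations):
        for k in range(1, len(x)):  # pose 0 stays anchored
            predictions = []
            for i, j, z in edges:
                if j == k:
                    predictions.append(x[i] + z)  # where edge (i -> k) places pose k
                elif i == k:
                    predictions.append(x[j] - z)  # where edge (k -> j) places pose k
            if predictions:
                # The mean of the predictions minimizes the squared residuals for pose k.
                x[k] = sum(predictions) / len(predictions)
    return x

# Round trip along a corridor: odometry over-reports the outbound legs.
odometry = [(0, 1, 1.1), (1, 2, 1.1), (2, 3, -1.0), (3, 4, -1.0)]
loop_closure = [(0, 4, 0.0)]  # pose 4 was recognized as the starting place
dead_reckoning = [0.0, 1.1, 2.2, 1.2, 0.2]
optimized = optimize_pose_graph_1d(dead_reckoning, odometry + loop_closure)
```

In this example the 0.2 m of accumulated drift is spread evenly over the five constraints, so every pose moves a little instead of the last pose absorbing the whole correction.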

Image and point-cloud mapping does not consider the characteristics of a robot’s movement. In some cases, this approach can generate discontinuous position estimates. For example, a calculation result might show that a robot moving at 1 m/s suddenly jumped forward by 10 meters. This kind of localization failure can be prevented either by using a recovery algorithm or by fusing the motion model with multiple sensors to make calculations based on the sensor data.
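One simple way to bring the motion model into play is a plausibility gate: reject any new position estimate that would require the robot to move faster than it physically can. The check below is a minimal sketch; the function name and the speed limit in the usage note are assumptions.

```python
import math

def is_plausible(prev_xy, new_xy, dt, max_speed):
    """Return True if moving from prev_xy to new_xy within dt seconds is physically possible."""
    distance = math.hypot(new_xy[0] - prev_xy[0], new_xy[1] - prev_xy[1])
    return distance <= max_speed * dt
```

With a 1.5 m/s speed limit and a 1 s interval, a 1 m step passes the check while a 10 m jump is flagged, at which point the system can fall back on odometry or trigger a recovery procedure.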

There are several methods for using a motion model with sensor fusion. A common method is using Kalman filtering for localization. Since most differential drive robots and four-wheeled vehicles generally use nonlinear motion models, extended Kalman filters and particle filters (Monte Carlo localization) are often used. More flexible Bayes filters, such as unscented Kalman filters, can also be used in some cases. Commonly used sensors include inertial measurement devices such as an IMU, an attitude and heading reference system (AHRS), an inertial navigation system (INS), accelerometers, gyroscopes, and magnetic sensors. Wheel encoders attached to the vehicle are often used for odometry.
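The predict and update cycle shared by these filters can be shown with a one-dimensional linear Kalman filter. This is a deliberately simplified sketch: real vehicles need the nonlinear variants named above, and the function names and noise values here are illustrative assumptions.

```python
def kf_predict(x, p, u, q):
    """Motion step: shift the estimate by odometry displacement u and grow the variance by q."""
    return x + u, p + q

def kf_update(x, p, z, r):
    """Measurement step: blend the prediction with a position measurement z of variance r."""
    k = p / (p + r)             # Kalman gain: how much to trust the measurement
    x_new = x + k * (z - x)     # move toward the measurement
    p_new = (1.0 - k) * p       # uncertainty shrinks after the update
    return x_new, p_new
```

Starting from position 0 with variance 1, a predicted move of 1 m (process noise 0.5) followed by a measurement of 1.2 m (measurement noise 0.5) gives an estimate of 1.15 m with variance 0.375, which lies between the odometry prediction and the measurement and is more certain than either.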

When localization fails, one way to recover is to remember a landmark from a previously visited place as a key frame. When searching for a landmark, the feature extraction process is designed so that the search can run at high speed. Some methods based on image features include bag of features (BoF) and bag of visual words (BoVW). More recently, deep learning has been used to compare distances between features.
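The bag-of-visual-words idea can be sketched as follows: each key frame is summarized as a histogram of visual word IDs, and the current image is matched to the key frame whose histogram is most similar. The sketch assumes that feature descriptors have already been quantized into integer word IDs by some vocabulary; that step, the function names, and the example data are all hypothetical.

```python
import math
from collections import Counter

def bow_histogram(word_ids):
    """Count how often each visual word occurs in an image."""
    return Counter(word_ids)

def cosine_similarity(h1, h2):
    """Cosine similarity between two word histograms (0 = no shared words, 1 = same distribution)."""
    dot = sum(count * h2[word] for word, count in h1.items())
    norm1 = math.sqrt(sum(c * c for c in h1.values()))
    norm2 = math.sqrt(sum(c * c for c in h2.values()))
    if norm1 == 0.0 or norm2 == 0.0:
        return 0.0
    return dot / (norm1 * norm2)

def best_keyframe(query_words, keyframes):
    """Return the name of the stored key frame most similar to the query image."""
    query = bow_histogram(query_words)
    return max(keyframes,
               key=lambda name: cosine_similarity(query, bow_histogram(keyframes[name])))
```

Because comparing histograms is far cheaper than matching raw features against every stored image, this kind of lookup is a common first filter before a more careful geometric check.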

Computing cost is a problem when implementing SLAM algorithms on vehicle hardware. Computation is usually performed on compact and low-energy embedded microprocessors that have limited processing power. To achieve accurate localization, it is essential to execute image processing and point cloud matching at high frequency. In addition, optimization calculations such as loop closure are computationally expensive. The challenge is how to execute such expensive processing on embedded microcomputers.

One countermeasure is to run different processes in parallel. Feature extraction, which is a preprocessing step for matching, is relatively well suited to parallelization. Using multicore CPUs, single instruction multiple data (SIMD) calculation, and embedded GPUs can further improve speeds in some cases. Also, since pose graph optimization can be performed over a relatively long cycle, lowering its priority and carrying out this process at regular intervals can also improve performance.
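The parallelization idea can be illustrated by splitting an image into tiles and extracting features from each tile concurrently. In the sketch below, `extract_features` is a placeholder that simply reports bright pixels; a real front end would call a corner or descriptor extractor instead. All names and the brightness threshold are assumptions.

```python
from concurrent.futures import ThreadPoolExecutor

def extract_features(tile):
    """Placeholder extractor: return (row, col) of pixels brighter than 200 in a tile."""
    return [(r, c)
            for r, row in enumerate(tile)
            for c, value in enumerate(row)
            if value > 200]

def extract_parallel(tiles, workers=4):
    """Run the extractor on every tile using a pool of worker threads.

    Results come back in the same order as the input tiles.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(extract_features, tiles))
```

In CPython, threads only speed up CPU-bound work when the extractor releases the global interpreter lock, as native image-processing libraries often do; otherwise a process pool or compiled code is the more likely route to a real speedup.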

Sensor signal and image processing for SLAM front end:

2D / 3D pose graphs for SLAM back end:

Occupancy grids with SLAM Map Builder app:

Deploy standalone ROS nodes and communicate with your ROS-enabled robot from MATLAB and Simulink® using ROS Toolbox.

Deploy your image processing and navigation algorithms developed in MATLAB and Simulink on embedded microprocessors using MATLAB Coder™ and GPU Coder™.
