Key Issues for Realizing Autonomy of Inland Vessels | MATLAB Day for Marine Robotics & Autonomous Systems, Part 1

From the series: MATLAB Day for Marine Robotics & Autonomous Systems

Prof. Dirk Söffker, University Duisburg-Essen

Navreet Singh Thind, University Duisburg-Essen

Abderahman Bejaoui, University Duisburg-Essen

Waldemar Boschmann, University Duisburg-Essen

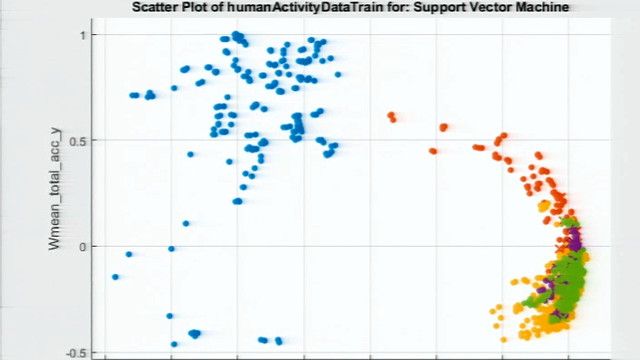

Making inland vessels autonomous can help address problems in waterway-based transport, such as labor shortages. Autonomous operation depends on perceiving the environment reliably in every situation. State-of-the-art solutions adapt approaches from the automotive field to similar inland-vessel tasks, such as localization, object detection, tracking, and behavior prediction. Each sensing modality or method has its own advantages and drawbacks. Research at the Chair of Dynamics and Control focuses mainly on the safety and reliability of the methods it develops and applies. The speakers combine diverse approaches with situational knowledge to improve the quality and reliability of the final decisions and estimates. This contribution presents ongoing work toward autonomous and highly automated inland vessels.

Published: 23 Aug 2022