Labeling Ground Truth for Object Detection

From the series: Perception

High-quality ground truth data is crucial for developing algorithms for autonomous systems. Sebastian Castro and Connell D'Souza demonstrate how to generate it by labeling images and video frames with the Ground Truth Labeler app.

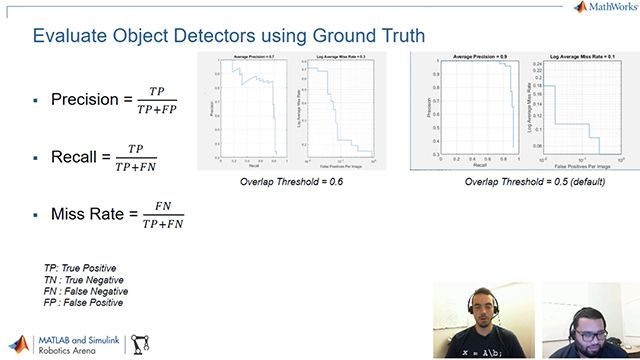

First, Sebastian and Connell introduce a few different types of object detectors. You will learn how these detectors differ and see the workflow for training an object detector from ground truth data.

Connell then shows how to create ground truth from a short video clip and create a labeled dataset that can be used in MATLAB® or in other environments. You can automate labeling with the built-in automation algorithms or with custom algorithms of your own, and you can sync the labeled data with other time-series data such as LiDAR or radar data. All the files used in this video are available for download from MATLAB Central's File Exchange.
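
To make the idea of syncing labels with other time-series data more concrete, here is a minimal Python sketch that pairs each labeled video frame with the nearest sensor sample by timestamp. It is a generic illustration, not how the Ground Truth Labeler app does it. The function names (`nearest_index`, `sync_labels`), the `max_gap` tolerance, and the sample timestamps and bounding boxes are all hypothetical, and the sketch assumes the sensor timestamps are sorted in ascending order.

```python
from bisect import bisect_left


def nearest_index(sorted_times, t):
    """Return the index of the entry in sorted_times closest to t.

    Assumes sorted_times is non-empty and sorted in ascending order.
    """
    i = bisect_left(sorted_times, t)
    if i == 0:
        return 0
    if i == len(sorted_times):
        return len(sorted_times) - 1
    before, after = sorted_times[i - 1], sorted_times[i]
    # Pick whichever neighbor is closer; ties go to the earlier sample.
    return i if (after - t) < (t - before) else i - 1


def sync_labels(frame_times, frame_labels, sensor_times, max_gap=0.05):
    """Pair each labeled frame with the nearest sensor sample.

    Frames whose nearest sensor sample is more than max_gap seconds
    away are dropped. Returns (frame_time, labels, sensor_index) tuples.
    """
    if not sensor_times:
        return []
    pairs = []
    for t, labels in zip(frame_times, frame_labels):
        j = nearest_index(sensor_times, t)
        if abs(sensor_times[j] - t) <= max_gap:
            pairs.append((t, labels, j))
    return pairs


# Hypothetical data: four video frames with (class, [x, y, w, h]) labels,
# and four lidar sweeps recorded on a slightly different clock.
frame_times = [0.00, 0.10, 0.20, 0.30]
frame_labels = [
    [("car", (10, 20, 50, 40))],
    [],
    [("car", (12, 21, 50, 40))],
    [("car", (15, 22, 50, 40))],
]
lidar_times = [0.02, 0.12, 0.22, 0.45]

synced = sync_labels(frame_times, frame_labels, lidar_times)
for t, labels, j in synced:
    print(f"frame at {t:.2f}s -> lidar sweep {j}, {len(labels)} label(s)")
```

With this sample data, the last frame is dropped because no lidar sweep falls within the assumed 0.05 s tolerance. Nearest-timestamp matching is only one possible choice; interpolation or hardware-triggered capture may suit other sensor setups better.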


Published: 17 Oct 2018