This example shows how to improve the runtime performance of an OCR model by quantizing it. Quantization is useful when you deploy an OCR model to resource-constrained systems.

Specify the full path to the OCR model.

Specify a filename to save the quantized OCR model.

Quantize the OCR model.

Compare the runtime performance of the quantized model against the original model.

Quantized model is 1.425x faster

Compare the file size of the quantized model with that of the original model.

Quantized model is 7.8483x smaller
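As a quick sanity check on the two ratios reported above, the following Python sketch recomputes them from the rounded runtime and file-size values tabulated at the end of this example. The helper name `ratio` is illustrative only and is not part of any toolbox. Because the inputs are rounded, the last digits of the size ratio can differ slightly from the printed value.

```python
def ratio(original: float, quantized: float) -> float:
    """Return how many times larger `original` is than `quantized`."""
    if quantized <= 0:
        raise ValueError("quantized value must be positive")
    return original / quantized


# Rounded values taken from the results table in this example.
original_runtime_s, quantized_runtime_s = 0.09306, 0.065305
original_size_mb, quantized_size_mb = 11.297, 1.4394

speedup = ratio(original_runtime_s, quantized_runtime_s)
size_reduction = ratio(original_size_mb, quantized_size_mb)

print(f"Quantized model is {speedup:.3f}x faster")
print(f"Quantized model is {size_reduction:.2f}x smaller")
```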

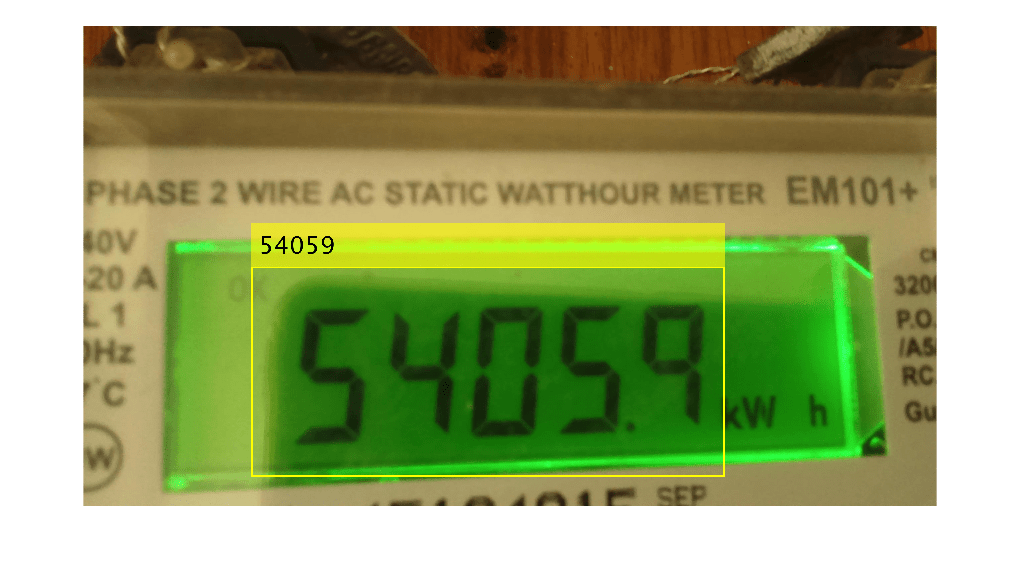

Although the quantized model is smaller and faster than the original model, these advantages come at the expense of accuracy. To understand this trade-off, compare the accuracy of the two models by evaluating them against the YUVA EB dataset.

Download the dataset.

Downloading evaluation data set (7SegmentImages.zip - 96 MB)...

Load ground truth to be used for evaluation.

Create datastores that contain the images, bounding boxes, and text labels from the groundTruth object by using the ocrTrainingData function. Specify the label and attribute names that were used during labeling.

Run the two models on the dataset and evaluate recognition accuracy using evaluateOCR.

Evaluating ocr results

----------------------

* Selected metrics: character error rate, word error rate.

* Processed 119 images.

* Finalizing... Done.

* Data set metrics:

| CharacterErrorRate | WordErrorRate |
| --- | --- |
| 0.082195 | 0.19958 |

Evaluating ocr results

----------------------

* Selected metrics: character error rate, word error rate.

* Processed 119 images.

* Finalizing... Done.

* Data set metrics:

| CharacterErrorRate | WordErrorRate |
| --- | --- |
| 0.13018 | 0.31933 |

Display the model accuracies.

Original model accuracy = 91.7805%

Quantized model accuracy = 86.9816%
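The displayed accuracies are consistent with treating accuracy as 100 × (1 − character error rate). The Python sketch below applies that relation to the rounded error rates printed above. The relation is inferred from the reported numbers rather than stated by the example, and the function name `accuracy_from_cer` is hypothetical.

```python
def accuracy_from_cer(cer: float) -> float:
    """Convert a character error rate (as a fraction) to percent accuracy."""
    return 100.0 * (1.0 - cer)


# Rounded character error rates from the two evaluation runs above.
original_accuracy = accuracy_from_cer(0.082195)
quantized_accuracy = accuracy_from_cer(0.13018)

print(f"Original model accuracy = {original_accuracy:.2f}%")
print(f"Quantized model accuracy = {quantized_accuracy:.2f}%")
```

Small differences in the last digits relative to the displayed values are expected, since the displayed values were presumably computed from unrounded error rates.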

Tabulate the quantitative results.

| | OriginalModel | QuantizedModel |
| --- | --- | --- |
| Accuracy (in %) | 91.781 | 86.982 |
| File Size (in MB) | 11.297 | 1.4394 |
| Runtime (in seconds) | 0.09306 | 0.065305 |
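To read the trade-off at a glance, it can help to express each row as a change relative to the original model. The Python sketch below does this with the tabulated values. The dictionary layout and the helper name `percent_reduction` are illustrative choices, not part of the example's workflow.

```python
def percent_reduction(original: float, quantized: float) -> float:
    """Percent by which `quantized` is lower than `original`."""
    return 100.0 * (original - quantized) / original


# (original, quantized) pairs copied from the results table.
results = {
    "accuracy_pct": (91.781, 86.982),
    "file_size_mb": (11.297, 1.4394),
    "runtime_s": (0.09306, 0.065305),
}

# Accuracy is already a percentage, so report the drop in percentage points.
accuracy_drop_points = results["accuracy_pct"][0] - results["accuracy_pct"][1]
size_saving_pct = percent_reduction(*results["file_size_mb"])
runtime_saving_pct = percent_reduction(*results["runtime_s"])

print(f"Accuracy drop: {accuracy_drop_points:.3f} percentage points")
print(f"File size saving: {size_saving_pct:.1f}%")
print(f"Runtime saving: {runtime_saving_pct:.1f}%")
```

Whether a drop of about five percentage points in accuracy is acceptable in exchange for these savings depends on the deployment target.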