auc


Algorithms

For an ROC curve, auc calculates the area under the curve by trapezoidal integration using the trapz function. For a precision-recall curve, auc calculates the area under the curve using the trapz function, and then adds the area of the rectangle (if any) that is formed by the leftmost point on the curve and the point (0,0). For example:

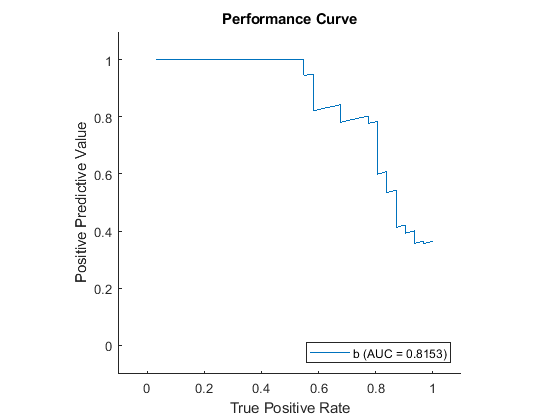

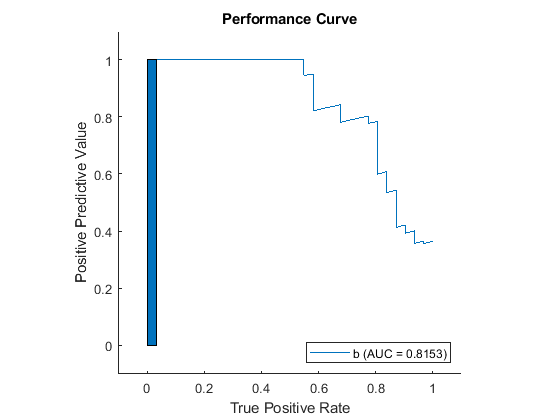

    load ionosphere
    rng default % For reproducibility of the partition
    c = cvpartition(Y,Holdout=0.25);
    trainingIndices = training(c); % Indices for the training set
    testIndices = test(c); % Indices for the test set
    XTrain = X(trainingIndices,:);
    YTrain = Y(trainingIndices);
    XTest = X(testIndices,:);
    YTest = Y(testIndices);
    Mdl = fitcsvm(XTrain,YTrain);
    rocObj = rocmetrics(Mdl,XTest,YTest,AdditionalMetrics="ppv");
    r = plot(rocObj,XAxisMetric="tpr",...
        YAxisMetric="ppv",ClassNames="b"); % Plots the normal PR curve.
    legend(Location="southeast")

There is a gap between the leftmost point on the curve and the zero point of the True Positive Rate. Plot the rectangle that fills this gap, which represents the correction that auc adds to the returned AUC.

    hold on
    rectangle(Position=[0 0 r.XData(2) r.YData(2)],FaceColor=r.Color)
    hold off

Technically, the rectangle is not part of the precision-recall curve. But to make comparisons easier across models (which can have different domains of definition), auc treats the area under the curve as extending all the way down to a true positive rate of zero.
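The correction can be reproduced by hand on a small set of points. The following is a minimal sketch, not the internal implementation of auc: the recall and precision vectors are hypothetical values chosen for illustration, and the variable names are assumptions.

```matlab
% Hypothetical precision-recall points, sorted by increasing recall.
% The leftmost point is at recall 0.2, so the curve does not reach 0.
recall    = [0.2 0.4 0.6 0.8 1.0];
precision = [1.0 0.9 0.8 0.7 0.6];

% Trapezoidal area under the curve between the first and last points.
areaCurve = trapz(recall,precision);

% Area of the rectangle spanned by (0,0) and the leftmost curve point.
areaRect = recall(1)*precision(1);

% Corrected area: trapezoidal area plus the rectangle.
areaTotal = areaCurve + areaRect;
fprintf("trapz: %.2f, rectangle: %.2f, total: %.2f\n", ...
    areaCurve,areaRect,areaTotal)
```

For these values, the trapezoidal area is 0.64 and the rectangle contributes 0.20, so the corrected area is 0.84. If the leftmost point already lies at a recall of zero, the rectangle has zero width and the correction vanishes.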

If you create a rocmetrics object with confidence intervals (as described on the reference page), the lower and upper arguments returned for the AUC are computed with the same technique used for the confidence intervals of the original rocmetrics object, either bootstrapping or cross-validation.
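As a hedged illustration of the bootstrapping case, the sketch below requests confidence bounds for the AUC. It assumes that rocmetrics accepts the NumBootstraps name-value argument and that auc supports the three-output call form and a "pr" curve-type argument; check these against the reference pages for your release. The value 100 is an arbitrary choice.

```matlab
load ionosphere
rng default % For reproducibility of the partition and bootstrap samples
c = cvpartition(Y,Holdout=0.25);
Mdl = fitcsvm(X(training(c),:),Y(training(c)));

% Assumption: NumBootstraps makes rocmetrics compute bootstrap
% confidence intervals; 100 is an arbitrary number of samples.
rocObj = rocmetrics(Mdl,X(test(c),:),Y(test(c)), ...
    AdditionalMetrics="ppv",NumBootstraps=100);

% Assumed call forms: AUC with lower and upper confidence bounds,
% first for the ROC curve and then for the precision-recall curve.
[aROC,lowerROC,upperROC] = auc(rocObj);
[aPR,lowerPR,upperPR] = auc(rocObj,"pr");
```

Because the bounds come from resampling, they change with the random seed and the number of bootstrap samples.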

Version History

Introduced in R2024b

See Also

rocmetrics | average | plot