incrementalClassificationNaiveBayes

Description

The incrementalClassificationNaiveBayes function creates an incrementalClassificationNaiveBayes model object, which represents a naive Bayes multiclass classification model for incremental learning.

Unlike other Statistics and Machine Learning Toolbox™ model objects, incrementalClassificationNaiveBayes can be called directly. Also, you can specify learning options, such as performance metrics configurations and prior class probabilities, before fitting the model to data. After you create an incrementalClassificationNaiveBayes object, it is prepared for incremental learning.
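The following is a minimal sketch of configuring learning options in the constructor call before any data arrives. The option values are arbitrary choices for illustration. The returned object is cold and has not yet been fit to any observations.

```matlab
% Configure options up front; no data is needed yet.
Mdl = incrementalClassificationNaiveBayes('MaxNumClasses',3, ...
    'Metrics','classiferror','MetricsWarmupPeriod',200);

Mdl.IsWarm                   % false until the warm-up conditions are met
Mdl.NumTrainingObservations  % no observations have been fit yet
Mdl.MetricsWarmupPeriod      % the value set above
```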

incrementalClassificationNaiveBayes is best suited for incremental learning. For a traditional approach to training a naive Bayes model for multiclass classification (such as creating a model by fitting it to data, performing cross-validation, tuning hyperparameters, and so on), see fitcnb.

Creation

You can create an incrementalClassificationNaiveBayes model object in several ways:

- Call the function directly: Configure incremental learning options, or specify learner-specific options, by calling incrementalClassificationNaiveBayes directly. This approach is best when you do not have data yet or you want to start incremental learning immediately. You must specify the maximum number of classes or all class names expected in the response data during incremental learning.
- Convert a traditionally trained model: To initialize a naive Bayes classification model for incremental learning using the model parameters of a trained model object (ClassificationNaiveBayes), you can convert the traditionally trained model to an incrementalClassificationNaiveBayes model object by passing it to the incrementalLearner function.
- Call an incremental learning function: fit, updateMetrics, and updateMetricsAndFit accept a configured incrementalClassificationNaiveBayes model object and data as input, and return an incrementalClassificationNaiveBayes model object updated with information learned from the input model and data.
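The conversion route can be sketched as follows. This sketch assumes the fisheriris sample data set that ships with Statistics and Machine Learning Toolbox. The warm-up and window values passed to incrementalLearner are arbitrary illustrations.

```matlab
% Train a traditional naive Bayes model on the Fisher iris data,
% then convert it for incremental learning.
load fisheriris                          % meas (150-by-4), species (cell array)
TTMdl = fitcnb(meas,species);            % ClassificationNaiveBayes object
IncrementalMdl = incrementalLearner(TTMdl, ...
    'MetricsWarmupPeriod',50,'MetricsWindowSize',100);

class(IncrementalMdl)                    % incrementalClassificationNaiveBayes
IncrementalMdl.NumPredictors             % copied from the trained model
IncrementalMdl.NumTrainingObservations   % observations fit by fitcnb are not counted
```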

Syntax

Description

Mdl = incrementalClassificationNaiveBayes('MaxNumClasses',MaxNumClasses) returns a default naive Bayes classification model object for incremental learning, Mdl, where MaxNumClasses is the maximum number of classes expected in the response data during incremental learning. Properties of a default model contain placeholders for unknown model parameters. You must train a default model before you can track its performance or generate predictions from it.

Mdl = incrementalClassificationNaiveBayes('ClassNames',ClassNames) specifies all class names ClassNames expected in the response data during incremental learning, and sets the ClassNames property.

Mdl = incrementalClassificationNaiveBayes(___,Name,Value) sets properties and additional options by using name-value arguments, in addition to any of the input argument combinations in the previous syntaxes. For example, incrementalClassificationNaiveBayes('DistributionNames','mn','MaxNumClasses',5,'MetricsWarmupPeriod',100) specifies that the joint conditional distribution of the predictor variables is multinomial, sets the maximum number of classes expected in the response data to 5, and sets the metrics warm-up period to 100.
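Running the call from the previous example and inspecting the resulting properties confirms what each name-value argument sets. The stored values follow the property descriptions later on this page.

```matlab
% Multinomial predictors, at most 5 classes, metrics warm-up of 100 observations
Mdl = incrementalClassificationNaiveBayes('DistributionNames','mn', ...
    'MaxNumClasses',5,'MetricsWarmupPeriod',100);

Mdl.DistributionNames    % stored as the character vector 'mn'
Mdl.MetricsWarmupPeriod  % 100
```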

Input Arguments

MaxNumClasses

Maximum number of classes expected in the response data during incremental learning, specified as a positive integer.

MaxNumClasses sets the number of class names in the ClassNames property.

If you do not specify MaxNumClasses, you must specify the ClassNames argument.

Example: 'MaxNumClasses',5

Data Types: single | double

ClassNames

All unique class labels expected in the response data during incremental learning, specified as a categorical, character, or string array; logical or numeric vector; or cell array of character vectors. ClassNames and the response data must have the same data type. This argument sets the ClassNames property.

ClassNames specifies the order of any input or output argument dimension that corresponds to the class order. For example, set 'ClassNames' to specify the order of the dimensions of Cost or the column order of classification scores returned by predict.

If you do not specify ClassNames, you must specify the MaxNumClasses argument. In that case, the software infers the ClassNames property from the data during incremental learning.

Example: 'ClassNames',["virginica" "setosa" "versicolor"]

Data Types: single | double | logical | string | char | cell | categorical
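The following sketch passes the string class names from the example above. The ClassNames property description states that string arrays are treated as cell arrays of character vectors, and the order you specify sets the class order.

```matlab
% String class names are stored as a cell array of character vectors,
% in the order you specify them.
Mdl = incrementalClassificationNaiveBayes('ClassNames',["virginica" "setosa" "versicolor"]);
Mdl.ClassNames
```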

Name-Value Arguments

Specify optional pairs of arguments as Name1=Value1,...,NameN=ValueN, where Name is the argument name and Value is the corresponding value. Name-value arguments must appear after other arguments, but the order of the pairs does not matter.

Before R2021a, use commas to separate each name and value, and enclose Name in quotes.

Example: 'NumPredictors',4,'Prior',[0.3 0.3 0.4] specifies 4 variables in the predictor data and the prior class probability distribution of [0.3 0.3 0.4].
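The two calling conventions described above should produce equivalent models. This sketch adds 'ClassNames',1:3 as an assumption so that the three-element numeric prior has a matching set of classes. The Name=Value form requires R2021a or later.

```matlab
% R2021a and later: Name=Value syntax
Mdl1 = incrementalClassificationNaiveBayes(ClassNames=1:3,NumPredictors=4,Prior=[0.3 0.3 0.4]);

% Any release: comma-separated pairs with quoted names
Mdl2 = incrementalClassificationNaiveBayes('ClassNames',1:3,'NumPredictors',4,'Prior',[0.3 0.3 0.4]);

isequal(Mdl1.Prior,Mdl2.Prior)   % the two syntaxes set the same property values
```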

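Cost

Cost of misclassifying an observation, specified as a value in this table, where c is the number of classes in the ClassNames property:

| Value | Description |
|---|---|
| c-by-c numeric matrix | Cost(i,j) is the cost of classifying an observation into class j when its true class is i, for classes ClassNames(i) and ClassNames(j). In other words, rows correspond to the true class and columns correspond to the predicted class. |
| Structure array | A structure array having two fields: ClassNames, containing the class names (same as the ClassNames argument), and ClassificationCosts, containing the cost matrix as described in the previous row. |

If you specify Cost, you must also specify the ClassNames argument. Cost sets the Cost property.

The default is one of the following alternatives:

- An empty array [] when you specify MaxNumClasses
- A c-by-c matrix when you specify ClassNames, where Cost(i,j) = 1 for all i ≠ j, and Cost(i,j) = 0 for all i = j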

Example: 'Cost',struct('ClassNames',{'b','g'},'ClassificationCosts',[0 2; 1 0])

Data Types: single | double | struct
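The sketch below checks the two defaults listed above and then sets a custom cost through a structure. The class labels 'b' and 'g' are illustrative. The double braces make ClassNames a single cell-array field, so S is a scalar structure.

```matlab
% Default cost when you specify ClassNames: 1 off the diagonal, 0 on it
MdlDefault = incrementalClassificationNaiveBayes('ClassNames',1:3);
isequal(MdlDefault.Cost, ones(3) - eye(3))

% Default cost when you specify only MaxNumClasses: an empty array
MdlMax = incrementalClassificationNaiveBayes('MaxNumClasses',3);
isempty(MdlMax.Cost)

% Custom cost as a structure; its ClassificationCosts field sets the Cost property.
% Rows are true classes, columns are predicted classes.
S = struct('ClassNames',{{'b','g'}},'ClassificationCosts',[0 2; 1 0]);
MdlStruct = incrementalClassificationNaiveBayes('ClassNames',{'b','g'},'Cost',S);
MdlStruct.Cost
```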

Metrics

Model performance metrics to track during incremental learning, in addition to minimal expected misclassification cost, specified as a built-in loss function name, string vector of names, function handle (for example, @metricName), structure array of function handles, or cell vector of names, function handles, or structure arrays.

When Mdl is warm (see IsWarm), updateMetrics and updateMetricsAndFit track performance metrics in the Metrics property of Mdl.

The following table lists the built-in loss function names. You can specify more than one by using a string vector.

| Name | Description |
|---|---|
| "binodeviance" | Binomial deviance |
| "classiferror" | Misclassification error rate |
| "exponential" | Exponential |
| "hinge" | Hinge |
| "logit" | Logistic |
| "mincost" | Minimal expected misclassification cost (for classification scores that are posterior probabilities) |
| "quadratic" | Quadratic |

For more details on the built-in loss functions, see loss.

Example: 'Metrics',["classiferror" "logit"]

To specify a custom function that returns a performance metric, use function handle notation. The function must have this form.

metric = customMetric(C,S,Cost)

where:

- The output argument metric is an n-by-1 numeric vector, where each element is the loss of the corresponding observation in the data processed by the incremental learning functions during a learning cycle.
- You specify the function name (here, customMetric).
- C is an n-by-K logical matrix with rows indicating the class to which the corresponding observation belongs, where K is the number of classes. The column order corresponds to the class order in the ClassNames property. Create C by setting C(p,q) = 1 if observation p is in class q, for each observation in the specified data. Set the other elements in row p to 0.
- S is an n-by-K numeric matrix of predicted classification scores. S is similar to the Posterior output of predict, where rows correspond to observations in the data and the column order corresponds to the class order in the ClassNames property. S(p,q) is the classification score of observation p being classified in class q.
- Cost is a K-by-K numeric matrix of misclassification costs. See the 'Cost' name-value argument.
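As a minimal sketch of the C, S, Cost signature described above, the hypothetical anonymous function customMetric computes a 0/1 misclassification loss per observation. Because it is an anonymous function, the row-name rules for the Metrics property would label it CustomMetric_1.

```matlab
% Hypothetical custom metric: per-observation 0/1 misclassification loss.
%   C    - n-by-K logical matrix, C(p,q) = 1 if observation p is in class q
%   S    - n-by-K matrix of classification scores
%   Cost - K-by-K cost matrix (unused by this particular metric)
% An observation counts as misclassified when the score of its true class
% is below the largest score in its row (ties count as correct).
customMetric = @(C,S,Cost) double(sum(C .* S, 2) < max(S, [], 2));

% Check the metric on three observations and two classes
C = logical([1 0; 0 1; 1 0]);
S = [0.9 0.1; 0.6 0.4; 0.2 0.8];
m = customMetric(C, S, [0 1; 1 0])   % [0; 1; 1]

% Track the custom metric in addition to the minimal cost
Mdl = incrementalClassificationNaiveBayes('ClassNames',[1 2],'Metrics',customMetric);
</imports>
```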

To specify multiple custom metrics and assign a custom name to each, use a structure array. To specify a combination of built-in and custom metrics, use a cell vector.

Example: 'Metrics',struct('Metric1',@customMetric1,'Metric2',@customMetric2)

Example: 'Metrics',{@customMetric1 @customMetric2 'logit' struct('Metric3',@customMetric3)}

updateMetrics and updateMetricsAndFit store specified metrics in a table in the Metrics property. The data type of Metrics determines the row names of the table.

| 'Metrics' Value Data Type | Description of Metrics Property Row Name | Example |
|---|---|---|
| String or character vector | Name of corresponding built-in metric | Row name for "classiferror" is "ClassificationError" |
| Structure array | Field name | Row name for struct('Metric1',@customMetric1) is "Metric1" |
| Function handle to function stored in a program file | Name of function | Row name for @customMetric is "customMetric" |
| Anonymous function | CustomMetric_j, where j is metric j in Metrics | Row name for @(C,S,Cost)customMetric(C,S,Cost)... is CustomMetric_1 |

For more details on performance metrics options, see Performance Metrics.

Data Types: char | string | struct | cell | function_handle

Properties

You can set most properties by using name-value pair argument syntax only when you call incrementalClassificationNaiveBayes directly. You can set some properties when you call incrementalLearner to convert a traditionally trained model. You cannot set the properties DistributionParameters, IsWarm, and NumTrainingObservations.

Classification Model Parameters

CategoricalPredictors

This property is read-only.

Categorical predictors list, specified as one of the values in this table.

| Value | Description |
|---|---|
| Vector of positive integers | Each entry in the vector is an index value corresponding to the column of the predictor data that contains a categorical variable. The index values are between 1 and NumPredictors. |
| Logical vector | A true entry means that the corresponding column of predictor data is a categorical variable. The length of the vector is NumPredictors. |
| "all" | All predictors are categorical. |

For the identified categorical predictors, incrementalClassificationNaiveBayes uses multivariate multinomial distributions. For more details, see DistributionNames.

By default, if you specify the DistributionNames option, all predictor variables corresponding to 'mvmn' are categorical. Otherwise, none of the predictor variables are categorical.

Example: 'CategoricalPredictors',[1 2 4] and 'CategoricalPredictors',[true true false true] specify that the first, second, and fourth of four predictor variables are categorical.

Data Types: single | double | logical
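The following sketch specifies the categorical predictors from the example above in both index form and logical form. It assumes that NumPredictors is set so that the constructor can expand the per-predictor default distributions. The DistributionNames property description states that categorical predictors default to 'mvmn' and the others to 'normal'.

```matlab
% Four predictors; predictors 1, 2, and 4 are categorical.
MdlIdx = incrementalClassificationNaiveBayes('MaxNumClasses',3, ...
    'NumPredictors',4,'CategoricalPredictors',[1 2 4]);
MdlLog = incrementalClassificationNaiveBayes('MaxNumClasses',3, ...
    'NumPredictors',4,'CategoricalPredictors',[true true false true]);

% Expected per-predictor defaults: 'mvmn', 'mvmn', 'normal', 'mvmn'
MdlIdx.DistributionNames
```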

CategoricalLevels

Levels of multivariate multinomial predictor variables, specified as a cell vector. The length of CategoricalLevels is equal to NumPredictors.

Incremental fitting functions fit and updateMetricsAndFit populate cells with the learned numeric categorical levels of each categorical predictor variable, while cells corresponding to other predictor variables contain an empty array []. Specifically, if predictor j is multivariate multinomial, CategoricalLevels{j} is a list of all distinct values of predictor j experienced during incremental fitting. For more details, see the DistributionNames property.

Note

Unlike fitcnb, incremental fitting functions order the levels of a predictor as the functions experience them during training. For example, suppose predictor j is categorical with a multivariate multinomial distribution. The order of the levels in CategoricalLevels{j} and, consequently, the order of the level probabilities in each cell of DistributionParameters{:,j} returned by incremental fitting functions can differ from the order returned by fitcnb for the same training data set.
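The following sketch fits a tiny, made-up data set that has one categorical predictor and one continuous predictor, then inspects CategoricalLevels. As the note explains, the level order follows the fitting functions and is not guaranteed to be sorted, so only the set of levels is checked.

```matlab
% Predictor 1 is categorical; predictor 2 is continuous.
Mdl = incrementalClassificationNaiveBayes('ClassNames',[0 1], ...
    'NumPredictors',2,'CategoricalPredictors',1);
X = [3 0.5; 1 1.2; 2 -0.3; 3 0.8];   % illustrative data
Y = [0; 1; 0; 1];
Mdl = fit(Mdl,X,Y);

Mdl.CategoricalLevels{1}   % distinct levels 1, 2, and 3 of predictor 1 (order not guaranteed)
Mdl.CategoricalLevels{2}   % [] because predictor 2 is not categorical
```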

Cost

This property is read-only.

Cost of misclassifying an observation, specified as an array.

If you specify the 'Cost' name-value argument, its value sets Cost. If you specify a structure array, Cost is the value of the ClassificationCosts field.

If you convert a traditionally trained model to create Mdl, Cost is the Cost property of the traditionally trained model.

Data Types: single | double

ClassNames

This property is read-only.

All unique class labels expected in the response data during incremental learning, specified as a categorical or character array, a logical or numeric vector, or a cell array of character vectors.

You can set ClassNames in one of three ways:

- If you specify the MaxNumClasses argument, the software infers the ClassNames property during incremental learning.
- If you specify the ClassNames argument, incrementalClassificationNaiveBayes stores your specification in the ClassNames property. (The software treats string arrays as cell arrays of character vectors.)
- If you convert a traditionally trained model to create Mdl, the ClassNames property is specified by the corresponding property of the traditionally trained model.

Data Types: single | double | logical | char | string | cell | categorical

NumPredictors

This property is read-only.

Number of predictor variables, specified as a nonnegative numeric scalar.

The default NumPredictors value depends on how you create the model:

- If you convert a traditionally trained model to create Mdl, NumPredictors is specified by the corresponding property of the traditionally trained model.
- If you create Mdl by calling incrementalClassificationNaiveBayes directly, you can specify NumPredictors by using name-value argument syntax. If you do not specify the value, then the default value is 0, and incremental fitting functions infer NumPredictors from the predictor data during training.

Data Types: double

NumTrainingObservations

This property is read-only.

Number of observations fit to the incremental model Mdl, specified as a nonnegative numeric scalar. NumTrainingObservations increases when you pass Mdl and training data to fit or updateMetricsAndFit.

Note

If you convert a traditionally trained model to create Mdl, incrementalClassificationNaiveBayes does not add the number of observations fit to the traditionally trained model to NumTrainingObservations.

Data Types: double
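The following sketch fits two chunks of synthetic data and tracks NumTrainingObservations. The data are random and for illustration only. Consistent with the description above, updateMetrics only measures performance, so it leaves the count unchanged.

```matlab
rng(1)                                   % for reproducibility
X = randn(100,3);                        % synthetic predictors
Y = [1; 2; randi(2,98,1)];               % make sure both classes appear
Mdl = incrementalClassificationNaiveBayes('ClassNames',[1 2]);

Mdl = fit(Mdl,X(1:60,:),Y(1:60));        % first chunk: 60 observations
Mdl = fit(Mdl,X(61:100,:),Y(61:100));    % second chunk: 40 observations
Mdl.NumTrainingObservations              % 100

Mdl = updateMetrics(Mdl,X,Y);            % measures performance only; does not fit
Mdl.NumTrainingObservations              % still 100
```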

Prior

This property is read-only.

Prior class probabilities, specified as 'empirical', 'uniform', or a numeric vector. incrementalClassificationNaiveBayes stores the Prior value as a numeric vector.

| Value | Description |
|---|---|
| 'empirical' | Incremental learning functions infer prior class probabilities from the observed class relative frequencies in the response data during incremental training. |
| 'uniform' | For each class, the prior probability is 1/K, where K is the number of classes. |
| numeric vector | Custom, normalized prior probabilities. The order of the elements of Prior corresponds to the elements of the ClassNames property. |

The default Prior value depends on how you create the model:

- If you convert a traditionally trained model to create Mdl, Prior is specified by the corresponding property of the traditionally trained model.
- Otherwise, the default value of Prior is 'empirical'.

Data Types: single | double | char | string
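With four known classes, 'uniform' should be stored as the numeric vector 1/K = 0.25 for each class. This sketch assumes that the class names are given up front, so K is known at creation time.

```matlab
Mdl = incrementalClassificationNaiveBayes('ClassNames',1:4,'Prior','uniform');
Mdl.Prior     % 0.25 for each of the K = 4 classes
```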

ScoreTransform

This property is read-only.

Score transformation function describing how incremental learning functions transform raw response values, specified as a character vector, string scalar, or function handle. incrementalClassificationNaiveBayes stores the specified value as a character vector or function handle.

This table describes the available built-in functions for score transformation.

| Value | Description |
|---|---|
| "doublelogit" | 1/(1 + e^(-2x)) |
| "invlogit" | log(x / (1 - x)) |
| "ismax" | Sets the score for the class with the largest score to 1, and sets the scores for all other classes to 0 |
| "logit" | 1/(1 + e^(-x)) |
| "none" or "identity" | x (no transformation) |
| "sign" | -1 for x < 0, 0 for x = 0, 1 for x > 0 |
| "symmetric" | 2x - 1 |
| "symmetricismax" | Sets the score for the class with the largest score to 1, and sets the scores for all other classes to -1 |
| "symmetriclogit" | 2/(1 + e^(-x)) - 1 |

For a MATLAB® function or a function that you define, enter its function handle; for example, @function, where:

- function accepts an n-by-K matrix (the original scores) and returns a matrix of the same size (the transformed scores).
- n is the number of observations, and row j of the matrix contains the class scores of observation j.
- K is the number of classes, and column k is class ClassNames(k).

The default ScoreTransform value depends on how you create the model:

- If you convert a traditionally trained model to create Mdl, ScoreTransform is specified by the corresponding property of the traditionally trained model.
- Otherwise, the default value is 'none', which specifies returning posterior class probabilities.

Data Types: char | function_handle | string
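The following sketch shows a custom transform that satisfies the n-by-K in, n-by-K out contract. The handle ismaxTransform is hypothetical and mimics the built-in "ismax" behavior, except that tied maxima all receive 1.

```matlab
% Hypothetical custom transform: 1 for the largest score in each row, 0 elsewhere.
ismaxTransform = @(S) double(S == max(S,[],2));

S = [0.2 0.8; 0.7 0.3];
T = ismaxTransform(S)      % [0 1; 1 0], same size as S

Mdl = incrementalClassificationNaiveBayes('MaxNumClasses',2, ...
    'ScoreTransform',ismaxTransform);
class(Mdl.ScoreTransform)  % stored as a function handle
```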

Training Parameters

DistributionNames

Predictor distributions P(x|c_k), where c_k is class ClassNames(k), specified as a character vector or string scalar, or a 1-by-NumPredictors string vector or cell vector of character vectors with values from the table.

| Value | Description |
|---|---|
| "mn" | Multinomial distribution. If you specify "mn", then all features are components of a multinomial distribution (for example, a bag-of-tokens model). Therefore, you cannot include "mn" as an element of a string array or a cell array of character vectors. For details, see Estimated Probability for Multinomial Distribution. |
| "mvmn" | Multivariate multinomial distribution. For details, see Estimated Probability for Multivariate Multinomial Distribution. |
| "normal" | Normal distribution. For details, see Normal Distribution Estimators. |

If you specify a character vector or string scalar, then the software models all the features using that distribution. If you specify a 1-by-NumPredictors string vector or cell vector of character vectors, the software models feature j using the distribution in element j of the vector.

By default, the software sets all predictors specified as categorical predictors (see the CategoricalPredictors property) to 'mvmn'. Otherwise, the default distribution is 'normal'.

incrementalClassificationNaiveBayes stores the value as a character vector or cell vector of character vectors.

Example: 'DistributionNames',"mn" specifies that the joint conditional distribution of all predictor variables is multinomial.

Example: 'DistributionNames',["normal" "mvmn" "normal"] specifies that the first and third predictor variables are normally distributed and the second variable is categorical with a multivariate multinomial distribution.

Data Types: char | string | cell
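The following sketch fits a bag-of-tokens model on a small, made-up matrix of token counts, with rows as documents and columns as tokens. Each DistributionParameters cell holds the probability that token j appears in class k, so for one class these probabilities should sum to 1 across tokens.

```matlab
% Token counts for three tokens (columns) in four documents (rows)
X = [2 0 1; 0 3 1; 1 1 0; 0 2 2];
Y = [1; 2; 1; 2];
Mdl = incrementalClassificationNaiveBayes('ClassNames',[1 2],'DistributionNames','mn');
Mdl = fit(Mdl,X,Y);

p1 = [Mdl.DistributionParameters{1,:}]   % token probabilities for class 1
sum(p1)                                   % should be 1 (up to rounding)
```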

DistributionParameters

This property is read-only.

Distribution parameter estimates, specified as a cell array. DistributionParameters is a K-by-NumPredictors cell array, where K is the number of classes and cell (k,j) contains the distribution parameter estimates for instances of predictor j in class k. The order of the rows corresponds to the order of the classes in the property ClassNames, and the order of the columns corresponds to the order of the predictors in the predictor data.

If class k has no observations for predictor j, then DistributionParameters{k,j} is empty ([]).

The elements of DistributionParameters depend on the distributions of the predictors. This table describes the values in DistributionParameters{k,j}.

| Distribution of Predictor j | Value of Cell Array for Predictor j and Class k |
|---|---|
| 'mn' | A scalar representing the probability that token j appears in class k. For details, see Estimated Probability for Multinomial Distribution. |
| 'mvmn' | A numeric vector containing the probabilities for each possible level of predictor j in class k. The probabilities follow the order of the levels of predictor j stored in the CategoricalLevels property, which is the order in which the incremental fitting functions experience them. For more details, see Estimated Probability for Multivariate Multinomial Distribution. |
| 'normal' | A 2-by-1 numeric vector. The first element is the weighted sample mean and the second element is the weighted sample standard deviation. For more details, see Normal Distribution Estimators. |


Data Types: cell
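The following sketch reads the parameters of a 'normal' predictor after fitting synthetic data. With the default unit observation weights, the weighted sample mean should match the ordinary sample mean of that predictor within the class.

```matlab
rng(0)                                       % for reproducibility
X = randn(200,2);                            % synthetic predictors
Y = [1; 2; randi(2,198,1)];
Mdl = incrementalClassificationNaiveBayes('ClassNames',[1 2]);
Mdl = fit(Mdl,X,Y);

params = Mdl.DistributionParameters{1,2};    % class ClassNames(1), predictor 2
mu = params(1)                               % weighted sample mean
sigma = params(2)                            % weighted sample standard deviation

abs(mu - mean(X(Y == 1,2)))                  % close to 0 with unit weights
```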

Performance Metrics Parameters

IsWarm

Flag indicating whether the incremental model tracks performance metrics, specified as logical 0 (false) or 1 (true).

The incremental model Mdl is warm (IsWarm becomes true) when incremental fitting functions perform both of these actions:

- Fit the incremental model to MetricsWarmupPeriod observations.
- Process MaxNumClasses classes or all class names specified by the ClassNames name-value argument.

| Value | Description |
|---|---|
| true or 1 | The incremental model Mdl is warm. Consequently, updateMetrics and updateMetricsAndFit track performance metrics in the Metrics property of Mdl. |
| false or 0 | updateMetrics and updateMetricsAndFit do not track performance metrics. |

Data Types: logical
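The following sketch shows both warm-up conditions. It uses synthetic data and a warm-up period of 100 observations chosen for illustration. Both classes appear in the first chunk, so the model becomes warm once it has been fit to 100 observations.

```matlab
rng(2)                                   % for reproducibility
X = randn(100,2);
Y = [1; 2; randi(2,98,1)];               % both classes appear in the first chunk
Mdl = incrementalClassificationNaiveBayes('ClassNames',[1 2],'MetricsWarmupPeriod',100);

Mdl = fit(Mdl,X(1:50,:),Y(1:50));
warmAfter50 = Mdl.IsWarm                 % false: only 50 of 100 warm-up observations fit
Mdl = fit(Mdl,X(51:100,:),Y(51:100));
warmAfter100 = Mdl.IsWarm                % true: warm-up reached and all classes processed
```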

Metrics

This property is read-only.

Model performance metrics updated during incremental learning by updateMetrics and updateMetricsAndFit, specified as a table with two columns and m rows, where m is the number of tracked metrics: the minimal expected misclassification cost plus any metrics specified by the Metrics name-value argument.

The columns of Metrics are labeled Cumulative and Window.

- Cumulative: Element j is the model performance, as measured by metric j, from the time the model became warm (IsWarm is 1).
- Window: Element j is the model performance, as measured by metric j, evaluated over all observations within the window specified by the MetricsWindowSize property. The software updates Window after it processes MetricsWindowSize observations.

Rows are labeled by the specified metrics. For details, see the Metrics name-value argument of incrementalLearner or incrementalClassificationNaiveBayes.

Data Types: table
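The following sketch inspects the layout of the Metrics table when one extra metric is requested. The row and column labels follow the descriptions above, and entries can be read by row and column name, as in the examples on this page.

```matlab
Mdl = incrementalClassificationNaiveBayes('ClassNames',1:5,'Metrics','classiferror');

Mdl.Metrics.Properties.VariableNames   % column labels
Mdl.Metrics.Properties.RowNames        % one row per tracked metric

% Read one entry by row and column name
Mdl.Metrics{"MinimalCost","Cumulative"}
```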

MetricsWarmupPeriod

This property is read-only.

Number of observations the incremental model must be fit to before it tracks performance metrics in its Metrics property, specified as a nonnegative integer.

The default MetricsWarmupPeriod value depends on how you create the model:

- If you convert a traditionally trained model to create Mdl, the MetricsWarmupPeriod name-value argument of the incrementalLearner function sets this property. The default value of the argument is 0.
- Otherwise, the default value is 1000.

For more details, see Performance Metrics.

Data Types: single | double

MetricsWindowSize

This property is read-only.

Number of observations to use to compute window performance metrics, specified as a positive integer.

The default MetricsWindowSize value depends on how you create the model:

- If you convert a traditionally trained model to create Mdl, the MetricsWindowSize name-value argument of the incrementalLearner function sets this property. The default value of the argument is 200.
- Otherwise, the default value is 200.

For more details on performance metrics options, see Performance Metrics.

Data Types: single | double

Object Functions

| Function | Description |
|---|---|
| fit | Train naive Bayes classification model for incremental learning |
| updateMetricsAndFit | Update performance metrics in naive Bayes incremental learning classification model given new data and train model |
| updateMetrics | Update performance metrics in naive Bayes incremental learning classification model given new data |
| logp | Log unconditional probability density of naive Bayes classification model for incremental learning |
| loss | Loss of naive Bayes incremental learning classification model on batch of data |
| predict | Predict responses for new observations from naive Bayes incremental learning classification model |
| perObservationLoss | Per observation classification error of model for incremental learning |
| reset | Reset incremental classification model |
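The following sketch combines several of these object functions on one synthetic batch. The data are made up, with class means shifted so that the classes are separable. With the default ScoreTransform value 'none', the Posterior output of predict contains posterior probabilities, so each row should sum to 1.

```matlab
rng(3)                                     % for reproducibility
n = 300;
Y = repmat((1:3)',n/3,1);                  % three balanced classes
X = randn(n,2) + Y;                        % shift each class's mean
Mdl = incrementalClassificationNaiveBayes('ClassNames',1:3);
Mdl = fit(Mdl,X,Y);

[label,Posterior] = predict(Mdl,X);              % labels and posterior probabilities
err = loss(Mdl,X,Y,'LossFun','classiferror');    % scalar error on this batch
obsErr = perObservationLoss(Mdl,X,Y);            % one loss value per observation
```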

Examples

To create a naive Bayes classification model for incremental learning when you do not know the class names, you must specify the maximum number of classes that you expect the model to process ('MaxNumClasses' name-value argument). As you fit the model to incoming batches of data by using an incremental fitting function, the model collects new classes in its ClassNames property. If the specified maximum number of classes is inaccurate, one of the following occurs:

- Before an incremental fitting function processes the expected maximum number of classes, the model is cold. Consequently, the updateMetrics and updateMetricsAndFit functions do not measure performance metrics.
- If the number of classes exceeds the maximum expected, the incremental fitting function issues an error.

This example shows how to create a naive Bayes classification model for incremental learning when the only information you specify is the expected maximum number of classes in the data. Also, the example illustrates the consequences when incremental fitting functions process all expected classes early and late in the sample.

For this example, consider training a device to predict whether a subject is sitting, standing, walking, running, or dancing based on biometric data measured on the subject. Therefore, the device has a maximum of 5 classes from which to choose.

Process Expected Maximum Number of Classes Early in Sample

Create an incremental naive Bayes model for multiclass learning. Specify a maximum of 5 classes in the data.

```matlab
MdlEarly = incrementalClassificationNaiveBayes('MaxNumClasses',5)
```

```
MdlEarly = 
  incrementalClassificationNaiveBayes

                    IsWarm: 0
                   Metrics: [1×2 table]
                ClassNames: [1×0 double]
            ScoreTransform: 'none'
         DistributionNames: 'normal'
    DistributionParameters: {}

  Properties, Methods
```

MdlEarly is an incrementalClassificationNaiveBayes model object. All its properties are read-only.

MdlEarly must be fit to data before you can use it to perform any other operations.

Load the human activity data set. Randomly shuffle the data.

```matlab
load humanactivity
n = numel(actid);
rng(1) % For reproducibility
idx = randsample(n,n);
X = feat(idx,:);
Y = actid(idx);
```

For details on the data set, enter Description at the command line.

Fit the incremental model to the training data by using the updateMetricsAndFit function. Simulate a data stream by processing chunks of 50 observations at a time. At each iteration:

Process 50 observations.

Overwrite the previous incremental model with a new one fitted to the incoming observations.

Store the mean of the first predictor in the first class (μ11), the cumulative metrics, and the window metrics to see how they evolve during incremental learning.

```matlab
% Preallocation
numObsPerChunk = 50;
nchunk = floor(n/numObsPerChunk);
mc = array2table(zeros(nchunk,2),'VariableNames',["Cumulative" "Window"]);
mu1 = zeros(nchunk+1,1);

% Incremental learning
for j = 1:nchunk
    ibegin = min(n,numObsPerChunk*(j-1) + 1);
    iend = min(n,numObsPerChunk*j);
    idx = ibegin:iend;
    MdlEarly = updateMetricsAndFit(MdlEarly,X(idx,:),Y(idx));
    mc{j,:} = MdlEarly.Metrics{"MinimalCost",:};
    mu1(j + 1) = MdlEarly.DistributionParameters{1,1}(1);
end
```

MdlEarly is an incrementalClassificationNaiveBayes model object trained on all the data in the stream. During incremental learning and after the model is warmed up, updateMetricsAndFit checks the performance of the model on the incoming observations, and then fits the model to those observations.

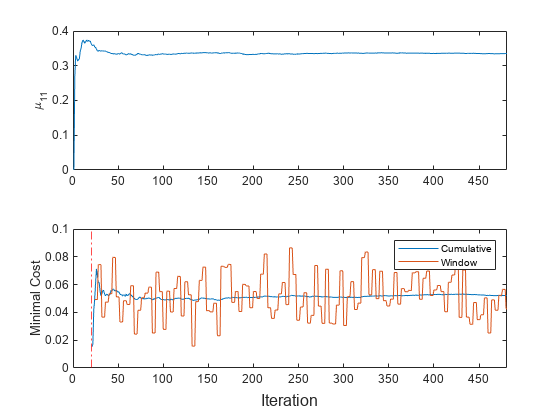

To see how the performance metrics and μ11 (the mean of the first predictor in the first class) evolve during training, plot them on separate tiles.

```matlab
t = tiledlayout(2,1);
nexttile
plot(mu1)
ylabel('\mu_{11}')
xlim([0 nchunk])
nexttile
h = plot(mc.Variables);
xlim([0 nchunk])
ylabel('Minimal Cost')
xline(MdlEarly.MetricsWarmupPeriod/numObsPerChunk,'r-.')
legend(h,mc.Properties.VariableNames)
xlabel(t,'Iteration')
```

The plots indicate that updateMetricsAndFit performs the following actions:

Fit during all incremental learning iterations.

Compute the performance metrics after the metrics warm-up period (red vertical line) only.

Compute the cumulative metrics during each iteration.

Compute the window metrics after processing 200 observations (4 iterations).

Process Expected Maximum Number of Classes Late in Sample

Create a second naive Bayes model for incremental learning, again specifying a maximum of 5 classes.

```matlab
MdlLate = incrementalClassificationNaiveBayes('MaxNumClasses',5)
```

```
MdlLate = 
  incrementalClassificationNaiveBayes

                    IsWarm: 0
                   Metrics: [1×2 table]
                ClassNames: [1×0 double]
            ScoreTransform: 'none'
         DistributionNames: 'normal'
    DistributionParameters: {}

  Properties, Methods
```

Move all observations labeled with class 5 to the end of the sample.

```matlab
idx5 = Y == 5;
Xnew = [X(~idx5,:); X(idx5,:)];
Ynew = [Y(~idx5); Y(idx5)];
```

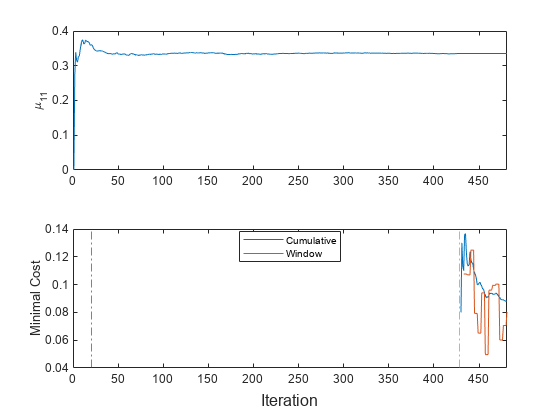

Fit the incremental model and plot the results.

```matlab
mcnew = array2table(zeros(nchunk,2),'VariableNames',["Cumulative" "Window"]);
mu1new = zeros(nchunk+1,1);   % one extra slot for the initial value, as in mu1

for j = 1:nchunk
    ibegin = min(n,numObsPerChunk*(j-1) + 1);
    iend = min(n,numObsPerChunk*j);
    idx = ibegin:iend;
    MdlLate = updateMetricsAndFit(MdlLate,Xnew(idx,:),Ynew(idx));
    mcnew{j,:} = MdlLate.Metrics{"MinimalCost",:};
    mu1new(j + 1) = MdlLate.DistributionParameters{1,1}(1);
end

t = tiledlayout(2,1);
nexttile
plot(mu1new)
ylabel('\mu_{11}')
xlim([0 nchunk])
nexttile
h = plot(mcnew.Variables);
xlim([0 nchunk])
ylabel('Minimal Cost')
xline(MdlLate.MetricsWarmupPeriod/numObsPerChunk,'r-.')
xline(sum(~idx5)/numObsPerChunk,'g-.')
legend(h,mcnew.Properties.VariableNames,'Location','best')
xlabel(t,'Iteration')
```

The updateMetricsAndFit function trains the model throughout incremental learning, but the function starts tracking performance metrics only after the model has processed observations from all 5 expected classes (the green vertical line in the bottom tile).

Create an incremental naive Bayes model when you know all the class names in the data.

Consider training a device to predict whether a subject is sitting, standing, walking, running, or dancing based on biometric data measured on the subject. The class names map 1 through 5 to an activity.

Create an incremental naive Bayes model for multiclass learning. Specify the class names.

```matlab
classnames = 1:5;
Mdl = incrementalClassificationNaiveBayes('ClassNames',classnames)
```

```
Mdl = 
  incrementalClassificationNaiveBayes

                    IsWarm: 0
                   Metrics: [1×2 table]
                ClassNames: [1 2 3 4 5]
            ScoreTransform: 'none'
         DistributionNames: 'normal'
    DistributionParameters: {5×0 cell}

  Properties, Methods
```

Mdl is an incrementalClassificationNaiveBayes model object. All its properties are read-only.

Mdl must be fit to data before you can use it to perform any other operations.

Load the human activity data set. Randomly shuffle the data.

```matlab
load humanactivity
n = numel(actid);
rng(1) % For reproducibility
idx = randsample(n,n);
X = feat(idx,:);
Y = actid(idx);
```

For details on the data set, enter Description at the command line.

Fit the incremental model to the training data by using the updateMetricsAndFit function. Simulate a data stream by processing chunks of 50 observations at a time. At each iteration:

Process 50 observations.

Overwrite the previous incremental model with a new one fitted to the incoming observations.

```matlab
% Preallocation
numObsPerChunk = 50;
nchunk = floor(n/numObsPerChunk);

% Incremental learning
for j = 1:nchunk
    ibegin = min(n,numObsPerChunk*(j-1) + 1);
    iend = min(n,numObsPerChunk*j);
    idx = ibegin:iend;
    Mdl = updateMetricsAndFit(Mdl,X(idx,:),Y(idx));
end
```

In addition to specifying the class names, prepare an incremental naive Bayes learner by specifying a metrics warm-up period, during which the updateMetricsAndFit function only fits the model. Specify a metrics window size of 500 observations.

Load the human activity data set. Randomly shuffle the data.

```matlab
load humanactivity
n = numel(actid);
rng(1) % For reproducibility
idx = randsample(n,n);
X = feat(idx,:);
Y = actid(idx);
```

The class names map 1 through 5 to an activity—sitting, standing, walking, running, or dancing, respectively—based on biometric data measured on the subject. For details on the data set, enter Description at the command line.

Create an incremental naive Bayes model for multiclass learning. Configure the model as follows:

Specify a metrics warm-up period of 5000 observations.

Specify a metrics window size of 500 observations.

Double the penalty to the classifier when it mistakenly classifies class 2.

Track the classification error and minimal cost to measure the performance of the model. You do not have to specify 'mincost' for Metrics because incrementalClassificationNaiveBayes always tracks this metric.

C = ones(5) - eye(5);
C(2,[1 3 4 5]) = 2;
Mdl = incrementalClassificationNaiveBayes('ClassNames',1:5, ...
    'MetricsWarmupPeriod',5000,'MetricsWindowSize',500, ...
    'Cost',C,'Metrics','classiferror')

Mdl =

incrementalClassificationNaiveBayes

IsWarm: 0

Metrics: [2×2 table]

ClassNames: [1 2 3 4 5]

ScoreTransform: 'none'

DistributionNames: 'normal'

DistributionParameters: {5×0 cell}

Properties, Methods

Mdl is an incrementalClassificationNaiveBayes model object configured for incremental learning.

Fit the incremental model to the data by using the updateMetricsAndFit function. At each iteration:

Simulate a data stream by processing a chunk of 50 observations.

Overwrite the previous incremental model with a new one fitted to the incoming observations.

Store the standard deviation of the first predictor variable in the first class (σ11), the cumulative metrics, and the window metrics to see how they evolve during incremental learning.

% Preallocation
numObsPerChunk = 50;
nchunk = floor(n/numObsPerChunk);
ce = array2table(zeros(nchunk,2),'VariableNames',["Cumulative" "Window"]);
mc = array2table(zeros(nchunk,2),'VariableNames',["Cumulative" "Window"]);
sigma11 = zeros(nchunk+1,1);

% Incremental fitting
for j = 1:nchunk
    ibegin = min(n,numObsPerChunk*(j-1) + 1);
    iend = min(n,numObsPerChunk*j);
    idx = ibegin:iend;
    Mdl = updateMetricsAndFit(Mdl,X(idx,:),Y(idx));
    ce{j,:} = Mdl.Metrics{"ClassificationError",:};
    mc{j,:} = Mdl.Metrics{"MinimalCost",:};
    sigma11(j + 1) = Mdl.DistributionParameters{1,1}(2);
end

Mdl is an incrementalClassificationNaiveBayes model object trained on all the data in the stream. During incremental learning and after the model is warmed up, updateMetricsAndFit checks the performance of the model on the incoming observations, and then fits the model to those observations.

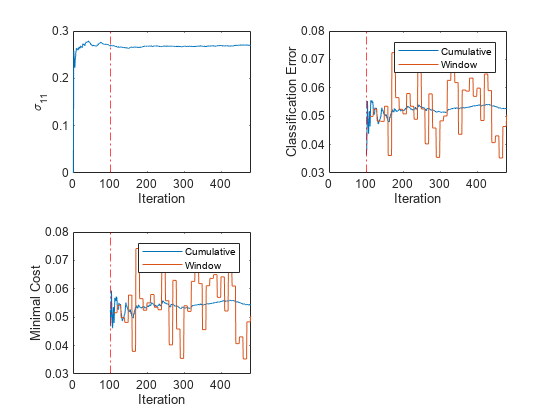

To see how the performance metrics and σ11 evolve during training, plot them on separate tiles.

tiledlayout(2,2)
nexttile
plot(sigma11)
ylabel('\sigma_{11}')
xlim([0 nchunk]);
xline(Mdl.MetricsWarmupPeriod/numObsPerChunk,'r-.')
xlabel('Iteration')
nexttile
h = plot(ce.Variables);
xlim([0 nchunk])
ylabel('Classification Error')
xline(Mdl.MetricsWarmupPeriod/numObsPerChunk,'r-.')
legend(h,ce.Properties.VariableNames)
xlabel('Iteration')
nexttile
h = plot(mc.Variables);
xlim([0 nchunk]);
ylabel('Minimal Cost')
xline(Mdl.MetricsWarmupPeriod/numObsPerChunk,'r-.')
legend(h,mc.Properties.VariableNames)
xlabel('Iteration')

The plots indicate that updateMetricsAndFit performs the following actions:

Fit the model during all incremental learning iterations.

Compute the performance metrics after the metrics warm-up period (red vertical line) only.

Compute the cumulative metrics during each iteration.

Compute the window metrics after processing 500 observations (10 iterations).

Train a naive Bayes model for multiclass classification by using fitcnb. Then, convert the model to an incremental learner, track its performance, and fit the model to streaming data. Carry over training options from traditional to incremental learning.

Load and Preprocess Data

Load the human activity data set. Randomly shuffle the data.

load humanactivity
rng(1) % For reproducibility
n = numel(actid);
idx = randsample(n,n);
X = feat(idx,:);
Y = actid(idx);

For details on the data set, enter Description at the command line.

Suppose that the data collected when the subject was idle (Y <= 2) has double the quality of the data collected when the subject was moving. Create a weight variable that assigns a weight of 2 to observations collected from an idle subject and a weight of 1 to observations collected from a moving subject.

W = ones(n,1) + (Y <= 2);
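The expression works because (Y <= 2) is a logical vector that MATLAB converts to 0s and 1s when you add it to a numeric array. A quick check on a short, hypothetical label vector shows the resulting weights.

```matlab
% Hypothetical labels: 1 = sitting, 2 = standing, 3 = walking, 4 = running, 5 = dancing
Ytoy = [1; 2; 3; 4; 5; 2];
% Adding the logical mask to ones() gives 2 for idle (Y <= 2) and 1 for moving subjects
Wtoy = ones(numel(Ytoy),1) + (Ytoy <= 2);
% Wtoy is [2; 2; 1; 1; 1; 2]
```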

Train Naive Bayes Model

Fit a naive Bayes model for multiclass classification to a random sample of half the data.

idxtt = randsample([true false],n,true);

TTMdl = fitcnb(X(idxtt,:),Y(idxtt),'Weights',W(idxtt))

TTMdl =

ClassificationNaiveBayes

ResponseName: 'Y'

CategoricalPredictors: []

ClassNames: [1 2 3 4 5]

ScoreTransform: 'none'

NumObservations: 12053

DistributionNames: {1×60 cell}

DistributionParameters: {5×60 cell}

Properties, Methods

TTMdl is a ClassificationNaiveBayes model object representing a traditionally trained naive Bayes model.

Convert Trained Model

Convert the traditionally trained naive Bayes model to a naive Bayes classification model for incremental learning.

IncrementalMdl = incrementalLearner(TTMdl)

IncrementalMdl =

incrementalClassificationNaiveBayes

IsWarm: 1

Metrics: [1×2 table]

ClassNames: [1 2 3 4 5]

ScoreTransform: 'none'

DistributionNames: {1×60 cell}

DistributionParameters: {5×60 cell}

Properties, Methods

Separately Track Performance Metrics and Fit Model

Perform incremental learning on the rest of the data by using the updateMetrics and fit functions. Simulate a data stream by processing 50 observations at a time. At each iteration:

Call updateMetrics to update the cumulative and window minimal cost of the model given the incoming chunk of observations. Overwrite the previous incremental model to update the losses in the Metrics property. Note that the function does not fit the model to the chunk of data; the chunk is "new" data for the model. Specify the observation weights.

Call fit to fit the model to the incoming chunk of observations. Overwrite the previous incremental model to update the model parameters. Specify the observation weights.

Store the minimal cost and the mean of the first predictor variable of the first class (μ11).

% Preallocation
idxil = ~idxtt;
nil = sum(idxil);
numObsPerChunk = 50;
nchunk = floor(nil/numObsPerChunk);
mc = array2table(zeros(nchunk,2),'VariableNames',["Cumulative" "Window"]);
% Initial value of mu11 followed by one entry per chunk
mu11 = [IncrementalMdl.DistributionParameters{1,1}(1); zeros(nchunk,1)];
Xil = X(idxil,:);
Yil = Y(idxil);
Wil = W(idxil);

% Incremental fitting
for j = 1:nchunk
    ibegin = min(nil,numObsPerChunk*(j-1) + 1);
    iend = min(nil,numObsPerChunk*j);
    idx = ibegin:iend;
    IncrementalMdl = updateMetrics(IncrementalMdl,Xil(idx,:),Yil(idx), ...
        'Weights',Wil(idx));
    mc{j,:} = IncrementalMdl.Metrics{"MinimalCost",:};
    IncrementalMdl = fit(IncrementalMdl,Xil(idx,:),Yil(idx),'Weights',Wil(idx));
    mu11(j+1) = IncrementalMdl.DistributionParameters{1,1}(1);
end

IncrementalMdl is an incrementalClassificationNaiveBayes model object trained on all the data in the stream.

Alternatively, you can use updateMetricsAndFit to update the performance metrics of the model given a new chunk of data, and then fit the model to the data.

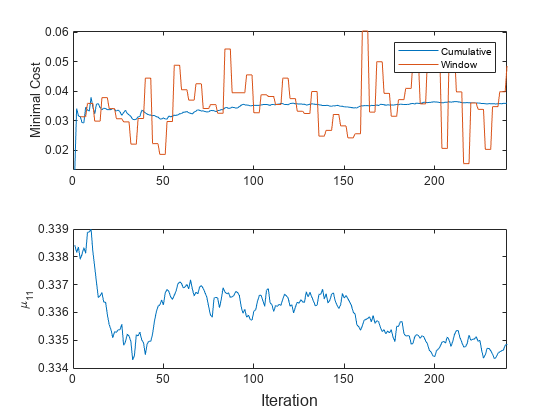

Plot a trace plot of the performance metrics and μ11.

t = tiledlayout(2,1);
nexttile
h = plot(mc.Variables);
xlim([0 nchunk])
ylabel('Minimal Cost')
legend(h,mc.Properties.VariableNames)
nexttile
plot(mu11)
ylabel('\mu_{11}')
xlim([0 nchunk])
xlabel(t,'Iteration')

The cumulative loss levels off quickly and is stable, whereas the window loss jumps throughout the training.

μ11 changes abruptly at first, then gradually levels off as fit processes more chunks.

More About

In the bag-of-tokens model, the value of predictor j is the nonnegative number of occurrences of token j in the observation. The number of categories (bins) in the multinomial model is the number of distinct tokens (number of predictors).
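As an illustration of this encoding, the following minimal sketch converts one observation, given as a list of token indices over a hypothetical four-token vocabulary, into a row of nonnegative token counts. Each column of the row is one predictor.

```matlab
numTokens = 4;                    % hypothetical vocabulary size (= number of predictors)
docTokens = [2 4 2 1 2];          % token indices that occur in one observation
% accumarray counts occurrences of each index; the size argument keeps unseen tokens at 0
x = accumarray(docTokens(:),1,[numTokens 1])';
% x is [1 3 0 1]: token 1 once, token 2 three times, token 3 never, token 4 once
```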

Incremental learning, or online learning, is a branch of machine learning concerned with processing incoming data from a data stream, possibly given little to no knowledge of the distribution of the predictor variables, aspects of the prediction or objective function (including tuning parameter values), or whether the observations are labeled. Incremental learning differs from traditional machine learning, where enough labeled data is available to fit a model, perform cross-validation to tune hyperparameters, and infer the predictor distribution.

Given incoming observations, an incremental learning model processes data in any of the following ways, but usually in this order:

Predict labels.

Measure the predictive performance.

Check for structural breaks or drift in the model.

Fit the model to the incoming observations.

For more details, see Incremental Learning Overview.

Algorithms

The updateMetrics and updateMetricsAndFit functions track model performance metrics (Metrics) from new data only when the incremental model is warm (IsWarm property is true).

If you create an incremental model by using incrementalLearner and MetricsWarmupPeriod is 0 (default for incrementalLearner), the model is warm at creation.

Otherwise, an incremental model becomes warm after fit or updateMetricsAndFit performs both of these actions:

Fit the incremental model to MetricsWarmupPeriod observations, which is the metrics warm-up period.

Fit the incremental model to all expected classes (see the MaxNumClasses and ClassNames arguments of incrementalClassificationNaiveBayes).
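The following sketch expresses the two warm-up conditions as plain bookkeeping so that you can see why a model can remain cold even after it has seen enough observations. It is not the toolbox implementation. The variable names and the label chunks are hypothetical and do not correspond to model properties.

```matlab
metricsWarmupPeriod = 100;                 % hypothetical MetricsWarmupPeriod
expectedClasses = [1 2 3];                 % hypothetical ClassNames
chunks = {[ones(60,1); 2*ones(60,1)], ...  % 120 labels, but class 3 never appears
          3*ones(10,1)};                   % 10 labels from class 3

numObsFit = 0;                             % observations fit so far
seenClasses = zeros(0,1);                  % classes seen by the fitting functions so far
warmAfter = false(1,numel(chunks));
for c = 1:numel(chunks)
    numObsFit = numObsFit + numel(chunks{c});
    seenClasses = union(seenClasses, chunks{c});
    % Warm only when BOTH conditions hold: enough observations and every expected class seen
    warmAfter(c) = numObsFit >= metricsWarmupPeriod && ...
        all(ismember(expectedClasses, seenClasses));
end
% warmAfter is [false true]: after chunk 1 the count suffices but class 3 is missing
```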

The Metrics property of the incremental model stores two forms of each performance metric as variables (columns) of a table, Cumulative and Window, with individual metrics in rows. When the incremental model is warm, updateMetrics and updateMetricsAndFit update the metrics at the following frequencies:

Cumulative: The functions compute cumulative metrics since the start of model performance tracking. The functions update metrics every time you call the functions and base the calculation on the entire supplied data set.

Window: The functions compute metrics based on all observations within a window determined by the MetricsWindowSize name-value argument. MetricsWindowSize also determines the frequency at which the software updates Window metrics. For example, if MetricsWindowSize is 20, the functions compute metrics based on the last 20 observations in the supplied data (X((end - 20 + 1):end,:) and Y((end - 20 + 1):end)).

Incremental functions that track performance metrics within a window use the following process:

Store a buffer of length MetricsWindowSize for each specified metric, and store a buffer of observation weights.

Populate elements of the metrics buffer with the model performance based on batches of incoming observations, and store corresponding observation weights in the weights buffer.

When the buffer is full, overwrite Mdl.Metrics.Window with the weighted average performance in the metrics window. If the buffer overfills when the function processes a batch of observations, the latest incoming MetricsWindowSize observations enter the buffer, and the earliest observations are removed from the buffer. For example, suppose MetricsWindowSize is 20, the metrics buffer has 10 values from a previously processed batch, and 15 values are incoming. To compose the length 20 window, the functions use the measurements from the 15 incoming observations and the latest 5 measurements from the previous batch.
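The buffer update in the last example (MetricsWindowSize is 20, 10 buffered values, 15 incoming values) can be sketched with plain vectors. The per-observation losses and weights below are hypothetical placeholders, and the sketch only illustrates which values survive and how a weighted average over the full window is formed. It is not the toolbox's internal code.

```matlab
windowSize = 20;                          % MetricsWindowSize
prevLoss = (1:10)';                       % 10 buffered per-observation losses (placeholders)
prevW = ones(10,1);                       % their weights
newLoss = (101:115)';                     % 15 incoming losses (placeholders)
newW = 2*ones(15,1);                      % their weights

% Append the incoming batch, then keep only the latest windowSize entries
lossBuf = [prevLoss; newLoss];
wBuf = [prevW; newW];
lossBuf = lossBuf(end-windowSize+1:end);  % latest 5 old values followed by all 15 new ones
wBuf = wBuf(end-windowSize+1:end);

% The buffer is full, so the window metric is the weighted average over it
windowMetric = sum(wBuf.*lossBuf)/sum(wBuf);
```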

The software omits an observation with a NaN score when computing the Cumulative and Window performance metric values.
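For example, the following sketch excludes an observation with NaN scores before computing a weighted classification error. The score matrix, labels, and weights are hypothetical, and the sketch assumes that the class names are 1 through 3 so that the column index of the largest score is the predicted label.

```matlab
scores = [0.7 0.2 0.1;
          NaN NaN NaN;                      % observation with a NaN score
          0.1 0.3 0.6;
          0.5 0.4 0.1];
trueLabels = [1; 2; 2; 1];
w = ones(4,1);                              % observation weights

keep = ~any(isnan(scores),2);               % omit observations with NaN scores
[~, predicted] = max(scores(keep,:),[],2);  % predicted class = column of the largest score
err = sum(w(keep).*(predicted ~= trueLabels(keep)))/sum(w(keep));
% err is 1/3: one of the three retained observations is misclassified
```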

If predictor variable j has a conditional normal distribution (see the DistributionNames property), the software fits the distribution to the data by computing the class-specific weighted mean and the biased (maximum likelihood) estimate of the weighted standard deviation. For each class k:

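The weighted mean of predictor j is

$$\bar{x}_{j|k} = \frac{\sum_{\{i:\, y_i = k\}} w_i x_{ij}}{\sum_{\{i:\, y_i = k\}} w_i},$$

where $w_i$ is the weight for observation i. The software normalizes weights within a class such that they sum to the prior probability for that class.

The biased (maximum likelihood) estimator of the weighted standard deviation of predictor j is

$$s_{j|k} = \left[\frac{\sum_{\{i:\, y_i = k\}} w_i \left(x_{ij} - \bar{x}_{j|k}\right)^2}{\sum_{\{i:\, y_i = k\}} w_i}\right]^{1/2}.$$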
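The following minimal sketch computes the class-specific weighted mean and biased weighted standard deviation for one predictor and one class. The predictor values, weights, and prior are hypothetical. Because both quantities are ratios of weighted sums, normalizing the weights to sum to the class prior does not change the result.

```matlab
% Hypothetical values of predictor j for the observations in class k, and their weights
x = [1; 2; 4; 7];
w = [1; 1; 2; 4];
prior = 0.25;                               % hypothetical prior probability of class k

wn = prior*w/sum(w);                        % normalize weights to sum to the class prior
xbar = sum(wn.*x)/sum(wn);                  % weighted mean (4.875)
s = sqrt(sum(wn.*(x - xbar).^2)/sum(wn));   % biased (maximum likelihood) weighted std
```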

If all predictor variables compose a conditional multinomial distribution (see the DistributionNames property), the software fits the distribution using the Bag-of-Tokens Model. The software stores the probability that token j appears in class k in the property DistributionParameters{k,j}. With additive smoothing [1], the estimated probability is

$$P(\text{token } j \mid \text{class } k) = \frac{1 + c_{j|k}}{P + c_k},$$

where:

$c_{j|k} = n_k \dfrac{\sum_{\{i:\, y_i = k\}} x_{ij} w_i}{\sum_{\{i:\, y_i = k\}} w_i}$, which is the weighted number of occurrences of token j in class k.

$n_k$ is the number of observations in class k.

$w_i$ is the weight for observation i. The software normalizes weights within a class so that they sum to the prior probability for that class.

$c_k = \sum_{j=1}^{P} c_{j|k}$, which is the total weighted number of occurrences of all tokens in class k.

P is the number of distinct tokens (the number of predictors).
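The following minimal sketch computes the smoothed token probabilities (1 + c_jk)/(P + c_k) for one class. It uses a hypothetical count matrix with two observations and three tokens. Here c_jk is the weighted number of occurrences of token j in class k, and c_k is its sum over tokens. The resulting probabilities sum to 1 across tokens.

```matlab
% Hypothetical token counts: rows are observations in class k, columns are P = 3 tokens
Xk = [2 0 1;
      0 0 3];
w = [1; 3];                       % observation weights within class k
nk = size(Xk,1);                  % number of observations in class k
P = size(Xk,2);                   % number of tokens (predictors)

c_jk = nk*(w'*Xk)/sum(w);         % weighted occurrences of each token in class k: [1 0 5]
c_k = sum(c_jk);                  % total weighted occurrences of all tokens: 6
probs = (1 + c_jk)/(P + c_k);     % additive (Laplace) smoothing: [2 1 6]/9
```

With smoothing, token 2 gets a nonzero probability even though it never occurs in class k.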

If predictor variable j has a conditional multivariate multinomial distribution (see the DistributionNames property), the software follows this procedure:

The software collects a list of the unique levels, stores the sorted list in CategoricalLevels, and considers each level a bin. Each combination of predictor and class is a separate, independent multinomial random variable.

For each class k, the software counts instances of each categorical level using the list stored in CategoricalLevels{j}.

The software stores the probability that predictor j in class k has level L in the property DistributionParameters{k,j}, for all levels in CategoricalLevels{j}. With additive smoothing [1], the estimated probability is

$$P(\text{predictor } j = L \mid \text{class } k) = \frac{1 + m_{j|k}(L)}{m_j + m_k},$$

where:

$m_{j|k}(L) = n_k \dfrac{\sum_{\{i:\, y_i = k\}} I\{x_{ij} = L\}\, w_i}{\sum_{\{i:\, y_i = k\}} w_i}$, which is the weighted number of observations for which predictor j equals L in class k.

$n_k$ is the number of observations in class k.

$I\{x_{ij} = L\} = 1$ if $x_{ij} = L$, and 0 otherwise.

$w_i$ is the weight for observation i. The software normalizes weights within a class so that they sum to the prior probability for that class.

$m_j$ is the number of distinct levels in predictor j.

$m_k$ is the weighted number of observations in class k.
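The following minimal sketch computes the smoothed level probabilities (1 + m_jk(L))/(m_j + m_k) for one categorical predictor in one class. The levels, values, and weights are hypothetical. The sketch assumes that m_k, the weighted number of observations in class k, equals the sum of the weighted level counts, which reduces to n_k. Under that assumption, the probabilities sum to 1.

```matlab
% Hypothetical categorical predictor j for the observations in class k
levels = [1 2 3];                 % sorted unique levels (plays the role of CategoricalLevels{j})
xj = [1; 3; 3; 1];                % values of predictor j in class k
w = [1; 1; 2; 4];                 % observation weights in class k
nk = numel(xj);                   % number of observations in class k
mj = numel(levels);               % number of distinct levels of predictor j

m_jk = zeros(1,mj);
for t = 1:mj
    % Weighted number of observations whose value equals levels(t)
    m_jk(t) = nk*sum(w.*(xj == levels(t)))/sum(w);
end
mk = sum(m_jk);                   % assumed weighted class size; equals nk here
probs = (1 + m_jk)/(mj + mk);     % additive smoothing: [3.5 1 2.5]/7
```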

References

[1] Manning, Christopher D., Prabhakar Raghavan, and Hinrich Schütze. Introduction to Information Retrieval. NY: Cambridge University Press, 2008.

Version History

Introduced in R2021a

Starting in R2021b, the naive Bayes incremental fitting functions fit and updateMetricsAndFit compute biased (maximum likelihood) estimates of the weighted standard deviations for conditionally normal predictor variables during training. In other words, for each class k, incremental fitting functions normalize the weighted sum of squared deviations of the conditionally normal predictor xj by the sum of the weights in class k. Before R2021b, naive Bayes incremental fitting functions computed the unbiased standard deviation, like fitcnb. The currently returned weighted standard deviation estimates differ from those computed before R2021b by a factor of

$$\sqrt{1 - \frac{\sum_{\{i:\, y_i = k\}} w_i^2}{\left(\sum_{\{i:\, y_i = k\}} w_i\right)^2}}.$$

The factor approaches 1 as the sample size increases.
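The following numerical check relates the two estimators. It assumes that the pre-R2021b unbiased estimator has the reliability-weighted form sqrt(SS/(z1 - z2/z1)), where SS is the weighted sum of squared deviations in class k and z1 and z2 are the sums of the weights and squared weights in that class. Under that assumption, the biased estimate equals the unbiased estimate multiplied by sqrt(1 - z2/z1^2). The data values are hypothetical.

```matlab
x = [1; 2; 4; 7];                       % hypothetical predictor values in class k
w = [1; 1; 2; 4];                       % hypothetical observation weights
z1 = sum(w);                            % sum of weights
z2 = sum(w.^2);                         % sum of squared weights
xbar = sum(w.*x)/z1;                    % weighted mean
ss = sum(w.*(x - xbar).^2);             % weighted sum of squared deviations

sBiased = sqrt(ss/z1);                  % R2021b and later
sUnbiased = sqrt(ss/(z1 - z2/z1));      % assumed pre-R2021b estimator
scaleFactor = sqrt(1 - z2/z1^2);        % ratio between the two estimates
```

With n equal weights, z2/z1^2 = 1/n, so the factor reduces to sqrt((n - 1)/n), which approaches 1 as n grows.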

See Also

Functions

Topics

- Incremental Learning Overview

- Configure Incremental Learning Model

- Implement Incremental Learning for Classification Using Succinct Workflow

- Implement Incremental Learning for Classification Using Flexible Workflow

- Perform Text Classification Incrementally

- Incremental Learning with Naive Bayes and Heterogeneous Data
