monovslam

Visual simultaneous localization and mapping (vSLAM) and visual-inertial sensor fusion with a monocular camera

Since R2025a

Description

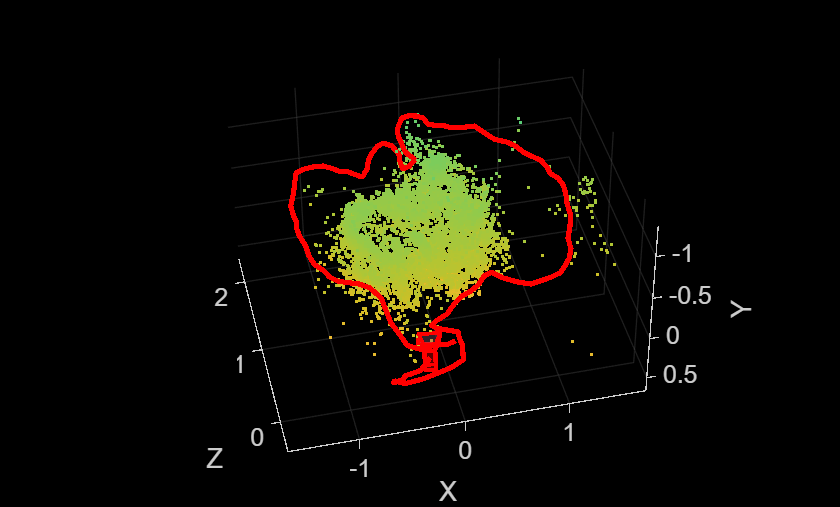

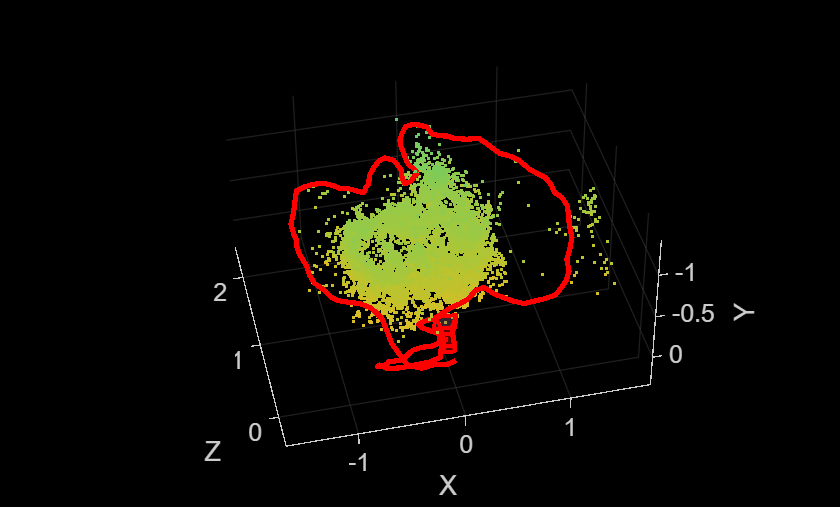

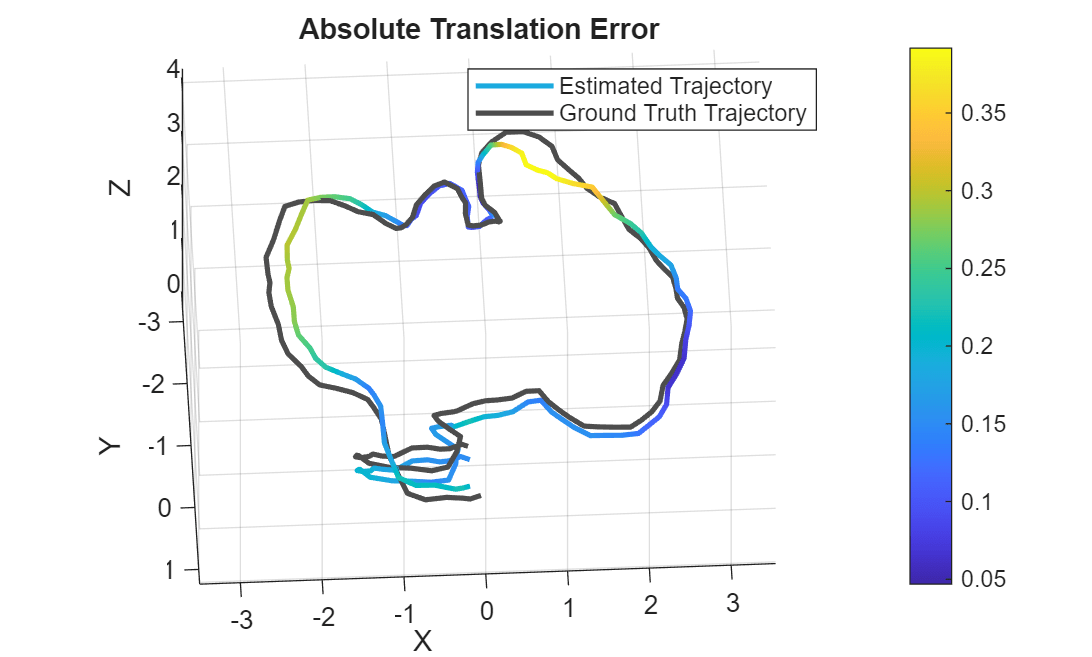

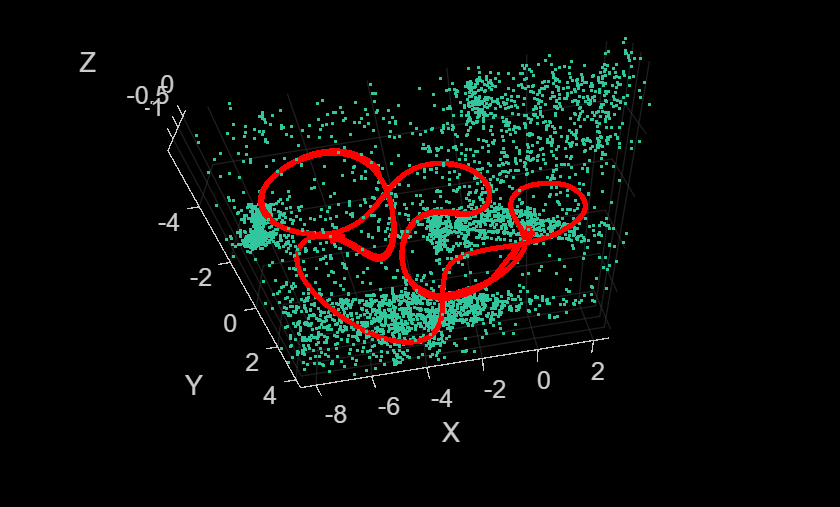

Use the monovslam object to perform visual simultaneous localization and mapping (vSLAM) and visual-inertial SLAM (viSLAM) with a monocular camera. The object extracts Oriented FAST and Rotated BRIEF (ORB) features from incrementally read images, and then tracks those features to estimate camera poses, identify key frames, and reconstruct a 3-D environment. The vSLAM algorithm also searches for loop closures using the bag-of-features algorithm, and then optimizes the camera poses using pose graph optimization.

You can improve the accuracy and robustness of SLAM by combining the camera images with IMU data to perform visual-inertial sensor fusion. To learn more about visual SLAM, see Implement Visual SLAM in MATLAB (Computer Vision Toolbox).

Creation

Syntax

Description

vslam = monovslam(intrinsics) creates a monocular visual SLAM object, vslam, by using the camera intrinsic parameters.
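A minimal sketch of this syntax, building the intrinsics with cameraIntrinsics. The focal length, principal point, and image size below are placeholder values assumed for illustration, not calibration results; substitute the values from your own camera calibration.

```matlab
% Placeholder camera parameters (assumed values, not a real calibration)
focalLength    = [535.4 539.2];   % [fx fy], in pixels
principalPoint = [320.1 247.6];   % [cx cy], in pixels
imageSize      = [480 640];       % [numRows numColumns], in pixels

% Store the intrinsic parameters in a cameraIntrinsics object
intrinsics = cameraIntrinsics(focalLength,principalPoint,imageSize);

% Create the monocular visual SLAM object
vslam = monovslam(intrinsics);
```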

The monovslam object does not account for lens distortion. You can use the undistortImage (Computer Vision Toolbox) function to undistort images before adding them to the object.
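One way to follow this advice is to undistort each frame just before adding it. This sketch assumes placeholder radial distortion coefficients and a hypothetical image file name, frame0001.png; by default, undistortImage returns an image of the same size as the input.

```matlab
% Placeholder intrinsics with assumed radial distortion coefficients
intrinsics = cameraIntrinsics([535.4 539.2],[320.1 247.6],[480 640], ...
    RadialDistortion=[0.26 -0.95]);

vslam = monovslam(intrinsics);   % the object does not model lens distortion

I = imread("frame0001.png");        % hypothetical image file
J = undistortImage(I,intrinsics);   % remove lens distortion first
addFrame(vslam,J);                  % then add the corrected frame
```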

The object represents 3-D map points and camera poses in world coordinates. The object assumes that the camera pose of the first key frame is an identity rigidtform3d (Image Processing Toolbox) transform.
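Because the first key frame has the identity pose, the world coordinate system coincides with the camera frame of that key frame. The sketch below only shows what an identity rigidtform3d looks like; it does not query a monovslam object.

```matlab
% Pose of the first key frame: identity rotation and zero translation
firstKeyFramePose = rigidtform3d;   % no arguments creates the identity transform

disp(firstKeyFramePose.R)            % 3-by-3 identity matrix
disp(firstKeyFramePose.Translation)  % [0 0 0]

% Transforming a point with the identity pose leaves it unchanged
p = transformPointsForward(firstKeyFramePose,[1 2 3]);
```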

Note

The monovslam object runs on multiple threads internally, which can delay the processing of an image frame added by using the addFrame function. Additionally, because the object runs on multiple threads, the frame that the object is currently processing can differ from the most recently added frame.
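Because processing can lag behind addFrame, one pattern is to add all frames and then wait for isDone before reading results. A sketch, assuming placeholder intrinsics and a hypothetical folder, myImages, that contains the frames in order:

```matlab
intrinsics = cameraIntrinsics([535.4 539.2],[320.1 247.6],[480 640]); % placeholder values
vslam = monovslam(intrinsics);

imds = imageDatastore("myImages");   % hypothetical folder of sequential frames
while hasdata(imds)
    addFrame(vslam,read(imds));      % processing of this frame may still be pending
end

% Wait until the object has finished processing every added frame
while ~isDone(vslam)
    pause(0.1);
end

[camPoses,viewIds] = poses(vslam);   % key-frame poses after all frames are processed
```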

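vslam = monovslam(intrinsics,imuParameters) creates a monocular visual-inertial SLAM object, vslam, that fuses camera input, described by the intrinsic parameters intrinsics, with inertial measurement unit (IMU) readings, described by the IMU parameters imuParameters.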

vslam = monovslam(intrinsics,PropertyName=Value) sets properties using one or more name-value arguments. For example, MaxNumFeatures=850 sets the maximum number of ORB feature points to extract from each image to 850.
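A minimal sketch of the name-value syntax, using the MaxNumFeatures property from the description; the intrinsics are placeholder values assumed for illustration.

```matlab
intrinsics = cameraIntrinsics([535.4 539.2],[320.1 247.6],[480 640]); % placeholder values

% Extract at most 850 ORB feature points from each image
vslam = monovslam(intrinsics,MaxNumFeatures=850);
```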

Input Arguments

Properties

Object Functions

| Function | Description |
|---|---|
| addFrame | Add image frame to visual SLAM object |
| hasNewKeyFrame | Check if new key frame added in visual SLAM object |
| checkStatus | Check status of visual SLAM object |
| isDone | End-of-processing status for visual SLAM object |
| mapPoints | Build 3-D map of world points |
| poses | Absolute camera poses of key frames |
| plot | Plot 3-D map points and estimated camera trajectory in visual SLAM |
| reset | Reset visual SLAM object |
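The object functions typically combine into a single processing loop. This sketch assumes placeholder intrinsics and a hypothetical image folder, myImages; it redraws the map only when a new key frame appears, then retrieves the map points and key-frame poses once processing is complete.

```matlab
% Placeholder intrinsics and a hypothetical image folder (assumptions)
intrinsics = cameraIntrinsics([535.4 539.2],[320.1 247.6],[480 640]);
imds = imageDatastore("myImages");

vslam = monovslam(intrinsics);

while hasdata(imds)
    I = read(imds);
    addFrame(vslam,I);          % add the frame for tracking and mapping

    if hasNewKeyFrame(vslam)    % redraw only when a new key frame was added
        plot(vslam);
    end
end

% Keep updating the plot until all added frames are processed
while ~isDone(vslam)
    plot(vslam);
end

xyzPoints = mapPoints(vslam);        % 3-D map points in world coordinates
[camPoses,viewIds] = poses(vslam);   % absolute camera poses of the key frames

reset(vslam);                        % reset the object before reusing it on a new sequence
```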

Examples


Extended Capabilities

Version History

Introduced in R2025a

See Also

Objects

stereovslam | rgbdvslam (Computer Vision Toolbox) | factorGraph | factorIMUParameters | cameraIntrinsics (Computer Vision Toolbox) | imageDatastore

Topics

- Implement Visual SLAM in MATLAB (Computer Vision Toolbox)

- Performant and Deployable Monocular Visual SLAM (Computer Vision Toolbox)

- Monocular Visual Simultaneous Localization and Mapping (Computer Vision Toolbox)

- Monocular Visual-Inertial SLAM (Computer Vision Toolbox)