Perform Large Matrix Multiplication on FPGA External DDR Memory Using Ethernet-Based AXI Manager

This example shows how to use the Ethernet-based AXI manager to access external memory connected to an FPGA. This example also shows how to:

Generate an HDL IP core with an AXI4 Master interface.

Access large matrices from the external DDR3 memory on the AMD® Kintex®-7 KC705 board using the Ethernet-based AXI manager interface.

Perform matrix vector multiplication in the HDL IP core and write the output result back to the DDR3 memory using the AXI4 Master interface.

Requirements

To run this example, you must have this software and hardware installed and set up.

AMD Vivado® Design Suite, with a supported version listed in the FPGA Verification Requirements

AMD Kintex-7 KC705 Evaluation Kit

JTAG cable and Ethernet cable for connecting to KC705 FPGA

HDL Coder™ Support Package for Xilinx FPGA and SoC Devices

HDL Verifier™ Support Package for AMD FPGA and SoC Devices

Introduction

This example models a matrix vector multiplication algorithm and implements it on the AMD Kintex-7 KC705 board. Large matrices might not map efficiently to block RAMs in the FPGA fabric, so the example stores the matrices in the external DDR3 memory on the FPGA board instead. The Ethernet-based AXI manager interface can access the data by communicating with the vendor-provided memory interface IP cores that connect to the DDR3 memory. This capability enables you to model algorithms that process large amounts of data and require high-throughput DDR access, such as matrix operations and computer vision algorithms.
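A rough storage estimate shows why external memory is needed at the upper end of the size range. The Python sketch below is a back-of-the-envelope calculation, not part of the example. It assumes that each element occupies one 32-bit word and uses approximately 16,020 Kb as the total block RAM of the XC7K325T device on the KC705; check the device data sheet for your part.

```python
# Back-of-the-envelope storage estimate (not part of the example).
# Assumptions: one 32-bit word per element; about 16,020 Kb of block RAM
# on the XC7K325T device (check the data sheet for your part).

BITS_PER_ELEMENT = 32                 # assumed element width
BRAM_BITS_XC7K325T = 16_020 * 1024    # approximate total block RAM, in bits


def matrix_bits(n, bits_per_element=BITS_PER_ELEMENT):
    """Storage for the n-by-n matrix plus the n-by-1 vector, in bits."""
    return (n * n + n) * bits_per_element


def fits_in_bram(n):
    """True if the whole problem would fit in on-chip block RAM."""
    return matrix_bits(n) <= BRAM_BITS_XC7K325T


if __name__ == "__main__":
    for n in (100, 500, 4000):
        mib = matrix_bits(n) / 8 / 2**20
        print(f"N={n:5d}: {mib:8.2f} MiB, fits in BRAM: {fits_in_bram(n)}")
```

Under these assumptions, a 4000-by-4000 problem needs about 61 MiB, far more than the on-chip block RAM, even though small sizes could still fit.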

The matrix vector multiplication module supports fixed-point matrix vector multiplication with a configurable matrix size from 2 to 4000. The matrix size is configurable at run time through an AXI4-accessible register.

Open the model by entering this command at the MATLAB® command prompt.

modelname = 'hdlcoder_external_memory_axi_master';

open_system(modelname);

Model Algorithm

This example model includes an FPGA-implementable design under test (DUT) block, a DDR functional behavior block, and a test environment to drive inputs and verify the expected outputs.

The DUT subsystem contains an AXI4 Master read and write controller along with a matrix vector multiplication module. Using the AXI4 Master interface, the DUT subsystem reads data from the external DDR3 memory, feeds the data into the Matrix_Vector_Multiplication module, and then writes the output data back to the external DDR3 memory. The DUT module has several parameter ports. These ports are mapped to AXI4-accessible registers, so you can adjust these parameters from MATLAB even after you implement the design on the FPGA.

The matrix_mul_on port controls whether the Matrix_Vector_Multiplication module runs. When matrix_mul_on is true, the DUT subsystem performs the matrix vector multiplication described earlier in this example. When matrix_mul_on is false, the DUT subsystem operates in data loopback mode: it reads data from the external DDR3 memory, writes it into the Internal_Memory module, and then writes the same data back to the external DDR3 memory. Use the loopback mode to verify the AXI4 Master access to the external DDR3 memory on its own.
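As a behavioral sketch (hypothetical Python, not generated from the model), the two modes selected by matrix_mul_on can be summarized as follows. The DDR layout it assumes, the vector followed by the matrix row by row, comes from the Model Algorithm description. The loopback branch simply returns the words it receives, standing in for the Internal_Memory round trip.

```python
def dut_behavior(ddr_words, n, matrix_mul_on):
    """Behavioral sketch of the two DUT modes (hypothetical helper).

    ddr_words: words read from DDR, the n-element vector first, then the
    n-by-n matrix in row-major order (assumed layout).
    """
    if not matrix_mul_on:
        # Loopback mode: data passes through internal memory unchanged and
        # is written back to DDR as-is.
        return list(ddr_words)
    vector = ddr_words[:n]
    matrix = [ddr_words[n + r * n : n + (r + 1) * n] for r in range(n)]
    # Matrix vector mode: one dot product per matrix row.
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


if __name__ == "__main__":
    words = [1, 2, 3, 4, 5, 6]            # vector [1, 2], matrix [[3, 4], [5, 6]]
    print(dut_behavior(words, 2, True))   # -> [11, 17]
    print(dut_behavior(words, 2, False))  # -> [1, 2, 3, 4, 5, 6]
```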

Inside the DUT subsystem, the Matrix_Vector_Multiplication module uses a multiply-add block to compute, in streaming fashion, the dot product of each matrix row with the vector.

If A is a matrix of size N-by-N and B is a vector of size N-by-1, then the matrix vector multiplication output is Z = A x B, which is of size N-by-1.

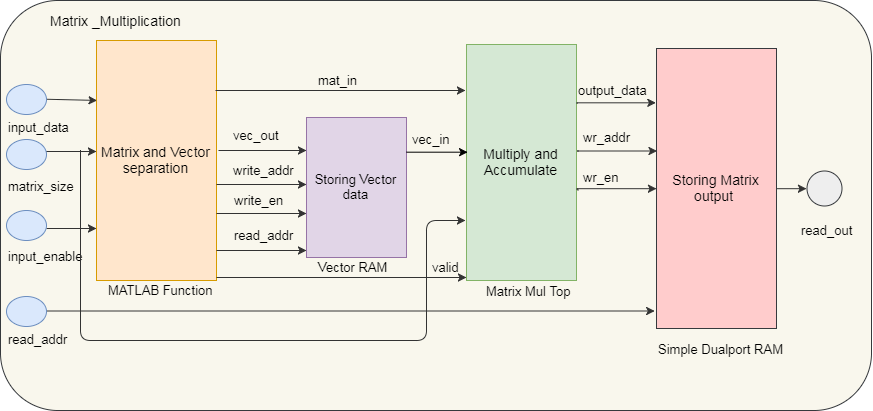
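Written element by element, each output is the dot product of one row of A with B. One way to read the streaming multiply-add computation (an interpretation of the description, not the block's exact pipeline) is as a running sum that adds one product per clock cycle, so each output element takes N cycles:

```latex
\begin{aligned}
z_i &= \sum_{j=1}^{N} a_{ij}\, b_j, \qquad i = 1, \dots, N \\
s_i^{(0)} &= 0, \qquad s_i^{(j)} = s_i^{(j-1)} + a_{ij}\, b_j, \qquad z_i = s_i^{(N)}
\end{aligned}
```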

The first N values read from DDR are treated as the N-by-1 vector, and the N-by-N matrix data follows. The vector values are stored in the Vector_RAM. From the (N+1)th value onward, the data is streamed directly as matrix data while the vector data is read from the Vector_RAM in parallel, and both are fed into the Matrix_mul_top subsystem. The first output element is available after N clock cycles and is stored in the output RAM. The Vector_RAM read address is then reset to 0, and the same vector data is read again for the next matrix row. This operation repeats for every row of the matrix.
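The streaming schedule described above can be sketched cycle by cycle in Python. This is a hypothetical behavioral model, not the generated HDL: it assumes that one DDR word arrives per clock cycle, ignores pipeline latency, and counts cycles from the start of the matrix stream.

```python
def stream_matvec(ddr_words, n):
    """Cycle-level sketch of the streaming matrix vector multiply.

    Returns (output_ram, ready_cycles), where ready_cycles[i] is the cycle,
    counted from the start of the matrix stream, at which output i is stored.
    """
    vector_ram = list(ddr_words[:n])   # first N values fill the Vector_RAM
    output_ram, ready_cycles = [], []
    acc, vec_addr = 0, 0               # accumulator and Vector_RAM read address
    for cycle, a in enumerate(ddr_words[n:n + n * n], start=1):
        acc += a * vector_ram[vec_addr]   # one multiply-add per cycle
        vec_addr += 1
        if vec_addr == n:                 # a full row has been consumed
            output_ram.append(acc)
            ready_cycles.append(cycle)
            acc, vec_addr = 0, 0          # reset the address for the next row
    return output_ram, ready_cycles


if __name__ == "__main__":
    # Vector [1, 2, 3] followed by the rows [1, 0, 0], [0, 1, 0], [1, 1, 1].
    words = [1, 2, 3, 1, 0, 0, 0, 1, 0, 1, 1, 1]
    print(stream_matvec(words, 3))   # -> ([1, 2, 6], [3, 6, 9])
```

In the 3-by-3 usage example, the outputs become ready at cycles 3, 6, and 9, matching the one-output-every-N-cycles pattern.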

This diagram shows the architecture of the Matrix_Vector_Multiplication module.

Generate HDL IP core with Ethernet-Based AXI Manager

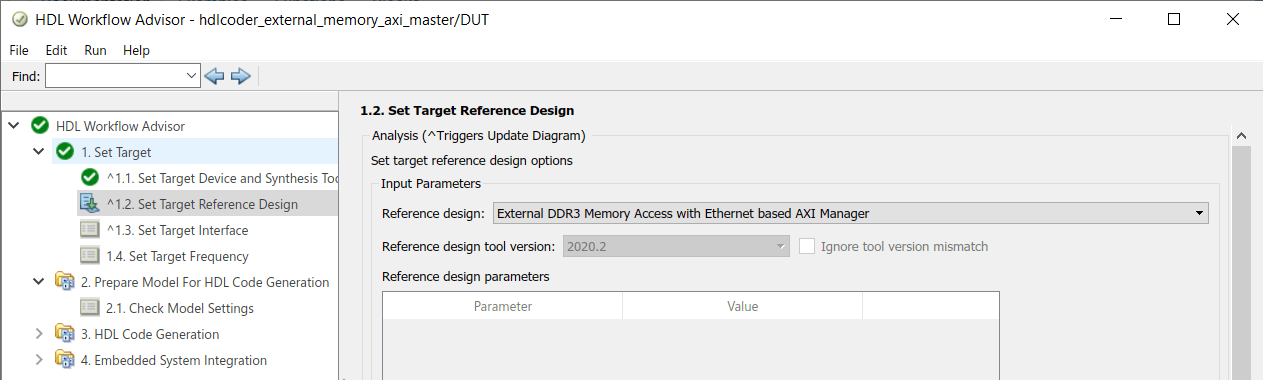

Start the HDL Workflow Advisor and use the IP Core Generation workflow to deploy this design on the AMD Kintex-7 hardware.

1. Set up the AMD Vivado synthesis tool path by entering this command at the MATLAB command prompt. Use your own Vivado installation path when you run the command.

hdlsetuptoolpath('ToolName','Xilinx Vivado', ...
    'ToolPath','C:\Xilinx\Vivado\2023.1\bin\vivado.bat');

2. Start the HDL Workflow Advisor from the DUT subsystem hdlcoder_external_memory_axi_master/DUT. The target interface settings are saved on the model. In step 1.1, the target workflow is the IP Core Generation workflow and the target platform is the AMD Kintex-7 KC705 development board.

3. In step 1.2, set Reference design to External DDR3 Memory Access with Ethernet based AXI Manager.

4. Review the target platform interface table settings.

In this example, the input parameter ports, such as matrix_mul_on, matrix_size, burst_len, burst_from_ddr, and burst_start, are mapped to the AXI4 interface. HDL Coder generates AXI4-accessible registers for these ports, so you can use MATLAB to tune these parameters at run time while the design is running on the FPGA board.

The DUT's AXI4 Master interface has separate read and write channels. The read channel ports, such as axim_rd_data, axim_rd_s2m, and axim_rd_m2s, are mapped to the AXI4 Master Read interface. The write channel ports, such as axim_wr_data, axim_wr_s2m, and axim_wr_m2s, are mapped to the AXI4 Master Write interface.

5. Right-click step 3.2, Generate RTL Code and IP Core, and select Run to Selected Task to generate the IP core. You can find the register address mapping and other documentation for the IP core in the generated IP Core Report.

6. Right-click step 4.1, Create Project, and select Run This Task to generate the Vivado project. During project creation, the generated DUT IP core is integrated into the External DDR3 Memory Access with Ethernet based AXI Manager reference design. This reference design includes an AMD memory interface generator (MIG) IP that communicates with the on-board external DDR3 memory on the KC705 platform. The reference design also includes the AXI Manager IP, which enables MATLAB to control the DUT IP and to initialize and verify the DDR memory content.

You can open the generated Vivado project by clicking the project link in the result window, and then inspect the design.

The Ethernet-based AXI manager IP has a default target IP address of 192.168.0.2 and default UDP port value of 50101. You can change these values by double-clicking the ethernet_mac_hub IP in the Vivado block design.

7. Right-click step 4.3, Program Target Device, and select Run to Selected Task to generate the bitstream and program the device.
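Parameters such as burst_len indicate that the DUT moves data in AXI4 bursts. As a generic illustration of the AXI4 protocol, not of the generated AXI4 Master controller, this Python sketch splits a transfer of consecutive words into INCR bursts that respect two AXI4 rules: at most 256 beats per burst, and no burst may cross a 4 KB address boundary. The 4-byte beat width and the helper name are assumptions.

```python
AXI4_MAX_BEATS = 256   # AXI4 INCR bursts carry at most 256 beats
BOUNDARY_BYTES = 4096  # an AXI4 burst must not cross a 4 KB address boundary


def split_into_bursts(base_addr, num_words, bytes_per_beat=4,
                      max_beats=AXI4_MAX_BEATS):
    """Return (start_address, beats) pairs covering num_words consecutive words.

    Hypothetical helper; assumes one word per beat and a beat-aligned base.
    """
    if base_addr % bytes_per_beat:
        raise ValueError("base address must be aligned to the beat size")
    bursts = []
    addr, remaining = base_addr, num_words
    while remaining > 0:
        # Beats left before the next 4 KB boundary (at least 1 when aligned).
        to_boundary = (BOUNDARY_BYTES - addr % BOUNDARY_BYTES) // bytes_per_beat
        beats = min(remaining, max_beats, to_boundary)
        bursts.append((addr, beats))
        addr += beats * bytes_per_beat
        remaining -= beats
    return bursts


if __name__ == "__main__":
    # For example, 500 words starting at the DUT read base address.
    for start, beats in split_into_bursts(0x40000000, 500):
        print(hex(start), beats)
```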

Run FPGA Implementation on Kintex-7 Hardware

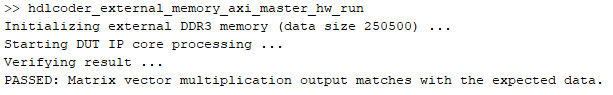

Run the FPGA implementation and verify the hardware result by running this script in MATLAB.

hdlcoder_external_memory_axi_master_hw_run

This script first sets Matrix_Size to 500, which corresponds to a 500-by-500 matrix. You can set Matrix_Size to any value up to 4000.

The script then configures the AXI4 Master read and write channel base addresses, which define where in the external DDR memory the DUT reads its input and writes its output. In this script, the DUT reads from base address '40000000' and writes to base address '50000000' (hexadecimal).
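One way to sanity-check these base addresses is to compute how much DDR space the input and output buffers occupy. The Python sketch below assumes one 32-bit word per element, which the example does not state. The base addresses and the 2-to-4000 size range come from the text; the helper names are hypothetical.

```python
INPUT_BASE = 0x40000000   # DUT read base address used by the script
OUTPUT_BASE = 0x50000000  # DUT write base address used by the script
BYTES_PER_WORD = 4        # assumption: one 32-bit word per element
MIN_SIZE, MAX_SIZE = 2, 4000


def buffer_sizes(n):
    """Return (input_bytes, output_bytes) for an n-by-n problem."""
    if not MIN_SIZE <= n <= MAX_SIZE:
        raise ValueError(f"matrix size must be between {MIN_SIZE} and {MAX_SIZE}")
    input_bytes = (n + n * n) * BYTES_PER_WORD   # vector, then matrix
    output_bytes = n * BYTES_PER_WORD            # N-by-1 result
    return input_bytes, output_bytes


def input_overlaps_output(n):
    """True if the input buffer would run into the output region."""
    input_bytes, _ = buffer_sizes(n)
    return INPUT_BASE + input_bytes > OUTPUT_BASE


if __name__ == "__main__":
    for n in (500, 4000):
        print(n, buffer_sizes(n), input_overlaps_output(n))
```

Under these assumptions, even the largest 4000-by-4000 input occupies about 64 MB, well inside the 0x10000000 bytes (256 MiB) between the two base addresses.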

The script uses the Ethernet-based AXI manager to initialize the external DDR3 memory with the input vector and matrix data and to clear the output DDR memory region.
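The initialization step can be sketched as building one flat buffer in the DDR order described in the Model Algorithm section: the vector first, then the matrix row by row. This hypothetical Python helper uses small random integers in place of the example's fixed-point data. If you build the buffer in MATLAB instead, note that A(:) flattens column by column, so a row-major stream corresponds to reshape(A.', [], 1).

```python
import random


def build_ddr_image(n, seed=0, max_val=15):
    """Hypothetical helper: return (vector, matrix, input_words, output_init).

    Small random integers stand in for the fixed-point data in the example.
    """
    rng = random.Random(seed)
    vector = [rng.randint(0, max_val) for _ in range(n)]
    matrix = [[rng.randint(0, max_val) for _ in range(n)] for _ in range(n)]
    # DDR input layout: the N-by-1 vector, then the N-by-N matrix row by row.
    input_words = vector + [a for row in matrix for a in row]
    # Zeros for the output region, mirroring the script's clearing step.
    output_init = [0] * n
    return vector, matrix, input_words, output_init


if __name__ == "__main__":
    vec, mat, words, out = build_ddr_image(3)
    print(vec, mat, words, out)
```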

The script then starts the DUT computation by writing to the AXI4-accessible registers. The DUT IP core reads the input data from the DDR memory, performs the matrix vector multiplication, and then writes the result back to the DDR memory.
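The start sequence can be pictured as a few register writes. In this hypothetical Python sketch, a dictionary-backed FakeRegisterFile stands in for the real AXI4-accessible registers, whose offsets are listed in the generated IP Core Report. The register names come from the interface table. The written values are placeholders, and returning burst_start to 0 assumes an edge-triggered start; neither is confirmed by the example.

```python
class FakeRegisterFile:
    """Stand-in for the DUT's AXI4-accessible registers (hypothetical)."""

    def __init__(self):
        self.values = {}
        self.log = []          # every write, in order

    def write(self, name, value):
        self.values[name] = value
        self.log.append((name, value))


def start_dut(regs, n, burst_len, matrix_mul_on=True):
    """Configure the run-time parameters, then trigger the DUT (sketch)."""
    regs.write("matrix_mul_on", int(matrix_mul_on))
    regs.write("matrix_size", n)
    regs.write("burst_len", burst_len)      # placeholder value
    regs.write("burst_from_ddr", 1)         # placeholder value
    # Write the start register last, after every parameter is in place,
    # then clear it (assumes an edge-triggered start).
    regs.write("burst_start", 1)
    regs.write("burst_start", 0)


if __name__ == "__main__":
    regs = FakeRegisterFile()
    start_dut(regs, n=500, burst_len=256)
    for entry in regs.log:
        print(entry)
```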

Finally, the script reads the output back into MATLAB and compares it with the expected result, which verifies the hardware output in MATLAB.
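The final comparison amounts to computing a golden result on the host and reporting where the read-back data differs from it. This Python sketch illustrates the idea under stated assumptions: exact integer data and a hypothetical helper name. It is not the example script's code.

```python
def find_mismatches(vector, matrix, hw_output):
    """Return indices where the hardware result differs from the golden model.

    Assumes exact integer arithmetic; a fixed-point design would also need the
    DUT's word lengths and overflow/rounding behavior in the golden model.
    """
    expected = [sum(a * b for a, b in zip(row, vector)) for row in matrix]
    if len(hw_output) != len(expected):
        raise ValueError("hardware output has the wrong length")
    return [i for i, (e, h) in enumerate(zip(expected, hw_output)) if e != h]


if __name__ == "__main__":
    vec = [1, 2]
    mat = [[3, 4], [5, 6]]
    print(find_mismatches(vec, mat, [11, 17]))  # -> []
    print(find_mismatches(vec, mat, [11, 18]))  # -> [1]
```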