Ideal Ground Truth Sensor

Generate ground truth measurements as sensor detections or track reports from driving scenario or RoadRunner Scenario

Since R2025a

Libraries:

Automated Driving Toolbox / Driving Scenario and Sensor Modeling

Description

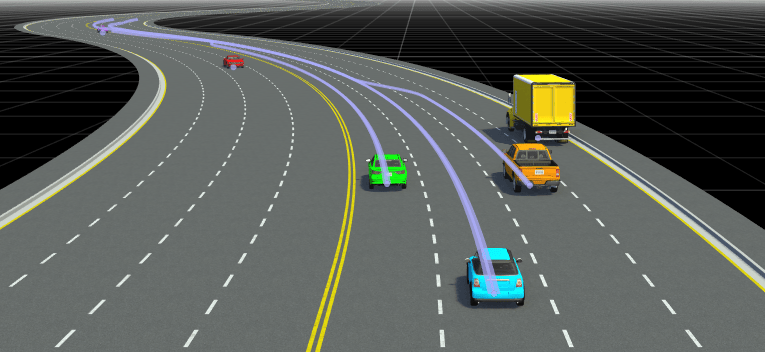

The Ideal Ground Truth Sensor block generates detection or track reports for ground truth measurements of target actors in its field of view. It also generates ground truth lane boundaries as lane detections. Use this block to generate ground truth data as sensor detections from a driving scenario containing actors and trajectories, which you can read by using a Scenario Reader block. When used with a driving scenario, the block generates detections only for vehicle actors in the scenario.

You can use the block with vehicle actors in RoadRunner Scenario simulations. When used in a RoadRunner Scenario simulation, the block generates ground truth detections for vehicle actors as well as for static actors such as buildings and traffic cones. For more information, see the Add Sensors to RoadRunner Scenario Using Simulink example.

Examples

Add Sensors to RoadRunner Scenario Using Simulink

Simulate a RoadRunner Scenario with sensor models defined in Simulink® and visualize object and lane detections.

Ports

Output

Object Detections

Object detections, returned as a Simulink bus containing a MATLAB structure. For more details about buses, see Create Nonvirtual Buses (Simulink).

The detections structure has this form:

| Field | Description | Type |
|---|---|---|
| NumDetections | Number of detections | Integer |
| IsValidTime | False when updates are requested at times that are between block invocation intervals | Boolean |
| Detections | Object detections | Array of object detection structures whose length is set by the Maximum number of object detections reported parameter. Only NumDetections of these detections are actual detections. |
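Because the Detections array has a fixed length, downstream logic must ignore its unused entries. The following Python sketch mirrors that bookkeeping outside Simulink. It models the bus as a plain dictionary keyed by the field names in the table above, and it assumes, as the Tracks bus states for its own array, that the actual detections occupy the first NumDetections elements. The helper name `valid_detections` is hypothetical and is not part of the toolbox.

```python
from typing import Any, Dict, List


def valid_detections(bus: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return the populated entries of a fixed-capacity detections bus.

    bus is a plain dict standing in for the Simulink bus, with the keys
    NumDetections, IsValidTime, and Detections.
    """
    # An update requested between sensor updates carries no detections.
    if not bus["IsValidTime"]:
        return []
    # Assumption: the actual detections occupy the first NumDetections
    # slots; the remaining slots only pad the array to its fixed length.
    return list(bus["Detections"][: bus["NumDetections"]])


# A bus with room for 4 detections that holds 2 actual detections.
bus = {
    "NumDetections": 2,
    "IsValidTime": True,
    "Detections": [{"SensorIndex": 1, "Time": 0.1}] * 2 + [{}] * 2,
}
print(len(valid_detections(bus)))  # 2
```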

The object detection structure contains these properties.

| Property | Definition |
|---|---|
| Time | Measurement time |
| Measurement | Object measurements |
| MeasurementNoise | Measurement noise covariance matrix |
| SensorIndex | Unique ID of the sensor |
| ObjectClassID | Object classification |
| MeasurementParameters | Parameters used by initialization functions of nonlinear Kalman tracking filters |
| ObjectAttributes | Additional information passed to tracker |

The Measurement field reports the position and velocity of a measurement in the coordinate system specified by the Coordinate system used to report objects parameter. This field is a real-valued column vector of the form [x; y; z; vx; vy; vz]. Position units are in meters, and velocity units are in meters per second.
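As a quick illustration of the [x; y; z; vx; vy; vz] layout and its mixed units, this Python sketch splits a measurement into its position and velocity parts and derives range and speed. The function names are hypothetical, and the range is measured from the origin of whichever frame the block reports in, so it equals the distance to the sensor only when detections use sensor coordinates.

```python
import math


def split_measurement(measurement):
    """Split a 6-element [x, y, z, vx, vy, vz] vector into position and velocity."""
    if len(measurement) != 6:
        raise ValueError("expected a 6-element measurement vector")
    return tuple(measurement[:3]), tuple(measurement[3:])


def range_and_speed(measurement):
    """Return (range in meters, speed in meters per second).

    Range is measured from the origin of the reporting frame, so it is the
    distance to the sensor only when detections use sensor coordinates.
    """
    position, velocity = split_measurement(measurement)
    return math.hypot(*position), math.hypot(*velocity)


# A target 50 m away, moving at 5 m/s.
print(range_and_speed([30.0, 40.0, 0.0, -3.0, 4.0, 0.0]))
```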

The MeasurementNoise field is a 6-by-6 matrix that reports the measurement noise covariance for each coordinate in the Measurement field.

The MeasurementParameters field is a structure with these fields.

| Parameter | Definition |
|---|---|
| Frame | Enumerated type indicating the frame used to report measurements. The Ideal Ground Truth Sensor block reports detections in either ego or sensor Cartesian coordinates, which are both rectangular coordinate frames. Therefore, for this block, Frame is always set to 'rectangular'. |
| OriginPosition | 3-D vector offset of the sensor origin from the ego vehicle origin. The vector is derived from the Sensor's (x,y,z) position (m) parameter of the block. |
| Orientation | Orientation of the sensor coordinate system with respect to the ego vehicle coordinate system. The orientation is derived from the Yaw angle of sensor mounted on ego vehicle (deg), Pitch angle of sensor mounted on ego vehicle (deg), and Roll angle of sensor mounted on ego vehicle (deg) parameters of the block. |
| HasVelocity | Indicates whether measurements contain velocity. |

The ObjectAttributes property of each detection is a structure with these fields.

| Field | Definition |
|---|---|
| TargetIndex | Identifier of the actor, ActorID, that generated the detection. For false alarms, this value is negative. |

Dependencies

To enable this output port:

- Set the Types of detections generated by sensor parameter to Objects Only or Objects and Lanes.

- Set the Output format of reported targets parameter to Object Detections.

Tracks

Object tracks, returned as a Simulink bus containing a MATLAB structure. See Create Nonvirtual Buses (Simulink).

This table shows the structure fields.

| Field | Description |
|---|---|
| NumTracks | Number of tracks |
| Tracks | Array of track structures of a length set by the Maximum number of tracks parameter. Only the first NumTracks of these are actual tracks. |

This table shows the fields of each track structure.

| Field | Definition |
|---|---|
| TrackID | Unique track identifier used to distinguish multiple tracks. |
| BranchID | Unique track branch identifier used to distinguish multiple track branches. |
| SourceIndex | Unique source index used to distinguish tracking sources in a multiple tracker environment. |
| UpdateTime | Time at which the track is updated. Units are in seconds. |
| Age | Number of times the track was updated. |
| State | Value of the state vector at the update time. |
| StateCovariance | Uncertainty covariance matrix. |
| ObjectClassID | Integer value representing the object classification. The value 0 represents an unknown classification. Nonzero classifications apply only to confirmed tracks. |
| TrackLogic | Confirmation and deletion logic type. This value is always 'History', which indicates history-based logic. |
| TrackLogicState | Current state of the track logic type, returned as a 1-by-K logical array, where K is the number of latest track logical states recorded. |
| IsConfirmed | Confirmation status. This field is true if the track is confirmed to be a real target. |
| IsCoasted | Coasting status. This field is true if the track is updated without a new detection. |
| IsSelfReported | Indicates whether the track is reported by the tracker. This field is used in a track fusion environment. |
| ObjectAttributes | Additional information about the track. |

For more details about these fields, see objectTrack.

The block outputs one track per target actor.

Dependencies

To enable this port, on the Parameters tab, set the Output format of reported targets parameter to Tracks.

Target Poses

Target poses, returned as a Simulink bus containing a MATLAB structure.

A target pose defines the position, velocity, and orientation of a target in ego vehicle coordinates. Target poses also include the rates of change in actor position and orientation.

The structure has these fields:

| Field | Description |
|---|---|
| ActorID | Scenario-defined actor identifier, returned as a positive integer. |
| ClassID | Classification identifier, returned as a nonnegative integer. 0 represents an object of an unknown or unassigned class. |
| Position | Position of actor, returned as a real-valued vector of the form [x y z]. Units are in meters. |
| Velocity | Velocity (v) of actor in the x-, y-, and z-directions, returned as a real-valued vector of the form [vx vy vz]. Units are in meters per second. |
| Roll | Roll angle of actor, returned as a real scalar. Units are in degrees. |
| Pitch | Pitch angle of actor, returned as a real scalar. Units are in degrees. |
| Yaw | Yaw angle of actor, returned as a real scalar. Units are in degrees. |
| AngularVelocity | Angular velocity (ω) of actor in the x-, y-, and z-directions, returned as a real-valued vector of the form [ωx ωy ωz]. Units are in degrees per second. |

Dependencies

To enable this port, on the Parameters tab, set the Output format of reported targets parameter to Target Poses.

Lane Boundaries

Lane boundary detections, returned as a Simulink bus containing a MATLAB structure. The structure has these fields:

| Field | Description |
|---|---|
| Coordinates | Lane boundary coordinates, returned as a real-valued N-by-3 matrix, where N is the number of lane boundary coordinates. Lane boundary coordinates define the position of points on the boundary at returned longitudinal distances away from the ego vehicle, along the center of the road. This matrix also includes the boundary coordinates at zero distance from the ego vehicle. These coordinates are to the left and right of the ego vehicle origin, which is located under the center of the rear axle. Units are in meters. |
| Curvature | Lane boundary curvature at each row of the Coordinates matrix, returned as a real-valued N-by-1 vector. N is the number of lane boundary coordinates. Units are in radians per meter. |
| CurvatureDerivative | Derivative of lane boundary curvature at each row of the Coordinates matrix, returned as a real-valued N-by-1 vector. N is the number of lane boundary coordinates. Units are in radians per square meter. |
| HeadingAngle | Initial lane boundary heading angle, returned as a real scalar. The heading angle of the lane boundary is relative to the ego vehicle heading. Units are in degrees. |
| LateralOffset | Lateral offset of the ego vehicle position from the lane boundary, returned as a real scalar. An offset to a lane boundary to the left of the ego vehicle is positive. An offset to the right of the ego vehicle is negative. Units are in meters. For example, if the ego vehicle is 1.5 meters from the left lane boundary and 2.1 meters from the right lane boundary, the lateral offsets are 1.5 and -2.1 meters, respectively. |
| BoundaryType | Type of lane boundary marking. |
| Strength | Saturation strength of the lane boundary marking, returned as a real scalar from 0 to 1. |
| Width | Lane boundary width, returned as a positive real scalar. In a double-line lane marker, the same width is used for both lines and for the space between lines. Units are in meters. |
| Length | Length of dash in dashed lines, returned as a positive real scalar. In a double-line lane marker, the same length is used for both lines. Units are in meters. |
| Space | Length of space between dashes in dashed lines, returned as a positive real scalar. In a dashed double-line lane marker, the same space is used for both lines. Units are in meters. |

Dependencies

To enable this output port, set the Types of detections generated by sensor parameter to Objects and Lanes.
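The sign convention of the lateral offset makes simple ego-lane geometry easy to derive. This Python sketch computes the ego lane width and the offset of the ego origin from the lane center, using the 1.5 m and -2.1 m example values from the lateral offset description. It assumes that both ego lane boundaries are reported and that they run roughly parallel to the ego heading near the vehicle, so the offsets act as lateral (y) positions in ego coordinates. The function name is hypothetical.

```python
def ego_lane_geometry(left_offset, right_offset):
    """Return (lane width, ego offset from lane center), both in meters.

    left_offset:  lateral offset of the boundary to the left (zero or positive).
    right_offset: lateral offset of the boundary to the right (zero or negative).
    A positive center offset means the ego origin lies left of the lane center.
    """
    if left_offset < 0 or right_offset > 0:
        raise ValueError("expected left_offset >= 0 and right_offset <= 0")
    # The offsets act as lateral (y) positions of the boundaries in ego
    # coordinates, so their difference is the lane width.
    width = left_offset - right_offset
    # The lane center lies midway between the boundaries; the ego origin is
    # at y = 0, so its offset from the center is the negated center position.
    center_y = (left_offset + right_offset) / 2.0
    return width, -center_y


width, offset = ego_lane_geometry(1.5, -2.1)
print(round(width, 3), round(offset, 3))  # 3.6 0.3
```

With these values the lane is 3.6 m wide and the ego origin sits 0.3 m left of the lane center.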

Parameters

To edit block parameters interactively, use the Property Inspector. From the Simulink Toolstrip, on the Simulation tab, in the Prepare gallery, select Property Inspector.

Unique sensor identifier, specified as a positive integer. This parameter distinguishes detections that come from different sensors in a multi-sensor system.

Example: 5

Data Types: double

Types of detections generated by the sensor, specified as Objects Only or Objects and Lanes.

When set to Objects Only, only actors are detected. When set to Objects and Lanes, the sensor generates both object detections and unoccluded lane detections.

Required time interval between sensor updates, specified as a positive real scalar.

The drivingScenario object calls the ideal ground truth sensor at regular time intervals. The sensor generates new detections at intervals defined by this parameter, which must be an integer multiple of the simulation time interval. Updates requested from the sensor between update intervals contain no detections. Units are in seconds.

Example: 5

Data Types: double
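The integer-multiple requirement determines exactly which simulation steps carry new detections. The following Python sketch checks the requirement and flags the update steps. It assumes that the first sensor update happens at simulation time 0; the function names are hypothetical and do not correspond to toolbox functions.

```python
from fractions import Fraction


def check_update_interval(update_interval, sim_step):
    """Return how many simulation steps elapse between sensor updates.

    Raises ValueError unless update_interval is a positive integer multiple
    of sim_step. Converting through str() lets decimal inputs such as 0.1 and
    0.05 compare exactly instead of as binary floating-point values.
    """
    if sim_step <= 0 or update_interval <= 0:
        raise ValueError("both intervals must be positive")
    ratio = Fraction(str(update_interval)) / Fraction(str(sim_step))
    if ratio.denominator != 1:
        raise ValueError("update interval must be an integer multiple of the step")
    return ratio.numerator


def is_update_time(step_index, steps_per_update):
    """Return True when 0-based simulation step step_index yields new detections.

    Assumes the first sensor update happens at simulation time 0.
    """
    return step_index % steps_per_update == 0


steps = check_update_interval(0.1, 0.05)
print(steps)  # 2
print([is_update_time(k, steps) for k in range(5)])  # [True, False, True, False, True]
```

Steps that return False correspond to requests between update intervals, which contain no detections.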

Sensor Extrinsics and Measurements

Sensor mounting location on the ego vehicle, specified as a 1-by-3 real-valued vector of the form [x y z]. This parameter defines the coordinates of the sensor in meters, along the x-, y-, and z-axes relative to the ego vehicle origin, where:

- The x-axis points forward from the vehicle.

- The y-axis points to the left of the vehicle.

- The z-axis points up from the ground.

The default value defines a sensor that is mounted at the center of the front grill of a sedan.

For more details on the ego vehicle coordinate system, see Coordinate Systems in Automated Driving Toolbox.

Data Types: double

Yaw angle of the sensor, specified as a real scalar. The yaw angle is the angle between the center line of the ego vehicle and the down-range axis of the sensor. A positive yaw angle corresponds to a clockwise rotation when looking in the positive direction of the z-axis of the ego vehicle coordinate system. Units are in degrees.

Example: -4

Data Types: double

Pitch angle of the sensor, specified as a real scalar. The pitch angle is the angle between the down-range axis of the sensor and the x-y plane of the ego vehicle coordinate system. A positive pitch angle corresponds to a clockwise rotation when looking in the positive direction of the y-axis of the ego vehicle coordinate system. Units are in degrees.

Example: 3

Data Types: double

Roll angle of the sensor, specified as a real scalar. The roll angle is the angle of rotation of the down-range axis of the sensor around the x-axis of the ego vehicle coordinate system. A positive roll angle corresponds to a clockwise rotation when looking in the positive direction of the x-axis of the coordinate system. Units are in degrees.

Example: -4

Data Types: double
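To see how the mounting location and the yaw, pitch, and roll angles combine, this Python sketch converts a point from sensor coordinates to ego vehicle coordinates. It assumes that each angle is a right-handed rotation about the corresponding ego axis, which matches the clockwise-when-looking-along-the-positive-axis convention described above, and that the rotations compose in yaw-pitch-roll (Z-Y-X) order. The block's internal composition order is an assumption here. All function names are hypothetical, and the mounting location [2.0, 0.0, 0.5] is illustrative, not the block default.

```python
import math


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def matmul(a, b):
    """Multiply two 3-by-3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def sensor_to_ego_rotation(yaw_deg, pitch_deg, roll_deg):
    """Rotation mapping sensor-frame vectors into the ego frame.

    Assumes right-handed rotations composed in Z-Y-X (yaw-pitch-roll) order.
    """
    yaw, pitch, roll = (math.radians(v) for v in (yaw_deg, pitch_deg, roll_deg))
    return matmul(rot_z(yaw), matmul(rot_y(pitch), rot_x(roll)))


def sensor_point_to_ego(point, mount_xyz, yaw_deg, pitch_deg, roll_deg):
    """Express a sensor-frame point [x, y, z] (m) in ego vehicle coordinates."""
    r = sensor_to_ego_rotation(yaw_deg, pitch_deg, roll_deg)
    return [sum(r[i][k] * point[k] for k in range(3)) + mount_xyz[i] for i in range(3)]


# A target 10 m along the boresight of a sensor yawed 90 degrees (facing left).
print(sensor_point_to_ego([10.0, 0.0, 0.0], [2.0, 0.0, 0.5], 90.0, 0.0, 0.0))
```

For the yawed sensor, the target lands about 10 m to the left of the mounting point, at approximately [2.0, 10.0, 0.5] in ego coordinates.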

Angular field of view of the sensor, specified as a real-valued 1-by-2 vector of positive values, [azfov,elfov]. The field of view defines the azimuth and elevation extents of the sensor image. Each component must lie in the interval from 0 degrees to 180 degrees. Targets outside of the angular field of view of the sensor are not detected. Units are in degrees.

Data Types: double

Maximum detection range of the sensor, specified as a positive real scalar. The sensor cannot detect a target beyond this range. Units are in meters.

Example: 200

Data Types: double
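The angular field of view and the maximum detection range together decide whether a target can be detected. This Python sketch applies both gates to a single point in sensor coordinates. It assumes that the azimuth and elevation extents are centered on the sensor x-axis (boresight) and that a target is tested through one reference point; the block may account for target extent differently. The function name is hypothetical.

```python
import math


def in_field_of_view(point, fov_deg, max_range):
    """Check whether a sensor-frame point [x, y, z] (m) passes FOV and range gates.

    fov_deg is [azfov, elfov] in degrees, assumed centered on the sensor
    x-axis; max_range is in meters.
    """
    x, y, z = point
    if math.sqrt(x * x + y * y + z * z) > max_range:
        return False
    # Azimuth is measured in the sensor x-y plane, elevation above that plane.
    azimuth = math.degrees(math.atan2(y, x))
    elevation = math.degrees(math.atan2(z, math.hypot(x, y)))
    return abs(azimuth) <= fov_deg[0] / 2 and abs(elevation) <= fov_deg[1] / 2


print(in_field_of_view([50.0, 5.0, 0.0], [20.0, 5.0], 200.0))  # True
print(in_field_of_view([50.0, 50.0, 0.0], [20.0, 5.0], 200.0))  # False: 45 deg azimuth
```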

Detection Reporting

Coordinate system of reported detections, specified as one of these options:

- Host Coordinates: Detections are reported in the ego vehicle Cartesian coordinate system.

- Sensor Coordinates: Detections are reported in the sensor Cartesian coordinate system.

Point on target actor used to report ground truth position, specified as one of these options:

- Origin Point: The sensor reports the origin of the local coordinate frame of the target actor. For driving scenario vehicle actors, the origin is their rear-axle center. For RoadRunner Scenario vehicle actors, the origin is their geometric center.

- Closest Point: The sensor reports the point on the target actor closest to the sensor.

- Rear Center: The sensor reports the rear center of the target actor.
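For the Closest Point option, one common way to find the point on a target nearest the sensor is to model the target as a box aligned with its own axes and clamp the sensor position to the box limits. The Python sketch below does that. The box model, the requirement that the sensor position be expressed in the target frame, the example dimensions, and the function name are all assumptions, not a description of the block's internals.

```python
def closest_point_on_box(sensor_pos, box_min, box_max):
    """Closest point of an axis-aligned box to a sensor position.

    All coordinates are [x, y, z] in meters in the target's own frame, where
    the box is assumed aligned with the target axes. Clamping each coordinate
    to the box extent gives the closest point; a sensor inside the box maps
    to itself.
    """
    return [min(max(s, lo), hi) for s, lo, hi in zip(sensor_pos, box_min, box_max)]


# Illustrative box whose origin is at the rear-axle center; sensor behind and to the left.
print(closest_point_on_box([-20.0, 3.0, 1.0], [-1.0, -0.9, 0.0], [3.7, 0.9, 1.5]))
# [-1.0, 0.9, 1.0]
```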

Format in which the sensor reports target actors, specified as one of these options:

- Object Detections: The sensor generates target reports as object detections, as described in the Object Detections output port.

- Tracks: The sensor generates target reports as tracks, which are clustered detections that have been processed by a tracking filter. The sensor returns tracks as described in the Tracks output port.

- Target Poses: The sensor generates poses of targets as described in the Target Poses output port.

Maximum number of object detections reported by the sensor, specified as a positive integer. The detections closest to the sensor are reported.

Data Types: double
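The rule that the closest detections are kept when more targets are visible than the maximum allows can be sketched in Python as a sort by range followed by truncation. This sketch assumes each detection is a dictionary whose Measurement is expressed in sensor coordinates, so the norm of the position part is the distance to the sensor. It returns the kept detections nearest first; the block's output ordering is not specified here. The function name is hypothetical.

```python
import math


def closest_detections(detections, max_detections):
    """Keep the max_detections detections nearest the sensor.

    Each detection is a dict with a 'Measurement' of [x, y, z, vx, vy, vz]
    in sensor coordinates (an assumption for this sketch). Returns the kept
    detections, nearest first, and the count that would fill NumDetections.
    """
    def distance(det):
        x, y, z = det["Measurement"][:3]
        return math.sqrt(x * x + y * y + z * z)

    kept = sorted(detections, key=distance)[:max_detections]
    return kept, len(kept)


dets = [
    {"Measurement": [30.0, 0.0, 0.0, 0.0, 0.0, 0.0]},
    {"Measurement": [10.0, 0.0, 0.0, 0.0, 0.0, 0.0]},
    {"Measurement": [0.0, 20.0, 0.0, 0.0, 0.0, 0.0]},
]
kept, num = closest_detections(dets, 2)
print(num)  # 2
```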

Lane boundaries reported by sensor, specified as one of these options:

- Ego Lane: The sensor returns the boundaries for the lane in which the host vehicle actor is traveling.

- Ego and Adjacent Lanes: The sensor returns the boundaries for the adjacent left and right lanes along with the lane in which the host vehicle actor is traveling.

- All Lanes: The sensor returns the boundaries for all lanes on the road.

Dependencies

To enable this parameter, set the Types of detections generated by sensor parameter to Objects and Lanes.
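The three lane boundary options differ only in how many boundaries around the ego lane they include. This Python sketch selects boundary indices under a hypothetical numbering: lanes are numbered 1 to num_lanes from left to right, and boundary i separates lane i from lane i + 1, with boundary 0 as the leftmost road edge. The numbering, the option strings used as keys, and the function name are illustrative assumptions; the sketch also ignores the Maximum number of reported lanes limit.

```python
def boundaries_to_report(option, ego_lane, num_lanes):
    """Return indices of the lane boundaries to report, left to right.

    Hypothetical numbering: lanes are 1..num_lanes from left to right, and
    boundary i separates lane i from lane i + 1 (boundary 0 is the leftmost
    road edge, boundary num_lanes the rightmost).
    """
    if not 1 <= ego_lane <= num_lanes:
        raise ValueError("ego lane out of range")
    if option == "Ego Lane":
        first, last = ego_lane - 1, ego_lane
    elif option == "Ego and Adjacent Lanes":
        first, last = ego_lane - 2, ego_lane + 1
    elif option == "All Lanes":
        first, last = 0, num_lanes
    else:
        raise ValueError(f"unknown option: {option}")
    # Adjacent lanes may not exist at the road edge, so clip to valid indices.
    return list(range(max(first, 0), min(last, num_lanes) + 1))


print(boundaries_to_report("Ego and Adjacent Lanes", 1, 3))  # [0, 1, 2]
```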

Maximum number of reported lanes, specified as a positive integer.

Dependencies

To enable this parameter, set the Types of detections generated by sensor parameter to Objects and Lanes.

Data Types: double

Output Port Settings

Source of object bus name, specified as one of these options:

- Auto: The block automatically creates a bus name.

- Property: Specify the bus name by using the Object bus name parameter.

Specify the name of the object detection bus returned in the Object Detections output port.

Dependencies

To enable this parameter, set the Source of object bus name parameter to Property.

Source of lane detection bus name, specified as one of these options:

- Auto: The block automatically creates a bus name.

- Property: Specify the bus name by using the Specify an output lane bus name parameter.

Specify the name of the lane detection bus returned in the Lane Boundaries output port.

Dependencies

To enable this parameter, set the Source of lane bus name parameter to Property.

Extended Capabilities

For driving scenario workflows, the Ideal Ground Truth Sensor block supports:

- Rapid accelerator mode simulation.

- Standalone deployment using Simulink Coder™ and deployment to Simulink Real-Time™ targets.

For RoadRunner Scenario workflows, the Ideal Ground Truth Sensor block supports standalone deployment using ready-to-run packages. For more information about generating ready-to-run packages for your Simulink model, see Publish Ready-to-Run Actor Behaviors for Reuse and Simulation Performance.

Version History

Introduced in R2025a
