Mapping and Localization Using Vision and Lidar Data

Use simultaneous localization and mapping (SLAM) algorithms to build a map of the environment while estimating the pose of the ego vehicle within that map. You can use SLAM algorithms with either visual (camera image) data or point cloud (lidar) data. For more information on implementing visual SLAM using camera image data, see Implement Visual SLAM in MATLAB and Develop Visual SLAM Algorithm Using Unreal Engine Simulation. For more information on implementing point cloud SLAM using lidar data, see Implement Point Cloud SLAM in MATLAB and Design Lidar SLAM Algorithm Using Unreal Engine Simulation Environment.

You can use measurements from sensors such as inertial measurement units (IMUs) and Global Positioning System (GPS) receivers to improve map building with visual or lidar data. For an example, see Build a Map from Lidar Data.
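
As a minimal sketch of why an extra sensor helps, assume a single position coordinate estimated by odometry with some variance, plus an independent GPS reading of the same coordinate with its own variance. The hypothetical `fuse_estimates` function below applies a generic scalar Kalman filter measurement update. It is an illustration under those assumptions, not the method used in Build a Map from Lidar Data.

```python
def fuse_estimates(x_odom, var_odom, x_gps, var_gps):
    """Fuse an odometry estimate with a GPS reading of the same scalar quantity.

    Hypothetical helper: a scalar Kalman filter measurement update in which the
    odometry estimate is the prior and the GPS reading is the measurement.
    Both inputs are assumed unbiased, independent, and Gaussian.
    """
    gain = var_odom / (var_odom + var_gps)   # weight given to the GPS reading
    x = x_odom + gain * (x_gps - x_odom)     # corrected estimate
    var = (1.0 - gain) * var_odom            # variance shrinks after fusion
    return x, var


# Odometry has drifted (variance 4 m^2); GPS is less uncertain (variance 1 m^2).
x, var = fuse_estimates(10.0, 4.0, 12.0, 1.0)
print(round(x, 3), round(var, 3))  # prints 11.6 0.8
```

The fused variance is smaller than either input variance, which is the sense in which the additional measurement improves the estimate. Practical systems fuse full 3-D poses, typically with multivariate filters or pose graph optimization.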

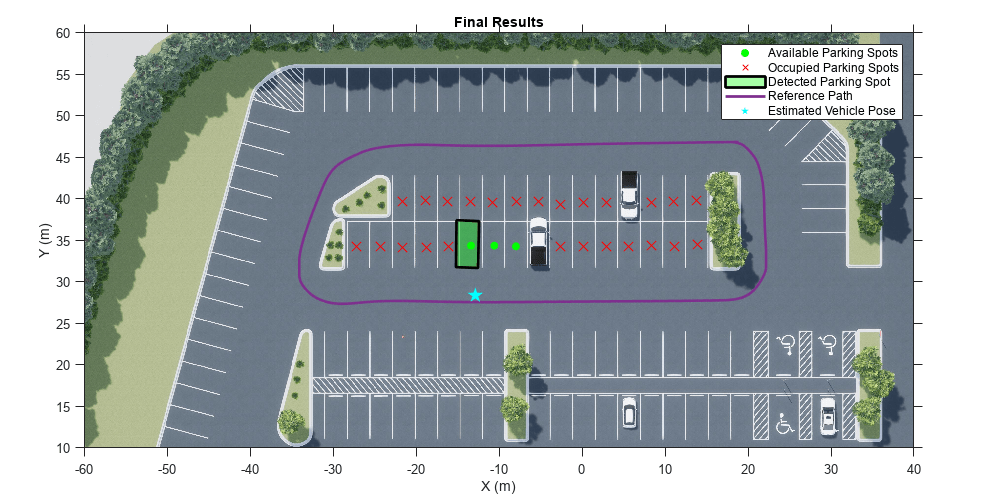

In environments with known maps, you can localize the ego vehicle by estimating its pose relative to the map coordinate frame origin. For an example of localization using a known visual map, see Visual Localization in a Parking Lot. For an example of localization using a known point cloud map, see Lidar Localization with Unreal Engine Simulation.
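
Estimating the pose relative to the map frame can be viewed as finding the rigid transform that aligns sensor points with map points. The sketch below solves the planar case in closed form (a Kabsch-style least-squares fit), assuming the correspondences between scan points and map points are already known and that the sensor frame coincides with the vehicle frame. Real lidar localization must also find those correspondences, for example with iterative methods such as ICP; the function name `estimate_pose_2d` is hypothetical and is not part of any toolbox.

```python
import math


def estimate_pose_2d(scan_pts, map_pts):
    """Return (theta, tx, ty) such that rotating scan_pts by theta and then
    translating by (tx, ty) best aligns them with map_pts (least squares).

    Hypothetical helper. Assumes scan_pts[i] and map_pts[i] are the same physical
    point (known correspondences) and that there are at least two distinct points.
    """
    n = len(scan_pts)
    # Centroids of the scan (sensor frame) and of the matching map points (map frame)
    sx = sum(p[0] for p in scan_pts) / n
    sy = sum(p[1] for p in scan_pts) / n
    mx = sum(p[0] for p in map_pts) / n
    my = sum(p[1] for p in map_pts) / n
    # Sum dot and cross products of the centered point pairs
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(scan_pts, map_pts):
        ax, ay, bx, by = ax - sx, ay - sy, bx - mx, by - my
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    # The least-squares angle maximizes dot*cos(theta) + cross*sin(theta)
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation carries the rotated scan centroid onto the map centroid
    tx = mx - (c * sx - s * sy)
    ty = my - (s * sx + c * sy)
    return theta, tx, ty


# Synthetic check: place a scan in the map frame with a known pose, then recover it.
scan = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0)]
true_theta, true_tx, true_ty = math.radians(30), 2.0, -1.0
world = [(math.cos(true_theta) * x - math.sin(true_theta) * y + true_tx,
          math.sin(true_theta) * x + math.cos(true_theta) * y + true_ty)
         for x, y in scan]
theta, tx, ty = estimate_pose_2d(scan, world)
print(round(math.degrees(theta), 6), round(tx, 6), round(ty, 6))  # prints 30.0 2.0 -1.0
```

The recovered (tx, ty, theta) is the vehicle pose expressed in the map coordinate frame. With noisy data the same formula still gives the least-squares best fit for the given correspondences.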

In environments without known maps, you can use visual-inertial odometry, which fuses visual and IMU data, to estimate the pose of the ego vehicle relative to its starting pose. For an example, see Visual-Inertial Odometry Using Synthetic Data.
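
Odometry reports motion relative to the previous pose, so the pose relative to the starting pose is the chain of those increments. The sketch below shows only that chaining step for planar (x, y, heading) poses; it does not perform the visual-inertial fusion itself, and the increments are assumed to come from some odometry source. The helper names `compose` and `integrate` are hypothetical.

```python
import math


def compose(pose, delta):
    """Apply a relative motion delta = (dx, dy, dtheta), expressed in the frame
    of pose = (x, y, theta), and return the resulting pose in the start frame."""
    x, y, th = pose
    dx, dy, dth = delta
    new_th = th + dth
    return (x + math.cos(th) * dx - math.sin(th) * dy,
            y + math.sin(th) * dx + math.cos(th) * dy,
            math.atan2(math.sin(new_th), math.cos(new_th)))  # wrap heading to [-pi, pi]


def integrate(deltas, start=(0.0, 0.0, 0.0)):
    """Chain relative motions into a list of poses relative to the starting pose."""
    poses = [start]
    for d in deltas:
        poses.append(compose(poses[-1], d))
    return poses


# Drive 1 m forward, then turn left 90 degrees, four times: a closed square.
square = integrate([(1.0, 0.0, math.pi / 2)] * 4)
print(square[-1])  # numerically close to (0.0, 0.0, 0.0)
```

Because each increment carries some error, a composed pose drifts over time; a known map, or loop closures in SLAM, are what bound that drift.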

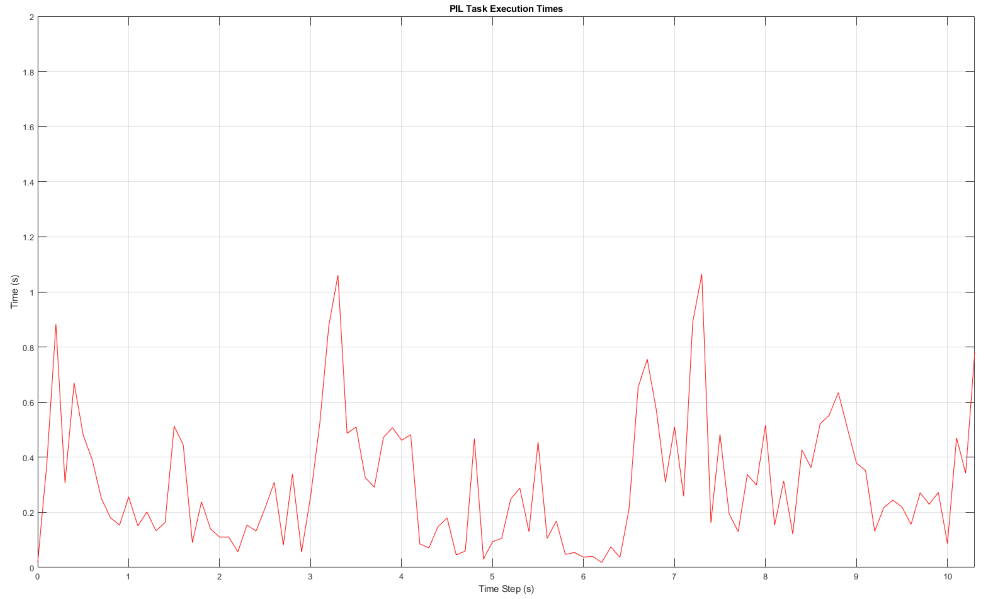

For an application of mapping and localization algorithms to detect empty parking spots in a parking lot, see Perception-Based Parking Spot Detection Using Unreal Engine Simulation.

Topics

- Rotations, Orientations, and Quaternions for Automated Driving

Quaternions are four-part hypercomplex numbers that are used to describe three-dimensional rotations and orientations. Learn how to use them for automated driving applications.

- Implement Visual SLAM in MATLAB

Understand the visual simultaneous localization and mapping (vSLAM) workflow and how to implement it using MATLAB.

- Monocular Visual Simultaneous Localization and Mapping

Perform visual simultaneous localization and mapping (vSLAM) using images from a single (monocular) camera.

- Implement Point Cloud SLAM in MATLAB

Understand the point cloud registration and mapping workflow.
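
As a companion to the quaternion topic listed above, here is a minimal sketch of rotating a 3-D vector with a unit quaternion stored as a (w, x, y, z) tuple, written in plain Python rather than with any toolbox quaternion type. The helper names are hypothetical. The sketch performs a point (active) rotation, q * (0, v) * conj(q); rotating the coordinate frame instead applies the conjugate quaternion in the opposite order.

```python
import math


def quat_multiply(q, r):
    """Hamilton product of quaternions q = (w, x, y, z) and r = (w, x, y, z)."""
    w1, x1, y1, z1 = q
    w2, x2, y2, z2 = r
    return (w1 * w2 - x1 * x2 - y1 * y2 - z1 * z2,
            w1 * x2 + x1 * w2 + y1 * z2 - z1 * y2,
            w1 * y2 - x1 * z2 + y1 * w2 + z1 * x2,
            w1 * z2 + x1 * y2 - y1 * x2 + z1 * w2)


def quat_from_axis_angle(axis, angle):
    """Unit quaternion for a rotation of `angle` radians about a unit-length axis."""
    ax, ay, az = axis
    s = math.sin(angle / 2)
    return (math.cos(angle / 2), ax * s, ay * s, az * s)


def rotate_vector(q, v):
    """Rotate 3-D vector v by unit quaternion q (point rotation q * v * conj(q))."""
    w, x, y, z = q
    q_conj = (w, -x, -y, -z)
    _, rx, ry, rz = quat_multiply(quat_multiply(q, (0.0, *v)), q_conj)
    return (rx, ry, rz)


# Rotate the x-axis by 90 degrees about the z-axis.
q = quat_from_axis_angle((0.0, 0.0, 1.0), math.pi / 2)
print(rotate_vector(q, (1.0, 0.0, 0.0)))  # approximately (0, 1, 0)
```

Composing rotations is a quaternion product: `quat_multiply(q, q)` here represents a 180-degree rotation about the z-axis, and it avoids the gimbal-lock problem that sequences of Euler angles can run into.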