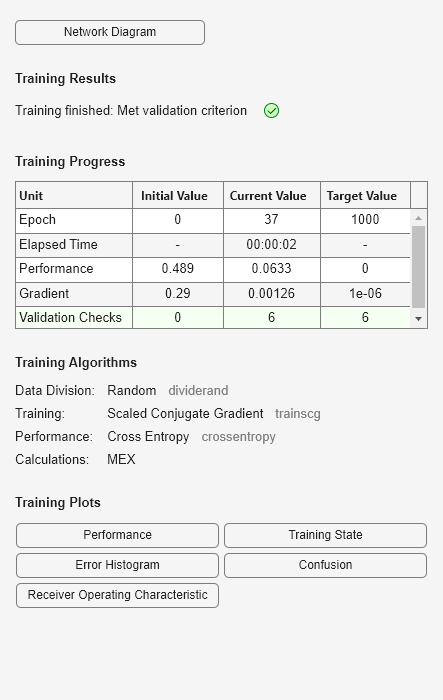

crossentropy

Neural network performance

Description

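perf = crossentropy(net,targets,outputs,perfWeights) calculates a network performance given targets and outputs, with optional performance weights and other parameters. The function returns a result that heavily penalizes outputs that are extremely inaccurate (y near 1-t), with very little penalty for fairly correct classifications (y near t).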

Minimizing cross-entropy leads to good classifiers.
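To see the asymmetric penalty described above, the following minimal Python sketch evaluates the single-element term ce = -t .* log(y) for an active target (t = 1) across a range of outputs. It is an illustration of the formula, not the MATLAB crossentropy function, and the helper name elementwise_ce is hypothetical.

```python
import math

def elementwise_ce(t, y):
    """Cross-entropy for one target/output pair: ce = -t * log(y).

    Hypothetical helper for illustration; y must be strictly positive.
    """
    return -t * math.log(y)

# Active target (t = 1): compare outputs near t (correct) with outputs
# near 1 - t (badly wrong).
t = 1.0
for y in (0.99, 0.9, 0.5, 0.1, 0.01):
    print(f"y = {y:.2f}  ce = {elementwise_ce(t, y):.4f}")
```

Running it shows ce rising from about 0.01 at y = 0.99 to about 4.6 at y = 0.01. The logarithm grows without bound as y approaches 0, which is what drives a minimizer away from confident wrong answers.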

The cross-entropy for each pair of output-target elements is calculated as: ce = -t .* log(y).
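As a sketch of the element-wise step, the Python code below applies ce = -t .* log(y) to a small 1-of-N encoded example. The layout of one column per sample and the function name crossentropy_elements are assumptions made for illustration. Because t is 0 everywhere except the target class, only one entry per sample is nonzero.

```python
import math

def crossentropy_elements(targets, outputs):
    """Element-wise cross-entropy, ce = -t .* log(y).

    targets, outputs: equally shaped lists of rows (hypothetical layout:
    one row per class, one column per sample). Outputs must be > 0.
    """
    return [[-t * math.log(y) for t, y in zip(t_row, y_row)]
            for t_row, y_row in zip(targets, outputs)]

# Three classes (rows) by two samples (columns), 1-of-N encoded targets.
targets = [[1.0, 0.0],
           [0.0, 1.0],
           [0.0, 0.0]]
outputs = [[0.7, 0.2],
           [0.2, 0.5],
           [0.1, 0.3]]

ce = crossentropy_elements(targets, outputs)
# Only the target-class entries are nonzero: -log(0.7) and -log(0.5).
print(ce)
```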

The aggregate cross-entropy performance is the mean of the individual values: perf = sum(ce(:))/numel(ce).
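The aggregation step divides by numel(ce), the total number of elements, rather than by the number of samples. The following Python sketch mirrors sum(ce(:))/numel(ce) on an assumed 3-class, 2-sample example. The function name crossentropy_perf is hypothetical, and performance weights are not modeled.

```python
import math

def crossentropy_perf(targets, outputs):
    """Mean cross-entropy over every element: sum(ce(:))/numel(ce).

    The divisor counts all elements, including those where t = 0,
    not just the number of samples. Performance weights are ignored.
    """
    ce = [-t * math.log(y)
          for t_row, y_row in zip(targets, outputs)
          for t, y in zip(t_row, y_row)]
    return sum(ce) / len(ce)

# Three classes (rows) by two samples (columns), 1-of-N encoded targets.
targets = [[1.0, 0.0],
           [0.0, 1.0],
           [0.0, 0.0]]
outputs = [[0.7, 0.2],
           [0.2, 0.5],
           [0.1, 0.3]]

perf = crossentropy_perf(targets, outputs)
print(round(perf, 4))
```

Here the two nonzero terms are summed and divided by 6 elements. Averaging per sample instead (dividing by 2) would give a value three times larger, about 0.52. The choice of divisor changes the scale of perf but not which outputs minimize it.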

Special case (N = 1): If an output consists of only one element, then the outputs and targets are interpreted as a binary encoding. That is, there are two classes with targets of 0 and 1, whereas in 1-of-N encoding, there are two or more classes. The binary cross-entropy expression is: ce = -t .* log(y) - (1-t) .* log(1-y).
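For the single-element (binary) case, the Python sketch below evaluates ce = -t .* log(y) - (1-t) .* log(1-y) and then takes the mean, as for the general case. The sample values and the function name binary_crossentropy_perf are hypothetical, and outputs are assumed to lie strictly between 0 and 1.

```python
import math

def binary_crossentropy_perf(targets, outputs):
    """Mean binary cross-entropy for single-element outputs (N = 1).

    ce = -t .* log(y) - (1-t) .* log(1-y), averaged over all elements.
    Targets are 0 or 1; outputs must satisfy 0 < y < 1.
    """
    ce = [-t * math.log(y) - (1 - t) * math.log(1 - y)
          for t, y in zip(targets, outputs)]
    return sum(ce) / len(ce)

# One output element per sample; four samples.
targets = [1, 0, 1, 0]
outputs = [0.9, 0.2, 0.6, 0.4]
print(binary_crossentropy_perf(targets, outputs))
```

The (1-t) term is what penalizes a high output when the target is 0. With only -t .* log(y), every sample with t = 0 would contribute nothing, and the network could output y = 1 everywhere at no cost.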

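perf = crossentropy(___,Name,Value) specifies additional options using one or more name-value arguments, in addition to the input arguments in the previous syntax.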

Examples

Input Arguments

Name-Value Arguments

Output Arguments

Version History

Introduced in R2013b