Assessing the Risk of Falls in Older Adults with Inertial Sensors and Machine Learning

By Dr. Barry Greene, Kinesis Health Technologies

Almost one in three adults over the age of 65 falls each year, making falls the leading cause of fatal and nonfatal injuries in this age group. In the United States alone, the estimated annual medical cost of injuries from falls among the elderly is $50 billion [1].

Assessing a patient’s fall risk and taking appropriate action when risk is identified are vital to reducing fall-related injuries. Many of the methods traditionally used to evaluate fall risk, however, depend on subjective evaluation or require specialized clinical expertise.

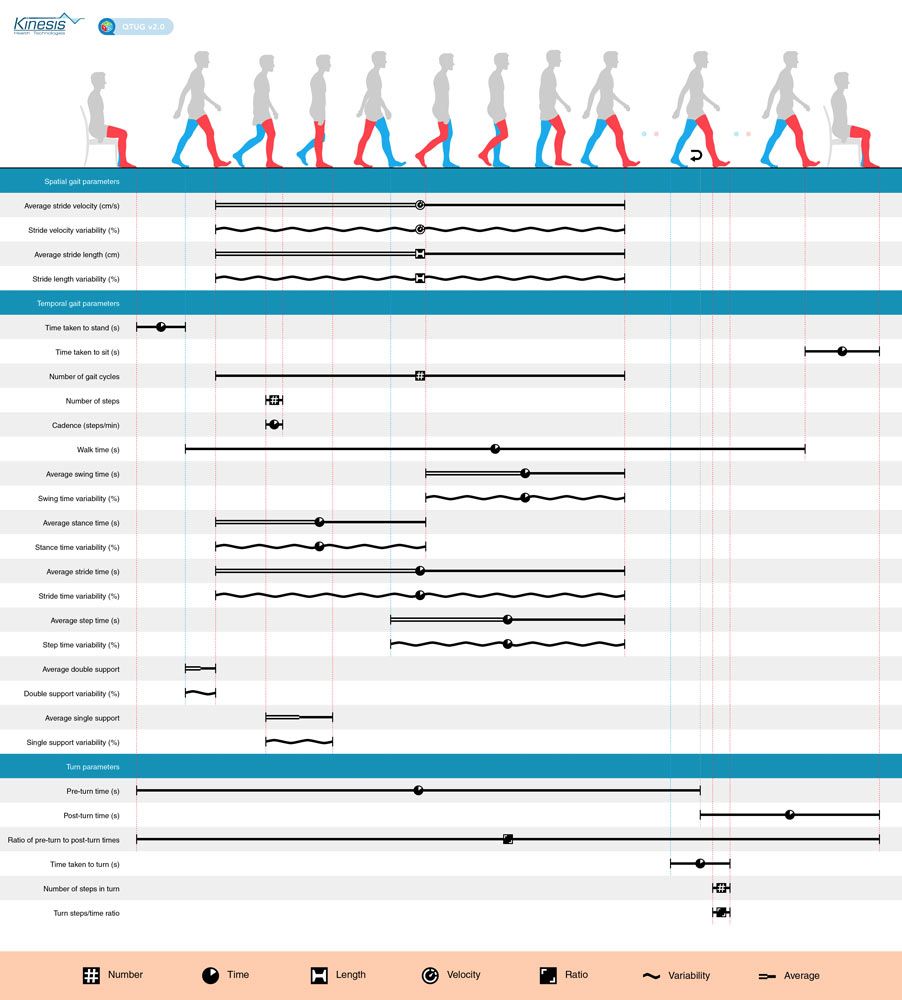

Our engineering team at Kinesis Health Technologies has developed an objective, quantitative method of screening for fall risk, frailty, and mobility impairment that is 15% to 27% more accurate than traditional methods. Our QTUG™ (Quantitative Timed Up and Go) system (Figure 1) uses data from wireless inertial sensors placed on a patient’s legs. Signal processing algorithms and machine learning–based classifiers developed in MATLAB® compute a fall risk estimate (FRE) and a frailty index (FI) based on data collected from the sensors and from patient responses to a questionnaire on common fall risk factors.

Developing the QTUG software with MATLAB enabled us to deliver QTUG two to three times faster than would have been possible by developing entirely in Java®. This shortened the time needed to bring QTUG to market and to register it as a Class I medical device with the FDA, Health Canada, and the EU.

Traditional Fall Risk Methods vs. QTUG

The two most common methods for assessing fall risk and mobility are the timed up and go (TUG) test and the Berg Balance Scale (BBS). In a TUG test, the clinician uses a stopwatch to measure how long it takes a patient to stand from sitting in a chair, walk three meters, turn around, return to the chair, and sit back down. The BBS test is more involved, requiring the patient to complete a series of balance-related tasks; a clinician rates the patient’s ability to complete each subtask on a scale from 0 to 4. Studies have shown that TUG and BBS tests are approximately 50–60% accurate in identifying patients at risk of falling. In addition, the BBS test requires the clinician to make subjective judgments on how well a patient completes each task.

Kinesis QTUG provides a more detailed, objective, and accurate alternative to these methods. In a QTUG test, a patient is fitted with two wireless inertial sensors, one on each leg below the knee. Each sensor includes an accelerometer and a gyroscope. The patient then performs the standard TUG test movements of standing, walking, turning, and returning to the chair as sensor data is transmitted to the QTUG software via Bluetooth® (Figure 2).

Filtering and Calibrating Accelerometer and Gyroscope Signals

The sensor units worn on each leg contain a tri-axial accelerometer and a tri-axial gyroscope. Each accelerometer produces three signals, reflecting movement along the x, y, and z axes. Each gyroscope also produces three signals, reflecting rotational movement in three dimensions. Sensor data for all 12 signals is sampled at a rate of 102.4 Hz. To remove high-frequency noise in this data, we use digital filters that we designed using the Filter Designer in Signal Processing Toolbox™. During initial development, we evaluated Chebyshev and Butterworth filters, finding that a zero-phase, second-order Butterworth filter with a 20 Hz corner frequency worked best.
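As an illustration only, the following Python sketch shows one way a zero-phase, second-order Butterworth low-pass filter with a 20 Hz corner frequency could be applied to a signal sampled at 102.4 Hz. It is not the production MATLAB filter: the function names are hypothetical, the coefficients come from the standard bilinear-transform biquad formulas, and the edge handling is simplified (the filter state is initialized from the first sample rather than using reflected padding).

```python
import math

FS_HZ = 102.4      # sampling rate stated in the article
CUTOFF_HZ = 20.0   # corner frequency stated in the article


def butter2_lowpass(cutoff_hz, fs_hz):
    """Second-order Butterworth low-pass coefficients via the bilinear transform."""
    w0 = 2.0 * math.pi * cutoff_hz / fs_hz
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / math.sqrt(2.0)  # Q = 1/sqrt(2) gives a Butterworth response
    a0 = 1.0 + alpha
    b = [(1.0 - cos_w0) / (2.0 * a0), (1.0 - cos_w0) / a0, (1.0 - cos_w0) / (2.0 * a0)]
    a = [1.0, -2.0 * cos_w0 / a0, (1.0 - alpha) / a0]
    return b, a


def _filter_once(b, a, x):
    """Single causal pass; state starts as if the input had always equaled x[0]."""
    x1 = x2 = y1 = y2 = x[0]  # valid steady state because the DC gain is 1
    out = []
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        out.append(yn)
        x2, x1 = x1, xn
        y2, y1 = y1, yn
    return out


def zero_phase_filter(b, a, x):
    """Filter forward, then backward, so the phase delays cancel."""
    if not x:
        return []
    forward = _filter_once(b, a, x)
    backward = _filter_once(b, a, forward[::-1])
    return backward[::-1]


# Example: a 2 Hz gait-like component plus 45 Hz noise (synthetic data).
b, a = butter2_lowpass(CUTOFF_HZ, FS_HZ)
noisy = [
    math.sin(2 * math.pi * 2.0 * n / FS_HZ) + 0.3 * math.sin(2 * math.pi * 45.0 * n / FS_HZ)
    for n in range(512)
]
smoothed = zero_phase_filter(b, a, noisy)
```

Because the signal passes through the filter twice, the magnitude response is squared: the effective roll-off is that of a fourth-order filter, and the attenuation at the nominal corner frequency is about 6 dB rather than 3 dB.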

While designing the filters, we were also developing MATLAB algorithms for calibrating the sensors. These in-field calibration algorithms are essential for removing bias from the sensor signals and for acquiring consistent signal data across sensors. The calibration algorithms are also responsible for converting the raw 32-bit signal values generated by the sensors into meaningful units, such as m/s² (meters per second squared).
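The two calibration steps described above, bias removal and unit conversion, can be sketched in Python as follows. This is an assumption-laden illustration rather than the in-field calibration algorithm itself: the bias is estimated as the mean of samples recorded while the sensor is stationary (a common approach for gyroscopes), and the scale factor and raw values are hypothetical, since the article does not give the sensor's sensitivity.

```python
from statistics import fmean


def estimate_bias(stationary_raw):
    """Estimate the sensor offset as the mean raw reading while the sensor is at rest."""
    return fmean(stationary_raw)


def calibrate(raw, bias, scale):
    """Remove the bias, then convert raw counts to physical units.

    `scale` is in physical units per count (for example, deg/s per count).
    """
    return [(r - bias) * scale for r in raw]


# Hypothetical gyroscope counts: a stationary window followed by a movement window.
stationary_counts = [1002, 998, 1001, 999, 1000]
movement_counts = [1000, 1400, 2200, 1800, 1100]

HYPOTHETICAL_DEG_PER_S_PER_COUNT = 0.05  # assumed sensitivity, not from the article

gyro_bias = estimate_bias(stationary_counts)
angular_velocity = calibrate(movement_counts, gyro_bias, HYPOTHETICAL_DEG_PER_S_PER_COUNT)
```

An accelerometer needs a slightly different treatment because gravity is present even at rest, so a stationary mean alone cannot separate offset from orientation.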

Extracting Features and Training Classifiers

We explored the filtered signal data in MATLAB to identify features and attributes relevant to frailty and fall risk. For example, we plotted angular velocity over time and detected peaks that corresponded to a patient’s mid-swing, heel strike, and toe-off points when walking. These characteristic points allow us to distinguish between walking and turning while performing a TUG test (Figure 3).
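A simple way to picture the peak detection step is the Python sketch below. It finds local maxima in an angular velocity trace that exceed a height threshold and are separated by a minimum number of samples. The thresholds, the function name, and the synthetic signal are hypothetical; the article does not describe the specific detection rules used in QTUG.

```python
def find_peaks(signal, min_height, min_distance):
    """Return indices of local maxima that are tall enough and far enough apart.

    When two candidate peaks are closer than `min_distance` samples,
    the taller one is kept.
    """
    candidates = [
        i for i in range(1, len(signal) - 1)
        if signal[i] > signal[i - 1]
        and signal[i] >= signal[i + 1]
        and signal[i] >= min_height
    ]
    kept = []
    # Visit the tallest candidates first so they win any distance conflict.
    for i in sorted(candidates, key=lambda idx: signal[idx], reverse=True):
        if all(abs(i - j) >= min_distance for j in kept):
            kept.append(i)
    return sorted(kept)


# Synthetic shank angular velocity (deg/s) with two large swing-like peaks.
angular_velocity = [0, 20, 5, 300, 40, 10, 15, 320, 30, 0]
mid_swing_candidates = find_peaks(angular_velocity, min_height=100, min_distance=3)
```

Applied to a filtered gyroscope signal, the large positive peaks are candidates for mid-swing points; heel-strike and toe-off detection would need additional rules around each peak.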

We identified more than 70 quantitative TUG parameters that we could use with the patient’s prospective or historical fall data to train a supervised classifier. These features included average stride velocity and length, time taken to stand and sit, number of steps required to turn, and time spent on one foot and two feet while walking (Figure 4).
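To make the idea of a quantitative gait parameter concrete, the Python sketch below derives stride times from the sample indices of successive heel strikes on one leg. It is a hypothetical example, not one of the QTUG feature definitions: the function names, the summary statistics chosen, and the heel-strike indices are all assumptions. Only the 102.4 Hz sampling rate comes from the article.

```python
from statistics import fmean, pstdev

FS_HZ = 102.4  # sampling rate stated in the article


def stride_times(heel_strike_idx, fs_hz=FS_HZ):
    """Time in seconds between consecutive heel strikes of the same foot."""
    return [(later - earlier) / fs_hz
            for earlier, later in zip(heel_strike_idx, heel_strike_idx[1:])]


def stride_summary(heel_strike_idx, fs_hz=FS_HZ):
    """Mean stride time and its variability (coefficient of variation, in percent)."""
    times = stride_times(heel_strike_idx, fs_hz)
    if not times:
        raise ValueError("need at least two heel strikes")
    mean_time = fmean(times)
    return {
        "n_strides": len(times),
        "mean_stride_time_s": mean_time,
        "stride_time_cv_pct": 100.0 * pstdev(times) / mean_time,
    }


# Hypothetical heel-strike sample indices for one leg.
summary = stride_summary([120, 232, 341, 455, 566])
```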

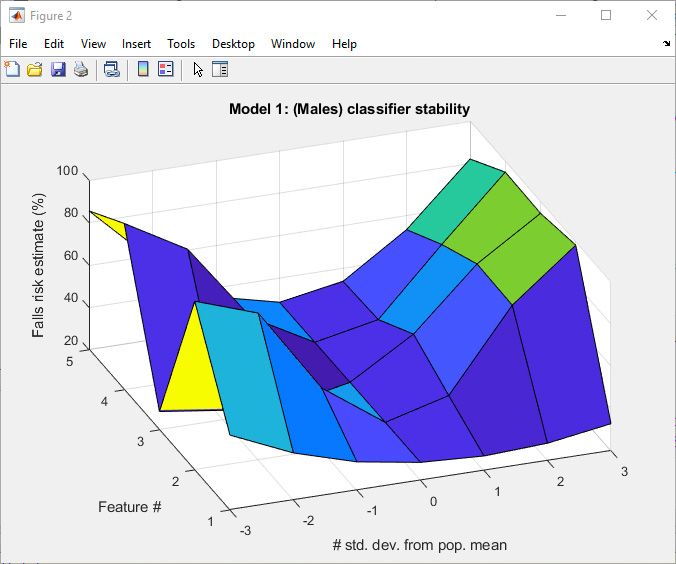

We used the cross-validation and sequential feature selection functions in Statistics and Machine Learning Toolbox™ to select the subset of features with the highest predictive value and validate a regularized discriminant classifier model that we implemented in MATLAB. We trained a separate logistic regression classifier on data from patient questionnaires, which included clinical risk factors such as gender, height, weight, age, vision impairment, and polypharmacy (number of prescription medications being taken). We obtained an overall fall risk estimate by performing a weighted average on the results of the sensor-based model and the questionnaire-based model (Figure 5). We used a similar method to generate a statistical estimate of the patient’s frailty level.
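The final combination step can be sketched in a few lines of Python. The example below evaluates a logistic regression model on questionnaire features and blends its output with a sensor-based probability using a weighted average. The coefficients, feature values, and weight are invented for illustration; the article does not publish the trained coefficients or the weighting used in QTUG.

```python
import math


def logistic_probability(features, weights, intercept):
    """Logistic regression: sigmoid of a weighted sum of the features."""
    z = intercept + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def combined_fall_risk(p_sensor, p_questionnaire, sensor_weight=0.5):
    """Weighted average of the sensor-based and questionnaire-based estimates."""
    if not 0.0 <= sensor_weight <= 1.0:
        raise ValueError("sensor_weight must be between 0 and 1")
    return sensor_weight * p_sensor + (1.0 - sensor_weight) * p_questionnaire


# Hypothetical questionnaire features: age in decades over 65,
# number of prescription medications, vision impairment (0 or 1).
questionnaire_features = [1.2, 5.0, 1.0]
hypothetical_weights = [0.4, 0.15, 0.6]   # assumed, not trained values
hypothetical_intercept = -2.0             # assumed

p_questionnaire = logistic_probability(
    questionnaire_features, hypothetical_weights, hypothetical_intercept
)
p_sensor = 0.62  # stand-in for the output of the sensor-based classifier
fall_risk_estimate = combined_fall_risk(p_sensor, p_questionnaire, sensor_weight=0.6)
```

Keeping the two models separate means either one can be retrained on new data without touching the other; only the blending weight ties them together.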

Validating Results and Deploying to Production Hardware

We trained our models on clinical trial data collected from thousands of patients and assessed the results produced by the combined classifier. As part of this analysis, we generated histograms and scatter plots of frailty and fall risk that confirmed our assumption that the two measures are closely correlated (Figure 6).

We also compared the accuracy of QTUG with traditional TUG tests and the Berg Balance Scale for specific patient groups. A recent study that focused on patients with Parkinson’s disease, for example, showed that QTUG was almost 30% more accurate than the TUG test. For each method, we examined sensitivity (the percentage of fallers identified correctly) and specificity (the percentage of non-fallers identified correctly). We then plotted receiver operating characteristic (ROC) curves for all methods; QTUG clearly had the largest area under the curve.
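For readers less familiar with these metrics, the Python sketch below computes sensitivity and specificity at a chosen threshold, along with the area under the ROC curve. The labels and scores are made up for illustration, and the area is computed with the rank-based (Mann-Whitney) formulation rather than by integrating a plotted curve.

```python
def sensitivity_specificity(labels, scores, threshold):
    """Labels are 1 for fallers and 0 for non-fallers; scores >= threshold flag a faller."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


def roc_auc(labels, scores):
    """Probability that a random faller scores higher than a random non-faller.

    Ties count as half. This equals the area under the ROC curve.
    """
    fallers = [s for y, s in zip(labels, scores) if y == 1]
    non_fallers = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum(
        1.0 if f > n else 0.5 if f == n else 0.0
        for f in fallers
        for n in non_fallers
    )
    return wins / (len(fallers) * len(non_fallers))


# Made-up example: four patients, two of whom fell.
example_labels = [1, 1, 0, 0]
example_scores = [0.9, 0.4, 0.6, 0.1]
sens, spec = sensitivity_specificity(example_labels, example_scores, threshold=0.5)
auc = roc_auc(example_labels, example_scores)
```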

To implement the QTUG classifiers on a touchscreen Android™ device, we recoded them in Java. To update the classifier coefficients based on a new reference data set, we simply export them from MATLAB to an Android resource file that is then incorporated into our Android build. The complete QTUG Android app guides the clinician through the test, receives the sensor data transmitted via Bluetooth, processes the data with the classifier models, and displays the fall risk and frailty score together with a reference that shows how the patient’s results compare with the results of his or her peers.
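As a rough picture of the coefficient hand-off, the Python sketch below writes a list of classifier coefficients into an Android resource XML document as a `string-array`. The article performs this export from MATLAB and does not describe the file layout, so the resource name, the choice of `string-array`, and the coefficient values here are all assumptions.

```python
import xml.etree.ElementTree as ET


def coefficients_to_android_xml(resource_name, coefficients):
    """Serialize coefficients as a <string-array> inside a <resources> document."""
    root = ET.Element("resources")
    array = ET.SubElement(root, "string-array", {"name": resource_name})
    for value in coefficients:
        item = ET.SubElement(array, "item")
        item.text = repr(float(value))  # repr keeps full floating-point precision
    return ET.tostring(root, encoding="unicode")


# Hypothetical resource name and coefficient values.
xml_text = coefficients_to_android_xml(
    "fall_risk_coefficients", [-2.0, 0.4, 0.15, 0.6]
)
```

On the Android side, such an array could be read with `getResources().getStringArray(...)` and parsed to doubles, which keeps a model update to a resource change rather than a code change.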

To date, clinicians in eight countries have used QTUG to evaluate more than 20,000 patients. We continue to improve the reference data set as new results come in. We are also developing a MATLAB algorithm that will enable individuals to assess fall risk themselves using their mobile phones. The new algorithm processes data from the mobile phone’s accelerometer and gyroscope. It produces a simplified fall risk estimate that requires no office visit and that can be tracked daily to reveal increases in fall risk.

Published 2019

References

[1] https://onlinelibrary.wiley.com/doi/full/10.1111/jgs.15304