Run TensorFlow Lite Models with MATLAB and Simulink

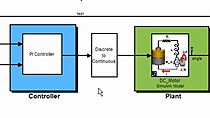

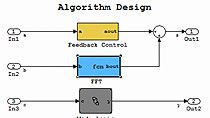

The Deep Learning Toolbox™ Interface for TensorFlow Lite lets you use pretrained TensorFlow Lite (TFLite) models directly in MATLAB® and Simulink® for deep learning inference. You can combine a pretrained TFLite model with the rest of your application, implemented in MATLAB or Simulink, for development, testing, and deployment. The TensorFlow Lite Interpreter executes inference for the TFLite model, while MATLAB or Simulink executes the rest of the application code. Data is exchanged between MATLAB or Simulink and the TensorFlow Lite Interpreter automatically.

Use MATLAB Coder™ or Simulink Coder™ to generate C++ code from applications that contain TFLite models and deploy it to target hardware. In the generated code, the TensorFlow Lite Interpreter still executes inference for the TFLite model, and C++ code is generated for the remainder of the MATLAB or Simulink application, including pre- and postprocessing.

For example, you can use a TensorFlow Lite model pretrained for object detection in a Simulink model to perform vehicle detection on streaming video input. For more information on prerequisites and on getting started with TensorFlow Lite models in MATLAB and Simulink, refer to the documentation on this site and the Related Resources section.
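As a rough illustration of the MATLAB side of this workflow, the sketch below loads a TFLite model and runs inference on one image using the support package's `loadTFLiteModel` and `predict` functions. The model file name, the 224-by-224 input size, and the classification-style output are assumptions made for illustration; they are not taken from this page, so substitute the values that match your own model.

```matlab
% Minimal sketch, assuming the Deep Learning Toolbox Interface for
% TensorFlow Lite support package is installed.

% Hypothetical model file; replace with the path to your own .tflite model.
modelFile = "my_classifier.tflite";

% Load the pretrained model. Inference on this object is carried out by
% the TensorFlow Lite Interpreter rather than by MATLAB itself.
net = loadTFLiteModel(modelFile);
disp(net)   % inspect the model properties, including its expected input size

% Preprocessing runs in MATLAB. The 224-by-224 size is an assumption;
% use the input size reported for your model.
img = imread("peppers.png");            % sample image that ships with MATLAB
img = single(imresize(img, [224 224]));

% Run inference. Data is passed to and from the interpreter automatically.
scores = predict(net, img);

% Postprocessing also runs in MATLAB. This assumes the model returns one
% score per class.
[~, classIdx] = max(scores);
disp(classIdx)
```

The pre- and postprocessing steps are kept as ordinary MATLAB code because that is the part of the application for which the coder products generate C++ code.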

Published: 11 Jul 2022