Data Sets for Text Analytics

This page provides a list of different data sets that you can use to get started with text analytics applications.

| Data Set | Description | Task |

|---|---|---|

|

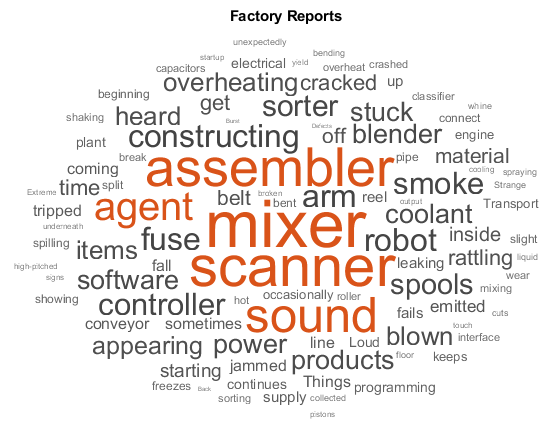

Factory Reports

| The Factory Reports data set is a table containing approximately 500 reports with various

attributes including a plain text description in the variable Read the Factory Reports data from the file filename = "factoryReports.csv"; data = readtable(filename,'TextType','string'); textData = data.Description; labels = data.Category; For an example showing how to process this data for deep learning, see Classify Text Data Using Deep Learning (Deep Learning Toolbox). |

Text classification, topic modeling |

|

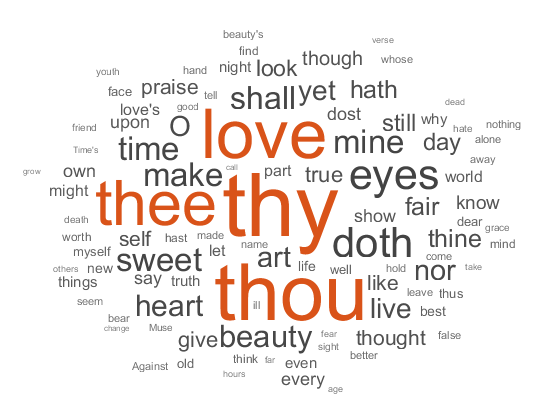

Shakespeare's Sonnets

| The file Read the Shakespeare's Sonnets data from the file

filename = "sonnets.txt";

textData = extractFileText(filename);

The sonnets are indented by two whitespace characters and are separated by two newline

characters. Remove the indentations using textData = replace(textData," ",""); textData = split(textData,[newline newline]); textData = textData(5:2:end); For an example showing how to process this data for deep learning, see Generate Text Using Deep Learning (Deep Learning Toolbox). |

Topic modeling, text generation |

|

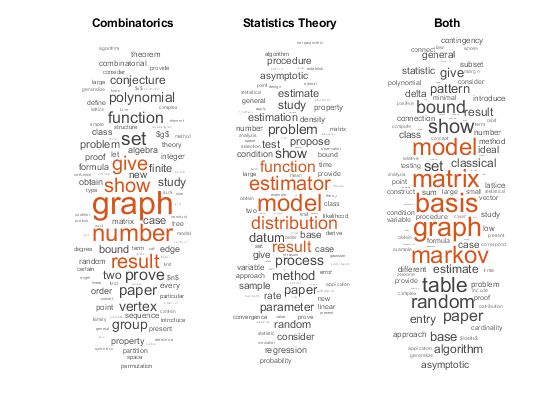

ArXiv Metadata

| The ArXiv API allows you to access the metadata of scientific e-prints submitted to https://arxiv.org including the abstract and subject areas. For more information, see https://arxiv.org/help/api. Import a set of abstracts and category labels from math papers using the arXiV API. url = "https://export.arxiv.org/oai2?verb=ListRecords" + ... "&set=math" + ... "&metadataPrefix=arXiv"; options = weboptions('Timeout',160); code = webread(url,options); For an example showing how to parse the returned XML code and import more records, see Multilabel Text Classification Using Deep Learning. |

Text classification, topic modeling |

|

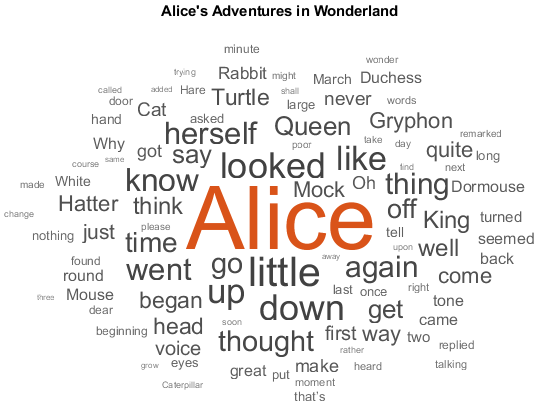

Books from Project Gutenberg

| You can download many books from Project Gutenberg. For example, download the text from Alice's Adventures in Wonderland by Lewis Carroll from https://www.gutenberg.org/files/11/11-h/11-h.htm using the url = "https://www.gutenberg.org/files/11/11-h/11-h.htm";

code = webread(url);The HTML code contains the relevant text inside tree = htmlTree(code);

selector = "p";

subtrees = findElement(tree,selector);Extract the text data from the HTML subtrees using the textData = extractHTMLText(subtrees);

textData(textData == "") = [];For an example showing how to process this data for deep learning, see Word-by-Word Text Generation Using Deep Learning. |

Topic modeling, text generation |

|

Weekend updates

| The file Extract the text data from the file filename = "weekendUpdates.xlsx"; tbl = readtable(filename,'TextType','string'); textData = tbl.TextData; For an example showing how to process this data, see Analyze Sentiment in Text. |

Sentiment analysis |

|

Roman Numerals

| The CSV file Load the decimal-Roman numeral pairs from the CSV file filename = fullfile("romanNumerals.csv"); options = detectImportOptions(filename, ... 'TextType','string', ... 'ReadVariableNames',false); options.VariableNames = ["Source" "Target"]; options.VariableTypes = ["string" "string"]; data = readtable(filename,options); For an example showing how to process this data for deep learning, see Sequence-to-Sequence Translation Using Attention. |

Sequence-to-sequence translation |

|

Finance Reports

|

The Securities and Exchange Commission (SEC) allows you to access financial reports via the Electronic Data Gathering, Analysis, and Retrieval (EDGAR) API. For more information, see https://www.sec.gov/search-filings/edgar-search-assistance/accessing-edgar-data. To download this data, use the function year = 2019; qtr = 4; maxLength = 2e6; textData = financeReports(year,qtr,maxLength); For an example showing how to process this data, see Generate Domain Specific Sentiment Lexicon. |

Sentiment analysis |

See Also

Topics

- Extract Text Data from Files

- Parse HTML and Extract Text Content

- Prepare Text Data for Analysis

- Analyze Text Data Containing Emojis

- Create Simple Text Model for Classification

- Classify Text Data Using Deep Learning

- Analyze Text Data Using Topic Models

- Analyze Sentiment in Text

- Sequence-to-Sequence Translation Using Attention

- Generate Text Using Deep Learning (Deep Learning Toolbox)