Summarize Status of Model Testing for Project

When you design complex systems, such as a cruise control system, your team manages testing and requirements traceability to make sure that your models implement your requirements, your tests verify your implementation against those requirements, and your implementation has sufficient test coverage. To help your team manage and assess the status of these objectives across your project, you can use the Project Model Testing dashboard.

The Project Model Testing dashboard provides:

- A summary of the model testing, coverage, and requirements traceability across the units in your project

- Aggregated metrics, including the status of model testing, coverage, and requirements traceability across your project

- The ability to identify gaps like missing traceability links and insufficient model testing

The dashboard lets you navigate directly to the affected artifacts and opens them in the appropriate development tools for further refinement. When you change an artifact, the dashboard can automatically detect the change and identify outdated metric results. You can then incrementally re-collect these metrics so that your team has up-to-date information on project artifacts and visibility into the overall status of your project. This helps your team maintain high-quality testing standards and supports compliance with industry specifications and guidelines.

Open Project and Dashboard

As you design your system, you manage many different types of artifacts, including requirements, models, tests, and results. To organize those artifacts and define the scope of artifacts that the dashboard metrics analyze, you need to have your artifacts inside a project. For this example, you use the dashboard example project for a cruise control system. For more information, see Create Project to Use Model Design and Model Testing Dashboards.

1. Open a project for an example cruise control system that simulates controlling the speed of a vehicle automatically. The project contains units that model specific behaviors inside the cruise control system and a component that integrates those lower level units together. The cruise control system manages the vehicle speed based on specific conditions specified in the requirements. The project includes requirement specifications for the system and tests for the cruise control response to driver inputs and system behavior.

openProject("cc_CruiseControl");

2. Open the Model Testing Dashboard by clicking the Project tab and clicking Model Testing Dashboard.

When you open a dashboard, the dashboard analyzes the artifacts inside the project folder, identifies potential software units and components, and traces requirements-based testing artifacts to their associated units and components. By default, the dashboard considers Simulink® models as units and System Composer™ architecture models as components. However, you can customize the unit and component classification, as shown in Categorize Models in Hierarchy as Components or Units.

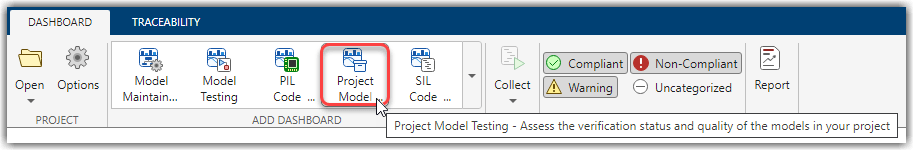

3. In the Dashboard tab, open the Project Model Testing dashboard by clicking Project Model Testing in the dashboard gallery in the toolstrip.

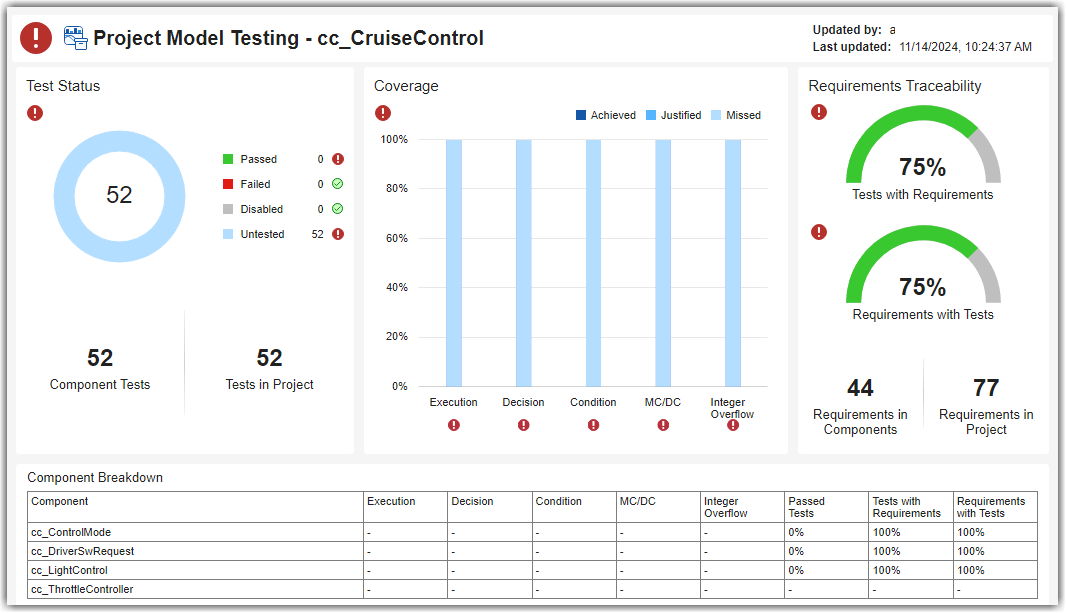

The dashboard collects project model testing metric results for the units in the project. For example, in the example cruise control project, the dashboard collects metric results for the units cc_ControlMode, cc_DriverSwRequest, cc_LightControl, and cc_ThrottleController. Collecting data for a metric requires a license for the product that supports the underlying artifacts, such as Requirements Toolbox™, Simulink Test™, or Simulink Coverage™. After you collect metric results, you need only a Simulink® Check™ license to view the results.
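Steps 1 and 2 can also be scripted. The following is a minimal sketch, assuming that your Simulink Check release provides the `modelTestingDashboard` function. In this sketch, choosing Project Model Testing from the dashboard gallery (step 3) remains a manual step.

```matlab
% Open the example project (the same call as in step 1).
openProject("cc_CruiseControl");

% Open the Model Testing Dashboard for the current project.
% Assumption: modelTestingDashboard is available in your release.
modelTestingDashboard
```

Scripting the first two steps can be convenient when you reopen the same project often, for example from a startup script.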

View Overall Status of Model Testing

The Project Model Testing dashboard can help you see the current status of model testing in your project, identify gaps in model testing and requirements traceability, and navigate directly to artifacts that need development.

View Model Testing Status

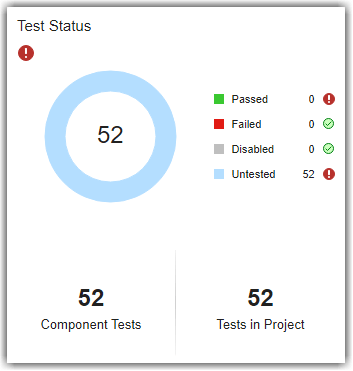

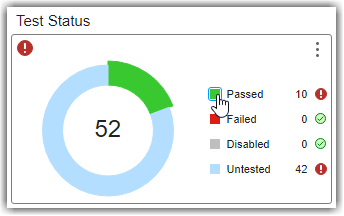

To view an overview of the model testing status for units in your project, you can use the Test Status section of the dashboard. The donut chart widget shows the number of unique tests in the project that Passed, Failed, are Disabled, or are Untested. If you see many untested tests, make sure to review the tests that the dashboard classified as component tests.

The dashboard analyzes the tests in your project to determine which tests directly contribute to the quality of component testing and which tests are for overall project testing. The widget for:

- Component Tests shows the number of unique component tests in the project. Component tests evaluate the component design, or a part of the design, in the same execution context as the component. These tests help assess the quality of the component.

- Tests in Project shows the total number of tests in the project. These tests include component tests, independent tests, and tests that are not associated with a component. Independent tests are tests that can execute independently of the component and can behave differently depending on the conditions specified in the test harness. These tests include tests on libraries, subsystem references, and virtual subsystems. A test might not be associated with any components if the model specified in the test has been deleted or there are models that are not part of a component.

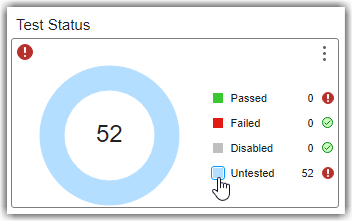

You can directly navigate to the tests that meet these criteria by clicking the widgets in the dashboard. To find out which artifacts contribute to a metric and how the metric calculates the metric results, you can point to a widget and use the Help to view the documentation for the associated metric for the widget.

For example, the tests in the example project are currently untested.

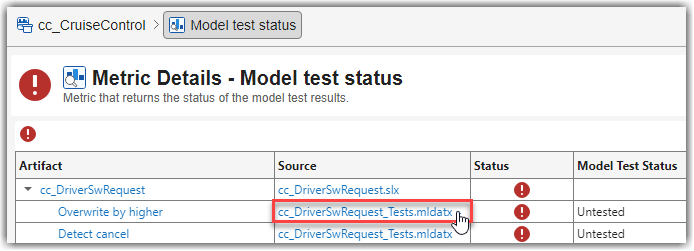

1. View more information about the untested tests in the project by clicking the blue square next to Untested. The dashboard opens a Metric Details table with hyperlinks to the tests and the source test file. In Metric Details, you can click the hyperlinks to open the test or test file directly in Test Manager.

2. Open and run the tests for the cc_DriverSwRequest model. In Metric Details, in the Source column, click the cc_DriverSwRequest_Tests.mldatx link. The test file opens in Test Manager. In the Test Browser, right-click and run cc_DriverSwRequest_Tests.

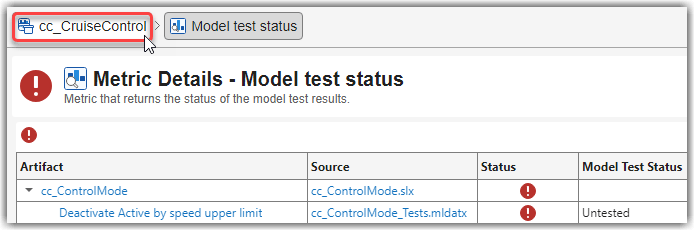

3. After the tests run, return to the Project Model Testing dashboard window. The dashboard automatically detects the new test results and shows a warning banner that the metric results are no longer up to date. Click Collect to update the impacted results in the current Metric Details table. The tests for cc_DriverSwRequest no longer appear in the list of untested tests in Metric Details.

4. Use the breadcrumb trail at the top of the dashboard to navigate from the Metric Details back to the main project metric results for the cc_CruiseControl project.

5. Update the impacted metric results for the overall Project Model Testing dashboard by clicking Collect in the warning banner. In the Test Status section, the donut chart widget shows the progress that you made towards the model testing goals for your project. Now, 10 tests are passing and 42 tests remain untested. Typically, you execute each of the untested tests in the project. For this example, leave the rest of the tests untested.

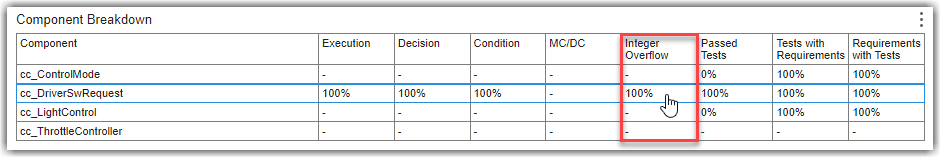

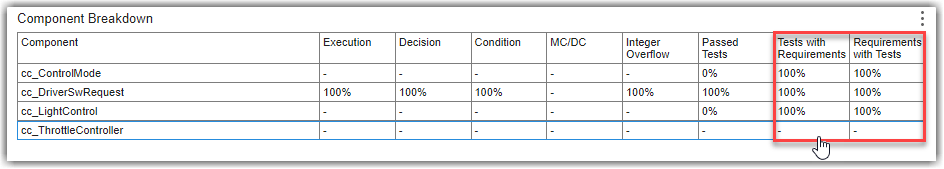

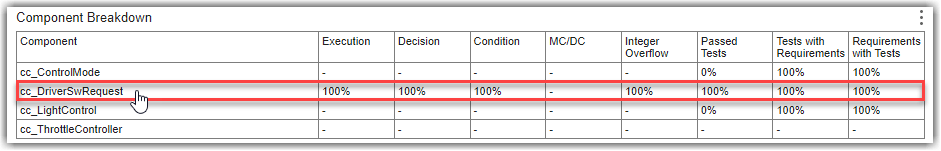

6. View the percentage of tests that passed for each unit. In the Component Breakdown section at the bottom of the dashboard, see the Passed Tests column. Components that have tests show the percentage of tests that passed. For example, the cc_DriverSwRequest model now has 100% of tests passing. Components that do not have component tests that trace to them show a dash (-).

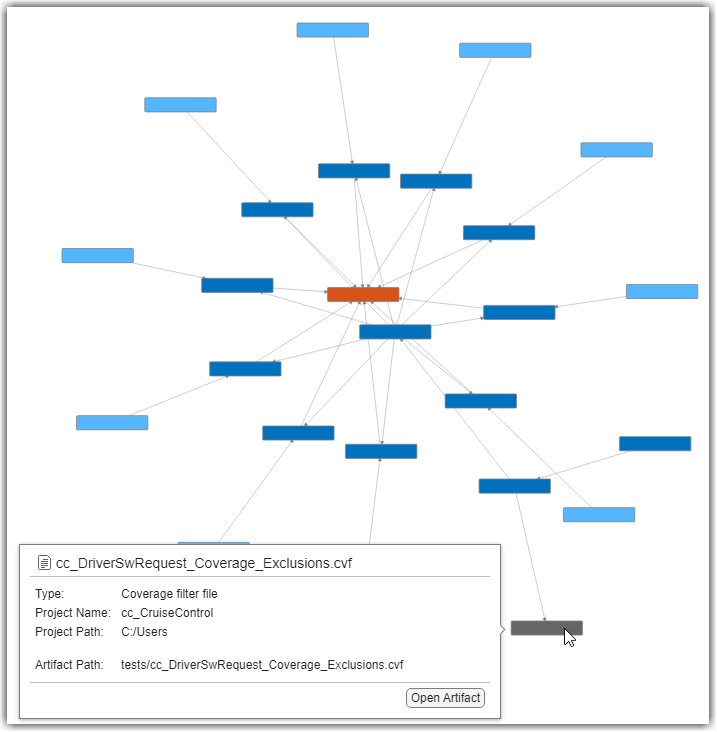

7. View the traceability between the cc_DriverSwRequest model, tests, and results. In the toolstrip, click the Traceability tab, and, in the trace views gallery, click the Tests and Results trace view. In the Project panel, click cc_DriverSwRequest to view the test and result artifacts that trace to cc_DriverSwRequest.

In this example cruise control system, the driver of the vehicle can control the cruise control by using five buttons. These buttons control the activation, cancellation, and vehicle speed settings for the cruise control system. The driver switch request system makes sure that if a driver uses these buttons to request changes to the cruise control mode, the system sends the requests only one time and allows higher priority commands, like canceling the cruise control, to override lower priority requests. The cc_DriverSwRequest model has tests that verify the functionality of driver requests to help make sure that the cruise control system handles driver inputs as expected according to the requirements. For example, there are driver switch request tests that evaluate the functionality implemented for requirements such as making sure the system detects short and long button presses and handling different priority requests from the driver. By running these tests, model developers and test engineers can verify that the model interprets and processes driver inputs as expected, providing the expected interaction between the driver and the vehicle cruise control system.

In the trace view, you can see the test file for the unit, the test suite, the test cases, and the connection between those tests and their individual test results. The trace view also includes relationships to additional artifacts such as coverage filters that impact the test coverage.

You can view more information about an artifact by pointing to the artifact in the trace view work area. You can also automatically rearrange artifacts by using the Layout menu in the toolbar. The Cluster layout option is helpful for visualizing large projects with complex hierarchies.

8. Above the trace view work area, click the Project Model Testing - cc_CruiseControl tab to return to the Project Model Testing dashboard.
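The counts reported in step 5 can be turned into a pass rate with a few lines of arithmetic. This is an illustrative sketch only: the variable names are hypothetical, and it assumes that no tests are failed or disabled, because the example reports only passing and untested tests.

```matlab
% Counts taken from step 5 of this example.
numPassed   = 10;
numUntested = 42;

% Assumption: no failed or disabled tests, so these two counts
% make up the whole donut chart.
numTotal = numPassed + numUntested;

% Share of tests in the project that currently pass.
passRate = 100 * numPassed / numTotal;
fprintf("Passed %d of %d tests (%.1f%%)\n", numPassed, numTotal, passRate);
```

Tracking this ratio over time gives a rough sense of progress toward running every test in the project.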

View Average Model Coverage

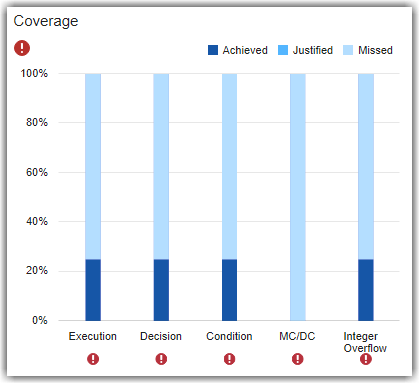

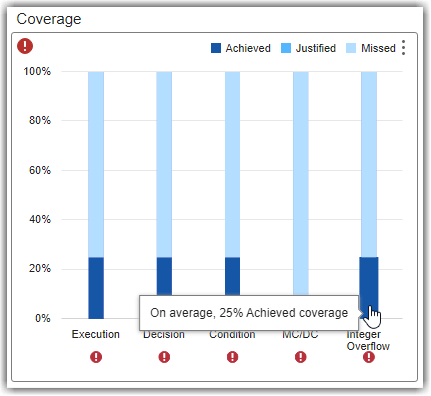

To assess the completeness of model testing across the units in the project, you can view the average coverage in the Coverage section of the dashboard. Each major type of coverage has a coverage bar, divided into sections for Achieved, Justified, and Missed coverage. The Coverage section shows the average percentage of model coverage for the Execution, Decision, Condition, MC/DC, and Integer Overflow coverage types. The metrics average the coverage across the units in the project.

1. View the average achieved integer overflow coverage for the project by pointing to the Achieved part of the Integer Overflow coverage bar. The tooltip shows the average achieved coverage as 25%.

2. View the individual model coverage percentages for each unit in the project by using the Component Breakdown section at the bottom of the dashboard. The unit that you tested, cc_DriverSwRequest, has 100% integer overflow coverage and the other three units do not have coverage results yet. Consequently, the Coverage widget shows 25% average achieved integer overflow coverage.

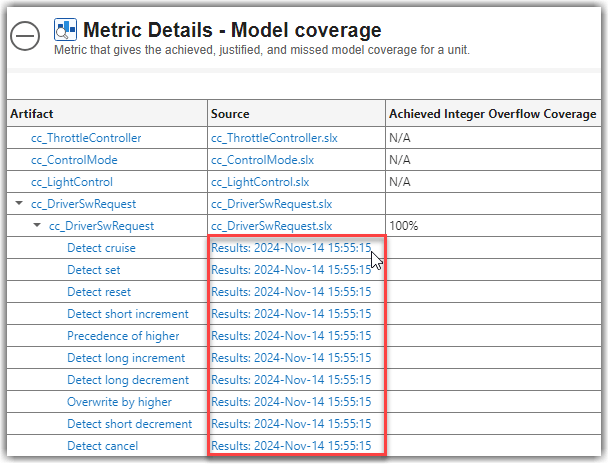

3. Identify the source of integer overflow coverage by clicking the Achieved part of the Integer Overflow bar in the Coverage section. The Metric Details table shows each unit in the project, the sources of model coverage, and the percentage of achieved integer overflow coverage for each unit. For the cc_DriverSwRequest model, the Source column shows the test results that contribute to the 100% achieved integer overflow coverage for the model. You can see that the source of coverage for the cc_DriverSwRequest model is the test results from when you ran the tests earlier in this example. The coverage data for the other units in the project is N/A because you ran only the tests for cc_DriverSwRequest. If you point to the results in the table, the tooltip shows where in the project the test results are.
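The 25% figure follows from averaging across the four units. The sketch below reproduces that arithmetic. It assumes, as the example implies, that units without coverage results contribute 0% to the average. The variable names are hypothetical and the values are typed in by hand rather than read from the dashboard.

```matlab
% Achieved integer overflow coverage per unit, in percent.
% Only cc_DriverSwRequest has results. The other units are treated as 0
% (assumption based on the 25% average shown in this example).
unitNames = ["cc_ControlMode", "cc_DriverSwRequest", ...
             "cc_LightControl", "cc_ThrottleController"];
achieved  = [0, 100, 0, 0];

% Average across the units in the project.
averageAchieved = mean(achieved);
fprintf("Average achieved coverage across %d units: %g%%\n", ...
    numel(unitNames), averageAchieved);
```

Because the average is taken across every unit, a single fully covered unit raises the project figure only by its share of the unit count.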

The aggregated coverage data can help you identify areas where your model tests might not fully execute the model and its functionality and performance across different conditions. You can use these metric results to help you improve test completeness, improve model reliability, and meet industry standards.

View Traceability Between Requirements and Tests

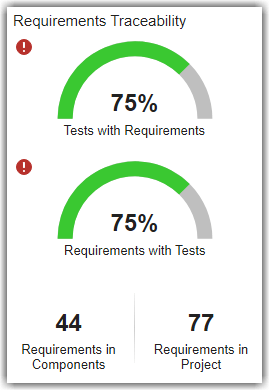

To make sure the requirements for your project have corresponding tests, you can use the Requirements Traceability section of the dashboard. The dial gauges for Tests with Requirements and Requirements with Tests show the connection between the implemented functional requirements and component tests in the project. These metric results show the average percentage across units in the project.

The dashboard analyzes the requirements in your project to determine which requirements directly contribute to the functional implementation of the component and which requirements are for the overall project. The widget for:

- Requirements in Components shows the number of functional requirements that are implemented in components. You can see the requirements under the component that implements them and the source model, requirement, and test files by clicking the widget.

- Requirements in Project shows the number of functional requirements in the project. You can see the individual functional requirements and the associated requirements file that defines those requirements by clicking the widget.

For this example project, the requirements are in the interface control document, system requirements, and software requirements files in the driving assistant specification reference project. By verifying that your requirements and tests are properly linked, you can assess the status of requirements traceability and linkage for your models and maintain industry standards for tracking implemented and functional requirements.

To see the percentages for a specific component, use the Tests with Requirements and Requirements with Tests columns in the Component Breakdown section at the bottom of the dashboard.

In this example project, you can see in the Component Breakdown table that the model cc_ThrottleController does not appear to have any requirements linked to tests or tests linked to requirements. A verification or test engineer needs to develop tests for this model. To see how existing software requirements currently relate to the implementation in the design, you can use the Requirements to Design trace view.

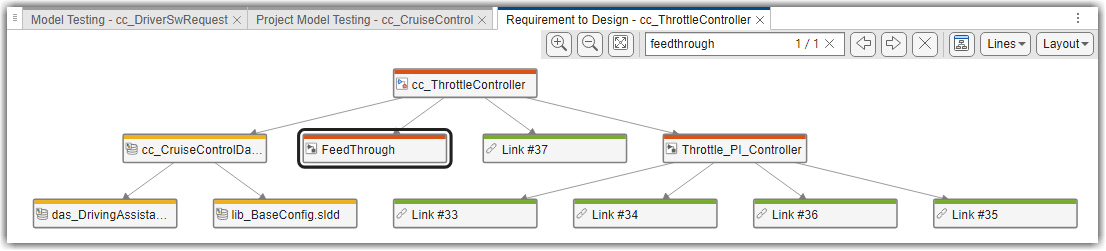

1. In the toolstrip, click the Traceability tab, click the Requirements to Design trace view, and in the Project panel, click cc_ThrottleController. The throttle controller needs to maintain vehicle speed control when the cruise control system is active and allow manual throttle control when the system is inactive. The trace view shows the relationships between the requirements and the subsystems in the model, including individual requirements, links to the main block diagram for the unit, underlying subsystems, and the data dictionaries involved.

2. In the toolstrip for the trace view, search for FeedThrough. The trace view highlights the subsystem.

The throttle controller model contains a FeedThrough subsystem that maintains the throttle signal integrity when the system is inactive. When the cruise control system is not in the ACTIVE mode, the throttle controller must pass the throttle value directly through without modification. The other requirements on the unit apply to the Throttle_PI_Controller subsystem, which applies control algorithms to adjust the throttle value to make sure the value does not exceed maximum or minimum thresholds. The system must calculate the appropriate throttle value when the cruise control system is active so that the throttle value stays within predefined limits. You can see the links to these requirements in the trace view. These requirements need tests to help validate that the throttle controller implements these functional behaviors.

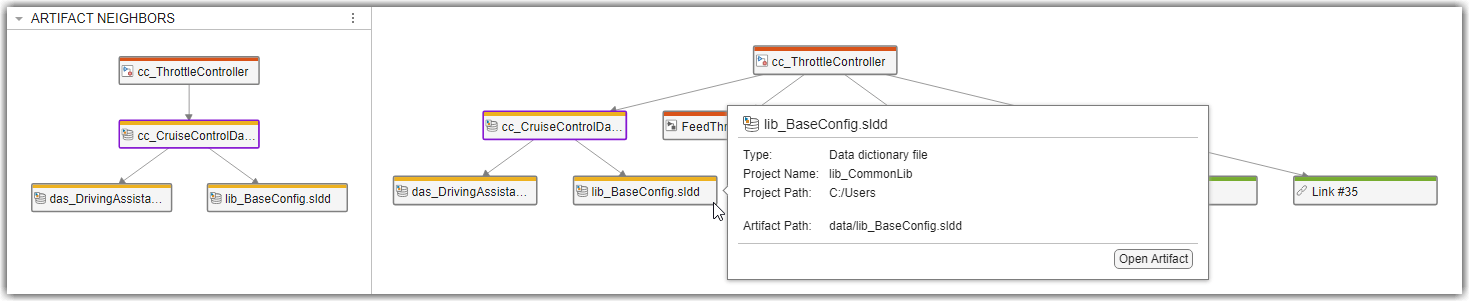

In this example project, the throttle controller model uses cruise control data from a data dictionary file, cc_CruiseControlData.sldd, that requires information from base configuration and driving assistant specification data dictionaries. You can see these data dictionaries in yellow, connected to the main block diagram. If you point to the arrow connecting the main data dictionary to the main block diagram, you can see that the traceability relationship between these artifacts is a REQUIRES relationship.

3. View the direct artifact relationships, such as the direct connections between data dictionaries and model block diagrams, by clicking an artifact and checking the Artifact Neighbors panel. To view detailed information about an artifact, point to the artifact in the trace view.

Identify and Address Gaps in Model Testing

The Project Model Testing dashboard provides an overview of the model testing status for the units in your project. When you identify issues in your model testing and requirements traceability, you can use the links in the dashboard to navigate directly to impacted artifacts.

As you develop your models, requirements, and tests, you can use the Model Testing Dashboard to assess the progress of the requirements-based testing for an individual model. You can open the Model Testing Dashboard for a specific unit by clicking the row for the unit in the Component Breakdown table at the bottom of the dashboard. For more information on the Model Testing Dashboard, see Explore Status and Quality of Testing Activities Using Model Testing Dashboard.

In addition to collecting and viewing metric results in the project and model testing dashboards, you can also collect metric results programmatically by using metric.Engine. For more information on the project model testing metrics, see Project Model Testing Metrics.
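The following is a minimal sketch of programmatic collection with `metric.Engine`, not a definitive workflow. It assumes that the example project is available and that the required product licenses are installed. It collects every metric ID that the engine reports by default, which might not match the set shown in the Project Model Testing dashboard. To collect only specific metrics, replace `metricIds` with the identifiers listed in Project Model Testing Metrics.

```matlab
% Open the example project so the metric engine has a project to analyze.
openProject("cc_CruiseControl");

% Create a metric engine for the current project.
metric_engine = metric.Engine();

% Refresh the artifact information so the engine sees recent changes.
updateArtifacts(metric_engine);

% List the metric identifiers that the engine reports as available.
metricIds = getAvailableMetricIds(metric_engine);
disp(metricIds(:));

% Collect results for those metrics. This step can take some time.
execute(metric_engine, metricIds);

% Retrieve the collected results and print each metric ID with its value.
results = getMetrics(metric_engine, metricIds);
for k = 1:numel(results)
    fprintf("Metric: %s\n", results(k).MetricID);
    disp(results(k).Value);
end
```

A script like this can run as part of an automated build so that metric results are collected without opening the dashboard.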