Enhanced Edge Detection from Noisy Color Video

This example shows how to develop a complex pixel-stream video processing algorithm, accelerate its simulation using MATLAB® Coder™, and generate HDL code from the design. The algorithm enhances the edge detection from noisy color video.

You must have a MATLAB Coder license to run this example.

This example builds on the Pixel-Streaming Design in MATLAB (Vision HDL Toolbox) and the Accelerate Pixel-Streaming Designs Using MATLAB Coder (Vision HDL Toolbox) examples.

Test Bench

In the EnhancedEdgeDetectionHDLTestBench.m file, the videoIn object reads each frame from a color video source, and the imnoise function adds salt and pepper noise. This noisy color image is passed to the frm2pix object, which converts the full image frame to a stream of pixels and control structures. The function EnhancedEdgeDetectionHDLDesign.m is then called to process one pixel (and its associated control structure) at a time. After we process the entire pixel-stream and collect the output stream, the pix2frm object converts the output stream to full-frame video. A full-frame reference design EnhancedEdgeDetectionHDLReference.m is also called to process the noisy color image. Its output is compared with that of the pixel-stream design. The function EnhancedEdgeDetectionHDLViewer.m is called to display video outputs.

The workflow above is implemented in the following lines of EnhancedEdgeDetectionHDLTestBench.m.

    ...
    frmIn = zeros(actLine,actPixPerLine,3,'uint8');
    for f = 1:numFrm
        frmFull = readFrame(videoIn);                  % Get a new frame
        frmIn = imnoise(frmFull,'salt & pepper');      % Add noise

        % Call the pixel-stream design
        [pixInVec,ctrlInVec] = frm2pix(frmIn);
        for p = 1:numPixPerFrm
            [pixOutVec(p),ctrlOutVec(p)] = EnhancedEdgeDetectionHDLDesign(pixInVec(p,:),ctrlInVec(p));
        end
        frmOut = pix2frm(pixOutVec,ctrlOutVec);

        % Call the full-frame reference design
        [frmGray,frmDenoise,frmEdge,frmRef] = EnhancedEdgeDetectionHDLReference(frmIn);

        % Compare the results
        if nnz(imabsdiff(frmRef,frmOut))>20
            fprintf('frame %d: reference and design output differ in more than 20 pixels.\n',f);
            return;
        end

        % Display the results
        EnhancedEdgeDetectionHDLViewer(actPixPerLine,actLine,[frmGray frmDenoise uint8(255*[frmEdge frmOut])],[frmFull frmIn]);
    end
    ...

Since frmGray and frmDenoise are of uint8 data type while frmEdge and frmOut are logical, uint8(255*[frmEdge frmOut]) maps logical false and true to uint8(0) and uint8(255), respectively, so that all four matrices can be concatenated into a single uint8 image.
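The type conversion described above can be tried on a toy input. The following is a minimal sketch; the 2-by-2 matrices are made-up stand-ins for real frames, not data from the example.

```matlab
% Hypothetical 2-by-2 frames standing in for the real video data
frmGray = uint8([10 20; 30 40]);     % grayscale frame, uint8
frmEdge = logical([0 1; 1 0]);       % edge map, logical

% Scale logical false/true to 0/255 and cast to uint8 before concatenating
frmEdgeU8 = uint8(255*frmEdge);
combined  = [frmGray frmEdgeU8];     % 2-by-4 matrix

disp(class(combined))                % uint8
disp(combined)
```

With this scaling, an edge pixel is shown at full intensity (255) next to the grayscale images instead of as a barely visible value of 1.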

Both frm2pix and pix2frm are used to convert between full-frame and pixel-stream domains. The inner for-loop performs pixel-stream processing. The rest of the test bench performs full-frame processing.
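As a rough illustration of the two conversion objects, the sketch below sends a random single-component frame through visionhdl.FrameToPixels and straight back through visionhdl.PixelsToFrame. The '240p' video format and the random test frame are assumptions made for this sketch. In the test bench itself, frm2pix handles three color components and pix2frm handles the single-component edge output.

```matlab
% Sketch only: the video format and the random frame are assumptions
frm2pix = visionhdl.FrameToPixels('NumComponents',1,'VideoFormat','240p');
pix2frm = visionhdl.PixelsToFrame('NumComponents',1,'VideoFormat','240p');

% Query the active frame size and the total pixels per frame (including blanking)
[actPixPerLine,actLine,numPixPerFrm] = getparamfromfrm2pix(frm2pix);

frmIn = randi([0 255],actLine,actPixPerLine,'uint8');

% Full frame -> pixel stream plus one control structure per pixel
[pixVec,ctrlVec] = frm2pix(frmIn);

% Pixel stream -> full frame; frmValid reports whether a complete frame was rebuilt
[frmOut,frmValid] = pix2frm(pixVec,ctrlVec);

% With no processing in between, the round trip should return the input frame
roundTripOk = frmValid && isequal(frmOut,frmIn);
```

Because numPixPerFrm counts blanking intervals as well as active pixels, the inner loop of the test bench runs over more iterations than there are visible pixels in the frame.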

Before the test bench terminates, frame rate is displayed to illustrate the simulation speed.

For the functions that do not support C code generation, such as tic, toc, imnoise, and fprintf in this example, use coder.extrinsic to declare them as extrinsic functions. Extrinsic functions are excluded from MEX generation. The simulation executes them in the regular interpreted mode. Since imnoise is not included in the C code generation process, the compiler cannot infer the data type and size of frmIn. To fill in this missing piece, we add the statement frmIn = zeros(actLine,actPixPerLine,3,'uint8') before the outer for-loop.
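The pattern described above can be sketched in a small standalone function. The function name countNoisyPixels and the 4-by-6 frame size are hypothetical and chosen only for illustration. They are not part of the example files.

```matlab
function n = countNoisyPixels()
%#codegen
% Hypothetical helper illustrating coder.extrinsic; not part of the example.

% Declare imnoise as extrinsic so that generated MEX code hands the call
% to the MATLAB interpreter instead of trying to compile it
coder.extrinsic('imnoise');

frmClean = zeros(4,6,3,'uint8');     % small all-black test frame (assumption)

% Preallocate the result so that its class and size are known to the compiler
frmIn = zeros(4,6,3,'uint8');
frmIn = imnoise(frmClean,'salt & pepper');

% Count the pixels that the noise set to a nonzero value
n = nnz(frmIn);
end
```

In interpreted MATLAB the coder.extrinsic declaration has no effect, so the same function can be run both before and after MEX generation.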

Pixel-Stream Design

The function defined in EnhancedEdgeDetectionHDLDesign.m accepts a pixel stream and a structure consisting of five control signals, and returns a modified pixel stream and control structure. For more information on the streaming pixel protocol used by System objects from the Vision HDL Toolbox, see the Streaming Pixel Interface (Vision HDL Toolbox).

In this example, the rgb2gray object converts a color image to grayscale, medfil removes the salt and pepper noise, and sobel highlights the edges. Finally, the mclose object performs morphological closing to enhance the edge output. The code is shown below.

    [pixGray,ctrlGray] = rgb2gray(pixIn,ctrlIn);           % Convert RGB to grayscale
    [pixDenoise,ctrlDenoise] = medfil(pixGray,ctrlGray);   % Remove noise
    [pixEdge,ctrlEdge] = sobel(pixDenoise,ctrlDenoise);    % Detect edges
    [pixClose,ctrlClose] = mclose(pixEdge,ctrlEdge);       % Apply closing
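For context, the four calls above need the System objects to exist before they are used. One common way to set this up in a design function is with persistent variables, as in the sketch below. The function name edgeDesignSketch is hypothetical, and the property settings (mostly defaults) are assumptions. The shipped EnhancedEdgeDetectionHDLDesign.m may configure the objects differently.

```matlab
function [pixOut,ctrlOut] = edgeDesignSketch(pixIn,ctrlIn)
%#codegen
% Hypothetical sketch; not the shipped EnhancedEdgeDetectionHDLDesign.m.

% Persistent objects keep their line buffers and state between calls,
% which is required because the function is called once per pixel
persistent rgb2gray medfil sobel mclose
if isempty(rgb2gray)
    rgb2gray = visionhdl.ColorSpaceConverter('Conversion','RGB to intensity');
    medfil   = visionhdl.MedianFilter;   % default settings (assumption)
    sobel    = visionhdl.EdgeDetector;   % default settings (assumption)
    mclose   = visionhdl.Closing;        % default neighborhood (assumption)
end

[pixGray,ctrlGray]       = rgb2gray(pixIn,ctrlIn);        % Convert RGB to grayscale
[pixDenoise,ctrlDenoise] = medfil(pixGray,ctrlGray);      % Remove noise
[pixEdge,ctrlEdge]       = sobel(pixDenoise,ctrlDenoise); % Detect edges
[pixOut,ctrlOut]         = mclose(pixEdge,ctrlEdge);      % Apply closing
end
```

Each stage passes its output control structure to the next stage, so the control signals stay aligned with the pixel data as latency accumulates through the chain.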

Full-Frame Reference Design

When designing a complex pixel-stream video processing algorithm, it is a good practice to develop a parallel reference design using functions from the Image Processing Toolbox™. These functions process full image frames. Such a reference design helps verify the implementation of the pixel-stream design by comparing the output image from the full-frame reference design to the output of the pixel-stream design.

The function EnhancedEdgeDetectionHDLReference.m contains a set of four functions that mirrors the four System objects in EnhancedEdgeDetectionHDLDesign.m. The key difference is that the functions from Image Processing Toolbox process full-frame data.

Because of implementation differences between the edge function and the visionhdl.EdgeDetector System object, the reference and design outputs are considered a match if frmOut and frmRef differ in no more than 20 pixels.
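A full-frame chain of this kind, together with the 20-pixel tolerance check, might look like the sketch below. The parameter choices (3-by-3 median window, default Sobel threshold, 3-by-3 closing neighborhood) and the use of peppers.png as an input are assumptions for illustration. They are not taken from EnhancedEdgeDetectionHDLReference.m.

```matlab
% Sketch only: peppers.png ships with MATLAB and stands in for a video frame
frmFull = imread('peppers.png');
frmIn   = imnoise(frmFull,'salt & pepper');   % Add noise

% Full-frame counterparts of the four pixel-stream stages
frmGray    = rgb2gray(frmIn);                 % Convert RGB to grayscale
frmDenoise = medfilt2(frmGray,[3 3]);         % Remove noise (window size assumed)
frmEdge    = edge(frmDenoise,'sobel');        % Detect edges (default threshold)
frmRef     = imclose(frmEdge,ones(3));        % Apply closing (neighborhood assumed)

% Placeholder: in the test bench, frmOut comes from the pixel-stream design
frmOut = frmRef;

% Count the differing pixels and apply the 20-pixel tolerance
numDiff = nnz(imabsdiff(frmRef,frmOut));
isMatch = numDiff <= 20;
```

Counting differing pixels rather than requiring exact equality lets the comparison absorb small discrepancies between the two edge detectors without hiding gross errors.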

Create MEX File and Simulate the Design

Generate and execute the MEX file.

codegen('EnhancedEdgeDetectionHDLTestBench');

Code generation successful.

EnhancedEdgeDetectionHDLTestBench_mex;

frame 1: reference and design output differ in more than 20 pixels.

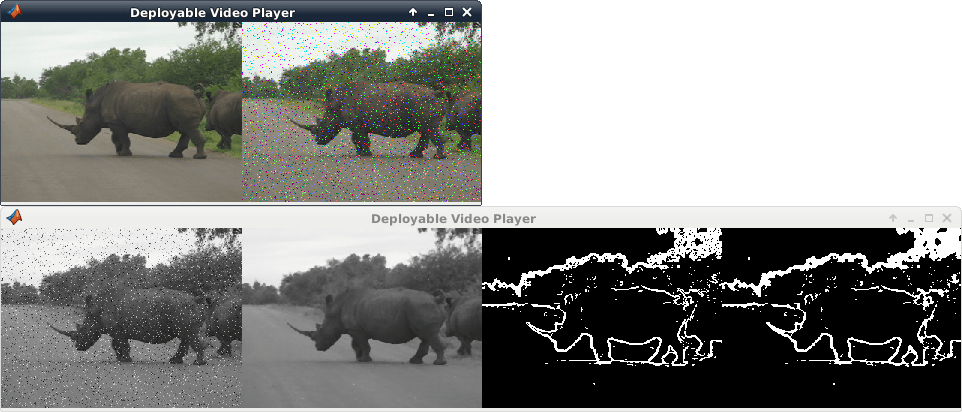

The upper video player displays the original color video on the left, and its noisy version after adding salt and pepper noise on the right. The lower video player, from left to right, represents: the grayscale image after color space conversion, the de-noised version after median filter, the edge output after edge detection, and the enhanced edge output after morphological closing operation.

Note that in the lower video chain, only the enhanced edge output (right-most video) is generated from the pixel-stream design. The other three are intermediate videos from the full-frame reference design. To display all four videos from the pixel-stream design, you would have to write the design file to output four sets of pixels and control signals, and instantiate three more visionhdl.PixelsToFrame objects to convert the three intermediate pixel streams back to frames. For the sake of simulation speed and code clarity, this example does not implement the intermediate pixel-stream displays.

HDL Code Generation

To create a new project, enter the following command in the temporary folder:

coder -hdlcoder -new EnhancedEdgeDetectionProject

Then, add the file 'EnhancedEdgeDetectionHDLDesign.m' to the project as the MATLAB Function and 'EnhancedEdgeDetectionHDLTestBench.m' as the MATLAB Test Bench.

Refer to Get Started with MATLAB to HDL Workflow for a tutorial on creating and populating MATLAB HDL Coder projects.

Launch the Workflow Advisor. In the Workflow Advisor, right-click the 'Code Generation' step. Choose the option 'Run to selected task' to run all the steps from the beginning through HDL code generation.

Examine the generated HDL code by clicking the links in the log window.