Modulation Classification by Using FPGA

This example shows how to deploy a pretrained convolutional neural network (CNN) for modulation classification to the Xilinx™ Zynq® UltraScale+™ MPSoC ZCU102 Evaluation Kit. The network was trained on synthetically generated, channel-impaired waveforms.

Prerequisites

Deep Learning Toolbox™

Deep Learning HDL Toolbox™

Deep Learning HDL Toolbox™ Support Package for Xilinx FPGA and SoC

Communications Toolbox™

Xilinx™ Zynq® UltraScale+™ MPSoC ZCU102 Evaluation Kit

Predict Modulation Type by Using CNN

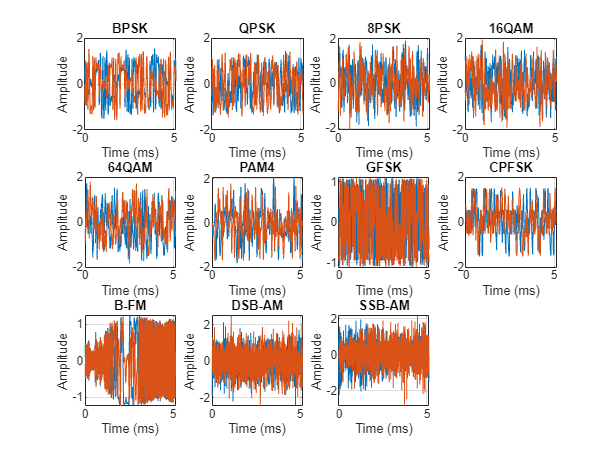

The trained CNN in this example recognizes these eight digital and three analog modulation types:

Binary phase shift keying (BPSK)

Quadrature phase shift keying (QPSK)

8-ary phase shift keying (8-PSK)

16-ary quadrature amplitude modulation (16-QAM)

64-ary quadrature amplitude modulation (64-QAM)

4-ary pulse amplitude modulation (PAM4)

Gaussian frequency shift keying (GFSK)

Continuous phase frequency shift keying (CPFSK)

Broadcast FM (B-FM)

Double sideband amplitude modulation (DSB-AM)

Single sideband amplitude modulation (SSB-AM)

modulationTypes = categorical(["BPSK", "QPSK", "8PSK", ...
    "16QAM", "64QAM", "PAM4", "GFSK", "CPFSK", ...
    "B-FM", "DSB-AM", "SSB-AM"]);

Load the trained network.

load dlhdltrainedModulationClassificationNetwork

trainedNet

trainedNet =

SeriesNetwork with properties:

Layers: [28×1 nnet.cnn.layer.Layer]

InputNames: {'Input Layer'}

OutputNames: {'Output'}

The trained CNN takes 1024 channel-impaired samples and predicts the modulation type of each frame. Generate several PAM4 frames that have Rician multipath fading, center frequency and sampling time drift, and AWGN. To generate synthetic signals to test the CNN, use the following functions. Then use the CNN to predict the modulation type of the frames.

randi: Generate random bits

pammod (Communications Toolbox): PAM4-modulate the bits

rcosdesign (Signal Processing Toolbox): Design a square-root raised cosine pulse shaping filter

filter: Pulse shape the symbols

comm.RicianChannel (Communications Toolbox): Apply Rician multipath channel

comm.PhaseFrequencyOffset (Communications Toolbox): Apply phase and frequency shift due to clock offset

interp1: Apply timing drift due to clock offset

awgn (Communications Toolbox): Add AWGN

% Set the random number generator to a known state to be able to regenerate
% the same frames every time the simulation is run
rng(123456)
% Random bits
d = randi([0 3], 1024, 1);
% PAM4 modulation
syms = pammod(d,4);
% Square-root raised cosine filter
filterCoeffs = rcosdesign(0.35,4,8);
tx = filter(filterCoeffs,1,upsample(syms,8));
% Channel
SNR = 30;
maxOffset = 5;
fc = 902e6;
fs = 200e3;
multipathChannel = comm.RicianChannel(...
    'SampleRate', fs, ...
    'PathDelays', [0 1.8 3.4] / 200e3, ...
    'AveragePathGains', [0 -2 -10], ...
    'KFactor', 4, ...
    'MaximumDopplerShift', 4);
frequencyShifter = comm.PhaseFrequencyOffset(...
    'SampleRate', fs);
% Apply an independent multipath channel
reset(multipathChannel)
outMultipathChan = multipathChannel(tx);
% Determine clock offset factor
clockOffset = (rand() * 2*maxOffset) - maxOffset;
C = 1 + clockOffset / 1e6;
% Add frequency offset
frequencyShifter.FrequencyOffset = -(C-1)*fc;
outFreqShifter = frequencyShifter(outMultipathChan);
% Add sampling time drift
t = (0:length(tx)-1)' / fs;
newFs = fs * C;
tp = (0:length(tx)-1)' / newFs;
outTimeDrift = interp1(t, outFreqShifter, tp);
% Add noise
rx = awgn(outTimeDrift,SNR,0);
% Frame generation for classification
unknownFrames = dlhdlhelperModClassGetNNFrames(rx);
% Classification
[prediction1,score1] = classify(trainedNet,unknownFrames);

Return the classifier predictions, which are analogous to hard decisions. The network correctly identifies the frames as PAM4 frames. For details on the generation of the modulated signals, see the dlhdlhelperModClassGetModulator function.

The classifier also returns a vector of scores for each frame. The score corresponds to the probability that each frame has the predicted modulation type. Plot the scores.

dlhdlhelperModClassPlotScores(score1,modulationTypes)

Waveform Generation for Training

Generate 10,000 frames for each modulation type, where 80% of the frames are used for training, 10% are used for validation and 10% are used for testing. Use the training and validation frames during the network training phase. You obtain the final classification accuracy by using test frames. Each frame is 1024 samples long and has a sample rate of 200 kHz. For digital modulation types, eight samples represent a symbol. The network makes each decision based on single frames rather than on multiple consecutive frames (as in video). Assume a center frequency of 902 MHz and 100 MHz for the digital and analog modulation types, respectively.

numFramesPerModType = 10000;
percentTrainingSamples = 80;
percentValidationSamples = 10;
percentTestSamples = 10;
sps = 8;                % Samples per symbol
spf = 1024;             % Samples per frame
symbolsPerFrame = spf / sps;
fs = 200e3;             % Sample rate
fc = [902e6 100e6];     % Center frequencies

Create Channel Impairments

Pass each frame through a channel by using:

AWGN

Rician multipath fading

Clock offset, resulting in center frequency offset and sampling time drift

Because the network in this example makes decisions based on single frames, each frame must pass through an independent channel.

AWGN

The channel adds AWGN by using an SNR of 30 dB. Implement the channel by using the awgn (Communications Toolbox) function.
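The noise-addition step can be sketched in base MATLAB as follows. This is a minimal illustration, not the toolbox implementation: it assumes, as in the earlier awgn(outTimeDrift,SNR,0) call, that the signal power is taken to be 0 dBW. The test signal and all variable names are illustrative assumptions.

```matlab
% Sketch: add complex white Gaussian noise at a given SNR, assuming the
% signal power is 0 dBW (1 W), as in awgn(x,SNR,0).
rng(0)                                  % reproducible noise for the sketch
SNR = 30;                               % target SNR in dB
N = 1e5;                                % number of samples (illustrative)
x = exp(1j*2*pi*rand(N,1));             % unit-power test signal (assumption)
noisePower = 10^(-SNR/10);              % linear noise power relative to 1 W
% Split the noise power equally between the real and imaginary parts
noise = sqrt(noisePower/2) * (randn(N,1) + 1j*randn(N,1));
rxSketch = x + noise;
% Measure the SNR that was actually realized
measuredSNR = 10*log10(mean(abs(x).^2) / mean(abs(noise).^2));
fprintf('Measured SNR: %.2f dB\n', measuredSNR)
```

The measured value should land close to the 30 dB target, with a small deviation that shrinks as the number of samples grows.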

Rician Multipath

The channel passes the signals through a Rician multipath fading channel by using the comm.RicianChannel (Communications Toolbox) System object. Assume a delay profile of [0 1.8 3.4] samples that have corresponding average path gains of [0 -2 -10] dB. The K-factor is 4 and the maximum Doppler shift is 4 Hz, which is equivalent to a walking speed at 902 MHz. Implement the channel by using these settings.
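The walking-speed claim and the conversion of the delay profile from samples to seconds can be checked with a few lines of arithmetic. This sketch assumes the standard relation between maximum Doppler shift and speed, f_D = v*fc/c. The variable names are illustrative.

```matlab
% Worked arithmetic for the Rician channel settings quoted above.
c = 299792458;                    % speed of light in m/s
fcDigital = 902e6;                % center frequency in Hz
fD = 4;                           % maximum Doppler shift in Hz
v = fD * c / fcDigital;           % speed that produces this Doppler shift, m/s
fs = 200e3;                       % sample rate in Hz
pathDelaysSec = [0 1.8 3.4] / fs; % delay profile converted from samples to seconds
fprintf('Speed: %.2f m/s (%.1f km/h)\n', v, 3.6*v)
fprintf('Path delays (s): %s\n', mat2str(pathDelaysSec))
```

The resulting speed is a little over 1.3 m/s, which is a typical walking pace, and the delays in seconds match the PathDelays values that the channel object displays.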

Clock Offset

Clock offset occurs because of the inaccuracies of internal clock sources of transmitters and receivers. Clock offset causes the center frequency, which is used to downconvert the signal to baseband, and the digital-to-analog converter sampling rate to differ from theoretical values. The channel simulator uses the clock offset factor $C$, expressed as $C = 1 + \frac{\Delta_{\text{clock}}}{10^{6}}$, where $\Delta_{\text{clock}}$ is the clock offset. For each frame, the channel generates a random $\Delta_{\text{clock}}$ value from a uniformly distributed set of values in the range $[-\max\Delta_{\text{clock}},\ \max\Delta_{\text{clock}}]$, where $\max\Delta_{\text{clock}}$ is the maximum clock offset. Clock offset is measured in parts per million (ppm). For this example, assume a maximum clock offset of 5 ppm.

maxDeltaOff = 5;
deltaOff = (rand()*2*maxDeltaOff) - maxDeltaOff;
C = 1 + (deltaOff/1e6);

Frequency Offset

Subject each frame to a frequency offset based on clock offset factor C and the center frequency. Implement the channel by using the comm.PhaseFrequencyOffset (Communications Toolbox) System object.
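The underlying operation can be sketched in base MATLAB as multiplication by a complex exponential. This is an illustration of the idea under stated assumptions, not the comm.PhaseFrequencyOffset implementation: the 2.5 ppm clock offset and the constant test signal are made up for the sketch, and the sign convention follows the -(C-1)*fc expression used earlier in this example.

```matlab
% Sketch: apply a frequency offset derived from the clock offset factor C.
fs = 200e3;                        % sample rate in Hz
fc = 902e6;                        % center frequency in Hz
C = 1 + 2.5/1e6;                   % clock offset factor for 2.5 ppm (assumption)
fOffset = -(C-1)*fc;               % frequency offset in Hz
n = (0:1023)';                     % sample indices for one frame
x = ones(1024,1);                  % constant baseband test signal (assumption)
y = x .* exp(1j*2*pi*fOffset*n/fs);
% Recover the applied offset from the average phase step between samples
fEst = angle(sum(y(2:end).*conj(y(1:end-1)))) * fs/(2*pi);
fprintf('Applied %.1f Hz, estimated %.1f Hz\n', fOffset, fEst)
```

Because the offset is a small fraction of the sample rate, the phase step per sample stays well inside the unambiguous range of angle, so the estimate recovers the applied value.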

Sampling Rate Offset

Subject each frame to a sampling rate offset based on clock offset factor C. Implement the channel by using the interp1 function to resample the frame at the new rate of C×fs.
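The resampling step can be tried in isolation with interp1, following the same pattern as the earlier PAM4 test code. The 1 kHz test tone and the worst-case 5 ppm offset are assumptions chosen for this sketch.

```matlab
% Sketch: sampling time drift by resampling one frame at the rate C*fs.
fs = 200e3;                          % nominal sample rate in Hz
C = 1 + 5/1e6;                       % clock offset factor for +5 ppm
x = cos(2*pi*1e3*(0:1023)'/fs);      % 1 kHz test tone (assumption)
t  = (0:length(x)-1)' / fs;          % nominal sample instants
tp = (0:length(x)-1)' / (fs*C);      % sample instants at the drifted rate
y = interp1(t, x, tp);               % linear interpolation by default
driftLastSample = t(end) - tp(end);  % accumulated timing drift in seconds
fprintf('Drift at the last sample: %.3g s\n', driftLastSample)
```

With C greater than 1, every new sample instant lies inside the original time span. With C less than 1, the last instants fall beyond t(end), where interp1 returns NaN by default for linear interpolation, so that case needs separate handling.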

Combined Channel

To apply all three channel impairments to the frames, use the dlhdlhelperModClassTestChannel object.

channel = dlhdlhelperModClassTestChannel(...
    'SampleRate', fs, ...
    'SNR', SNR, ...
    'PathDelays', [0 1.8 3.4] / fs, ...
    'AveragePathGains', [0 -2 -10], ...
    'KFactor', 4, ...
    'MaximumDopplerShift', 4, ...
    'MaximumClockOffset', 5, ...
    'CenterFrequency', 902e6)

channel =

dlhdlhelperModClassTestChannel with properties:

SNR: 30

CenterFrequency: 902000000

SampleRate: 200000

PathDelays: [0 9.0000e-06 1.7000e-05]

AveragePathGains: [0 -2 -10]

KFactor: 4

MaximumDopplerShift: 4

MaximumClockOffset: 5

You can view basic information about the channel by using the info object function.

chInfo = info(channel)

chInfo = struct with fields:

ChannelDelay: 6

MaximumFrequencyOffset: 4510

MaximumSampleRateOffset: 1
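The two offset limits that info reports follow directly from the 5 ppm maximum clock offset. The arithmetic below reproduces them. The variable names are illustrative.

```matlab
% Worked arithmetic: limits implied by a 5 ppm maximum clock offset.
maxClockOffsetPpm = 5;                                   % ppm
fcDigital = 902e6;                                       % center frequency in Hz
fs = 200e3;                                              % sample rate in Hz
maxFreqOffset = maxClockOffsetPpm*1e-6 * fcDigital;      % Hz
maxSampleRateOffset = maxClockOffsetPpm*1e-6 * fs;       % Hz
fprintf('Maximum frequency offset: %g Hz\n', maxFreqOffset)
fprintf('Maximum sample rate offset: %g Hz\n', maxSampleRateOffset)
```

These values agree with the MaximumFrequencyOffset and MaximumSampleRateOffset fields shown in the chInfo output.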

Waveform Generation

Create a loop that generates channel-impaired frames for each modulation type and stores the frames with their corresponding labels in MAT files. By saving the data into files, you eliminate the need to generate the data every time you run this example. You can also share the data more effectively.

Remove a random number of samples from the beginning of each frame to remove transients and to make sure that the frames have a random starting point with respect to the symbol boundaries.

% Set the random number generator to a known state to be able to regenerate
% the same frames every time the simulation is run
rng(1235)
tic
numModulationTypes = length(modulationTypes);
channelInfo = info(channel);
transDelay = 50;
dataDirectory = fullfile(tempdir,"ModClassDataFiles");
disp("Data file directory is " + dataDirectory);
fileNameRoot = "frame";
% Check if data files exist
dataFilesExist = false;
if exist(dataDirectory,'dir')
  files = dir(fullfile(dataDirectory,sprintf("%s*",fileNameRoot)));
  if length(files) == numModulationTypes*numFramesPerModType
    dataFilesExist = true;
  end
end
if ~dataFilesExist
  disp("Generating data and saving in data files...")
  [success,msg,msgID] = mkdir(dataDirectory);
  if ~success
    error(msgID,msg)
  end
  for modType = 1:numModulationTypes
    elapsedTime = seconds(toc);
    elapsedTime.Format = 'hh:mm:ss';
    fprintf('%s - Generating %s frames\n', ...
      elapsedTime, modulationTypes(modType))
    label = modulationTypes(modType);
    numSymbols = (numFramesPerModType / sps);
    dataSrc = dlhdlhelperModClassGetSource(modulationTypes(modType), sps, 2*spf, fs);
    modulator = dlhdlhelperModClassGetModulator(modulationTypes(modType), sps, fs);
    if contains(char(modulationTypes(modType)), {'B-FM','DSB-AM','SSB-AM'})
      % Analog modulation types use a center frequency of 100 MHz
      channel.CenterFrequency = 100e6;
    else
      % Digital modulation types use a center frequency of 902 MHz
      channel.CenterFrequency = 902e6;
    end
    for p=1:numFramesPerModType
      % Generate random data
      x = dataSrc();
      % Modulate
      y = modulator(x);
      % Pass through independent channels
      rxSamples = channel(y);
      % Remove transients from the beginning, trim to size, and normalize
      frame = dlhdlhelperModClassFrameGenerator(rxSamples, spf, spf, transDelay, sps);
      % Save data file
      fileName = fullfile(dataDirectory,...
        sprintf("%s%s%03d",fileNameRoot,modulationTypes(modType),p));
      save(fileName,"frame","label")
    end
  end
else
  disp("Data files exist. Skip data generation.")
end

Generating data and saving in data files...

00:00:00 - Generating BPSK frames

00:01:09 - Generating QPSK frames

00:02:18 - Generating 8PSK frames

00:03:27 - Generating 16QAM frames

00:04:38 - Generating 64QAM frames

00:05:49 - Generating PAM4 frames

00:06:58 - Generating GFSK frames

00:08:10 - Generating CPFSK frames

00:09:22 - Generating B-FM frames

00:10:53 - Generating DSB-AM frames

00:12:06 - Generating SSB-AM frames

% Plot the amplitude of the real and imaginary parts of the example frames
% against the sample number
dlhdlhelperModClassPlotTimeDomain(dataDirectory,modulationTypes,fs)

% Plot the spectrogram of the example frames

dlhdlhelperModClassPlotSpectrogram(dataDirectory,modulationTypes,fs,sps)

Create a Datastore

To manage the files that contain the generated complex waveforms, use a signalDatastore object. Datastores are especially useful when each individual file fits in memory, but the entire collection does not necessarily fit.

frameDS = signalDatastore(dataDirectory,'SignalVariableNames',["frame","label"]);

Transform Complex Signals to Real Arrays

The deep learning network in this example expects real inputs, while the received signal has complex baseband samples. Transform the complex signals into real-valued 4-D arrays. The output frames have size 1-by-spf-by-2-by-N, where the first page (3rd dimension) is in-phase samples and the second page is quadrature samples. When the convolutional filters are of size 1-by-spf, this approach ensures that the information in the I and Q is mixed even in the convolutional layers and makes better use of the phase information. See dlhdlhelperModClassIQAsPages.

frameDSTrans = transform(frameDS,@dlhdlhelperModClassIQAsPages);
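The transform for a single frame can be sketched in base MATLAB as follows. This is a guess at the behavior the text describes, not the source of dlhdlhelperModClassIQAsPages. It assumes that each stored frame is a complex column vector of spf samples, and the random test frame is made up.

```matlab
% Sketch: turn one complex frame into a real 1-by-spf-by-2 array,
% with in-phase samples on page 1 and quadrature samples on page 2.
rng(0)
spf = 1024;                                   % samples per frame
x = complex(randn(spf,1), randn(spf,1));      % one complex frame (assumption)
y = cat(3, real(x).', imag(x).');             % pages along the 3rd dimension
disp(size(y))
```

Stacking N such arrays along the fourth dimension gives the 1-by-spf-by-2-by-N layout that the network input expects.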

Split into Training, Validation, and Test

Divide the frames into training, validation, and test data. See dlhdlhelperModClassSplitData.

splitPercentages = [percentTrainingSamples,percentValidationSamples,percentTestSamples];
[trainDSTrans,validDSTrans,testDSTrans] = dlhdlhelperModClassSplitData(frameDSTrans,splitPercentages);

Starting parallel pool (parpool) using the 'Processes' profile ...

10-Jan-2024 10:09:39: Job Queued. Waiting for parallel pool job with ID 1 to start ...

Connected to parallel pool with 6 workers.
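To make the 80/10/10 split concrete, here is a sketch that partitions the frame indices of one modulation type with a random permutation. It only illustrates the proportions and is not the implementation of dlhdlhelperModClassSplitData. All names are hypothetical.

```matlab
% Sketch: split the frame indices of one modulation type 80/10/10.
rng(0)
numFrames = 10000;                        % frames per modulation type
splitPercentages = [80 10 10];            % training, validation, test
idx = randperm(numFrames);                % shuffle the frame indices
numTrain = numFrames*splitPercentages(1)/100;
numValid = numFrames*splitPercentages(2)/100;
trainIdx = idx(1:numTrain);
validIdx = idx(numTrain+1:numTrain+numValid);
testIdx  = idx(numTrain+numValid+1:end);
fprintf('%d training, %d validation, %d test frames\n', ...
    numel(trainIdx), numel(validIdx), numel(testIdx))
```

With 11 modulation types, the same proportions give 88,000 training frames and 11,000 frames each for validation and test.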

Import Data Into Memory

Neural network training is iterative. At every iteration, the datastore reads data from files and transforms the data before updating the network coefficients. If the data fits into the memory of your computer, importing the data from the files into the memory enables faster training by eliminating this repeated read from file and transform process. Instead, the data is read from the files and transformed once. Training this network using data files on disk takes about 110 minutes while training using in-memory data takes about 50 minutes.

Import the data in the files into memory. The files have two variables: frame and label. Each read call to the datastore returns a cell array, where the first element is the frame and the second element is the label. To read frames and labels, use the transform functions dlhdlhelperModClassReadFrame and dlhdlhelperModClassReadLabel. If you have a Parallel Computing Toolbox license, use readall with the "UseParallel" option set to true to enable parallel processing of the transform functions. Because the readall function, by default, concatenates the output of the read function over the first dimension, return the frames in a cell array and manually concatenate over the fourth dimension.

% Read the training and validation frames into the memory
pctExists = parallelComputingLicenseExists();
trainFrames = transform(trainDSTrans, @dlhdlhelperModClassReadFrame);
rxTrainFrames = readall(trainFrames,"UseParallel",pctExists);
rxTrainFrames = cat(4, rxTrainFrames{:});
validFrames = transform(validDSTrans, @dlhdlhelperModClassReadFrame);
rxValidFrames = readall(validFrames,"UseParallel",pctExists);
rxValidFrames = cat(4, rxValidFrames{:});
% Read the training and validation labels into the memory
trainLabels = transform(trainDSTrans, @dlhdlhelperModClassReadLabel);
rxTrainLabels = readall(trainLabels,"UseParallel",pctExists);
validLabels = transform(validDSTrans, @dlhdlhelperModClassReadLabel);
rxValidLabels = readall(validLabels,"UseParallel",pctExists);
% Read the test frames into the memory
testFrames = transform(testDSTrans, @dlhdlhelperModClassReadFrame);
rxTestFrames = readall(testFrames,"UseParallel",pctExists);
rxTestFrames = cat(4, rxTestFrames{:});
% Read the test labels into the memory
YPred = transform(testDSTrans, @dlhdlhelperModClassReadLabel);
rxTestLabels = readall(YPred,"UseParallel",pctExists);
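The reason for the manual concatenation over the fourth dimension can be seen with a small stand-in for the cell array that readall returns. The random frames here are placeholders.

```matlab
% Sketch: stack 1-by-spf-by-2 frames held in a cell array.
spf = 1024;                           % samples per frame
numFrames = 3;                        % small count for illustration
frames = cell(numFrames,1);
for k = 1:numFrames
    frames{k} = rand(1,spf,2);        % placeholder for one transformed frame
end
stacked = cat(4, frames{:});          % 1-by-spf-by-2-by-numFrames
firstDim = cat(1, frames{:});         % numFrames-by-spf-by-2
disp(size(stacked))
disp(size(firstDim))
```

Concatenating over the first dimension would merge the frame index into the height dimension of the network input, whereas the fourth dimension keeps each frame as a separate observation.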

Create Target Object

Create a target object for your target device that has a vendor name and an interface to connect your target device to the host computer. Interface options are JTAG (default) and Ethernet. Vendor options are Intel or Xilinx. To program the device, use the installed Xilinx Vivado Design Suite over an Ethernet connection.

hT = dlhdl.Target('Xilinx', Interface = 'Ethernet');

Create Workflow Object

Create an object of the dlhdl.Workflow class. When you create the object, specify the network and the bitstream name. Specify the saved pretrained series network trainedNet as the network. Make sure that the bitstream name matches the data type and the FPGA board that you are targeting. In this example, the target FPGA board is the Zynq UltraScale+ MPSoC ZCU102 board. The bitstream uses a single data type.

hW = dlhdl.Workflow(Network = trainedNet, Bitstream = 'zcu102_single', Target = hT);

Compile trainedModulationClassification Network

To compile the trainedNet series network, run the compile function of the dlhdl.Workflow object.

compile(hW)

### Compiling network for Deep Learning FPGA prototyping ...

### Targeting FPGA bitstream zcu102_single.

### Optimizing network: Fused 'nnet.cnn.layer.BatchNormalizationLayer' into 'nnet.cnn.layer.Convolution2DLayer'

### Optimizing network: Non-symmetric stride of layer with name 'MaxPool1' made symmetric as it produces an equivalent result, and only symmetric strides are supported.

### Optimizing network: Non-symmetric stride of layer with name 'MaxPool2' made symmetric as it produces an equivalent result, and only symmetric strides are supported.

### Optimizing network: Non-symmetric stride of layer with name 'MaxPool3' made symmetric as it produces an equivalent result, and only symmetric strides are supported.

### Optimizing network: Non-symmetric stride of layer with name 'MaxPool4' made symmetric as it produces an equivalent result, and only symmetric strides are supported.

### Optimizing network: Non-symmetric stride of layer with name 'MaxPool5' made symmetric as it produces an equivalent result, and only symmetric strides are supported.

### The network includes the following layers:

1 'Input Layer' Image Input 1×1024×2 images (SW Layer)

2 'CNN1' 2-D Convolution 16 1×8×2 convolutions with stride [1 1] and padding 'same' (HW Layer)

3 'ReLU1' ReLU ReLU (HW Layer)

4 'MaxPool1' 2-D Max Pooling 1×2 max pooling with stride [2 2] and padding [0 0 0 0] (HW Layer)

5 'CNN2' 2-D Convolution 24 1×8×16 convolutions with stride [1 1] and padding 'same' (HW Layer)

6 'ReLU2' ReLU ReLU (HW Layer)

7 'MaxPool2' 2-D Max Pooling 1×2 max pooling with stride [2 2] and padding [0 0 0 0] (HW Layer)

8 'CNN3' 2-D Convolution 32 1×8×24 convolutions with stride [1 1] and padding 'same' (HW Layer)

9 'ReLU3' ReLU ReLU (HW Layer)

10 'MaxPool3' 2-D Max Pooling 1×2 max pooling with stride [2 2] and padding [0 0 0 0] (HW Layer)

11 'CNN4' 2-D Convolution 48 1×8×32 convolutions with stride [1 1] and padding 'same' (HW Layer)

12 'ReLU4' ReLU ReLU (HW Layer)

13 'MaxPool4' 2-D Max Pooling 1×2 max pooling with stride [2 2] and padding [0 0 0 0] (HW Layer)

14 'CNN5' 2-D Convolution 64 1×8×48 convolutions with stride [1 1] and padding 'same' (HW Layer)

15 'ReLU5' ReLU ReLU (HW Layer)

16 'MaxPool5' 2-D Max Pooling 1×2 max pooling with stride [2 2] and padding [0 0 0 0] (HW Layer)

17 'CNN6' 2-D Convolution 96 1×8×64 convolutions with stride [1 1] and padding 'same' (HW Layer)

18 'ReLU6' ReLU ReLU (HW Layer)

19 'AP1' 2-D Average Pooling 1×32 average pooling with stride [1 1] and padding [0 0 0 0] (HW Layer)

20 'FC1' Fully Connected 11 fully connected layer (HW Layer)

21 'SoftMax' Softmax softmax (SW Layer)

22 'Output' Classification Output crossentropyex with '16QAM' and 10 other classes (SW Layer)

### Notice: The layer 'Input Layer' with type 'nnet.cnn.layer.ImageInputLayer' is implemented in software.

### Notice: The layer 'SoftMax' with type 'nnet.cnn.layer.SoftmaxLayer' is implemented in software.

### Notice: The layer 'Output' with type 'nnet.cnn.layer.ClassificationOutputLayer' is implemented in software.

### Compiling layer group: CNN1>>ReLU6 ...

### Compiling layer group: CNN1>>ReLU6 ... complete.

### Compiling layer group: AP1 ...

### Compiling layer group: AP1 ... complete.

### Compiling layer group: FC1 ...

### Compiling layer group: FC1 ... complete.

### Allocating external memory buffers:

offset_name offset_address allocated_space

_______________________ ______________ __________________

"InputDataOffset" "0x00000000" "480.0 kB"

"OutputResultOffset" "0x00078000" "4.0 kB"

"SchedulerDataOffset" "0x00079000" "32.0 kB"

"SystemBufferOffset" "0x00081000" "148.0 kB"

"InstructionDataOffset" "0x000a6000" "72.0 kB"

"ConvWeightDataOffset" "0x000b8000" "1.9 MB"

"FCWeightDataOffset" "0x0029b000" "8.0 kB"

"EndOffset" "0x0029d000" "Total: 2676.0 kB"

### Network compilation complete.

ans = struct with fields:

weights: [1×1 struct]

instructions: [1×1 struct]

registers: [1×1 struct]

syncInstructions: [1×1 struct]

constantData: {}

ddrInfo: [1×1 struct]

resourceTable: [6×2 table]
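The external memory table in the compile log can be cross-checked from its offset addresses. This sketch copies the addresses from the log and derives each buffer size as the difference between consecutive offsets, assuming 1 kB means 1024 bytes.

```matlab
% Sketch: derive the buffer sizes in the compile log from the offsets.
offsetHex = {'00000000','00078000','00079000','00081000', ...
             '000a6000','000b8000','0029b000','0029d000'};
addr = hex2dec(offsetHex);            % offsets in bytes (column vector)
sizesKB = diff(addr) / 1024;          % size of each buffer in kB
totalKB = addr(end) / 1024;           % EndOffset in kB
disp(sizesKB.')
fprintf('Total: %.1f kB\n', totalKB)
```

The derived sizes line up with the allocated_space column, where the convolution weight buffer of 1932 kB is shown rounded as 1.9 MB.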

Program Bitstream onto FPGA and Download Network Weights

To deploy the network on the Zynq® UltraScale+™ MPSoC ZCU102 hardware, run the deploy function of the dlhdl.Workflow object. This function uses the output of the compile function to program the FPGA board by using the programming file. The function also downloads the network weights and biases. The deploy function verifies the Xilinx Vivado tool and the supported tool version. It then starts programming the FPGA device by using the bitstream and displays progress messages and the time it takes to deploy the network.

deploy(hW)

### Programming FPGA Bitstream using Ethernet...

### Attempting to connect to the hardware board at 192.168.1.101...

### Connection successful

### Programming FPGA device on Xilinx SoC hardware board at 192.168.1.101...

### Copying FPGA programming files to SD card...

### Setting FPGA bitstream and devicetree for boot...

# Copying Bitstream zcu102_single.bit to /mnt/hdlcoder_rd

# Set Bitstream to hdlcoder_rd/zcu102_single.bit

# Copying Devicetree devicetree_dlhdl.dtb to /mnt/hdlcoder_rd

# Set Devicetree to hdlcoder_rd/devicetree_dlhdl.dtb

# Set up boot for Reference Design: 'AXI-Stream DDR Memory Access : 3-AXIM'

### Rebooting Xilinx SoC at 192.168.1.101...

### Reboot may take several seconds...

### Attempting to connect to the hardware board at 192.168.1.101...

### Connection successful

### Programming the FPGA bitstream has been completed successfully.

### Loading weights to Conv Processor.

### Conv Weights loaded. Current time is 10-Jan-2024 10:15:24

### Loading weights to FC Processor.

### FC Weights loaded. Current time is 10-Jan-2024 10:15:24

Results

Classify five inputs from the test data set and compare the prediction results to the classification results from Deep Learning Toolbox™. The YPred variable contains the classification results from Deep Learning Toolbox™. The fpga_prediction variable contains the classification results from the FPGA.

numtestFrames = size(rxTestFrames,4);

numView = 5;

listIndex = randperm(numtestFrames,numView);

testDataBatch = rxTestFrames(:,:,:,listIndex);

YPred = classify(trainedNet,testDataBatch);

[scores,speed] = predict(hW,testDataBatch, Profile ='on');

### Finished writing input activations.

### Running in multi-frame mode with 5 inputs.

Deep Learning Processor Profiler Performance Results

LastFrameLatency(cycles) LastFrameLatency(seconds) FramesNum Total Latency Frames/s

------------- ------------- --------- --------- ---------

Network 675662 0.00307 5 3370098 326.4

CNN1 30158 0.00014

MaxPool1 15576 0.00007

CNN2 68775 0.00031

MaxPool2 11703 0.00005

CNN3 84279 0.00038

MaxPool3 8614 0.00004

CNN4 119542 0.00054

MaxPool4 7331 0.00003

CNN5 156192 0.00071

MaxPool5 5064 0.00002

CNN6 154937 0.00070

AP1 11965 0.00005

FC1 1480 0.00001

* The clock frequency of the DL processor is: 220MHz
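The latency in seconds and the throughput in the profiler table follow from the cycle counts and the 220 MHz clock. The sketch below copies the numbers from the table and recomputes both figures, along with the share of cycles spent in the six convolution layers.

```matlab
% Sketch: recompute the profiler figures from the reported cycle counts.
clockHz = 220e6;                          % DL processor clock frequency
lastFrameCycles = 675662;                 % Network, LastFrameLatency(cycles)
totalCycles = 3370098;                    % Network, Total Latency
numFrames = 5;                            % FramesNum
convCycles = [30158 68775 84279 119542 156192 154937];  % CNN1 to CNN6
lastFrameSeconds = lastFrameCycles / clockHz;
framesPerSecond = numFrames / (totalCycles / clockHz);
convShare = sum(convCycles) / lastFrameCycles;
fprintf('Last frame latency: %.5f s\n', lastFrameSeconds)
fprintf('Throughput: %.1f frames/s\n', framesPerSecond)
fprintf('Convolution share of cycles: %.1f %%\n', 100*convShare)
```

The recomputed latency and throughput match the Network row, and the convolution layers account for the large majority of the per-frame cycles.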

[~,idx] = max(scores, [],2);
fpga_prediction = trainedNet.Layers(end).Classes(idx);
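The mapping from score rows to class labels works as in the following stand-alone sketch. The class names, their order, and the score values are made up for illustration. In the example itself, the class list comes from the output layer of trainedNet.

```matlab
% Sketch: pick the highest-scoring class for each frame.
classNames = ["BPSK"; "QPSK"; "PAM4"];     % hypothetical class order
scores = [0.1 0.2 0.7; ...
          0.8 0.1 0.1];                    % one row per frame (made-up values)
[~,idx] = max(scores, [], 2);              % column index of the row maximum
predicted = classNames(idx);               % label for each frame
disp(predicted)
```

Taking the maximum along the second dimension treats each row as one frame, so the index vector has one entry per frame.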

Compare the prediction results from Deep Learning Toolbox™ and the FPGA side by side. The prediction results from the FPGA match the prediction results from Deep Learning Toolbox™. In this table, the ground truth prediction is the Deep Learning Toolbox™ prediction.

fprintf('%12s %24s\n','Ground Truth','FPGA Prediction');
for i= 1:size(fpga_prediction,1)
    fprintf('%s %24s\n',YPred(i),fpga_prediction(i));
end

Ground Truth FPGA Prediction

16QAM 16QAM

GFSK GFSK

64QAM 64QAM

CPFSK CPFSK

B-FM B-FM
