Evaluate Modelscape Deployments with Review Editor

This example shows how to use Review Editor to evaluate Modelscape deployments.

You can compare the model being validated with older versions of the same model, or with different challenger models. For details about validating a model with a challenger model deployed to a microservice using, for example, Flask (Python) or Plumber (R), see Validation of External Models.

Connect to Modelscape Instance

Connect your MATLAB session to your Modelscape instance.

m = modelscape.validate.getModelscapeInstance();

Find Correct Deployment

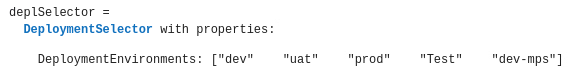

Construct a DeploymentSelector object for finding the model deployment you are interested in.

deplSelector = modelscape.validate.DeploymentSelector(m)

The selector object displays the deployment environments you can access in your Modelscape instance.

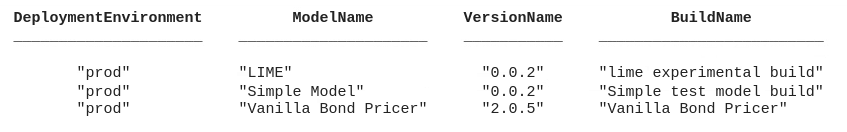

Use the list function with the argument DeploymentEnvironment to see all deployments in the production environment.

allDeployments = list(deplSelector, "DeploymentEnvironment", "prod")

The function returns a table with the following information, as well as four columns not shown here, which contain unique string identifiers for each deployment environment, model, version and build.

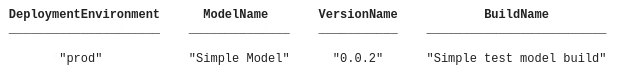

Use any combination of DeploymentEnvironment, ModelName, VersionName and BuildName arguments to filter the list down to the deployment you want to evaluate, for example:

testDeplSpecification = list(deplSelector, "DeploymentEnvironment", "prod", "ModelName", "Simple Model")

When you do not use any of these filtering arguments, list returns a table of all deployments across all deployment environments.
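Because list returns a MATLAB table, you can also narrow the result with standard table indexing, for instance when several deployments match your filters. The following is a minimal sketch on a hand-built stand-in table. The column names are assumed to mirror the filtering arguments and every row value is hypothetical, so check both against the table your own instance returns.

```matlab
% Stand-in for the table returned by list. Column names are ASSUMED to
% mirror the filtering arguments; all row values are hypothetical.
allDeployments = table( ...
    ["prod"; "prod"; "test"], ...
    ["Simple Model"; "Other Model"; "Simple Model"], ...
    ["1.0"; "2.1"; "1.0"], ...
    ["build-1"; "build-7"; "build-2"], ...
    VariableNames=["DeploymentEnvironment","ModelName","VersionName","BuildName"]);

% Keep only the rows that match both the environment and the model name.
isMatch = allDeployments.DeploymentEnvironment == "prod" & ...
          allDeployments.ModelName == "Simple Model";
selected = allDeployments(isMatch, :);

% If you intend to pick a single deployment, confirm the selection is unique.
assert(height(selected) == 1, 'Expected exactly one matching deployment.');
```

Checking the number of matching rows before moving on makes an ambiguous or empty selection visible early.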

Evaluate Deployment

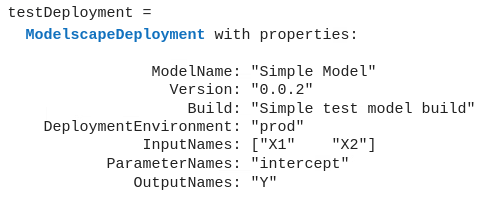

Use the getDeployment function to construct a ModelscapeDeployment object linked to your selected deployment.

testDeployment = getDeployment(deplSelector, testDeplSpecification)

The object displays its model name, version, and other information, including the names of its inputs, parameters and outputs.

Use the evaluate function to compute the model outputs. This function expects two inputs:

- The first input is a table whose variable names match the InputNames shown by the ModelscapeDeployment object. Each row of the table represents a single model input, or 'run', and the table represents a 'batch of runs'.
- The second input is a struct whose fields match the ParameterNames shown by the ModelscapeDeployment object. The values carried by this struct apply to all the runs in the batch. If the model has no parameters, omit this input, or use struct().
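A quick name check can catch mismatches before you call evaluate. This is a minimal sketch in base MATLAB, not part of the Modelscape API. The variables inputNames and parameterNames are hypothetical and hold names copied by hand from the InputNames and ParameterNames that the ModelscapeDeployment object displays.

```matlab
% Names copied by hand from the deployment display (hypothetical values).
inputNames = ["X1","X2"];
parameterNames = "intercept";

% Candidate arguments for evaluate: one table row per run, one struct per batch.
inputs = table([1;2], [2;3], VariableNames=["X1","X2"]);
parameters = struct('intercept', 2);

% Check that every expected name is present among the table variables
% and among the struct fields.
inputsOk = all(ismember(inputNames, string(inputs.Properties.VariableNames)));
parametersOk = all(ismember(parameterNames, string(fieldnames(parameters))));

assert(inputsOk, 'The input table is missing variables listed in InputNames.');
assert(parametersOk, 'The parameter struct is missing fields listed in ParameterNames.');
```

The check only confirms that the expected names are present. It does not validate data types or value ranges.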

For example, call evaluate for the deployment of "Simple Model" above:

inputs = table([1;2], [2;3], VariableNames=["X1","X2"]);
parameters = struct('intercept', 2);
outputs = evaluate(testDeployment, inputs, parameters)

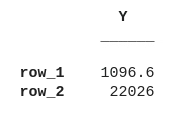

The output is a table of the same height as your inputs. Each row represents the model output for the corresponding row in the input table. The column names match the OutputNames of the ModelscapeDeployment object.
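To compare the model under validation with an older version or a challenger, you can evaluate each deployment on the same inputs and compare the resulting tables row by row. The sketch below uses two hand-built tables as stand-ins for the evaluate results. The output name Y and all values are hypothetical.

```matlab
% Stand-ins for the outputs of two deployments evaluated on the same
% two-row input batch. The output name "Y" and the values are hypothetical.
championOutputs = table([5; 7], VariableNames="Y");
challengerOutputs = table([5.1; 6.8], VariableNames="Y");

% Each table should have one row per run in the shared input batch.
assert(height(championOutputs) == height(challengerOutputs), ...
    'Output tables have different numbers of runs.');

% Row-wise difference and the largest absolute deviation across the batch.
difference = challengerOutputs.Y - championOutputs.Y;
maxAbsDifference = max(abs(difference));
```

Which deviation counts as acceptable depends on the model and is a validation decision, not something this sketch prescribes.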

Request additional outputs from evaluate for more information about the model evaluation:

[outputs, diagnostics, batchDiagnostics, batchId] = evaluate(testDeployment, inputs, parameters)

The additional outputs contain the following:

- diagnostics is a struct with a single field for each row of the input table, containing the run-level diagnostics computed by the model, if any.
- batchDiagnostics is a struct containing the batch-level diagnostics computed by the model or the Modelscape API, if any.
- batchId is a string containing a unique identifier for the evaluation batch.
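Since diagnostics has one field per run, you can walk through it with fieldnames and dynamic field references. The following is a minimal sketch on a hand-built struct. The field names run1 and run2 and the nested note field are hypothetical, because the actual names and contents depend on the model.

```matlab
% Stand-in for the diagnostics output of a two-run batch. Field names and
% contents are hypothetical; the real ones depend on the model.
diagnostics = struct( ...
    'run1', struct('note', "ok"), ...
    'run2', struct('note', "input near boundary"));

% Visit each run-level entry using dynamic field names.
runFields = fieldnames(diagnostics);
for k = 1:numel(runFields)
    runDiagnostics = diagnostics.(runFields{k});
    fprintf('%s: %s\n', runFields{k}, runDiagnostics.note);
end
```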